Presentation: Case Study, Event Detection in Twitter

- 1. Social Media Mining Case Study: Event Detection in Twitter. Based on "Event Detection in Twitter" by Jianshu Weng and Bu-Sung Lee (ICWSM 2011). Presented by: Yue HE & Falitokiniaina RABEARISON

- 2. Motivation Case Study : Event Detection in Twitter - 01

- 3. Outline: Related work, Presentation of EDCoW, Experiments and Results, Conclusion, Discussion

- 4. Related Work

- 5. Related Work: term-weighting-based approaches, topic-modeling-based approaches, clustering-based approaches

- 6. PRESENTATION of EDCoW

- 7. EDCoW [Workflow]

- 8. EDCoW [Components]

- 9. EXPERIMENTS and RESULTS

- 10. Dataset Description and Preparation

- 11. Signal Construction

- 12. “santa + wine”

- 13. Signal Construction. Q: How can you group the sets of words? A: By the similarity between words.

- 14. Auto Correlation Computation. Filter: Median Absolute Deviation (MAD), which reduces 2400 words to 32 keywords.
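The filtering step on slide 14 can be sketched as follows. This is a minimal Python illustration, assuming each word's signal is a list of numbers, that the autocorrelation is taken at lag 0, and that a word is kept when its autocorrelation exceeds median + gamma × MAD over all words. The function names, `gamma` and the toy signals are hypothetical and are not taken from our Java implementation.

```python
import statistics


def autocorrelation(signal, lag=0):
    """Unnormalized autocorrelation of a signal at the given lag."""
    n = len(signal)
    return sum(signal[t] * signal[t + lag] for t in range(n - lag))


def mad(values):
    """Median Absolute Deviation: median of |v - median(values)|."""
    med = statistics.median(values)
    return statistics.median([abs(v - med) for v in values])


def filter_trivial(signals, gamma=1.0):
    """Keep words whose lag-0 autocorrelation exceeds median + gamma * MAD.

    signals: {word: list of numbers}; gamma is a hypothetical tuning parameter.
    """
    scores = {w: autocorrelation(s) for w, s in signals.items()}
    values = list(scores.values())
    threshold = statistics.median(values) + gamma * mad(values)
    return sorted(w for w, a in scores.items() if a > threshold)


# Toy signals: two bursty words and three flat, "trivial" ones.
signals = {
    "santa": [0, 0, 1, 5, 9, 4, 0],
    "wine": [0, 0, 1, 4, 8, 3, 0],
    "the": [1, 1, 1, 1, 1, 1, 1],
    "lol": [1, 0, 1, 0, 1, 0, 1],
    "rt": [1, 1, 0, 1, 1, 0, 1],
}
print(filter_trivial(signals))  # ['santa', 'wine']
```

The MAD is used instead of the standard deviation because a handful of very bursty words would otherwise inflate the threshold.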

- 15. Cross Correlation Computation

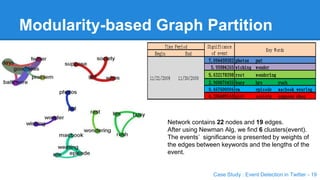

- 16. Cross Correlation Computation: 22 nodes, 19 edges; 32 nodes, 465 edges (nodes = signals/keywords)
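The graph on slide 16 can be pictured with the sketch below. It links two keywords when the normalized cross-correlation (Pearson correlation at lag 0) of their signals exceeds a threshold. The helper names, the fixed `threshold=0.5` and the restriction to lag 0 are assumptions for illustration; EDCoW's own thresholding may differ.

```python
import math
from itertools import combinations


def cross_correlation(x, y):
    """Normalized cross-correlation at lag 0 (Pearson correlation) of two equal-length signals."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return 0.0  # a flat signal carries no correlation information
    return cov / (sx * sy)


def correlation_graph(signals, threshold=0.5):
    """Weighted edges {(u, v): weight} for word pairs whose correlation exceeds threshold.

    threshold is a hypothetical fixed cut-off.
    """
    edges = {}
    for u, v in combinations(sorted(signals), 2):
        w = cross_correlation(signals[u], signals[v])
        if w > threshold:
            edges[(u, v)] = w
    return edges


signals = {
    "santa": [0, 0, 1, 5, 9, 4, 0],
    "wine": [0, 0, 1, 4, 8, 3, 0],
    "goal": [3, 7, 2, 0, 0, 0, 1],
}
edges = correlation_graph(signals)
print(list(edges))  # [('santa', 'wine')]
```

Only the pair whose bursts coincide in time becomes an edge; "goal" peaks earlier and is left unconnected.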

- 17. Reduction

- 18. Modularity-based Graph Partition. The network contains 22 nodes and 19 edges. After applying Newman's algorithm, we find 6 clusters (events). The significance of each event is reflected by the weights of the edges between its keywords and by the length of the event (its number of keywords).

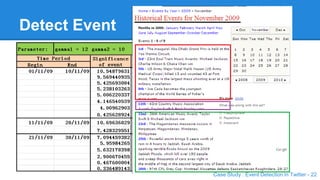
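To make the modularity criterion behind slide 18 concrete, the sketch below scores a given partition of a weighted, undirected graph with Newman's modularity, Q = Σ_c [W_in(c) / m - (deg(c) / 2m)²], where m is the total edge weight, W_in(c) the weight of edges inside community c and deg(c) the summed weighted degree of its nodes. It only evaluates a partition; it does not search for the best one as Newman's algorithm does. The function name and the toy graph are hypothetical.

```python
from collections import defaultdict


def modularity(edges, communities):
    """Newman's modularity Q of a partition of an undirected weighted graph.

    edges: {(u, v): weight}; communities: {node: community_id}.
    """
    m = sum(edges.values())  # total edge weight
    internal = defaultdict(float)  # weight of edges inside each community
    degree = defaultdict(float)  # summed weighted degree of each community
    for (u, v), w in edges.items():
        degree[communities[u]] += w
        degree[communities[v]] += w
        if communities[u] == communities[v]:
            internal[communities[u]] += w
    return sum(internal[c] / m - (degree[c] / (2 * m)) ** 2 for c in degree)


# Two triangles joined by a single bridge edge, all weights 1.
edges = {
    ("a", "b"): 1, ("b", "c"): 1, ("a", "c"): 1,
    ("d", "e"): 1, ("e", "f"): 1, ("d", "f"): 1,
    ("c", "d"): 1,
}
two_groups = {"a": 0, "b": 0, "c": 0, "d": 1, "e": 1, "f": 1}
one_group = {node: 0 for node in two_groups}
print(modularity(edges, two_groups))  # about 0.357 (= 5/14)
print(modularity(edges, one_group))   # about 0.0
```

Splitting along the bridge yields a positive Q, while lumping every node together gives Q = 0, matching the intuition that a good partition has dense links inside groups and sparse links between them.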

- 19. Event

- 20. Detect Event

- 21. Detect Event

- 22. CONCLUSION

- 23. Conclusion
  - After studying and implementing the Event Detection with Clustering of Wavelet-based Signals (EDCoW) algorithm in Java, we find that it works across different time periods. We also tried different parameters to evaluate it on the Twitter dataset.
  - Some places to improve:
    - How should the parameters be controlled?
    - How can the keywords (events) be made meaningful? Translating the combined keywords into an event requires some background knowledge.
    - How can the results be used for public opinion analysis?

- 24. Integrate EDCoW into SONDY

- 25. EDCoW [Javadoc]

- 26.

- 27. Acknowledgements: Thanks to Jairo Cugliari & Adrien Guille for their guidance, comments and discussions!

- 28. DISCUSSION: Thanks for your attention! ;)

Editor's Notes

- #2: Hello, everyone. Today, Yue and I would like to talk about our implementation of EDCoW (Event Detection with Clustering of Wavelet-based Signals). This case study was under the guidance of ...

- #3: Twitter is one of the most popular microblogging services and has received much attention recently. Microblogging is a form of blogging that allows users to send brief text updates; on Twitter, a tweet is limited to 140 characters. Users can also post photographs or audio clips. This is a screenshot of the Twitter public timeline. Twitter users write tweets several times a day, and the number of tweets was claimed to be about 200 million per day. This large volume of tweets yields many reports related to events. These include social events such as parties, baseball games and presidential campaigns, as well as disastrous events such as storms, traffic jams, riots, heavy rainfall and earthquakes. We want to know how we can detect these events.

- #6: Several papers have studied event detection methods adapted to Twitter. In general, as described in a survey paper that Adrien shared, there are three approaches: (1) term-weighting-based: identify words that are particular to a fixed-length time window; (2) topic-modeling-based: incrementally update the topic model in each time window, using the previously generated model to guide the learning of the new one; (3) clustering-based: measure the similarity between pairs of words, then cluster the words. EDCoW belongs to this last approach.

- #8: To give you an overview of EDCoW, here is a very simplified workflow. First, the input is a list of keywords, each stored in a separate file. Then, we filter them to keep the significant keywords. After that, we compute the cross-correlation matrix of these keywords. Finally, we cluster the highly correlated keywords to obtain a bag of words for each event.
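The input step described in note #8 can be sketched as turning tweets grouped by time window into one numeric signal per word. As a simplifying assumption, the value for each window is just the fraction of that window's tweets containing the word; EDCoW's actual weighting is not reproduced here, and the helper name and toy data are hypothetical.

```python
from collections import Counter


def build_signals(windows, vocabulary=None):
    """Turn tweets grouped by time window into one frequency signal per word.

    windows: list of time windows, each a list of tweets (strings).
    Returns {word: [fraction of tweets in window t containing the word, for each t]}.
    """
    counts, totals = [], []
    for tweets in windows:
        c = Counter()
        for tweet in tweets:
            c.update(set(tweet.lower().split()))  # count each word once per tweet
        counts.append(c)
        totals.append(len(tweets))
    words = vocabulary if vocabulary is not None else sorted(set().union(*counts))
    return {
        w: [c[w] / n if n else 0.0 for c, n in zip(counts, totals)]
        for w in words
    }


windows = [["santa wine", "hello"], ["Santa santa"], []]
signals = build_signals(windows)
print(signals["santa"])  # [0.5, 1.0, 0.0]
```

Normalizing by the number of tweets per window keeps busy periods from looking bursty just because more people are tweeting.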

- #9: All in all, EDCoW is composed of 3 components: Signal Construction, Cross Correlation Computation and Modularity-based Graph Partition.
  - In Signal Construction, to identify bursts, EDCoW builds a signal from the frequency of each individual word using wavelets, and leverages Fourier and Shannon theory to compute the change in wavelet entropy. Wavelet analysis provides precise measurements of when and how the frequency of the signal changes over time.
  - In Cross Correlation Computation, trivial words are filtered away based on the autocorrelation of their signals, and the similarity between each pair of non-trivial words is then measured.
  - In Modularity-based Graph Partition, events are finally detected by modularity-based graph partitioning. Once the clusters are obtained, the significance of each event depends on 2 factors, the number of words and the cross correlation among the words, and we use it to filter the detected events.
  - Graph partitioning:
    - Determine whether any natural division of the vertices of a network exists.
    - Use Newman's modularity function (no ground truth needed).
    - Find disjoint communities in a graph.
    - Work on a weighted network.
  - Modularity reflects the concentration of nodes within modules compared with a random distribution of links between all nodes regardless of modules: Q = (# of edges within groups) - (expected # of edges within groups). For a random network, Q = 0 (the number of edges within a community is no different from what you would expect). High modularity means dense connections between the nodes within modules and sparse connections between nodes in different modules.
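The burst measure in note #9 relies on wavelet entropy. The sketch below assumes a Haar wavelet and a window whose length is a power of two (both are assumptions; the wavelet used by EDCoW may differ). It computes the energy of the detail coefficients at each decomposition level and the Shannon wavelet entropy H = -Σ ρ_j log ρ_j of the relative energies ρ_j. Comparing H across consecutive windows is what reveals a change in the signal; that comparison is left out here, and the function names are hypothetical.

```python
import math


def haar_detail_energies(signal):
    """Energy of the Haar detail coefficients at each decomposition level (finest first)."""
    n = len(signal)
    if n < 2 or n & (n - 1):
        raise ValueError("signal length must be a power of two (>= 2)")
    energies = []
    approx = [float(x) for x in signal]
    while len(approx) > 1:
        pairs = range(0, len(approx), 2)
        detail = [(approx[i] - approx[i + 1]) / math.sqrt(2) for i in pairs]
        approx = [(approx[i] + approx[i + 1]) / math.sqrt(2) for i in pairs]
        energies.append(sum(d * d for d in detail))
    return energies


def wavelet_entropy(signal):
    """Shannon wavelet entropy H = -sum(rho_j * log(rho_j)) over relative level energies."""
    energies = haar_detail_energies(signal)
    total = sum(energies)
    if total == 0:
        return 0.0  # a flat signal has no detail energy at any level
    return -sum((e / total) * math.log(e / total) for e in energies if e > 0)


print(wavelet_entropy([1, 1, 1, 1]))  # 0.0
print(wavelet_entropy([1, 0, 0, 0]))  # about 0.64
```

A flat window has zero entropy, while a single spike spreads its energy over several levels and raises H.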

- #14: Maybe you will ask: there are so many words in the dataset, so how can you group the sets of words?

- #18: Maybe you will ask how to find the events in the network (see next slide).
