RNNs for Speech

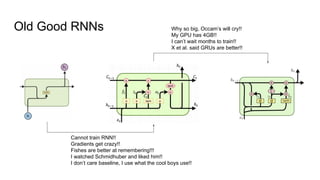

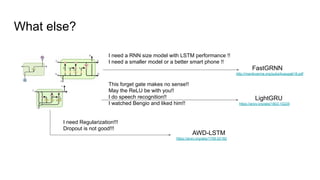

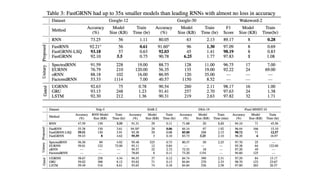

This document summarizes and compares several techniques for making RNNs faster, smaller, or more accurate for speech recognition:

1. FastGRNN is a gated RNN cell whose gate and candidate state share the same weight matrices. It further compresses the model with low-rank, sparse, and quantized weights, making it faster and smaller than a standard GRU.

2. Light GRU (Li-GRU) removes the reset gate from the GRU and replaces tanh with ReLU in the candidate state, which improves speech recognition performance.

3. AWD-LSTM, originally proposed for language modeling, prevents overfitting in LSTMs with dropout (including DropConnect on the recurrent weights), averaged SGD, and activation regularization.

Overall, the document evaluates different approaches for making RNNs more efficient and effective on speech tasks.
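As an illustration of how two of these cells differ from a standard GRU, here is a minimal NumPy sketch of a single time step for each. The function names, sizes, random weights, and the ζ/ν values are illustrative assumptions; the batch normalization that Li-GRU applies to its input projections and FastGRNN's sparsity and quantization are left out to keep it short.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def light_gru_step(x, h, Wz, Uz, bz, Wh, Uh, bh):
    """One Light GRU step: the reset gate is removed and ReLU replaces tanh.
    Li-GRU's batch normalization of the input projections is omitted here."""
    z = sigmoid(Wz @ x + Uz @ h + bz)               # update gate
    h_cand = np.maximum(0.0, Wh @ x + Uh @ h + bh)  # ReLU candidate state
    return z * h + (1.0 - z) * h_cand

def fastgrnn_step(x, h, W1, W2, U1, U2, bz, bh, zeta, nu):
    """One FastGRNN step with low-rank factors W = W1 @ W2 and U = U1 @ U2.
    Gate and candidate share W and U; only their biases differ."""
    pre = W1 @ (W2 @ x) + U1 @ (U2 @ h)             # shared pre-activation
    z = sigmoid(pre + bz)                           # gate
    h_cand = np.tanh(pre + bh)                      # candidate state
    return (zeta * (1.0 - z) + nu) * h_cand + z * h

rng = np.random.default_rng(0)
d_in, d_h, rank = 13, 32, 4                # illustrative sizes
frames = rng.standard_normal((50, d_in))   # stand-in for 50 acoustic feature frames

def init(*shape):
    return 0.1 * rng.standard_normal(shape)

li_params = (init(d_h, d_in), init(d_h, d_h), np.zeros(d_h),
             init(d_h, d_in), init(d_h, d_h), np.zeros(d_h))
# zeta and nu are trainable scalars squashed into (0, 1); these values are assumptions.
fast_params = (init(d_h, rank), init(rank, d_in), init(d_h, rank), init(rank, d_h),
               np.zeros(d_h), np.zeros(d_h), sigmoid(1.0), sigmoid(-4.0))

h_li = np.zeros(d_h)
h_fast = np.zeros(d_h)
for x in frames:
    h_li = light_gru_step(x, h_li, *li_params)
    h_fast = fastgrnn_step(x, h_fast, *fast_params)

full = d_h * d_in + d_h * d_h                    # weights in dense W and U
low_rank = sum(p.size for p in fast_params[:4])  # weights in W1, W2, U1, U2
print(full, low_rank)  # 1440 436
```

With these illustrative sizes the low-rank factors hold 436 weights instead of 1,440 for dense W and U, which is the kind of saving FastGRNN's compression is aiming for. Note also that Li-GRU needs only two weight-matrix pairs per layer, versus three for a standard GRU.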

Recommended

DevOps Cebu Presentation (Neil Alwin Hermosilla)

This document discusses Puppet, a configuration management tool. It provides tips for avoiding common mistakes when using Puppet, such as coding without planning, poor orchestration using tags, and a lack of change management between environments. It also discusses using watchdog to monitor Puppet activity and reboot systems if needed, and following the three Rs of coding (reuse, reproduce, and recycle) so that Puppet code adapts to different environments.

Automated Speech Recognition (Pruthvij Thakar)

This document describes the implementation of various neural network architectures for speech recognition using a dataset from VoxForge. It discusses preprocessing audio data into acoustic features and implementing recurrent neural networks (RNNs), convolutional neural networks (CNNs), and combinations of the two as acoustic models. Five models are implemented and evaluated: an RNN with a time-distributed dense layer; a CNN plus RNN; a deeper RNN; a bidirectional RNN; and a custom architecture with CNN and deep RNN layers. The best-performing model is selected for predicting speech from the test data.

Cheatsheet recurrent-neural-networks (Steve Nouri)

1. Recurrent neural networks (RNNs) carry information forward from previous time steps through hidden states and can process input sequences of variable length. Common RNN architectures include LSTMs and GRUs, which address the vanishing gradient problem of traditional RNNs.

2. RNNs are commonly used for natural language processing tasks like machine translation, sentiment classification, and named entity recognition. The cheatsheet also covers distributed word representations such as word2vec (trained with negative sampling) and GloVe.

3. Machine translation models use an encoder-decoder architecture with an RNN encoder and decoder. Beam search is commonly used to find high-probability translation sequences, and performance is evaluated with metrics such as the BLEU score.
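To make the beam search mentioned in this summary concrete, here is a minimal pure-Python sketch. The `toy_model` next-token table is hypothetical and stands in for an RNN decoder; scores are summed log-probabilities without the length normalization many real decoders add.

```python
import math

def beam_search(step_log_probs, beam_width, max_len, eos):
    """Keep the beam_width highest-scoring prefixes at every step.

    step_log_probs(prefix) returns {token: log P(token | prefix)}.
    Scores are summed log-probabilities (no length normalization)."""
    beams = [((), 0.0)]      # (prefix, cumulative log-probability)
    finished = []
    for _ in range(max_len):
        candidates = [
            (prefix + (tok,), score + lp)
            for prefix, score in beams
            for tok, lp in step_log_probs(prefix).items()
        ]
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for prefix, score in candidates[:beam_width]:
            if prefix[-1] == eos:
                finished.append((prefix, score))  # hypothesis is complete
            else:
                beams.append((prefix, score))
        if not beams:
            break
    return max(finished + beams, key=lambda c: c[1])

# Hypothetical next-token probabilities; every 2-token prefix is followed by "</s>".
TABLE = {
    (): {"a": 0.6, "b": 0.4},
    ("a",): {"x": 0.5, "y": 0.5},
    ("b",): {"x": 0.9, "y": 0.1},
}

def toy_model(prefix):
    probs = TABLE.get(prefix, {"</s>": 1.0})
    return {tok: math.log(p) for tok, p in probs.items()}

best, score = beam_search(toy_model, beam_width=2, max_len=5, eos="</s>")
greedy, g_score = beam_search(toy_model, beam_width=1, max_len=5, eos="</s>")
print(best, round(math.exp(score), 2))      # ('b', 'x', '</s>') 0.36
print(greedy, round(math.exp(g_score), 2))  # ('a', 'x', '</s>') 0.3
```

With a beam width of 1 the search is greedy: it commits to the locally likelier "a" and ends with probability 0.30. A width of 2 keeps "b" alive long enough to find the better sequence with probability 0.36.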

Rnn presentation 2 (Shubhangi Tandon)

The document discusses recurrent neural networks (RNNs) and their ability to handle sequence data. It provides resources on LSTMs and on RNN effectiveness. It describes five types of sequence problems RNNs can address and explains why RNNs are well-suited to sequences. Challenges such as vanishing and exploding gradients are discussed, along with strategies like LSTM gates, gradient clipping, and leaky units that help optimize long-term dependencies. Memory networks and their use of soft addressing mechanisms are also summarized.
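The gradient clipping mentioned in this summary is commonly done by global norm. Here is a minimal NumPy sketch, assuming gradients arrive as a list of arrays; the function name `clip_by_global_norm` is chosen for illustration. PyTorch's `torch.nn.utils.clip_grad_norm_` follows the same idea.

```python
import numpy as np

def clip_by_global_norm(grads, max_norm):
    """Rescale a list of gradient arrays so their joint L2 norm is at most max_norm.
    The update direction is preserved; only its length shrinks."""
    total = np.sqrt(sum(float(np.sum(g * g)) for g in grads))
    scale = min(1.0, max_norm / (total + 1e-12))  # 1.0 when already small enough
    return [g * scale for g in grads], total

# Two parameter tensors whose joint gradient norm is sqrt(3^2 + 4^2) = 5.
grads = [np.array([3.0, 0.0]), np.array([[0.0, 4.0]])]
clipped, norm_before = clip_by_global_norm(grads, max_norm=1.0)
print(norm_before, clipped[0], clipped[1])
```

Clipping the joint norm, rather than each tensor separately, keeps the relative size of the gradients across layers intact, so an exploding step is shortened without being turned toward a different direction.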

Recurrent Neural Networks (Sharath TS)

This document discusses recurrent neural networks (RNNs) and some of their applications and design patterns. RNNs are able to process sequential data like text or time series due to their ability to maintain an internal state that captures information about what has been observed in the past. The key challenges with training RNNs are vanishing and exploding gradients, which various techniques like LSTMs and GRUs aim to address. RNNs have been successfully applied to tasks involving sequential input and/or output like machine translation, image captioning, and language modeling. Memory networks extend RNNs with an external memory component that can be explicitly written to and retrieved from.

Chatbot ppt (Manish Mishra)

This document discusses different approaches for building chatbots, including retrieval-based and generative models. It describes recurrent neural networks like LSTMs and GRUs that are well-suited for natural language processing tasks. Word embedding techniques like Word2Vec are explained for representing words as vectors. Finally, sequence-to-sequence models using encoder-decoder architectures are presented as a promising approach for chatbots by using a context vector to generate responses.

Deep learning architectures (Joe li)

This document discusses various machine learning concepts including neural network architectures like convolutional neural networks, LSTMs, autoencoders, and GANs. It also covers optimization techniques for training neural networks such as gradient descent, stochastic gradient descent, momentum, and Adagrad. Finally, it provides strategies for developing neural networks including selecting an appropriate network structure, checking for bugs, initializing parameters, and determining if the model is powerful enough to overfit the data.
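For the momentum and Adagrad optimizers listed in this summary, here is a minimal NumPy sketch on a toy ill-conditioned quadratic. The loss, learning rates, and step counts are illustrative assumptions, not values from the deck.

```python
import numpy as np

def grad(w):
    # Gradient of the toy loss f(w) = 0.5 * (10 * w0**2 + w1**2)
    return np.array([10.0 * w[0], w[1]])

def sgd_momentum(w, lr=0.05, beta=0.9, steps=200):
    v = np.zeros_like(w)
    for _ in range(steps):
        v = beta * v - lr * grad(w)   # velocity: decaying sum of past gradients
        w = w + v
    return w

def adagrad(w, lr=0.5, eps=1e-8, steps=200):
    g2 = np.zeros_like(w)
    for _ in range(steps):
        g = grad(w)
        g2 += g * g                               # running sum of squared gradients
        w = w - lr * g / (np.sqrt(g2) + eps)      # per-coordinate step size
    return w

w0 = np.array([1.0, 1.0])
w_mom = sgd_momentum(w0)
w_ada = adagrad(w0)
print(w_mom, w_ada)
```

Momentum smooths the zig-zag along the steep w0 direction, while Adagrad divides each coordinate by its own gradient history. In this toy problem that normalization makes the steep and shallow coordinates follow the same trajectory despite their 10x difference in curvature.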

Text classification based on gated recurrent unit combines with support vecto... (IJECEIAES)

This document discusses a proposed text classification model that combines Gated Recurrent Units (GRU) and Support Vector Machine (SVM) to improve performance in processing unstructured text data. It highlights the advantages of GRU in handling long-term dependencies in sequential data and presents empirical results demonstrating that the GRU-SVM model outperforms traditional classifiers. The study also details modifications to the GRU architecture, integrating a leaky ReLU activation and replacing softmax with an SVM for better classification accuracy.

RNN and LSTM model description and working advantages and disadvantages (AbhijitVenkatesh1)

Recurrent neural networks (RNNs) are designed to process sequential data like time series and text. RNNs include loops that allow information to persist from one step to the next to model temporal dependencies in data. However, vanilla RNNs suffer from exploding and vanishing gradients when training on long sequences. Long short-term memory (LSTM) and gated recurrent unit (GRU) networks address this issue using gating mechanisms that control the flow of information, allowing them to learn from experiences far back in time. RNNs have been successfully applied to many real-world problems involving sequential data, such as speech recognition, machine translation, sentiment analysis, and image captioning.
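Why vanilla RNN gradients vanish or explode can be seen in a minimal NumPy sketch. For a linear recurrence h_t = W h_{t-1}, the Jacobian of h_T with respect to h_0 is W raised to the power T. Taking W as a scaled orthogonal matrix (an assumption chosen so the answer is exact), its spectral norm is scale**T.

```python
import numpy as np

rng = np.random.default_rng(0)
d, T = 16, 50
Q, _ = np.linalg.qr(rng.standard_normal((d, d)))  # random orthogonal matrix

def jacobian_norm(scale):
    """Spectral norm of d h_T / d h_0 for h_t = (scale * Q) h_{t-1}.
    The Jacobian is (scale * Q) to the power T; its norm is exactly scale**T."""
    J = np.linalg.matrix_power(scale * Q, T)
    return np.linalg.norm(J, 2)

for s in (0.9, 1.0, 1.1):
    print(s, jacobian_norm(s))
```

Over 50 steps a per-step factor of 0.9 shrinks the gradient to about 0.005 and 1.1 inflates it to about 117. A tanh RNN adds diag(1 - h_t^2) factors with entries at most 1, so the vanishing case can only get worse; LSTM and GRU gates instead provide additive paths that avoid this repeated multiplication.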

Deep Learning Architectures for NLP (Hungarian NLP Meetup 2016-09-07) (Márton Miháltz)

The document surveys various neural network architectures for Natural Language Processing (NLP), focusing on recurrent neural networks (RNNs), long short-term memory (LSTM), gated recurrent units (GRUs), convolutional neural networks (CNNs), and their applications in sentiment analysis and text classification. It highlights recent advancements in memory networks, attention models, and hybrid architectures, along with the tools and resources available for implementing these techniques. The discussion covers challenges like long-term dependencies and vanishing gradients, alongside solutions such as advanced architectures and pre-trained embedding vectors.

Deep Neural Machine Translation with Linear Associative Unit (Satoru Katsumata)

The document proposes a novel linear associative unit (LAU) for neural machine translation. LAU shortens the gradient path inside recurrent units to address difficulties in optimizing deep neural networks. Experiments on Chinese-English, English-German, and English-French translation tasks show that LAU outperforms GRU baselines and that increasing model depth is more effective than increasing its width. Analysis indicates LAU transfers information better than GRU, especially for longer sentences.

Video captioning in Vietnamese using deep learning (IJECEIAES)

This paper discusses the use of deep learning techniques to develop Vietnamese video captioning models, utilizing three main approaches: sequence-to-sequence based on recurrent neural networks (RNN), RNN with attention mechanisms, and transformer models. The researchers leverage a dataset comprising 1,970 videos and over 85,000 translated Vietnamese captions to evaluate the models' effectiveness in generating meaningful captions from video content. The study highlights advancements in video content description which facilitate applications in various fields such as security, assisting the blind, and human-robot interaction.

Recurrent Neural Networks for Text Analysis (odsc)

Recurrent neural networks (RNNs) are well-suited for analyzing text data because they can model sequential and structural relationships in text. RNNs use gating mechanisms like LSTMs and GRUs to address the problem of exploding or vanishing gradients when training on long sequences. Modern RNNs trained with techniques like gradient clipping, improved initialization, and optimized training algorithms like Adam can learn meaningful representations from text even with millions of training examples. RNNs may outperform conventional bag-of-words models on large datasets but require significant computational resources. The author describes an RNN library called Passage and provides an example of sentiment analysis on movie reviews to demonstrate RNNs for text analysis.

Log Message Anomaly Detection with Oversampling (gerogepatton)

The paper presents a model for log message anomaly detection that tackles the issue of imbalanced data using a sequence generative adversarial network (SeqGAN) for oversampling. The proposed model incorporates an autoencoder for feature extraction and a gated recurrent unit (GRU) for classification, demonstrating improved anomaly detection accuracy on three datasets (BGL, OpenStack, and Thunderbird) compared with the same model without oversampling. Results indicate that appropriate data balancing techniques significantly enhance performance in classifying sparse log messages.

LOG MESSAGE ANOMALY DETECTION WITH OVERSAMPLING (gerogepatton)

This paper presents a model for detecting log message anomalies by addressing the imbalanced dataset problem using a SeqGAN for oversampling, combined with an autoencoder and a Gated Recurrent Unit (GRU) network for anomaly detection. The proposed method was evaluated on three datasets: BGL, OpenStack, and Thunderbird, demonstrating improved accuracy in anomaly detection when compared to methods without oversampling. The results indicate that the use of appropriate oversampling techniques significantly enhances the classification performance of machine learning algorithms in this context.

LOG MESSAGE ANOMALY DETECTION WITH OVERSAMPLING (ijaia)

The paper presents a model that uses a SeqGAN network to generate oversampled text log messages, addressing the issue of imbalanced data in log message classification and anomaly detection. The model incorporates an autoencoder for feature extraction and a GRU network for detection, demonstrating improved accuracy through proper oversampling on three imbalanced log datasets (BGL, OpenStack, and Thunderbird). Experimental results indicate that the proposed method enhances anomaly detection performance compared with traditional approaches without oversampling.

Duplicate_Quora_Question_Detection (Jayavardhan Reddy Peddamail)

1. The datasets for this project on question pair similarity come from Kaggle and include over 4 million question pairs split between train and test sets.

2. Several neural network architectures were implemented, including CNNs, LSTMs, and bidirectional LSTMs with different word embeddings.

3. The best-performing model was an LSTM with GloVe word embeddings, which achieved a validation loss of 0.434 after 25 minutes of training.

4. Additional techniques such as handling class imbalance and exploiting question symmetry were explored and improved model performance further.

Training at AI Frontiers 2018 - Lukasz Kaiser: Sequence to Sequence Learning ... (AI Frontiers)

This document discusses sequence-to-sequence learning with Tensor2Tensor (T2T). It provides an overview of T2T, a library of deep learning models and datasets, and covers the basics of sequence models, including recurrent neural networks (RNNs), convolutional models, and the attention-based Transformer. It encourages experimenting with different sequence models and datasets in T2T.

One Perceptron to Rule them All: Deep Learning for Multimedia #A2IC2018 (Universitat Politècnica de Catalunya)

The document presents a talk on deep learning for multimedia by Xavier Giro-i-Nieto at an event in Barcelona, focusing on the integration of speech, vision, and text in various applications. It highlights different encoding and decoding techniques used in tasks like image classification, speech recognition, and translation, referencing significant research papers in the field. The talk emphasizes advances in cross-modal learning and representation for improving multimedia tasks.

Multi modal retrieval and generation with deep distributed models (Roelof Pieters)

The document discusses multi-modal retrieval and generation using deep learning models, emphasizing the challenge of handling the deluge of digital media across text, video, and audio. It explores the application of neural networks to create embeddings that can represent these different modalities and facilitate efficient searching and collaboration between humans and machines. Additionally, it highlights various advanced techniques and models used in natural language processing and generative AI for understanding and generating content across different media types.

Recurrent Neural Networks (Rakuten Group, Inc.)

The document discusses various aspects of neural networks, particularly focusing on recurrent neural networks (RNN) and their applications in language modeling, translation, and more. It highlights historical perspectives, limitations, and future trends in deep learning technologies. Important figures and developments in the field are illustrated with images and references to further resources.

RNN.pdf (NiharikaThakur32)

Modeling sequential data using recurrent neural networks can be done as follows:

1. Text data is first vectorized using word embeddings to convert words to dense vectors before being fed into the model.

2. A recurrent neural network such as an LSTM network is used, which can learn patterns from sequences of vectors.

3. For text classification tasks, an LSTM layer is often stacked on top of an embedding layer to learn temporal patterns in the word sequences. Additional LSTM layers may be stacked and their outputs combined to learn higher-level patterns.

4. The LSTM outputs are then passed to dense layers for classification or regression tasks. The model is trained end-to-end using backpropagation.
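The four steps above can be sketched as a forward pass in plain NumPy: an embedding lookup, a single LSTM layer whose final hidden state summarizes the sequence, and a dense softmax layer. All sizes, token ids, and random weights are illustrative assumptions. The end-to-end backpropagation of step 4 is not shown; in practice a framework such as Keras or PyTorch would compute those gradients.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab, d_emb, d_h, n_classes = 100, 16, 32, 2          # illustrative sizes

E = 0.1 * rng.standard_normal((vocab, d_emb))          # step 1: embedding table
W = 0.1 * rng.standard_normal((4 * d_h, d_emb + d_h))  # step 2: LSTM weights (i, f, g, o stacked)
b = np.zeros(4 * d_h)
W_out = 0.1 * rng.standard_normal((n_classes, d_h))    # step 4: dense output layer
b_out = np.zeros(n_classes)

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def lstm_last_state(vectors):
    """Run one LSTM layer over the sequence and return the final hidden state."""
    h = np.zeros(d_h)
    c = np.zeros(d_h)
    for x in vectors:
        gates = W @ np.concatenate([x, h]) + b
        i, f, g, o = np.split(gates, 4)
        i, f, o = sigmoid(i), sigmoid(f), sigmoid(o)
        c = f * c + i * np.tanh(g)   # cell state: forget old, add new
        h = o * np.tanh(c)           # exposed hidden state
    return h

def classify(token_ids):
    vectors = E[token_ids]           # (T, d_emb) dense word vectors
    h = lstm_last_state(vectors)     # summary of the whole sequence
    logits = W_out @ h + b_out
    p = np.exp(logits - logits.max())
    return p / p.sum()               # softmax class probabilities

probs = classify([4, 17, 42, 8])     # hypothetical token ids
print(probs)
```

Stacking a second LSTM layer, as step 3 mentions, would mean collecting every hidden state h from the first layer and feeding that sequence into another `lstm_last_state`-style loop with its own weights.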

New research articles 2020 october issue international journal of multimedi... (ijma)

The document discusses a study comparing various acoustic feature extraction methods for Bangla speech recognition using Long Short-Term Memory (LSTM) neural networks. Key techniques evaluated include Linear Predictive Coding (LPC), Mel Frequency Cepstral Coefficients (MFCC), and Perceptual Linear Prediction (PLP), with performance analyzed through statistical tools like Bhattacharyya and Mahalanobis distances. The authors present their findings along with references to existing literature on speech recognition and neural network architectures.
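To show what the MFCC features in this study involve, here is a compact NumPy sketch of the usual pipeline: framing, windowing, power spectrum, mel filterbank, log, and DCT. The 25 ms / 10 ms framing at 16 kHz, 26 filters, 13 coefficients, and the HTK-style mel formula are common choices assumed here, not settings taken from the paper. Pre-emphasis, liftering, and DCT normalization are omitted.

```python
import numpy as np

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mfcc(signal, sr=16000, frame_len=400, hop=160, n_fft=512, n_mels=26, n_ceps=13):
    # 1) Overlapping frames (25 ms window, 10 ms hop at 16 kHz) with a Hamming window
    n_frames = 1 + (len(signal) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = signal[idx] * np.hamming(frame_len)
    # 2) Power spectrum of each frame
    power = np.abs(np.fft.rfft(frames, n_fft)) ** 2 / n_fft
    # 3) Triangular filters spaced evenly on the mel scale
    mel_pts = np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2), n_mels + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_pts) / sr).astype(int)
    fbank = np.zeros((n_mels, n_fft // 2 + 1))
    for m in range(1, n_mels + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, center):
            fbank[m - 1, k] = (k - left) / (center - left)
        for k in range(center, right):
            fbank[m - 1, k] = (right - k) / (right - center)
    # 4) Log filterbank energies (small floor avoids log(0))
    log_energy = np.log(power @ fbank.T + 1e-10)
    # 5) DCT-II along the filter axis; keep the first n_ceps coefficients
    n = np.arange(n_mels)
    dct = np.cos(np.pi / n_mels * (n[None, :] + 0.5) * np.arange(n_ceps)[:, None])
    return log_energy @ dct.T

sr = 16000
t = np.arange(sr) / sr
feats = mfcc(np.sin(2 * np.pi * 440.0 * t), sr=sr)  # one second of a 440 Hz tone
print(feats.shape)  # (98, 13)
```

Each row is one frame's 13-dimensional feature vector, which is the kind of sequence an LSTM acoustic model consumes. Libraries such as librosa provide `librosa.feature.mfcc`, though with different defaults.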

Sequence learning and modern RNNs (Grigory Sapunov)

The document discusses recurrent neural networks (RNNs) and their variations, such as long short-term memory (LSTM) and gated recurrent units (GRU), highlighting their advantages in handling sequence data and temporal dependencies. It also explores advancements in multi-modal learning and sequence-to-sequence architectures, along with potential solutions to common issues like slow training times and vanishing gradients. Additionally, it mentions the importance of powerful hardware in improving RNN performance and the significance of representation learning in natural language processing.

recurrent_neural_networks_april_2020.pptx (SagarTekwani4)

The document provides an overview of recurrent neural networks (RNNs), including their implementation and applications. It discusses how RNNs can process sequential data due to their ability to preserve information from previous time steps. However, vanilla RNNs suffer from exploding and vanishing gradients during training. Newer RNN architectures like LSTMs and GRUs address this issue through gated connections that allow for better propagation of errors. Sequence learning tasks that can be addressed with RNNs include time-series forecasting, language processing, and image/video caption generation. The document outlines RNN architectures for different types of sequential inputs and outputs.

A pragmatic introduction to natural language processing models (October 2019) (Julien SIMON)

The document provides an overview of natural language processing (NLP) models, discussing various methodologies for creating word embeddings and their applications in different NLP tasks like text classification and translation. It covers notable models such as Word2Vec, GloVe, FastText, ELMo, BERT, and XLNet, highlighting their strengths and limitations. The text emphasizes the importance of utilizing pre-trained models and fine-tuning for specific business problems while acknowledging the computational costs associated with training advanced models.

CoreML for NLP (Melb Cocoaheads 08/02/2018) (Hon Weng Chong)

This document provides an overview of using CoreML for natural language processing (NLP) tasks on Android and iOS. It discusses topics like word embeddings, recurrent neural networks, using Keras/Tensorflow models with CoreML, and an automated workflow for training models and deploying them to Android and iOS. It describes using FastText word embeddings to vectorize text, building recurrent neural network models in Keras, converting models to CoreML format, and using Jinja templating to generate code for integrating models into mobile applications. The overall goal is to automatically train NLP models and deploy them to mobile in a way that supports offline usage.

Detecting Misleading Headlines in Online News: Hands-on Experiences on Attent... (Kunwoo Park)

The document discusses a research project aimed at detecting misleading news headlines using deep neural networks with an emphasis on attention mechanisms. It outlines the model architecture, input data processing, and the implementation of text classifiers in TensorFlow. The talk is designed to provide practical experience with attention-based RNNs to effectively analyze headline-body text incongruities.

Poster SCGlowTTS Interspeech 2021 (Bilkent University)

This paper proposes SC-GlowTTS, a zero-shot multi-speaker text-to-speech model based on flow-based generative models. SC-GlowTTS uses a speaker encoder trained on a large multi-speaker dataset to condition the model on speaker embeddings. It explores different encoder architectures and fine-tunes a GAN-based vocoder with predicted mel-spectrograms. Evaluation shows the model achieves promising results using only 11 speakers for training, comparable to a Tacotron 2 baseline trained with 98 speakers. This demonstrates potential for zero-shot TTS in low-resource languages.

Qualcomm research-imagenet2015 (Bilkent University)

NeoNet developed an object-centric training approach for image recognition tasks. Key aspects included training inception networks on object crops and bounding boxes, and ensembling multiple networks. This approach achieved competitive results on ImageNet classification (4.8% top-5 error), localization (12.6% error), detection (53.6% mAP), and Places2 scene classification (17.6% error). The document describes the object-centric training techniques and component-level improvements that led to these results.


CoreML for NLP (Melb Cocoaheads 08/02/2018)Hon Weng Chong

╠²

This document provides an overview of using CoreML for natural language processing (NLP) tasks on Android and iOS. It discusses topics like word embeddings, recurrent neural networks, using Keras/Tensorflow models with CoreML, and an automated workflow for training models and deploying them to Android and iOS. It describes using FastText word embeddings to vectorize text, building recurrent neural network models in Keras, converting models to CoreML format, and using Jinja templating to generate code for integrating models into mobile applications. The overall goal is to automatically train NLP models and deploy them to mobile in a way that supports offline usage.Detecting Misleading Headlines in Online News: Hands-on Experiences on Attent...

Detecting Misleading Headlines in Online News: Hands-on Experiences on Attent...Kunwoo Park

╠²

The document discusses a research project aimed at detecting misleading news headlines using deep neural networks with an emphasis on attention mechanisms. It outlines the model architecture, input data processing, and the implementation of text classifiers in TensorFlow. The talk is designed to provide practical experience with attention-based RNNs to effectively analyze headline-body text incongruities.One Perceptron to Rule them All: Deep Learning for Multimedia #A2IC2018

One Perceptron to Rule them All: Deep Learning for Multimedia #A2IC2018Universitat Polit├©cnica de Catalunya

╠²



RNNs for Speech

- 1. RNNs for Speech: faster and smaller RNNs, and new regularization techniques.

- 2. Good Old RNNs: Cannot train RNNs!! The gradients go crazy!! Fish are better at remembering!!! I watched Schmidhuber and liked him!! I don't care about the baseline, I use what the cool kids use!! Why so big? Occam will cry!! My GPU has 4GB!! I can't wait months to train!! X et al. said GRUs are better!!

- 3. What else? I need an RNN-sized model with LSTM performance!! I need a smaller model or a better smartphone!! FastGRNN: http://manikvarma.org/pubs/kusupati18.pdf This reset gate makes no sense!! May the ReLU be with you!! I do speech recognition!! I watched Bengio and liked him!! LightGRU: https://arxiv.org/abs/1803.10225 I need regularization!!! Dropout is not good!!! AWD-LSTM: https://arxiv.org/abs/1708.02182

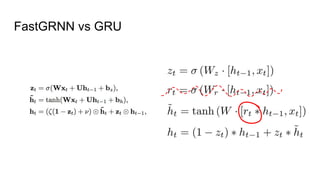

- 4. FastGRNN ● 2 trainable matrices (W and U) vs. 6 trainable matrices in a GRU layer ● Low-rank approximation of the matrices: W = W1(W2)^T ● Integer quantization of the parameters ● Piecewise-linear approximation of the non-linearities
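To make the first, second and fourth bullets concrete, here is a minimal NumPy sketch of a FastGRNN-style cell. It assumes the update rule from the linked FastGRNN paper: z_t = σ(W x_t + U h_{t-1} + b_z), h̃_t = tanh(W x_t + U h_{t-1} + b_h), h_t = (ζ(1 - z_t) + ν) ⊙ h̃_t + z_t ⊙ h_{t-1}, where the gate and the candidate share the same W and U. The class and parameter names (`FastGRNNCell`, `rank_w`, `rank_u`, `piecewise`) are hypothetical, the "hard" sigmoid/tanh are one plausible piecewise-linear choice, and integer quantization, sparsity and training are not shown.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def quant_sigmoid(x):
    # Piecewise-linear ("hard") sigmoid: max(min((x + 1) / 2, 1), 0)
    return np.clip((x + 1.0) / 2.0, 0.0, 1.0)


def quant_tanh(x):
    # Piecewise-linear ("hard") tanh: max(min(x, 1), -1)
    return np.clip(x, -1.0, 1.0)


class FastGRNNCell:
    """FastGRNN-style cell: one W and one U, shared by the gate and the candidate."""

    def __init__(self, input_dim, hidden_dim, rank_w, rank_u, piecewise=False, seed=0):
        rng = np.random.default_rng(seed)
        # Low-rank factors: W = W1 @ W2.T (hidden x input), U = U1 @ U2.T (hidden x hidden)
        self.W1 = rng.normal(0.0, 0.1, (hidden_dim, rank_w))
        self.W2 = rng.normal(0.0, 0.1, (input_dim, rank_w))
        self.U1 = rng.normal(0.0, 0.1, (hidden_dim, rank_u))
        self.U2 = rng.normal(0.0, 0.1, (hidden_dim, rank_u))
        self.b_z = np.zeros(hidden_dim)
        self.b_h = np.zeros(hidden_dim)
        # Trainable scalars zeta and nu, squashed into (0, 1) with a sigmoid
        self.zeta_raw, self.nu_raw = 1.0, -4.0
        self.gate_fn = quant_sigmoid if piecewise else sigmoid
        self.cand_fn = quant_tanh if piecewise else np.tanh

    def step(self, x, h_prev):
        # Multiply through the low-rank factors so the full W and U are never formed
        pre = self.W1 @ (self.W2.T @ x) + self.U1 @ (self.U2.T @ h_prev)
        z = self.gate_fn(pre + self.b_z)        # update gate reuses the shared pre-activation
        h_tilde = self.cand_fn(pre + self.b_h)  # candidate state reuses it too
        zeta, nu = sigmoid(self.zeta_raw), sigmoid(self.nu_raw)
        return (zeta * (1.0 - z) + nu) * h_tilde + z * h_prev

    def num_params(self):
        arrays = (self.W1, self.W2, self.U1, self.U2, self.b_z, self.b_h)
        return sum(a.size for a in arrays) + 2  # + zeta and nu


cell = FastGRNNCell(input_dim=40, hidden_dim=128, rank_w=16, rank_u=16)
h = np.zeros(128)
for x in np.random.default_rng(1).normal(size=(20, 40)):  # 20 frames of 40-dim features
    h = cell.step(x, h)
print(h.shape, cell.num_params())  # (128,) 7042
```

Because the gate and the candidate read the same pre-activation, the cell computes one matrix product pair per step instead of the three a GRU needs. Passing `piecewise=True` swaps in the clipped non-linearities, which only need comparisons and a multiply-add.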

- 7. Light Gated Recurrent Units (LightGRU) ● Remove the reset gate ● Replace tanh with ReLU ● Batch normalization to counter the instability of ReLU ● Specifically targets speech recognition ● Orthogonal weight initialization, variational dropout
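A minimal NumPy sketch of one LightGRU step, assuming the update from the linked LightGRU paper: z_t = σ(BN(W_z x_t) + U_z h_{t-1}), h̃_t = ReLU(BN(W_h x_t) + U_h h_{t-1}), h_t = z_t ⊙ h_{t-1} + (1 - z_t) ⊙ h̃_t. Batch normalization is applied only to the feed-forward terms. The names (`LiGRUCell`, `batch_norm`, `orthogonal`) are hypothetical, the batch norm uses training-mode statistics without learned scale/shift, and variational dropout is left out for brevity.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def batch_norm(a, eps=1e-5):
    # Normalize each unit over the batch axis (training-mode statistics,
    # learned scale/shift omitted for brevity)
    return (a - a.mean(axis=0)) / np.sqrt(a.var(axis=0) + eps)


def orthogonal(n, rng):
    # Orthogonal init: Q factor of the QR decomposition of a random Gaussian matrix
    q, _ = np.linalg.qr(rng.normal(size=(n, n)))
    return q


class LiGRUCell:
    def __init__(self, input_dim, hidden_dim, seed=0):
        rng = np.random.default_rng(seed)
        self.W_z = rng.normal(0.0, 0.1, (input_dim, hidden_dim))
        self.W_h = rng.normal(0.0, 0.1, (input_dim, hidden_dim))
        self.U_z = orthogonal(hidden_dim, rng)
        self.U_h = orthogonal(hidden_dim, rng)

    def step(self, x, h_prev):
        """x: (batch, input_dim), h_prev: (batch, hidden_dim)."""
        # Update gate; batch norm only on the feed-forward term
        z = sigmoid(batch_norm(x @ self.W_z) + h_prev @ self.U_z)
        # Candidate: no reset gate, ReLU instead of tanh
        h_tilde = np.maximum(0.0, batch_norm(x @ self.W_h) + h_prev @ self.U_h)
        return z * h_prev + (1.0 - z) * h_tilde


cell = LiGRUCell(input_dim=40, hidden_dim=32)
frames = np.random.default_rng(1).normal(size=(10, 8, 40))  # (time, batch, features)
h = np.zeros((8, 32))
for x in frames:
    h = cell.step(x, h)
print(h.shape)  # (8, 32)
```

Compared with a GRU, the step has two gate blocks instead of three. Because the candidate is a ReLU output and the state starts at zero, the hidden state stays non-negative, and batch norm on the input term keeps the unbounded ReLU in a reasonable range.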

- 8. Redundancy of Reset Gate

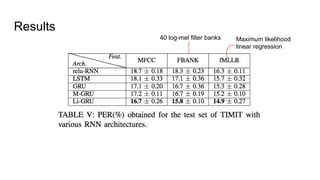

- 9. Results ● Input features: 40 log-mel filter banks ● Maximum likelihood linear regression (MLLR)

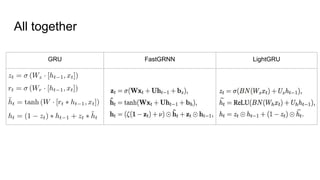
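For readers unfamiliar with the "40 log-mel filter banks" input, the sketch below computes them for a single audio frame. It assumes 16 kHz audio, a 25 ms Hann-windowed frame, a 512-point FFT and the HTK-style mel formula mel = 2595·log10(1 + f/700). These settings and the function names are illustrative assumptions, not the exact front-end used in the reported experiments.

```python
import numpy as np


def hz_to_mel(f):
    # HTK-style mel scale
    return 2595.0 * np.log10(1.0 + np.asarray(f, dtype=float) / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (np.asarray(m, dtype=float) / 2595.0) - 1.0)


def mel_filterbank(sr=16000, n_fft=512, n_mels=40, fmin=0.0, fmax=None):
    """Triangular mel filters, shape (n_mels, n_fft // 2 + 1)."""
    fmax = sr / 2.0 if fmax is None else fmax
    fft_freqs = np.linspace(0.0, sr / 2.0, n_fft // 2 + 1)
    # n_mels + 2 edge frequencies, equally spaced on the mel scale
    edges = mel_to_hz(np.linspace(hz_to_mel(fmin), hz_to_mel(fmax), n_mels + 2))
    fb = np.zeros((n_mels, fft_freqs.size))
    for m in range(n_mels):
        lo, center, hi = edges[m], edges[m + 1], edges[m + 2]
        rising = (fft_freqs - lo) / (center - lo)
        falling = (hi - fft_freqs) / (hi - center)
        fb[m] = np.maximum(0.0, np.minimum(rising, falling))
    return fb


def log_mel_frame(frame, fb, n_fft=512, eps=1e-10):
    """Log mel filter-bank energies for one frame of audio samples."""
    windowed = frame * np.hanning(len(frame))
    power = np.abs(np.fft.rfft(windowed, n=n_fft)) ** 2
    return np.log(fb @ power + eps)


sr = 16000
t = np.arange(400) / sr                      # one 25 ms frame at 16 kHz
frame = np.sin(2 * np.pi * 1000.0 * t)       # 1 kHz test tone
fb = mel_filterbank(sr=sr)
feats = log_mel_frame(frame, fb)
centers = mel_to_hz(np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), 42))[1:-1]
peak_hz = centers[np.argmax(feats)]          # center of the strongest filter
print(feats.shape, peak_hz)
```

Each frame becomes a 40-dimensional vector. In a recognizer, frames are typically taken every 10 ms, and the resulting sequence is what the recurrent layers consume.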

- 10. All together: GRU vs. FastGRNN vs. LightGRU

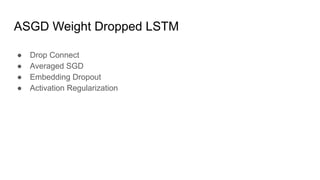
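To put the three cells side by side, this sketch counts the parameters of one layer with d inputs and h hidden units. It assumes one bias vector per gate block, and it treats LightGRU's batch-norm shift as replacing that bias. Real implementations differ: for example, PyTorch's `nn.GRU` keeps two bias vectors per block. The function names and the d = 40, h = 128 example are illustrative choices.

```python
def gru_params(d, h):
    # Update gate, reset gate, candidate: each has W (h x d), U (h x h) and one bias (h)
    return 3 * (h * d + h * h + h)


def ligru_params(d, h):
    # Update gate and candidate only (no reset gate); one bias/shift per block
    return 2 * (h * d + h * h + h)


def fastgrnn_params(d, h, rank_w=None, rank_u=None):
    # One W and one U shared by gate and candidate, optionally low-rank
    w = h * d if rank_w is None else rank_w * (h + d)
    u = h * h if rank_u is None else rank_u * (h + h)
    return w + u + 2 * h + 2  # b_z, b_h, and the scalars zeta and nu


d, h = 40, 128  # e.g. 40 log-mel features in, 128 hidden units
for name, n in [("GRU", gru_params(d, h)),
                ("LightGRU", ligru_params(d, h)),
                ("FastGRNN", fastgrnn_params(d, h)),
                ("FastGRNN, rank 16", fastgrnn_params(d, h, 16, 16))]:
    print(f"{name:>18}: {n:6d}")
```

With these assumptions the counts come out to 64,896 (GRU), 43,264 (LightGRU), 21,762 (full-rank FastGRNN) and 7,042 (rank-16 FastGRNN). This shows how removing a gate (LightGRU), sharing matrices (FastGRNN) and using low-rank factors compound.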

- 11. ASGD Weight-Dropped LSTM (AWD-LSTM) ● DropConnect ● Averaged SGD ● Embedding dropout ● Activation regularization

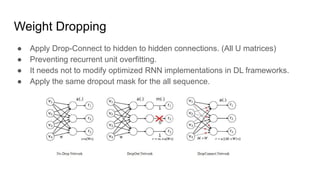

- 12. Weight Dropping ● Apply DropConnect to the hidden-to-hidden connections (all U matrices) ● Prevents overfitting on the recurrent connections ● Requires no changes to the optimized RNN implementations in DL frameworks ● The same dropout mask is used for the entire sequence

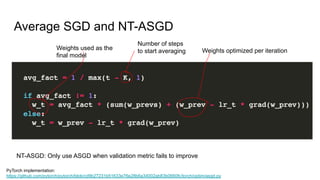
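A minimal NumPy sketch of weight dropping. It samples one DropConnect mask for the recurrent matrix U per forward pass and reuses that masked U at every time step. A plain tanh RNN stands in for the LSTM to keep the sketch short. The names `weight_drop` and `run_rnn` are hypothetical, and the inverted-dropout rescaling by 1/(1 - p) is an assumption of this sketch.

```python
import numpy as np


def weight_drop(U, p, rng):
    """DropConnect on a recurrent weight matrix: zero individual weights with
    probability p and rescale the survivors by 1 / (1 - p)."""
    mask = rng.random(U.shape) >= p
    return U * mask / (1.0 - p)


def run_rnn(xs, W, U, p=0.5, training=True, seed=0):
    """Tanh RNN over a sequence xs of shape (time, input_dim).
    The dropped U is sampled once per forward pass and reused at every step."""
    rng = np.random.default_rng(seed)
    U_used = weight_drop(U, p, rng) if training else U
    h = np.zeros(U.shape[0])
    states = []
    for x in xs:
        h = np.tanh(W @ x + U_used @ h)  # same masked U at every time step
        states.append(h)
    return np.stack(states), U_used


rng = np.random.default_rng(42)
W = rng.normal(0.0, 0.1, (64, 40))
U = rng.normal(0.0, 0.1, (64, 64))
xs = rng.normal(size=(25, 40))
states, U_train = run_rnn(xs, W, U, p=0.5)
_, U_eval = run_rnn(xs, W, U, training=False)
print(states.shape, round(float(np.mean(U_train == 0)), 2))
```

Because the mask modifies the weight matrix before the recurrence runs, the time loop itself is untouched. This is why the technique can wrap an optimized, black-box RNN kernel.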

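- 13. Averaged SGD and NT-ASGD ● ASGD runs ordinary SGD but returns the average of the iterates from step $T$ onward as the final model: $\bar{w} = \frac{1}{K - T + 1} \sum_{i=T}^{K} w_i$, where $T$ is the step at which averaging starts, $w_i$ are the weights optimized at iteration $i$, $K$ is the total number of iterations, and $\bar{w}$ are the weights used as the final model ● PyTorch implementation: https://github.com/pytorch/pytorch/blob/cd9b27231b51633e76e28b6a34002ab83b0660fc/torch/optim/asgd.py ● NT-ASGD (non-monotonically triggered ASGD): switch to averaging only once the validation metric fails to improve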

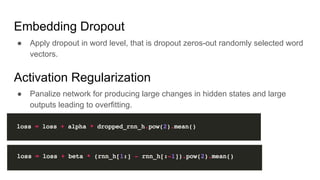
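Below is a pure-Python sketch of the two ideas on this slide, under stated assumptions. First, a non-monotonic trigger: averaging starts once the new validation loss is worse than the best loss logged more than `n` checks earlier, where `n` is a hyperparameter. Second, the averaged iterate $\bar{w}$ on a toy loss f(w) = (w - 3)^2. The function names, the toy loss and `lr = 1.0` are illustrative choices. In PyTorch, `torch.optim.ASGD` exposes the averaging start point through its `t0` argument.

```python
def nt_asgd_trigger(val_log, new_val, n=5):
    """Non-monotonic trigger (lower validation loss is better): start averaging
    once new_val is worse than the best value logged more than n checks ago."""
    t = len(val_log)
    return t > n and new_val > min(val_log[:t - n])


def sgd_with_averaging(w0, lr, steps, avg_start):
    """SGD on the toy loss f(w) = (w - 3)^2. Returns the last iterate and the
    ASGD average (1 / (K - T + 1)) * sum_{i=T..K} w_i with T = avg_start, K = steps."""
    w, total, count = w0, 0.0, 0
    for k in range(1, steps + 1):
        w -= lr * 2.0 * (w - 3.0)  # plain SGD step on the gradient 2(w - 3)
        if k >= avg_start:         # averaging phase
            total += w
            count += 1
    return w, total / count


log = [5.0, 4.0, 3.5, 3.4, 3.3]
print(nt_asgd_trigger(log, 3.2, n=2), nt_asgd_trigger(log, 3.6, n=2))  # False True

# lr = 1.0 makes SGD bounce between 6 and 0; the average lands on the optimum
last, averaged = sgd_with_averaging(w0=0.0, lr=1.0, steps=10, avg_start=3)
print(last, averaged)  # 0.0 3.0
```

The oscillating toy run is deliberately extreme. It shows why averaging helps once SGD stops making progress and starts bouncing around a minimum, which is the situation the non-monotonic trigger tries to detect.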

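- 14. Embedding Dropout ● Apply dropout at the word level: randomly selected word vectors (whole rows of the embedding matrix) are zeroed out, so every occurrence of a dropped word disappears in that pass. Activation Regularization ● Penalize the network for producing large outputs (AR) and large changes in the hidden state between time steps (temporal AR, TAR), both of which lead to overfitting.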

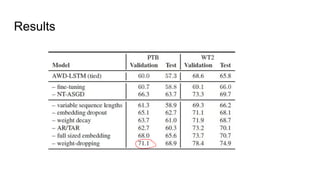
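A minimal NumPy sketch of both regularizers. Embedding dropout zeroes whole rows of the embedding matrix and rescales the kept rows by 1/(1 - p). AR penalizes the squared (dropped-out) outputs of the final RNN layer, and TAR penalizes squared differences between consecutive hidden states. The function names, the use of the mean of squares as the L2 term, and the alpha/beta values are assumptions made for illustration.

```python
import numpy as np


def embedding_dropout(E, p, rng):
    """Zero whole rows (word types) of the embedding matrix E (vocab x dim) with
    probability p and rescale the kept rows by 1 / (1 - p)."""
    keep = rng.random(E.shape[0]) >= p
    return E * keep[:, None] / (1.0 - p)


def ar_tar_penalty(h, h_dropped, alpha, beta):
    """h, h_dropped: (time, hidden) outputs of the final RNN layer, without and
    with output dropout. AR penalizes large activations, TAR penalizes large
    changes between consecutive time steps (mean of squares as the L2 term)."""
    ar = alpha * np.mean(h_dropped ** 2)
    tar = beta * np.mean((h[1:] - h[:-1]) ** 2)
    return ar + tar


rng = np.random.default_rng(0)
E = rng.normal(size=(1000, 50))          # toy vocabulary of 1000 words
E_drop = embedding_dropout(E, p=0.1, rng=rng)
tokens = np.array([5, 17, 5, 256])       # word 5 occurs twice
x = E_drop[tokens]                       # both copies of word 5 are kept or dropped together
print(np.array_equal(x[0], x[2]))        # True

h = np.ones((4, 3))                      # constant hidden states
print(ar_tar_penalty(h, h, alpha=2.0, beta=1.0))  # 2.0 (TAR term is zero)
```

During training, the penalty is simply added to the cross-entropy loss. Constant hidden states cost nothing under TAR, so the network is pushed toward smooth state trajectories.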

- 15. Results