RSJ 2017 - Grasp Adaptation Control with Finger Vision

- 1. Grasp Adaptation Control with Finger Vision: Verification with Deformable and Fragile Objects
  - Akihiko Yamaguchi (*1, *2), Chris G. Atkeson (*1)
  - *1 Robotics Institute, Carnegie Mellon University
  - *2 Graduate School of Information Sciences, Tohoku University

- 2. Tactile Sensing for AI-based Manipulation
  - For the next generation of robot manipulation (deformable objects, fragile objects, cooking, ...), an AI-based approach is necessary
    - Robot learning, reinforcement learning, machine learning, deep learning, deep RL, planning, optimization
  - Tactile sensing improves AI-based manipulation, but we don't yet know a good strategy for using tactile sensing in learning manipulation

- 3. Is Tactile Sensing Really Necessary?
  - E.g. learning grasping with deep learning:
    - (L) Learning to grasp from 50K Tries, Pinto et al. 2016 https://youtu.be/oSqHc0nLkm8
    - (R) Learning hand-eye coordination for robotic grasping, Levine et al. 2017 https://youtu.be/l8zKZLqkfII
  - No tactile sensing was used

- 4. Tactile Sensing is Useful in Many Scenarios
  - What if an uncertain external force is applied?
  - What if the robot grasps containers that look the same but differ in weight?
  - Imagine: wearing heavy gloves; frozen hands

- 7. Using Tactile Sensing in Learning Manipulation
  - To make manipulation robust and learning faster
  - In this study:
    - Using FingerVision for tactile sensing (+α)
    - Learning a general policy for grasping deformable and fragile objects

- 8. FingerVision: Vision-based Tactile Sensing
  - Multimodal tactile sensing
    - Force distribution
    - Proximity vision
      - Slip / deformation
      - Object pose, texture, shape
  - Low-cost and easy to manufacture
  - Physically robust
  - Cameras are becoming smaller and cheaper thanks to smartphones and IoT
    - NanEye: 1mm x 1mm x 1mm http://www.bapimgsys.com/area-camera/ac62kusb-color-area-camera-based-on-naneye-sensor.html

- 12. How to Use FingerVision in Learning Grasping?
  - External or head vision is necessary
  - Representation of the policy to be learned:
    - No tactile: (Vision) --> (Command)
    - With tactile: (Vision, Tactile) --> (Command)

- 13. How to Use FingerVision in Learning Grasping?
  - Grasping (picking up) structure:
    - Deciding a grasp pose (vision)
    - Reaching the gripper to the grasp pose (vision)
    - Grasping the object (tactile [force], (vision))
    - Lifting up the object (tactile [slip, force], (vision))
    - Evaluating the grasp (vision, tactile)

- 14. How to Use FingerVision in Learning Grasping?
  - Grasping (picking up) structure:
    - Grasp Pose Estimator (vision-based)
      - Deciding a grasp pose (vision)
      - Reaching the gripper to the grasp pose (vision)
    - Grasp Adaptation Controller (tactile-based)
      - Grasping the object (tactile [force], (vision))
      - Lifting up the object (tactile [slip, force], (vision))
    - Evaluating the grasp (vision, tactile)
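
The split on this slide, a vision-based Grasp Pose Estimator followed by a tactile-based Grasp Adaptation Controller and a final evaluation, can be pictured as a small pipeline. The following is only a rough sketch of that structure, not the authors' implementation: `PickUpPipeline`, `Pose`, and all four callables are hypothetical names introduced here for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# Hypothetical pose type: an (x, y, z) grasp position. The slides do not
# specify how a grasp pose is represented.
Pose = Tuple[float, float, float]


@dataclass
class PickUpPipeline:
    """Illustrative wiring of the pick-up structure (all names assumed)."""
    estimate_grasp_pose: Callable[[object], Pose]  # vision: decide a grasp pose
    reach: Callable[[Pose], None]                  # vision: move gripper to the pose
    adapt_grasp: Callable[[], bool]                # tactile: grasp and lift up
    evaluate: Callable[[], bool]                   # vision + tactile: check the result

    def run(self, image: object) -> bool:
        # Grasp Pose Estimator (vision-based)
        pose = self.estimate_grasp_pose(image)
        self.reach(pose)
        # Grasp Adaptation Controller (tactile-based)
        if not self.adapt_grasp():
            return False
        # Evaluation uses both modalities
        return self.evaluate()
```

Keeping the two modules behind separate callables mirrors the slide's point that the pose estimator and the adaptation controller can be designed, or learned, independently.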

- 15. Grasp Adaptation Controller
  - Grasping and lifting up
  - Idea: controlling to avoid slip
  - Simple state machine:
    - [1] Grasp test
      - Move the object upward slightly
      - If slip is detected --> move the object back to the initial height and close the gripper slightly
      - No slip --> [2]
    - [2] Lift-up
      - Move the object upward to a target height
      - If slip is detected --> [1]
  - The slip avoidance feedback controller is always active
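
The two-state machine on this slide can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the controller used in the talk: the `Gripper` class, the `slip_detected` callback, and every numeric parameter (heights, step sizes, step limit) are hypothetical, and the always-active slip avoidance feedback controller is left out.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Callable


class Phase(Enum):
    GRASP_TEST = auto()  # state [1] on the slide
    LIFT_UP = auto()     # state [2] on the slide


@dataclass
class Gripper:
    """Hypothetical stand-in for the robot interface (not from the slides)."""
    width: float         # finger opening [m]
    height: float = 0.0  # object height above the initial height [m]


def grasp_adaptation(
    gripper: Gripper,
    slip_detected: Callable[[Gripper], bool],  # assumed tactile slip signal
    test_height: float = 0.01,    # assumed "slightly upward" distance
    target_height: float = 0.10,  # assumed lift-up target
    close_step: float = 0.002,    # assumed "close slightly" amount
    lift_step: float = 0.01,      # assumed lift increment per iteration
    max_steps: int = 100,         # safety limit, not part of the slide
) -> bool:
    """Return True once the object reaches target_height without slipping."""
    phase = Phase.GRASP_TEST
    for _ in range(max_steps):
        if phase is Phase.GRASP_TEST:
            # [1] Move the object upward slightly and check for slip.
            gripper.height = test_height
            if slip_detected(gripper):
                # Back to the initial height, close the gripper slightly.
                gripper.height = 0.0
                gripper.width = max(0.0, gripper.width - close_step)
            else:
                phase = Phase.LIFT_UP
        else:
            # [2] Move the object upward toward the target height.
            gripper.height = min(target_height, gripper.height + lift_step)
            if slip_detected(gripper):
                phase = Phase.GRASP_TEST
            elif gripper.height >= target_height:
                return True
    return False
```

With a toy slip model such as `lambda g: g.width > 0.05`, the loop keeps tightening the gripper in the grasp test until slip stops, then lifts to the target height.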

- 16. Experiments

- 19. Computer vision failure (1)

- 20. Computer vision failure (2)

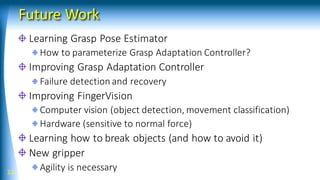

- 22. Future Work
  - Learning the Grasp Pose Estimator
    - How to parameterize the Grasp Adaptation Controller?
  - Improving the Grasp Adaptation Controller
    - Failure detection and recovery
  - Improving FingerVision
    - Computer vision (object detection, movement classification)
    - Hardware (sensitive to normal force)
  - Learning how to break objects (and how to avoid it)
  - New gripper
    - Agility is necessary

- 23. Academic Crowdfunding for FingerVision
  - Demo at: Building 1, Room 1104 (4-2)
  - http://akihikoy.net/p/fv.html


