Let's Write Shell 02: Let's Use shUnit2

Keisuke Oohata

- 2. @cotton_desu

- 3. Are you writing shell scripts?

- 5. Actually...

- 7. What is shUnit2?

- 8. What is shUnit2?
  - A test framework for shell scripts
  - Supported OSes: FreeBSD, Linux, Mac OS X, Solaris, etc.
  - Supported shells: sh, bash, dash, ksh, pdksh, zsh

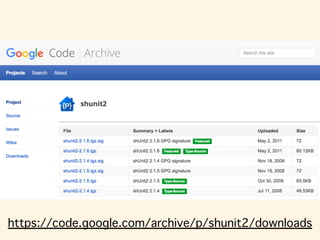

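- 9. Installation is easy

- 11. Just download & extract

- 12. How to use it

- 13. How to use it (rules)
  - Functions whose names start with test are the test targets
  - Load shunit2 at the end of the test script
  - Run the test script

- 15. For example, assertEquals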

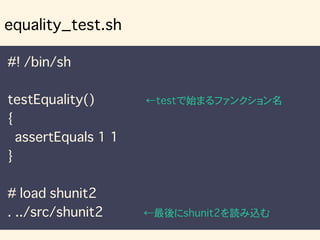
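As a minimal sketch of how `assertEquals` is typically called: it takes an optional message, then the expected value, then the actual value. The file name `add_test.sh` and the `add` function are hypothetical and not from the slides. The readability check around loading shunit2 is also an added assumption, there only so the file can be read without shunit2 present; the slides source `../src/shunit2` directly.

```shell
#! /bin/sh
# add_test.sh: hypothetical example, not from the slides.

# Function under test (hypothetical): prints the sum of two integers.
add() {
  echo $(( $1 + $2 ))
}

# A function whose name starts with "test" is picked up as a test.
# assertEquals arguments: [message] expected actual
testAddReturnsSum() {
  assertEquals "2 + 3 should be 5" 5 "$(add 2 3)"
}

# Load shunit2 last. The default path is the one used on the slides;
# the SHUNIT2 variable and the check are assumptions of this sketch.
SHUNIT2=${SHUNIT2:-../src/shunit2}
if [ -r "$SHUNIT2" ]; then
  . "$SHUNIT2"
else
  echo "shunit2 not found at $SHUNIT2" >&2
fi
```

Putting the expected value before the actual one matters for reading failures, because the report names them in that order (`expected:<...> but was:<...>`).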

- 17. Example test script

- 18. equality_test.sh

      #! /bin/sh
      testEquality()   # function name starts with "test"
      {
        assertEquals 1 1
      }
      # load shunit2 (this must come last)
      . ../src/shunit2

- 19. Example run

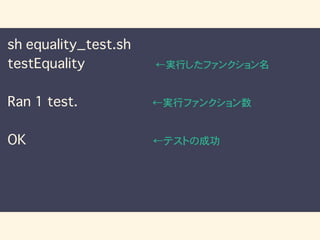

- 20. `sh equality_test.sh`

      testEquality    ← name of the function that ran
      Ran 1 test.     ← number of functions run
      OK              ← the test passed

- 21. Failing example

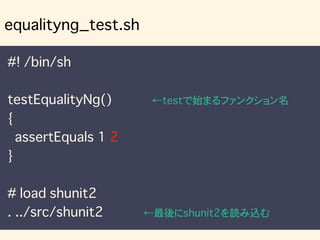

- 22. equalityng_test.sh

      #! /bin/sh
      testEqualityNg()   # function name starts with "test"
      {
        assertEquals 1 2
      }
      # load shunit2 (this must come last)
      . ../src/shunit2

- 23. Example run

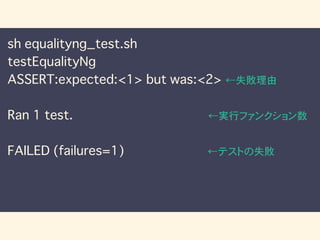

- 24. `sh equalityng_test.sh`

      testEqualityNg
      ASSERT:expected:<1> but was:<2>    ← reason for the failure
      Ran 1 test.                        ← number of functions run
      FAILED (failures=1)                ← the test failed

- 27. Demo

- 28. Summary
  - Both installation and usage are easy
  - Writing test code is easy too
  - Start your testing life with shell
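Once there is more than one test file, the "run the test script" step can be wrapped in a small helper. The following is only a sketch under stated assumptions: the function name `run_all_tests` and the `*_test.sh` naming pattern are hypothetical (the pattern is modeled on the file names in the slides), and it assumes each test script exits with a non-zero status when a test fails, which should be checked against the shunit2 version in use.

```shell
#! /bin/sh
# Hypothetical helper, not from the slides.

# Run every *_test.sh file in the given directory (default: current one),
# print how many of them exited with a non-zero status, and return
# success only if none did.
run_all_tests() {
  dir=${1:-.}
  failed=0
  for t in "$dir"/*_test.sh; do
    [ -e "$t" ] || continue        # the pattern matched no files
    echo "== $t"
    # Run from the script's own directory so that relative paths such
    # as ../src/shunit2 resolve the same way as when run by hand.
    if ! ( cd "$dir" && sh "./$(basename "$t")" ); then
      failed=$((failed + 1))
    fi
  done
  echo "failed scripts: $failed"
  [ "$failed" -eq 0 ]
}
```

For example, `run_all_tests ./test` would run each matching script in `./test` in turn, and the return status can be used directly in a build script or CI job.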