SIEDS Presentation 4-26

- 1. Optimizing Multi-Channel Health Information Delivery for Behavioral Change
  - Sponsor: Locus Health
  - Michael Buhl, James Famulare, Chris Glazier, Jennifer Harris, Alan McDowell, Greg Waldrip
  - Advisors: Laura E. Barnes and Matthew Gerber
  - University of Virginia, Department of Systems and Information Engineering

- 2. Executive Summary
  - Implemented and tested content personalization methods for third-party software with a UVA student population
    - Machine learning methods outperformed random selection, but the experiment lacked the statistical power to determine the best personalization method
    - The post-study survey indicated that daily reminders increased system interaction (97% of participants wanted daily reminders) but did not support behavioral change (only 11% felt more likely to exercise)
  - Recommend expanding the study and the system's functionality

- 3. Benefits of Telehealth
  - Reduction in readmission rates of 51% for heart failure and 44% for other illnesses (Veterans Health Administration) [1]
  - No difference in efficacy between virtual and in-person care in an 8,000-patient study [2]
  - Estimated return of $3.30 for every $1 spent on telecare (Geisinger Health Plan) [1]

- 4. Objectives Tree
  - To maximize patient health through a telehealth system
    - To decrease 30-day hospital readmission rates
    - To maximize patient engagement

- 5. Behavioral Change Support System Design
  - Respond to information or question cards
  - SMS or email
  - Random content
  - Receive awards
  - Interaction data recorded

- 6. To maximize patient health through a telehealth application
  - To decrease 30-day hospital readmission rates
  - To maximize patient engagement

- 7. To maximize patient engagement
  - To maximize frequency of page visits
    - Consecutive Login Rate: % of days consecutively logged in
    - Open Email Rate: % of total emails opened
    - Dwell Time: total time spent on cards
  - To maximize effectiveness of cards

- 9. To maximize effectiveness of cards
  - To maximize the effectiveness of information cards
    - Response Rate: % of total cards responded to
  - To maximize the effectiveness of questionnaire cards

- 11. To maximize the effectiveness of questionnaire cards
  - To increase medical usage rates
    - Daily Habit Rate: % of healthy card responses
  - To increase other healthy habit rates
    - Daily Habit Rate: % of healthy card responses
  - To maximize the number of card interactions
    - Response Rate: % of total cards responded to
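The metrics in the objectives tree (Consecutive Login Rate, Open Email Rate, Response Rate, Daily Habit Rate, Dwell Time) can be computed from a per-user interaction summary. The sketch below is a minimal illustration, not the team's pipeline: the `UserWeek` record, the `engagement_metrics` and `ratio` helpers, and all example numbers are hypothetical, and "consecutive login" is assumed to mean a study day on which the user also logged in the day before.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class UserWeek:
    """Hypothetical per-user interaction summary for one study week."""
    login_days: set[date]      # days on which the user opened the system
    emails_sent: int           # daily reminder emails sent to the user
    emails_opened: int         # reminder emails the user opened
    cards_shown: int           # cards served to the user
    cards_responded: int       # cards the user responded to
    question_responses: int    # responses to question cards
    healthy_responses: int     # question-card responses reporting a healthy habit
    dwell_seconds: float       # total time spent on cards


def ratio(numerator: float, denominator: float) -> float:
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def engagement_metrics(week: UserWeek, study_days: list[date]) -> dict[str, float]:
    # Assumed definition: share of study days after the first on which the
    # user logged in both that day and the day before.
    consecutive = sum(
        1 for day in study_days[1:]
        if day in week.login_days and day - timedelta(days=1) in week.login_days
    )
    return {
        "consecutive_login_rate": ratio(consecutive, len(study_days) - 1),
        "open_email_rate": ratio(week.emails_opened, week.emails_sent),
        "response_rate": ratio(week.cards_responded, week.cards_shown),
        "daily_habit_rate": ratio(week.healthy_responses, week.question_responses),
        "dwell_time_seconds": week.dwell_seconds,
    }


# Usage with made-up numbers for a single seven-day week.
start = date(2016, 1, 4)  # hypothetical start date
days = [start + timedelta(days=i) for i in range(7)]
example = UserWeek(
    login_days={days[i] for i in (0, 1, 2, 4, 5)},
    emails_sent=7, emails_opened=5,
    cards_shown=35, cards_responded=20,
    question_responses=10, healthy_responses=6,
    dwell_seconds=240.0,
)
print(engagement_metrics(example, days))
```

In this example the user logged in on days 0, 1, 2, 4, and 5, so three of the six possible day pairs are consecutive and the consecutive login rate is 0.5.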

- 12. Literature Review
  - Morrison's (2015) psychology theory research suggests targeting content to improve digital health behavioral systems [3]
  - Recommender systems and regression analysis personalize content
    - Recommender systems use inferred ratings of viewed content to estimate ratings of unviewed content [4]
    - Ratings are based on user characteristics (collaborative filtering) or content characteristics (content-based filtering) [4]
    - Regression analysis identifies statistically significant correlations between metrics and demographic information, internet behaviors, and contextual data [5]

- 13. Behavioral Change Support System Design
  - Respond to information or question cards
  - SMS or email
  - Receive awards
  - Interaction data recorded
  - Data exported
  - Content strategies determined
  - Strategies updated in system
  - Targeted content

- 14. Experimental Design: Student Exercise Study
  - Population: surveyed Systems Engineering students
  - Week 1 (n = 44): single study group, randomized content
  - Week 2:
    - Control group (n = 15): randomized content
    - Regression group (n = 14): targeted content
    - Collaborative filtering group (n = 15): targeted content
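The slides give only the Week 2 group sizes (15, 14, 15), not how participants were assigned. Assuming a simple random split of the 44 Week 1 participants, the assignment could be sketched as below; `assign_groups`, the group keys, and the integer participant IDs are hypothetical.

```python
import random


def assign_groups(participant_ids, sizes, seed=None):
    """Randomly split participants into named groups of the given sizes."""
    shuffled = list(participant_ids)
    if sum(sizes.values()) != len(shuffled):
        raise ValueError("group sizes must add up to the number of participants")
    rng = random.Random(seed)  # seeded generator makes the split reproducible
    rng.shuffle(shuffled)
    groups, start = {}, 0
    for name, size in sizes.items():
        groups[name] = shuffled[start:start + size]
        start += size
    return groups


sizes = {"control": 15, "regression": 14, "collaborative_filtering": 15}
groups = assign_groups(range(1, 45), sizes, seed=2016)
for name, members in groups.items():
    print(name, len(members))
```

Seeding the generator keeps the assignment reproducible, which helps when the split has to be audited later.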

- 15. Preliminary Results: Week 1
  - Over half of participants responded to content (58.4%)
  - A majority of users logged in on consecutive days (64.9%)
  - Most users opened daily email reminders to access the system (71.5%)
  - Participants spent an average of 32.5 seconds in the system per 5 cards
  - Data informed Week 2 content targeting strategies

- 16. Models: Regression
  - Regressed 5 metrics on 17 predictors based on user and card characteristics, looking for statistically significant and actionable predictors
  - Regression indicated a significant negative correlation between response rate and information cards
  - To test these results in Week 2, we removed both fact-based and non-fact-based information cards for the Regression group

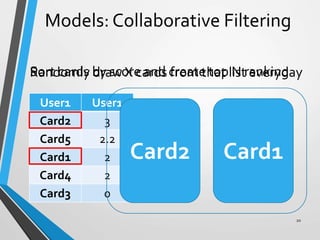
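The slides do not list the 17 predictors or show the fitted models. As a hedged illustration of the kind of test described, the sketch below regresses a single metric (whether a card was responded to) on a single predictor (whether the card is an information card) with ordinary least squares in NumPy, a linear probability model. The response data are made up; a library such as statsmodels (`OLS`) would additionally report p-values.

```python
import numpy as np

# Hypothetical card views: 1 = user responded, 0 = no response.
question_card_responses = [1, 1, 0, 1, 1, 1, 0, 1, 1, 1]
information_card_responses = [0, 0, 1, 0, 0, 1, 0, 0, 0, 1]

y = np.array(question_card_responses + information_card_responses, dtype=float)
is_information = np.array([0] * 10 + [1] * 10, dtype=float)
X = np.column_stack([np.ones_like(is_information), is_information])  # intercept + dummy

beta, *_ = np.linalg.lstsq(X, y, rcond=None)      # ordinary least squares fit
residuals = y - X @ beta
n, k = X.shape
sigma2 = residuals @ residuals / (n - k)           # residual variance estimate
std_err = np.sqrt(np.diag(sigma2 * np.linalg.inv(X.T @ X)))
t_stats = beta / std_err

print("intercept, information-card effect:", beta)
print("t statistics:", t_stats)
```

With a single dummy predictor, the intercept is the question-card response rate (0.8 here) and the coefficient is the difference between the information-card and question-card rates (-0.5 here). A negative, significant coefficient is the pattern the slide describes.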

- 17. Models: Collaborative Filtering

  |  | Card1 | Card2 | Card3 | Card4 | Card5 |
  |---|---|---|---|---|---|
  | User1 | 2 | 3 | 0 | 2 |  |
  | User2 |  | 3 | 1 | 2 | 3 |
  | User3 | 1 | 2 | 3 |  | 2 |

  - Card score is determined implicitly by a set of rules that rewards users for card interactions
  - First week average card score: 1.88 out of a maximum of 3

- 18. Models: Collaborative Filtering
  - Goal: fill in the blanks in the score matrix
  - Normalized ratings (each user's mean score subtracted from their scores) are used to calculate user similarity:

  |  | Card1 | Card2 | Card3 | Card4 | Card5 |
  |---|---|---|---|---|---|
  | User1 | .25 | 1.25 | -1.75 | .25 |  |
  | User2 |  | .75 | -1.25 | -.25 | .75 |
  | User3 | -1 | 0 | 1 |  | 0 |

  - Sim(1, 2) = .95, Sim(1, 3) = -.57, Sim(2, 3) = -.76
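The slides do not state which similarity measure produced these values. One common choice that approximately reproduces Sim(1, 2) and Sim(2, 3) is cosine similarity of the normalized (mean-centered) ratings, taken only over the cards both users have scored. This is an assumption. Under it, Sim(1, 3) comes out near -0.65 rather than the -.57 shown, so the original computation may have differed slightly:

$$
\mathrm{sim}(a,b) = \frac{\sum_{p \in P_{ab}} (r_{a,p} - \bar{r}_a)(r_{b,p} - \bar{r}_b)}{\sqrt{\sum_{p \in P_{ab}} (r_{a,p} - \bar{r}_a)^2}\;\sqrt{\sum_{p \in P_{ab}} (r_{b,p} - \bar{r}_b)^2}}
$$

Here $P_{ab}$ is the set of cards scored by both users and $\bar{r}_a$ is user $a$'s mean over all cards they scored. For User1 and User2, $P_{12} = \{\text{Card2}, \text{Card3}, \text{Card4}\}$:

$$
\mathrm{sim}(1,2) = \frac{(1.25)(0.75) + (-1.75)(-1.25) + (0.25)(-0.25)}{\sqrt{1.25^2 + 1.75^2 + 0.25^2}\;\sqrt{0.75^2 + 1.25^2 + 0.25^2}} = \frac{3.0625}{\sqrt{4.6875}\,\sqrt{2.1875}} \approx 0.956
$$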

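- 19. Models: Collaborative Filtering
  - Blanks are filled with predicted scores (bold), computed from the normalized ratings and user similarities above:

  |  | Card1 | Card2 | Card3 | Card4 | Card5 |
  |---|---|---|---|---|---|
  | User1 | 2 | 3 | 0 | 2 | **2.2** |
  | User2 | **2.8** | 3 | 1 | 2 | 3 |
  | User3 | 1 | 2 | 3 | **2** | 2 |

  $$
  \text{Predicted Card Score}_{a,p} = \bar{r}_a + \frac{\sum_{b} \mathrm{sim}(a,b)\,\left(r_{b,p} - \bar{r}_b\right)}{\sum_{b} \left|\mathrm{sim}(a,b)\right|}
  $$

  - $\bar{r}_a$ is user $a$'s average card score, $\mathrm{sim}(a,b)$ is the similarity between users $a$ and $b$, and $r_{b,p} - \bar{r}_b$ is user $b$'s normalized rating of card $p$; the sums run over the other users who have scored card $p$
  - Example, User1/Card5: 1.75 + (.95 × .75 + (-.57) × 0) / (.95 + .57) ≈ 2.2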
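The worked example on slides 17 to 19 can be reproduced with a short user-based collaborative filtering sketch. The sketch makes two assumptions. First, similarity is cosine similarity of mean-centered scores over co-rated cards. Second, the weighted sum is divided by the sum of absolute similarities. Under these assumptions the code recovers the slide's predicted scores (2.2, 2.8, 2) to one decimal place. `SCORES`, `similarity`, and `predict` are hypothetical names. Slide 21 reports that item-based filtering was ultimately selected, so this sketch mirrors only the user-based teaching example.

```python
import math

# Score matrix from slide 17; None marks a card the user has not scored yet.
SCORES = {
    "User1": [2, 3, 0, 2, None],
    "User2": [None, 3, 1, 2, 3],
    "User3": [1, 2, 3, None, 2],
}


def mean_score(row):
    """Average over the cards the user has actually scored."""
    known = [s for s in row if s is not None]
    return sum(known) / len(known)


def similarity(a, b, scores):
    """Cosine similarity of mean-centered scores over co-rated cards (assumed measure)."""
    ma, mb = mean_score(scores[a]), mean_score(scores[b])
    pairs = [(x - ma, y - mb) for x, y in zip(scores[a], scores[b])
             if x is not None and y is not None]
    num = sum(x * y for x, y in pairs)
    den = math.sqrt(sum(x * x for x, _ in pairs)) * math.sqrt(sum(y * y for _, y in pairs))
    return num / den if den else 0.0


def predict(user, card, scores):
    """User mean plus the similarity-weighted average of neighbors' normalized scores."""
    num = den = 0.0
    for other, row in scores.items():
        if other == user or row[card] is None:
            continue  # only neighbors who scored this card contribute
        sim = similarity(user, other, scores)
        num += sim * (row[card] - mean_score(row))
        den += abs(sim)
    base = mean_score(scores[user])
    return base + num / den if den else base


for user, row in SCORES.items():
    for card, score in enumerate(row):
        if score is None:
            print(user, f"Card{card + 1}", round(predict(user, card, SCORES), 1))
# User1 Card5 2.2
# User2 Card1 2.8
# User3 Card4 2.0
```

Dividing by the sum of absolute similarities keeps the correction on the same scale as the normalized ratings even when some neighbors are negatively correlated. Dividing by the plain sum would not reproduce the slide's values.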

- 20. Models: Collaborative Filtering
  - Sort cards by score and create a top-N ranking, e.g., User1: Card2 (3), Card5 (2.2), Card1 (2), Card4 (2), Card3 (0), giving the list Card2, Card5, Card1, Card4
  - Randomly draw X cards from that list every day, e.g., Card2 and Card1
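The ranking-and-draw step can be sketched as follows, using User1's scores from the slide with N = 4 and X = 2 as in the example (the appendix gives 5 cards drawn from a top 10 for the deployed system). `top_n` and `daily_cards` are hypothetical helper names. Because the draw is random, the cards printed here will not necessarily match the Card2/Card1 pair shown on the slide.

```python
from __future__ import annotations

import random


def top_n(card_scores: dict[str, float], n: int) -> list[str]:
    """Return the n highest-scoring cards, best first.

    sorted() is stable even with reverse=True, so cards with equal scores
    keep their original order.
    """
    ranked = sorted(card_scores, key=card_scores.get, reverse=True)
    return ranked[:n]


def daily_cards(card_scores: dict[str, float], n: int, x: int, rng: random.Random) -> list[str]:
    """Randomly draw x distinct cards from the user's top-n list."""
    return rng.sample(top_n(card_scores, n), x)


user1 = {"Card1": 2, "Card2": 3, "Card3": 0, "Card4": 2, "Card5": 2.2}
ranking = top_n(user1, 4)
print(ranking)  # ['Card2', 'Card5', 'Card1', 'Card4']
print(daily_cards(user1, n=4, x=2, rng=random.Random(2016)))
```

Sampling without replacement from the top-N list, rather than always showing the top X, adds some day-to-day variety while still favoring highly scored cards.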

- 21. Models: Collaborative Filtering
  - We evaluated three collaborative filtering methods, each using a different method to infer unknown card scores
  - Based on cross-validation, item-based collaborative filtering provided the lowest RMSE
  - The method results provided the basis for the collaborative filtering user group in Week 2 testing
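The slides do not name the three methods or the cross-validation scheme. The sketch below shows the selection mechanics only: leave-one-out cross-validation over the known entries of the slide 17 score matrix, scored by RMSE. Two simple mean predictors serve as hypothetical stand-ins for the actual collaborative filters. Each known score is hidden in turn, predicted from the remaining scores, and the candidate with the lowest RMSE is kept.

```python
import math

# Score matrix from slide 17 (rows: users, columns: cards); None = not scored.
SCORES = [
    [2, 3, 0, 2, None],
    [None, 3, 1, 2, 3],
    [1, 2, 3, None, 2],
]


def _mean(values):
    known = [v for v in values if v is not None]
    return sum(known) / len(known) if known else 0.0


def user_mean(scores, u, c):
    """Stand-in predictor: the user's average score."""
    return _mean(scores[u])


def card_mean(scores, u, c):
    """Stand-in predictor: the card's average score across users."""
    return _mean(row[c] for row in scores)


def leave_one_out_rmse(scores, predictor):
    """Hide each known score in turn, predict it from the rest, and return the RMSE."""
    errors = []
    for u, row in enumerate(scores):
        for c, actual in enumerate(row):
            if actual is None:
                continue
            held_out = [r[:] for r in scores]  # copy so the original matrix is untouched
            held_out[u][c] = None
            errors.append(predictor(held_out, u, c) - actual)
    return math.sqrt(sum(e * e for e in errors) / len(errors))


candidates = {"user mean": user_mean, "card mean": card_mean}
results = {name: leave_one_out_rmse(SCORES, f) for name, f in candidates.items()}
best = min(results, key=results.get)
print(results, "best:", best)
```

Replacing the stand-ins with user-based and item-based predictors would give the comparison the slide describes. Leave-one-out is chosen here only because the example matrix is tiny.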

- 22. Week 2 Results: Metrics
  - Content targeting yielded higher response and consecutive login rates than random selection, but the differences were not statistically significant, so the experiment could not determine the best personalization method.
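The slides do not say which significance test was used. One assumption-light way to compare a per-user metric between two small groups is a two-sided permutation test, sketched below. The response rates are made up for illustration and are not the study's data. The function names are hypothetical.

```python
import random


def mean(values):
    return sum(values) / len(values)


def permutation_test(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Returns the observed difference and an estimated p-value.
    """
    rng = random.Random(seed)
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    size_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # randomly relabel users as group A or group B
        diff = mean(pooled[:size_a]) - mean(pooled[size_a:])
        if abs(diff) >= abs(observed) - 1e-12:  # small tolerance for float round-off
            extreme += 1
    # +1 in numerator and denominator counts the observed labelling itself
    return observed, (extreme + 1) / (n_permutations + 1)


# Made-up per-user response rates, for illustration only.
targeted = [0.72, 0.65, 0.58, 0.80, 0.61, 0.70, 0.55, 0.68, 0.74, 0.63, 0.59, 0.66, 0.71, 0.60, 0.69]
random_selection = [0.62, 0.57, 0.66, 0.54, 0.70, 0.59, 0.61, 0.52, 0.64, 0.58, 0.67, 0.55, 0.60, 0.63, 0.56]
diff, p_value = permutation_test(targeted, random_selection)
print(f"difference in mean response rate: {diff:.3f}, p = {p_value:.4f}")
```

With only about 15 users per group, moderate differences often fail to reach significance. This is consistent with the slides' conclusion that a larger experiment is needed.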

- 23. Post-Study Survey Results
  - All 44 users completed a post-study questionnaire to evaluate system and experimental efficacy
  - Suggested improvements:
    - Prevent users from simply clicking through questions
    - Add more goal-setting features or incentives
    - Increase content diversity
  - Endorsed features:
    - Email notifications
    - System usability

- 24. Preferred Mechanism of Communication
  - Participants preferred email notifications over text messages
  - 3% of participants did not want daily reminders

- 25. Likelihood to Exercise More Due to Study Participation
  - 11% of participants felt the study increased their likelihood to exercise more

- 26. Increase in Access to System During Second Week of Study
  - 23% of participants felt they used the system more in the second week

- 27. Limitations
  - Experiment size limited statistical significance
  - Non-medical population proved a poor proxy
  - Lack of content variation reduced collaborative filtering effectiveness
  - System shortcomings:
    - No method to prevent rapid clicking through cards
    - Top card lists did not update automatically

- 28. Future Work
  - Expand experiment scale to validate targeting method
  - Use a large, non-homogeneous medical population
  - Create a larger, more diverse content base
  - Improve content targeting process
  - Automate collaborative filtering process
  - Increase effectiveness of information cards

- 29. Conclusions
  - Implemented and tested content personalization methods for third-party software with a UVA student population
    - Machine learning methods outperformed random selection, but the experiment lacked the statistical power to determine the best personalization method
    - The post-study survey indicated that daily reminders increased system interaction (97% of participants wanted daily reminders) but did not support behavioral change (only 11% felt more likely to exercise)
  - Recommend expanding the study and the system's functionality

- 30. References
  - [1] The Promise of Telehealth for Hospitals, Health Systems and Their Communities. 2015. http://www.aha.org/research/reports/tw/15jan-tw-telehealth.pdf
  - [2] Telemedicine Guide: Telemedicine Statistics. 2016. evisit.com/what-is-telemedicine/#13
  - [3] Morrison, Leanne G. "Theory-based Strategies for Enhancing the Impact and Usage of Digital Health Behavior Change Interventions: A Review." Digital Health 1, no. 1 (2015): 1-10.
  - [4] Rajaraman, Anand, and Ullman, J. "Recommendation Systems." Mining of Massive Datasets 1 (2012).
  - [5] Drive Higher Conversions by Personalizing the Website Content Based on the Visitor. 2016. http://www.hebsdigital.com/ourservices/smartcms-modules/dynamic-content-personalization

- 31. Appendix

- 32. Card Scoring Method
  - +1 point for an information card response
  - +0.5 points for a question card response, and +0.5 additional points for a healthy question card response
  - +1 point for time spent on cards > 30
  - +1 point for a consecutive visit to cards (cards also visited the day before)
  - First week average card score: 1.88 [1.82, 1.93]
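The scoring rules above translate directly into a small function. The rules come from the slide. Two details are assumptions: the time threshold is taken to be 30 seconds (the slide gives no unit), and the function name and argument names are hypothetical. Under these rules, an information card and a question card can each earn at most 3 points, matching the maximum quoted on slide 17.

```python
def card_score(card_type, responded, healthy=False,
               seconds_on_cards=0.0, visited_previous_day=False):
    """Implicit score for one user's interaction with one card (max 3 points)."""
    if card_type not in ("information", "question"):
        raise ValueError(f"unknown card type: {card_type!r}")
    score = 0.0
    if responded:
        if card_type == "information":
            score += 1.0          # information card response
        else:
            score += 0.5          # question card response
            if healthy:
                score += 0.5      # bonus for a healthy answer
    if seconds_on_cards > 30:     # unit assumed to be seconds
        score += 1.0
    if visited_previous_day:      # consecutive visit to cards
        score += 1.0
    return score


print(card_score("information", True, seconds_on_cards=45, visited_previous_day=True))
print(card_score("question", True, healthy=False, seconds_on_cards=10))
```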

- 33. State of Mental Health in the US
  - 1 in 4 adults experience mental illness each year
  - Only 40% receive treatment
  - 55% of 3,100 counties have no practicing mental healthcare workers
  - Telemental health is a viable solution

- 34. Literature Review
  - Existing telehealth applications employ basic, rule-based content targeting methods
  - Morrison's (2015) psychology theory research suggests targeting content to improve digital health behavioral systems
  - Regression models and machine learning applications use demographic information, internet behaviors, and contextual data to target content
  - Recommender systems, an application of machine learning, are of interest because of their use in current content targeting systems (e.g., Netflix) and their ability to be automated

- 35. [Figure: example user-by-card score matrices (Users × Cards)]
  1. User responds to question and information cards
  2. Scores are calculated by executing the card scoring algorithm
  3. Cross-validation selects the best collaborative filter
  4. The best collaborative filter computes user card rankings
  5. The system randomly draws 5 cards from the top 10 ranking

Editor's Notes

- This capstone project will design and execute a small, local IRIS trial to generate sample data and develop machine learning techniques and predictive analytics algorithms.

- http://evisit.com/36-telemedicine-statistics-know/

- Mention IRB and details of population

- Adapted from Recommender Systems: An Introduction

- This capstone project will design and execute a small, local Moxie trial to generate sample data and develop machine learning techniques and predictive analytics algorithms.

- ps.psychiatryonline.org. Rene Quashie: "patients surveyed have consistently stated that they believe telemental health to be a credible and effective practice of medicine, and studies have found little or no difference in patient satisfaction as compared with face-to-face mental health consultations" http://www.techhealthperspectives.com/2015/08/24/the-boom-in-telemental-health/#%2EVds4MOh521E%2Elinkedin
