Spark_Talha.pptx

- 1. Apache Spark Muhammad Talha Ashfaq

- 2. Introduction • Big Data describes data sets so large and complex that they are difficult to store and process with traditional data-processing tools. • In the early 2000s, the amount of data being generated grew rapidly with the spread of the internet, social media, and other digital technologies. • Organizations faced volumes of data that their existing systems could not process efficiently.

- 3. Hadoop • Hadoop was created in 2006 to address the challenge of processing Big Data. It is a distributed framework that lets organizations store and process large volumes of data across a cluster of computers. In its original form, Hadoop had two main components (later versions added YARN for cluster resource management): • Hadoop Distributed File System (HDFS): a distributed file system that stores data across multiple machines. • MapReduce: a programming model for processing large data sets in parallel.
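To make the MapReduce model concrete, here is a minimal single-process sketch of the classic word-count job in plain Python. It only simulates the map, shuffle, and reduce phases on one machine and does not use Hadoop's Java API; the function names `map_phase`, `shuffle_phase`, `reduce_phase`, and `word_count` are hypothetical.

```python
# Single-process simulation of a MapReduce word count (not Hadoop's API).
from __future__ import annotations

from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(line: str) -> Iterator[tuple[str, int]]:
    """Map: emit (word, 1) for every word; each line is handled independently,
    which is what lets a real cluster spread lines across machines."""
    for word in line.lower().split():
        yield (word, 1)


def shuffle_phase(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle: group all intermediate values by key."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reduce: combine the values for one key into a single result."""
    return (key, sum(values))


def word_count(lines: list[str]) -> dict[str, int]:
    intermediate = [pair for line in lines for pair in map_phase(line)]
    grouped = shuffle_phase(intermediate)
    return dict(reduce_phase(k, v) for k, v in grouped.items())


if __name__ == "__main__":
    docs = ["Spark and Hadoop", "Hadoop MapReduce", "Spark Spark"]
    print(word_count(docs))
```

Running it prints `{'spark': 3, 'and': 1, 'hadoop': 2, 'mapreduce': 1}`. The programmer writes only the map and reduce functions; the framework handles distributing the work and grouping keys in between.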

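- 4. Apache Spark • Apache Spark is a unified analytics engine for large-scale data processing. • It began in 2009 as a research project at the University of California, Berkeley. • Spark is not built on top of Hadoop: it is an independent engine, although it can run on Hadoop clusters (through YARN) and read data stored in HDFS. It addresses limitations of Hadoop MapReduce, such as slow performance on iterative and interactive workloads (MapReduce writes intermediate results to disk between jobs) and the lack of support for stream processing.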

- 5. Spark's Key Features • Several features make Spark a popular choice for Big Data processing: • In-memory processing: Spark can keep intermediate data in memory between operations instead of writing it to disk, which makes iterative and interactive workloads much faster than with Hadoop MapReduce. • Near-real-time processing: Spark's streaming APIs process incoming data with low latency (by default as a series of small micro-batches), which suits applications such as fraud detection and social media analysis. • Support for multiple programming languages: Spark provides APIs for Java, Scala, Python, and R. • Unified analytics engine: the same engine handles batch processing, stream processing, machine learning, and graph processing.
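In-memory processing pays off most when the same data is reused, as in iterative algorithms. The toy model below, written in plain Python rather than against Spark, counts how many times a hypothetical `CountingSource` (standing in for data on disk) is read when a dataset is used five times, with and without caching. `CountingSource`, `Dataset`, and `run_iterations` are illustrative names; in real Spark the comparable step is calling `cache()` or `persist()` on an RDD or DataFrame.

```python
# Toy model of caching (hypothetical classes, not Spark's API): compare how
# often the "disk" source is read with and without keeping data in memory.
from __future__ import annotations

from typing import Callable


class CountingSource:
    """Stand-in for data on disk; counts how many times it is read."""

    def __init__(self, records: list[int]) -> None:
        self.records = records
        self.reads = 0

    def read(self) -> list[int]:
        self.reads += 1
        return list(self.records)


class Dataset:
    """Recomputes its contents from the source on every use unless cached."""

    def __init__(self, compute: Callable[[], list[int]]) -> None:
        self._compute = compute
        self._cached: list[int] | None = None
        self._use_cache = False

    def cache(self) -> Dataset:
        self._use_cache = True
        return self

    def collect(self) -> list[int]:
        if self._use_cache:
            if self._cached is None:
                self._cached = self._compute()  # first use: compute, keep in memory
            return self._cached
        return self._compute()  # no cache: go back to the source every time


def run_iterations(use_cache: bool, iterations: int = 5) -> tuple[int, list[int]]:
    source = CountingSource(list(range(10)))
    data = Dataset(lambda: [x * 2 for x in source.read()])
    if use_cache:
        data.cache()
    # Each iteration reuses the same dataset, like an iterative algorithm.
    results = [sum(data.collect()) for _ in range(iterations)]
    return source.reads, results


if __name__ == "__main__":
    print(run_iterations(use_cache=False))
    print(run_iterations(use_cache=True))
```

Without caching, the source is read once per iteration (5 reads); with caching, it is read once and the remaining iterations are served from memory. Both runs return the same results (`[90, 90, 90, 90, 90]`).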

- 6. Spark's Architecture Spark's architecture is based on the following components: • Cluster manager: manages the machines in the cluster and allocates resources to Spark applications (for example, Spark's standalone manager, Hadoop YARN, or Kubernetes). • Driver: runs the application's main program, breaks the work into tasks, and coordinates their execution across the cluster. • Executors: processes on the cluster nodes that run the tasks assigned by the driver and can cache data in memory. • RDD (Resilient Distributed Dataset): an immutable collection of records split into partitions across the cluster and processed in parallel. It can be cached in memory, and it is "resilient" because a lost partition can be recomputed from the chain of transformations (its lineage) that produced it.

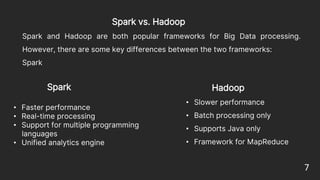
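The architecture slide can be illustrated with a small single-process model. This is a sketch, not Spark's implementation: `ToyRDD`, `compute_partition`, and the use of a thread pool to stand in for executors are all hypothetical, and the cluster manager is not modeled. It shows three ideas from the slide: data split into partitions, lazy transformations recorded as lineage, and a driver that turns an action into one task per partition for the executors to run.

```python
# Toy model of RDDs, a driver, and executors (hypothetical, not Spark's code).
from __future__ import annotations

from concurrent.futures import ThreadPoolExecutor
from typing import Callable


class ToyRDD:
    """A list of partitions plus the lineage needed to recompute them."""

    def __init__(self, partitions: list[list[int]],
                 ops: tuple[tuple[str, Callable], ...] = ()) -> None:
        self._source = partitions  # base data, already split into partitions
        self._ops = ops            # lineage: recorded transformations, not yet run

    # Transformations are lazy: they only return a new RDD with a longer lineage.
    def map(self, f: Callable[[int], int]) -> ToyRDD:
        return ToyRDD(self._source, self._ops + (("map", f),))

    def filter(self, p: Callable[[int], bool]) -> ToyRDD:
        return ToyRDD(self._source, self._ops + (("filter", p),))

    def lineage(self) -> list[str]:
        return [name for name, _ in self._ops]

    def compute_partition(self, index: int) -> list[int]:
        """One task: replay the lineage on a single partition.
        Calling it again is also how a lost partition would be rebuilt."""
        data = self._source[index]
        for name, f in self._ops:
            if name == "map":
                data = [f(x) for x in data]
            else:  # "filter"
                data = [x for x in data if f(x)]
        return data

    # Action: the "driver" submits one task per partition to a pool of
    # "executor" threads, then gathers the results in partition order.
    def collect(self, num_executors: int = 2) -> list[int]:
        with ThreadPoolExecutor(max_workers=num_executors) as pool:
            parts = pool.map(self.compute_partition, range(len(self._source)))
            return [x for part in parts for x in part]


if __name__ == "__main__":
    rdd = ToyRDD([[1, 2, 3], [4, 5, 6]])
    even_squares = rdd.map(lambda x: x * x).filter(lambda x: x % 2 == 0)
    print(even_squares.lineage())             # ['map', 'filter']
    print(even_squares.collect())             # [4, 16, 36]
    print(even_squares.compute_partition(1))  # [16, 36], rebuilt from lineage
```

Nothing is computed until `collect()` is called, mirroring the split between transformations and actions in real Spark. In an actual cluster, executors are separate processes on worker nodes rather than threads, and the driver's scheduler also handles data locality and task retries.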

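- 7. Spark vs. Hadoop Spark and Hadoop are both popular frameworks for Big Data processing, but they differ in several key ways:

| Spark | Hadoop (MapReduce) |
| --- | --- |
| Faster performance, especially for iterative workloads (in-memory processing) | Slower performance (intermediate results are written to disk) |
| Batch and near-real-time stream processing | Batch processing only |
| APIs for Java, Scala, Python, and R | Primarily Java (other languages via Hadoop Streaming) |
| Unified analytics engine (SQL, streaming, machine learning, graphs) | Framework for running MapReduce jobs |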

- 8. Conclusion • Spark is a popular choice for Big Data processing because it is faster, more versatile, and easier to use than Hadoop MapReduce. • However, Hadoop is still widely used, especially for batch processing, and its storage and resource-management layers (HDFS and YARN) are often used together with Spark.