Sql optimize

- 1. DBA 322: Optimizing Stored Procedure Performance
  - Kimberly L. Tripp
  - Solid Quality Learning, SolidQualityLearning.com, Email: Kimberly@SolidQualityLearning.com
  - SYSolutions, Inc., SQLSkills.com, Email: Kimberly@SQLSkills.com

- 2. Introduction
  - Kimberly L. Tripp, SQL Server MVP
  - Principal Mentor, Solid Quality Learning: in-depth, high-quality training around the world (www.SolidQualityLearning.com)
  - Content Manager for www.SQLSkills.com
  - Writer/Editor for TSQL Solutions/SQL Mag (www.tsqlsolutions.com and www.sqlmag.com)
  - Consultant/Trainer/Speaker
  - Coauthor of the MSPress title SQL Server 2000 High Availability
  - Presenter/Technical Manager for the SQL Server 2000 High Availability Overview DVD
  - Very approachable. Please ask me questions!

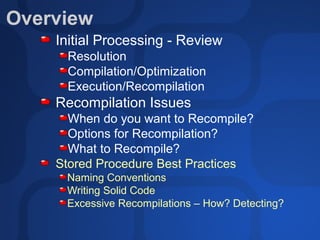

- 3. Overview
  - Initial Processing (review): Resolution, Compilation/Optimization, Execution/Recompilation
  - Recompilation Issues: When do you want to recompile? Options for recompilation? What to recompile?
  - Stored Procedure Best Practices: Naming Conventions, Writing Solid Code, Excessive Recompilations (how? detecting?)

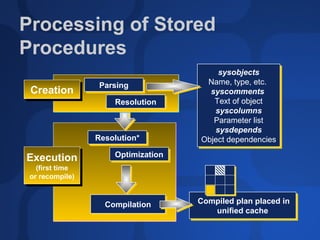

- 4. Processing of Stored Procedures (flow diagram)
  - Creation: Parsing, then Resolution. The definition is stored in sysobjects (name, type, etc.), syscomments (text of object), syscolumns (parameter list) and sysdepends (object dependencies).
  - Execution (first time or recompile): Resolution*, then Optimization, then Compilation. The compiled plan is placed in the unified cache.

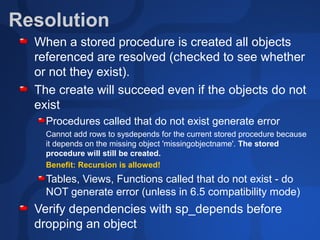

- 5. Resolution
  - When a stored procedure is created, all referenced objects are resolved (checked to see whether or not they exist).
  - The create will succeed even if the objects do not exist.
    - Called procedures that do not exist generate the message: "Cannot add rows to sysdepends for the current stored procedure because it depends on the missing object 'missingobjectname'. The stored procedure will still be created." Benefit: recursion is allowed!
    - Tables, views and functions that do not exist do NOT generate an error (unless in 6.5 compatibility mode).
  - Verify dependencies with sp_depends before dropping an object.

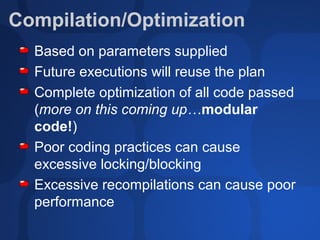
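
The resolution behaviour on slide 5 can be tried with a small script. This is a minimal sketch, not part of the original deck: `dbo.ResolutionDemo`, `dbo.NoSuchTable` and `dbo.NoSuchProc` are hypothetical names, and the script assumes the last two really do not exist in the current database.

```sql
-- Hypothetical names; dbo.NoSuchTable and dbo.NoSuchProc are assumed NOT to exist.
CREATE PROCEDURE dbo.ResolutionDemo
AS
    -- Missing table: no error at CREATE time; the check is deferred to execution.
    SELECT * FROM dbo.NoSuchTable

    -- Missing procedure: the CREATE still succeeds, but SQL Server reports
    -- that it cannot add rows to sysdepends for the missing object.
    EXEC dbo.NoSuchProc
GO

-- Before dropping an object, list what it references and what references it.
-- Nothing was recorded for the missing objects, so expect no dependency rows here.
EXEC sp_depends 'dbo.ResolutionDemo'
GO
```

Executing the procedure would fail at the first statement, because the deferred check for the table happens at run time.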

- 6. Compilation/Optimization
  - Based on parameters supplied
  - Future executions will reuse the plan
  - Complete optimization of all code passed (more on this coming up... modular code!)
  - Poor coding practices can cause excessive locking/blocking
  - Excessive recompilations can cause poor performance

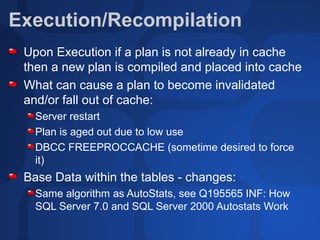

- 7. Execution/Recompilation
  - Upon execution, if a plan is not already in cache then a new plan is compiled and placed into cache.
  - What can cause a plan to become invalidated and/or fall out of cache:
    - Server restart
    - Plan is aged out due to low use
    - DBCC FREEPROCCACHE (sometimes desired, to force it)
    - Changes to the base data within the tables: same algorithm as AutoStats; see Q195565, "INF: How SQL Server 7.0 and SQL Server 2000 Autostats Work"

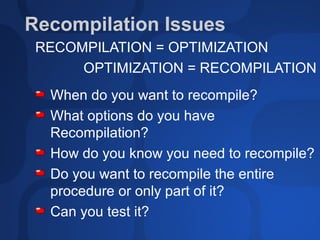
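
To see whether a plan is being reused or has dropped out of cache, the SQL Server 2000 cache can be queried directly. The sketch below is an illustration rather than something from the deck; it assumes the `master.dbo.syscacheobjects` system table of SQL Server 2000 and should only be run against a test server, since `DBCC FREEPROCCACHE` empties the cache for everyone.

```sql
-- Which plans are cached for the current database, and how often were they reused?
SELECT cacheobjtype, objtype, usecounts, sql
FROM master.dbo.syscacheobjects
WHERE dbid = DB_ID()
ORDER BY usecounts DESC
GO

-- Test servers only: empty the procedure cache so that the next execution
-- of every procedure compiles a fresh plan.
DBCC FREEPROCCACHE
GO
```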

- 8. Recompilation Issues
  - RECOMPILATION = OPTIMIZATION, OPTIMIZATION = RECOMPILATION
  - When do you want to recompile?
  - What options do you have for recompilation?
  - How do you know you need to recompile?
  - Do you want to recompile the entire procedure or only part of it?
  - Can you test it?

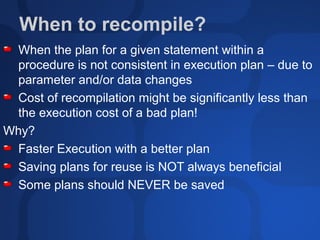

- 9. When to recompile?
  - When the execution plan for a given statement within a procedure is not consistent, due to parameter and/or data changes
  - Cost of recompilation might be significantly less than the execution cost of a bad plan!
  - Why?
    - Faster execution with a better plan
    - Saving plans for reuse is NOT always beneficial
    - Some plans should NEVER be saved

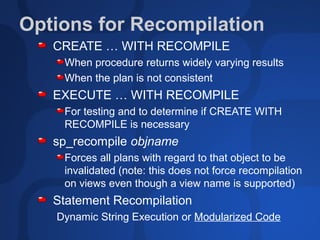

- 10. Options for Recompilation
  - CREATE ... WITH RECOMPILE
    - When procedure returns widely varying results
    - When the plan is not consistent
  - EXECUTE ... WITH RECOMPILE
    - For testing and to determine if CREATE WITH RECOMPILE is necessary
  - sp_recompile objname
    - Forces all plans with regard to that object to be invalidated (note: this does not force recompilation on views even though a view name is supported)
  - Statement Recompilation
    - Dynamic String Execution or Modularized Code

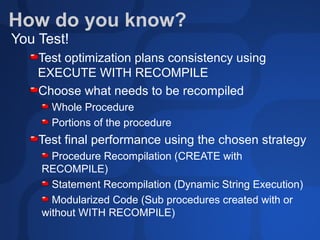

- 11. How do you know? You Test!
  - Test the consistency of optimization plans using EXECUTE WITH RECOMPILE
  - Choose what needs to be recompiled
    - Whole procedure
    - Portions of the procedure
  - Test final performance using the chosen strategy
    - Procedure Recompilation (CREATE with RECOMPILE)
    - Statement Recompilation (Dynamic String Execution)
    - Modularized Code (sub procedures created with or without WITH RECOMPILE)

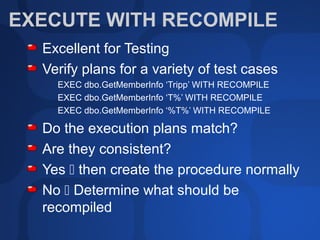

- 12. EXECUTE WITH RECOMPILE
  - Excellent for testing
  - Verify plans for a variety of test cases
    - `EXEC dbo.GetMemberInfo 'Tripp' WITH RECOMPILE`
    - `EXEC dbo.GetMemberInfo 'T%' WITH RECOMPILE`
    - `EXEC dbo.GetMemberInfo '%T%' WITH RECOMPILE`
  - Do the execution plans match? Are they consistent?
    - Yes: then create the procedure normally
    - No: determine what should be recompiled

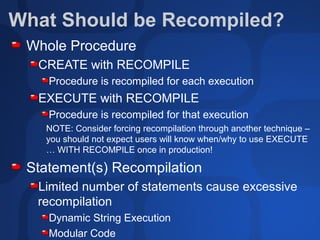
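
One way to compare the plans for the three test cases is to run them with statistics output switched on. This is a sketch under assumptions: it presumes the `dbo.GetMemberInfo` procedure and its `Member` table from the slides already exist, and the choice of `SET STATISTICS IO` and `SET STATISTICS PROFILE` as the comparison tools is mine, not the deck's.

```sql
SET STATISTICS IO ON        -- report logical reads for each statement
SET STATISTICS PROFILE ON   -- return the executed plan alongside each result set
GO

-- Each call is optimized for its own parameter value because of WITH RECOMPILE.
EXEC dbo.GetMemberInfo 'Tripp' WITH RECOMPILE
EXEC dbo.GetMemberInfo 'T%'    WITH RECOMPILE
EXEC dbo.GetMemberInfo '%T%'   WITH RECOMPILE
GO

SET STATISTICS PROFILE OFF
SET STATISTICS IO OFF
GO
```

If the three plans and their read counts look alike, the procedure can be created normally; if they differ widely, decide what needs recompiling.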

- 13. What Should be Recompiled?
  - Whole Procedure
    - CREATE with RECOMPILE: procedure is recompiled for each execution
    - EXECUTE with RECOMPILE: procedure is recompiled for that execution
    - NOTE: Consider forcing recompilation through another technique. You should not expect that users will know when/why to use EXECUTE ... WITH RECOMPILE once in production!
  - Statement(s) Recompilation
    - Limited number of statements cause excessive recompilation
    - Dynamic String Execution
    - Modular Code

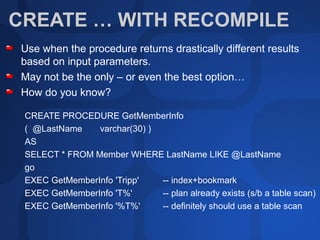

- 14. CREATE ... WITH RECOMPILE
  - Use when the procedure returns drastically different results based on input parameters.
  - May not be the only, or even the best, option... How do you know?
  - `CREATE PROCEDURE GetMemberInfo ( @LastName varchar(30) ) AS SELECT * FROM Member WHERE LastName LIKE @LastName`, followed by `go`
  - `EXEC GetMemberInfo 'Tripp'` -- index + bookmark lookup
  - `EXEC GetMemberInfo 'T%'` -- plan already exists (should be a table scan)
  - `EXEC GetMemberInfo '%T%'` -- definitely should use a table scan

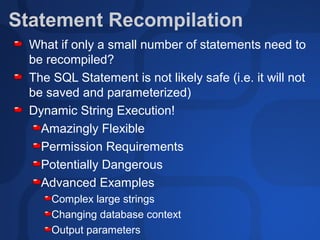
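
If testing shows that the whole procedure needs a fresh plan every time, the procedure-level option looks roughly like this. A sketch only: it assumes `dbo.GetMemberInfo` and `dbo.Member` exist as in the slides, and uses `ALTER` so that existing permissions are retained.

```sql
ALTER PROCEDURE dbo.GetMemberInfo
(
    @LastName varchar(30)
)
WITH RECOMPILE   -- no plan is saved; every execution is optimized for its own parameter
AS
    SELECT * FROM dbo.Member WHERE LastName LIKE @LastName
GO

-- Both calls now get their own plan, at the cost of a compile on every call.
EXEC dbo.GetMemberInfo 'Tripp'
EXEC dbo.GetMemberInfo '%T%'
GO
```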

- 15. Statement Recompilation
  - What if only a small number of statements need to be recompiled?
  - The SQL statement is not likely safe (i.e. it will not be saved and parameterized)
  - Dynamic String Execution!
    - Amazingly flexible
    - Permission requirements
    - Potentially dangerous
    - Advanced examples: complex large strings, changing database context, output parameters

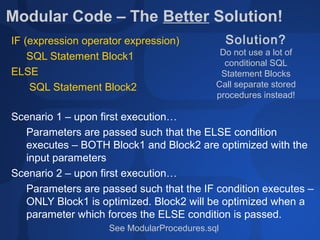
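
A minimal sketch of statement-level recompilation through dynamic string execution follows. The procedure name `dbo.GetMemberInfo_DSE` is hypothetical and the `dbo.Member` table is assumed from the earlier slides. Because the string runs with the caller's permissions, the caller needs SELECT permission on the table, and any user input must be escaped before it is concatenated.

```sql
CREATE PROCEDURE dbo.GetMemberInfo_DSE   -- hypothetical name
(
    @LastName varchar(30)
)
AS
    DECLARE @sql varchar(200)

    -- Double any embedded single quotes so the input cannot end the string literal.
    SET @sql = 'SELECT * FROM dbo.Member WHERE LastName LIKE '''
             + REPLACE(@LastName, '''', '''''')
             + ''''

    -- The string is compiled when it runs, with the literal value visible to the optimizer.
    EXEC (@sql)
GO

EXEC dbo.GetMemberInfo_DSE 'T%'
GO
```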

- 16. Modular Code: The Better Solution!
  - Pattern: `IF (expression operator expression)` SQL Statement Block1 `ELSE` SQL Statement Block2
  - Solution? Do not use a lot of conditional SQL statement blocks. Call separate stored procedures instead!
  - Scenario 1, upon first execution: parameters are passed such that the ELSE condition executes. BOTH Block1 and Block2 are optimized with the input parameters.
  - Scenario 2, upon first execution: parameters are passed such that the IF condition executes. ONLY Block1 is optimized. Block2 will be optimized when a parameter which forces the ELSE condition is passed.
  - See ModularProcedures.sql

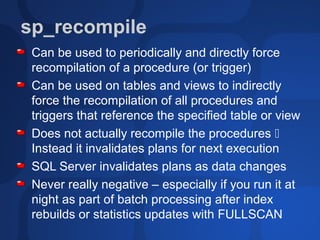
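
The deck's ModularProcedures.sql is not reproduced here, so the following is only a sketch of the idea with hypothetical procedure names. Each branch of the IF becomes its own stored procedure with its own plan; the pattern-search branch is created WITH RECOMPILE on the assumption that its plan varies with the pattern. The `dbo.Member` table is assumed from the earlier slides.

```sql
-- Exact match: the plan is stable, so it is saved and reused.
CREATE PROCEDURE dbo.GetMembers_Exact (@LastName varchar(30))
AS
    SELECT * FROM dbo.Member WHERE LastName = @LastName
GO

-- Pattern match: the best plan depends on the pattern, so never save it.
CREATE PROCEDURE dbo.GetMembers_Pattern (@LastName varchar(30))
WITH RECOMPILE
AS
    SELECT * FROM dbo.Member WHERE LastName LIKE @LastName
GO

-- The outer procedure only decides which sub-procedure to call.
CREATE PROCEDURE dbo.GetMemberInfo_Modular (@LastName varchar(30))
AS
    IF @LastName LIKE '%[%_]%'   -- true when the value contains a % or _ wildcard
        EXEC dbo.GetMembers_Pattern @LastName
    ELSE
        EXEC dbo.GetMembers_Exact @LastName
GO
```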

- 17. sp_recompile
  - Can be used to periodically and directly force recompilation of a procedure (or trigger)
  - Can be used on tables and views to indirectly force the recompilation of all procedures and triggers that reference the specified table or view
  - Does not actually recompile the procedures: instead it invalidates plans for next execution
  - SQL Server invalidates plans as data changes
  - Never really negative, especially if you run it at night as part of batch processing after index rebuilds or statistics updates with FULLSCAN

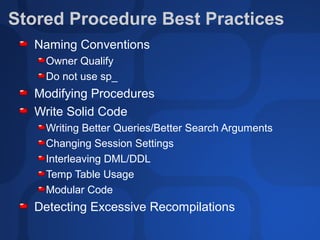
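
A nightly maintenance step along the lines of slide 17 might look like the sketch below. The table name `dbo.Member` is assumed from the earlier slides, and scheduling (for example through a SQL Server Agent job) is left out.

```sql
-- Rebuild all indexes on the table (SQL Server 2000 syntax).
DBCC DBREINDEX ('dbo.Member')
GO

-- Refresh statistics by reading every row instead of a sample.
UPDATE STATISTICS dbo.Member WITH FULLSCAN
GO

-- Mark every procedure and trigger that references the table for recompilation.
-- Nothing is compiled now; each plan is rebuilt on its next execution.
EXEC sp_recompile 'dbo.Member'
GO
```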

- 18. Stored Procedure Best Practices
  - Naming Conventions: owner qualify; do not use sp_
  - Modifying Procedures
  - Write Solid Code: writing better queries/better search arguments, changing session settings, interleaving DML/DDL, temp table usage, modular code
  - Detecting Excessive Recompilations

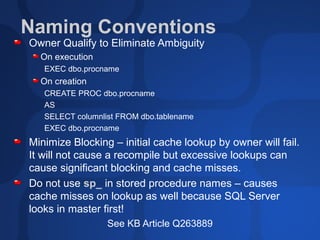

- 19. Naming Conventions
  - Owner qualify to eliminate ambiguity
    - On execution: `EXEC dbo.procname`
    - On creation: `CREATE PROC dbo.procname AS SELECT columnlist FROM dbo.tablename`, then `EXEC dbo.procname`
  - Minimize blocking: without owner qualification the initial cache lookup by owner will fail. It will not cause a recompile, but excessive lookups can cause significant blocking and cache misses.
  - Do not use sp_ in stored procedure names: it causes cache misses on lookup as well, because SQL Server looks in master first! See KB Article Q263889.

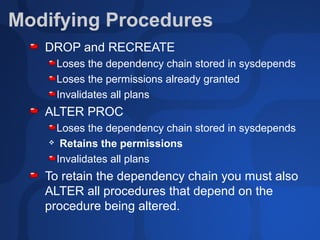

- 20. Modifying Procedures
  - DROP and RECREATE
    - Loses the dependency chain stored in sysdepends
    - Loses the permissions already granted
    - Invalidates all plans
  - ALTER PROC
    - Loses the dependency chain stored in sysdepends
    - Retains the permissions
    - Invalidates all plans
  - To retain the dependency chain you must also ALTER all procedures that depend on the procedure being altered.

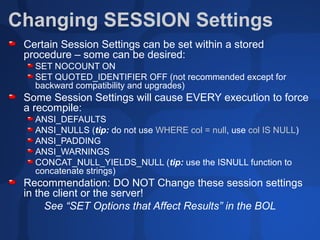

- 21. Changing SESSION Settings
  - Certain session settings can be set within a stored procedure, and some can be desired:
    - SET NOCOUNT ON
    - SET QUOTED_IDENTIFIER OFF (not recommended except for backward compatibility and upgrades)
  - Some session settings will cause EVERY execution to force a recompile:
    - ANSI_DEFAULTS
    - ANSI_NULLS (tip: do not use WHERE col = null, use col IS NULL)
    - ANSI_PADDING
    - ANSI_WARNINGS
    - CONCAT_NULL_YIELDS_NULL (tip: use the ISNULL function to concatenate strings)
  - Recommendation: DO NOT change these session settings in the client or the server!
  - See "SET Options that Affect Results" in the BOL

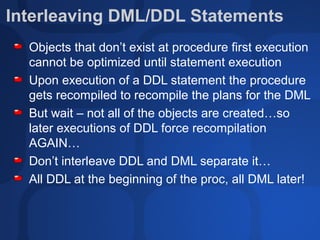
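
The two tips on slide 21 can be followed without touching any SET option. The snippet below is an illustrative sketch with made-up variable values, not code from the deck.

```sql
DECLARE @First varchar(20), @Middle varchar(20), @Last varchar(20)
SELECT @First = 'Kimberly', @Middle = NULL, @Last = 'Tripp'

-- Instead of turning CONCAT_NULL_YIELDS_NULL off, replace a NULL piece
-- with an empty string before concatenating.
SELECT @First + ' ' + ISNULL(@Middle + ' ', '') + @Last AS FullName

-- Instead of turning ANSI_NULLS off and writing "= NULL", test with IS NULL.
IF @Middle IS NULL
    PRINT 'No middle name supplied'
GO
```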

- 22. Interleaving DML/DDL Statements
  - Objects that don't exist at the procedure's first execution cannot be optimized until statement execution
  - Upon execution of a DDL statement the procedure gets recompiled to recompile the plans for the DML
  - But wait: not all of the objects are created... so later executions of DDL force recompilation AGAIN...
  - Don't interleave DDL and DML; separate them. All DDL at the beginning of the proc, all DML later!

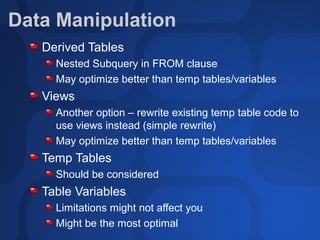
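
A procedure laid out as slide 22 recommends keeps every CREATE at the top and all data manipulation below it. This is a sketch with hypothetical object names (`dbo.MemberReport`, `#Names`, `#Counts`); only the `dbo.Member` table and its `LastName` column are taken from the slides.

```sql
CREATE PROCEDURE dbo.MemberReport   -- hypothetical name
AS
    SET NOCOUNT ON

    -- All DDL first, so every temp object exists before any DML is reached.
    CREATE TABLE #Names  (LastName varchar(30) NULL)
    CREATE TABLE #Counts (LastName varchar(30) NULL, Members int NOT NULL)

    -- All DML afterwards.
    INSERT #Names (LastName)
    SELECT LastName FROM dbo.Member

    INSERT #Counts (LastName, Members)
    SELECT LastName, COUNT(*) FROM #Names GROUP BY LastName

    SELECT LastName, Members FROM #Counts ORDER BY Members DESC
GO
```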

- 23. Data Manipulation
  - Derived Tables
    - Nested subquery in FROM clause
    - May optimize better than temp tables/variables
  - Views
    - Another option: rewrite existing temp table code to use views instead (simple rewrite)
    - May optimize better than temp tables/variables
  - Temp Tables
    - Should be considered
  - Table Variables
    - Limitations might not affect you
    - Might be the most optimal

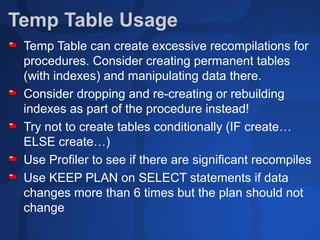

- 24. Temp Table Usage
  - Temp tables can create excessive recompilations for procedures.
  - Consider creating permanent tables (with indexes) and manipulating data there.
  - Consider dropping and re-creating or rebuilding indexes as part of the procedure instead!
  - Try not to create tables conditionally (IF create... ELSE create...)
  - Use Profiler to see if there are significant recompiles
  - Use KEEP PLAN on SELECT statements if data changes more than 6 times but the plan should not change

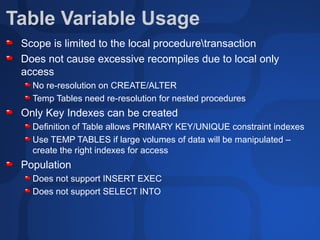
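
The KEEP PLAN hint from slide 24 is written as a query option on the SELECT that reads the temp table. A minimal sketch, with a hypothetical procedure name and the `dbo.Member` table assumed from earlier slides:

```sql
CREATE PROCEDURE dbo.MemberNameCounts   -- hypothetical name
AS
    SET NOCOUNT ON

    CREATE TABLE #Work (LastName varchar(30) NULL)

    INSERT #Work (LastName)
    SELECT LastName FROM dbo.Member

    -- KEEP PLAN relaxes the low recompile threshold that applies to temp tables,
    -- so loading more than a handful of rows does not trigger a recompile here.
    SELECT LastName, COUNT(*) AS Members
    FROM #Work
    GROUP BY LastName
    OPTION (KEEP PLAN)
GO
```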

- 25. Table Variable Usage
  - Scope is limited to the local procedure/transaction
  - Does not cause excessive recompiles due to local-only access
    - No re-resolution on CREATE/ALTER
    - Temp tables need re-resolution for nested procedures
  - Only key indexes can be created
    - Definition of the table allows PRIMARY KEY/UNIQUE constraint indexes
    - Use TEMP TABLES if large volumes of data will be manipulated, and create the right indexes for access
  - Population
    - Does not support INSERT EXEC
    - Does not support SELECT INTO

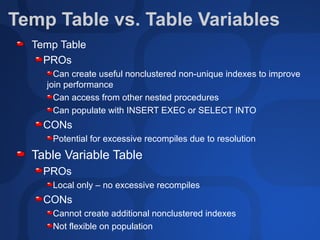

- 26. Temp Table vs. Table Variables
  - Temp Table
    - PROs: can create useful nonclustered non-unique indexes to improve join performance; can access from other nested procedures; can populate with INSERT EXEC or SELECT INTO
    - CONs: potential for excessive recompiles due to resolution
  - Table Variable
    - PROs: local only, no excessive recompiles
    - CONs: cannot create additional nonclustered indexes; not flexible on population

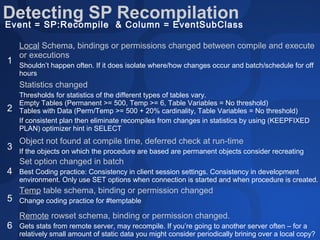
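
The trade-offs on slides 25 and 26 show up directly in the syntax each option allows. The batch below is a sketch with hypothetical object names (`@Names`, `#Names`, `IX_Names_LastName`); the `dbo.Member` table is assumed from the earlier slides.

```sql
-- Table variable: indexes only through PRIMARY KEY / UNIQUE constraints,
-- and it must be populated with INSERT ... SELECT.
DECLARE @Names TABLE
(
    RowID    int IDENTITY PRIMARY KEY,
    LastName varchar(30) NULL
)

INSERT @Names (LastName)
SELECT LastName FROM dbo.Member

-- Temp table: can be created and populated in one step with SELECT INTO,
-- and can have additional nonclustered, non-unique indexes.
SELECT LastName
INTO #Names
FROM dbo.Member

CREATE NONCLUSTERED INDEX IX_Names_LastName ON #Names (LastName)

DROP TABLE #Names
GO
```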

- 27. Detecting SP Recompilation
  - Event = SP:Recompile & Column = EventSubClass
  - EventSubClass 1: Local schema, bindings or permissions changed between compile and execute or executions. Shouldn't happen often. If it does, isolate where/how changes occur and batch/schedule for off hours.
  - EventSubClass 2: Statistics changed. Thresholds for statistics of the different types of tables vary.
    - Empty tables: Permanent >= 500, Temp >= 6, Table Variables = no threshold
    - Tables with data: Perm/Temp >= 500 + 20% cardinality, Table Variables = no threshold
    - If the plan is consistent, eliminate recompiles from changes in statistics by using the (KEEPFIXED PLAN) optimizer hint in SELECT
  - EventSubClass 3: Object not found at compile time, deferred check at run-time. If the objects on which the procedure is based are permanent objects, consider recreating.
  - EventSubClass 4: Set option changed in batch. Best coding practice: consistency in client session settings; consistency in development environment; only use SET options when the connection is started and when the procedure is created.
  - EventSubClass 5: Temp table schema, binding or permission changed. Change coding practice for #temptable.
  - EventSubClass 6: Remote rowset schema, binding or permission changed. Gets stats from remote server, may recompile. If you're going to another server often for a relatively small amount of static data, you might consider periodically bringing over a local copy.

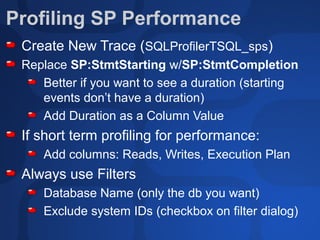

- 28. Profiling SP Performance
  - Create New Trace (SQLProfilerTSQL_sps)
  - Replace SP:StmtStarting with SP:StmtCompleted
    - Better if you want to see a duration (starting events don't have a duration)
    - Add Duration as a column value
  - If short-term profiling for performance:
    - Add columns: Reads, Writes, Execution Plan
  - Always use filters
    - Database Name (only the db you want)
    - Exclude system IDs (checkbox on filter dialog)

- 29. Review
  - Initial Processing (review): Resolution, Compilation/Optimization, Execution/Recompilation
  - Recompilation Issues: When do you want to recompile? Options for recompilation? What to recompile?
  - Stored Procedure Best Practices: Naming Conventions, Writing Solid Code, Excessive Recompilations (how? detecting?)

- 30. Other Sessions...
  - DAT 335: SQL Server Tips and Tricks for DBAs and Developers. Tuesday, 1 July 2003, 15:15-16:30
  - DBA 324: Designing for Performance: Structures, Partitioning, Views and Constraints. Wednesday, 2 July 2003, 08:30-09:45
  - DBA 328: Designing for Performance: Optimization with Indexes. Wednesday, 2 July 2003, 16:45-18:00
  - DBA 322: Optimizing Stored Procedure Performance in SQL Server 2000. Thursday, 3 July 2003, 08:30-09:45

- 31. Articles...
  - Articles in TSQLSolutions at www.tsqlsolutions.com (FREE, just register)
    - All About Raiserror, InstantDoc ID#22980
    - Saving Production Data from Production DBAs, InstantDoc ID#22073
  - Articles in SQL Server Magazine, Sept 2002:
    - Before Disaster Strikes, InstantDoc ID#25915
    - Log Backups Paused for Good Reason, InstantDoc ID#26032
    - Restoring After Isolated Disk Failure, InstantDoc #26067
    - Filegroup Usage for VLDBs, InstantDoc ID#26031
  - Search www.sqlmag.com and www.tsqlsolutions.com for additional articles

- 32. Resources...
  - Whitepaper: Query Recompilation in SQL Server 2000, http://msdn.microsoft.com/library/default.asp?url=/nhp/Default.asp?contentid=28000409

- 33. Community Resources
  - Community Resources: http://www.microsoft.com/communities/default.mspx
  - Most Valuable Professional (MVP): http://www.mvp.support.microsoft.com/
  - Newsgroups: converse online with Microsoft Newsgroups, including Worldwide. http://www.microsoft.com/communities/newsgroups/default.mspx
  - User Groups: meet and learn with your peers. http://www.microsoft.com/communities/usergroups/default.mspx

- 34. Ask The Experts
  - Get your questions answered
  - I will be available in the ATE area after most of my sessions!

- 35. Thank You!
  - Kimberly L. Tripp
  - Principal Mentor, Solid Quality Learning. Website: www.SolidQualityLearning.com, Email: Kimberly@SolidQualityLearning.com
  - President, SYSolutions, Inc. Website: www.SQLSkills.com, Email: Kimberly@SQLSkills.com

- 36. Suggested Reading And Resources
  - The tools you need to put technology to work!
  - Microsoft® SQL Server™ 2000 High Availability: 0-7356-1920-4, available 7/9/03
  - Microsoft® SQL Server™ 2000 Administrator's Companion: 0-7356-1051-7, available today
  - Microsoft Press books are 20% off at the TechEd Bookstore
  - Also buy any TWO Microsoft Press books and get a FREE T-Shirt

- 37. evaluations

- 38. © 2003 Microsoft Corporation. All rights reserved. This presentation is for informational purposes only. MICROSOFT MAKES NO WARRANTIES, EXPRESS OR IMPLIED, IN THIS SUMMARY.
