Stable_Diffusion_Diagrams_Version_2.pptx

- 1. Coding Stable Diffusion from scratch in PyTorch. Umar Jamil. License: Creative Commons Attribution-NonCommercial 4.0 International (CC BY-NC 4.0), https://creativecommons.org/licenses/by-nc/4.0/legalcode (not for commercial use). Umar Jamil - https://github.com/hkproj/pytorch-stable-diffusion

- 2. Topics and Prerequisites
  - Topics discussed:
    - Latent Diffusion Models (Stable Diffusion) from scratch in PyTorch. No other libraries are used except for the tokenizer.
    - Maths of diffusion models as defined in the DDPM paper (simplified!)
    - Classifier-Free Guidance
    - Text-to-Image
    - Image-to-Image
    - Inpainting
  - Future videos:
    - Score-based models
    - ODE and SDE theoretical framework for diffusion models
    - Euler, Runge-Kutta and derived samplers
  - Prerequisite concepts:
    - Basics of probability and statistics (multivariate Gaussian, conditional probability, marginal probability, likelihood, Bayes' rule). I will give a non-maths intuition for most of them.
    - Basics of PyTorch and neural networks
    - How the attention mechanism works (watch my video on the Transformer model)
    - How convolution layers work

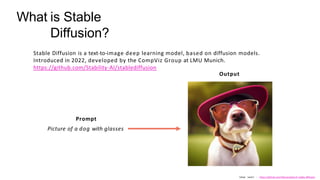

- 3. What is Stable Diffusion? Stable Diffusion is a text-to-image deep learning model based on diffusion models. It was introduced in 2022 and developed by the CompVis Group at LMU Munich (https://github.com/Stability-AI/stablediffusion). Example: the prompt "Picture of a dog with glasses" and the corresponding output image.

- 4. What is a generative model? A generative model learns a probability distribution of the data set so that we can then sample from the distribution to create new instances of data. For example, if we have many pictures of cats and we train a generative model on them, we can then sample from this distribution to create new images of cats.

- 5. Why do we model data as distributions?
  - Imagine you're a criminal, and you want to generate thousands of fake identities. Each fake identity is made up of variables representing the characteristics of a person (Age, Height).
  - You can ask the Statistics Department of the Government to give you statistics about the age and the height of the population, and then sample from these distributions: Age ~ N(40, 30²), Height ~ N(120, 100²).
  - At first, you may sample from each distribution independently to create a fake identity, but that would produce unreasonable pairs of (Age, Height).
  - To generate fake identities that make sense, you need the joint distribution; otherwise you may end up with an unreasonable pair of (Age, Height), such as a very young person who is very tall.
  - We can also evaluate probabilities on one of the two variables using conditional probability and/or by marginalizing out the other variable.
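A minimal sketch of the difference between sampling the marginals independently and sampling from a joint distribution. It assumes (Age, Height) follows a bivariate Gaussian with a hypothetical correlation `rho`, since the slide gives no correlation. Joint sampling mixes two standard normal draws so the pair co-varies. The conditional mean of Height given Age uses the standard bivariate-Gaussian formula.

```python
import math
import random

# Marginals from the slide: Age ~ N(40, 30^2), Height ~ N(120, 100^2).
AGE_MEAN, AGE_STD = 40.0, 30.0
HEIGHT_MEAN, HEIGHT_STD = 120.0, 100.0


def sample_independent(rng):
    """Sample Age and Height separately, ignoring any relationship between them."""
    return rng.gauss(AGE_MEAN, AGE_STD), rng.gauss(HEIGHT_MEAN, HEIGHT_STD)


def sample_joint(rng, rho):
    """Sample (Age, Height) from a bivariate Gaussian with correlation rho
    (rho is an assumed value). Two independent N(0, 1) draws are mixed with the
    2x2 Cholesky factor of the correlation matrix so Corr(Age, Height) = rho."""
    z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    age = AGE_MEAN + AGE_STD * z1
    height = HEIGHT_MEAN + HEIGHT_STD * (rho * z1 + math.sqrt(1.0 - rho ** 2) * z2)
    return age, height


def conditional_height_mean(age, rho):
    """E[Height | Age = age] for the bivariate Gaussian above."""
    return HEIGHT_MEAN + rho * (HEIGHT_STD / AGE_STD) * (age - AGE_MEAN)


def correlation(pairs):
    """Pearson correlation of a list of (x, y) pairs."""
    n = len(pairs)
    mx = sum(x for x, _ in pairs) / n
    my = sum(y for _, y in pairs) / n
    cov = sum((x - mx) * (y - my) for x, y in pairs) / n
    vx = sum((x - mx) ** 2 for x, _ in pairs) / n
    vy = sum((y - my) ** 2 for _, y in pairs) / n
    return cov / math.sqrt(vx * vy)


rng = random.Random(0)
independent = [sample_independent(rng) for _ in range(20_000)]
joint = [sample_joint(rng, rho=0.8) for _ in range(20_000)]
print(f"independent corr: {correlation(independent):.2f}")
print(f"joint corr:       {correlation(joint):.2f}")
print(f"E[Height | Age=70]: {conditional_height_mean(70, rho=0.8):.1f}")
```

Marginalizing Age out of this joint Gaussian gives back Height ~ N(120, 100²). Both samplers therefore share the same marginals and differ only in how the two variables vary together.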

- 6. Learning the distribution p(x) of our data. We have a data set made up of images, and we want to learn a very complex distribution that we can then sample from.

- 7. Diagram: the forward process (fixed) gradually turns the original image X0 into pure noise through the latents Z1, Z2, Z3, …, ZT; the reverse process (a neural network) goes from pure noise ZT back to the original image X0.
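The fixed forward process can be sketched as follows. The sketch assumes the DDPM paper's linear variance schedule (β from 1e-4 to 0.02 over T = 1000 steps) and its closed-form property, which lets us jump from X0 directly to the latent at any step t without simulating every intermediate step. The "image" is a flat list of floats, and the function names are illustrative.

```python
import math
import random


def linear_beta_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Linearly increasing noise variances beta_1..beta_T (DDPM defaults)."""
    return [beta_start + (beta_end - beta_start) * i / (T - 1) for i in range(T)]


def cumulative_alphas(betas):
    """alpha_bar_t = prod_{s=1..t} (1 - beta_s): how much signal survives at step t."""
    alpha_bars, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def q_sample(x0, t, alpha_bars, rng):
    """Sample the step-t latent in one shot (t is 1-indexed):
    x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps,  eps ~ N(0, I).
    Returns the noisy sample and the noise that was added."""
    ab = alpha_bars[t - 1]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(ab) * x + math.sqrt(1.0 - ab) * e for x, e in zip(x0, eps)]
    return xt, eps


betas = linear_beta_schedule()
alpha_bars = cumulative_alphas(betas)
rng = random.Random(0)
x0 = [0.5, -0.2, 0.9, 0.0]                        # a toy 4-pixel "image"
x_early, _ = q_sample(x0, 1, alpha_bars, rng)     # almost the original image
x_late, _ = q_sample(x0, 1000, alpha_bars, rng)   # almost pure noise
print(alpha_bars[0], alpha_bars[-1])
```

Because alpha_bar at step T is close to zero, the last latent carries almost no information about X0. That is what "pure noise" means on the diagram.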

- 8. The math of diffusion models… simplified!

- 9. Ho, J., Jain, A. and Abbeel, P., 2020. Denoising diffusion probabilistic models. Advances in Neural Information Processing Systems, 33, pp.6840-6851. The forward process is denoted q and the reverse process p. The model is trained through the Evidence Lower Bound (ELBO): just like with a VAE, we want to learn the parameters of the latent space.
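For reference, these are the DDPM definitions behind the diagram, written in the paper's notation. The paper writes x_t where the diagram uses Z_t, with x_0 the original image. The block shows the fixed forward process q and its closed form, the learned reverse process p_θ, the variational bound (the negative ELBO) that is minimized, and the simplified noise-prediction objective that the paper trains with in practice.

```latex
% Forward process q (fixed): add Gaussian noise with variance \beta_t at each step
q(x_t \mid x_{t-1}) = \mathcal{N}\big(x_t;\ \sqrt{1-\beta_t}\,x_{t-1},\ \beta_t\mathbf{I}\big),
\qquad
q(x_t \mid x_0) = \mathcal{N}\big(x_t;\ \sqrt{\bar\alpha_t}\,x_0,\ (1-\bar\alpha_t)\mathbf{I}\big),
\quad \alpha_t = 1-\beta_t,\ \bar\alpha_t = \prod_{s=1}^{t}\alpha_s

% Reverse process p (learned by the neural network)
p_\theta(x_{t-1} \mid x_t) = \mathcal{N}\big(x_{t-1};\ \mu_\theta(x_t, t),\ \Sigma_\theta(x_t, t)\big)

% Variational bound (negative ELBO) on the negative log-likelihood
\mathbb{E}\big[-\log p_\theta(x_0)\big]
\le \mathbb{E}_q\Big[-\log \frac{p_\theta(x_{0:T})}{q(x_{1:T} \mid x_0)}\Big]
= \mathbb{E}_q\Big[-\log p(x_T) - \sum_{t \ge 1} \log \frac{p_\theta(x_{t-1} \mid x_t)}{q(x_t \mid x_{t-1})}\Big]

% Simplified training objective: predict the noise \epsilon that was added
L_{\text{simple}}(\theta) = \mathbb{E}_{t, x_0, \epsilon}\Big[\big\lVert \epsilon - \epsilon_\theta\big(\sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon,\ t\big)\big\rVert^2\Big]
```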

- 10. Training and sampling:
  - Training: we take a sample from our dataset, generate a random number t between 1 and T, and sample some noise. We add the noise to our image and train the model to predict the amount of noise present in it.
  - Sampling: we sample some noise and keep denoising the image progressively for T steps.

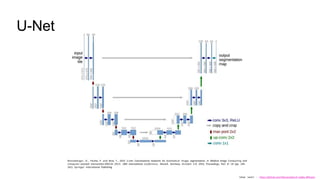
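A minimal sketch of the two procedures above, following Algorithms 1 and 2 of the DDPM paper. `eps_model(x_t, t)` is a hypothetical stand-in for the U-Net that predicts the noise. The schedule is the paper's linear one, and σ_t² = β_t is one of the two variance choices the paper discusses. Images are flat lists of floats.

```python
import math
import random

# Linear schedule from the DDPM paper: beta_1 = 1e-4 ... beta_T = 0.02.
T = 1000
BETAS = [1e-4 + (0.02 - 1e-4) * i / (T - 1) for i in range(T)]
ALPHAS = [1.0 - b for b in BETAS]
ALPHA_BARS = []
_running = 1.0
for _a in ALPHAS:
    _running *= _a
    ALPHA_BARS.append(_running)


def training_loss(eps_model, x0, rng):
    """Loss for a single image x0 (DDPM Algorithm 1). In real training this value
    is backpropagated into eps_model; here we only compute it."""
    t = rng.randint(1, T)                                   # random t in 1..T
    eps = [rng.gauss(0.0, 1.0) for _ in x0]                 # sample some noise
    ab = ALPHA_BARS[t - 1]
    xt = [math.sqrt(ab) * x + math.sqrt(1.0 - ab) * e for x, e in zip(x0, eps)]
    pred = eps_model(xt, t)                                 # predict the added noise
    return sum((e - p) ** 2 for e, p in zip(eps, pred)) / len(x0)  # mean squared error


def sample(eps_model, n_pixels, rng):
    """Generate a new image by denoising pure noise for T steps (DDPM Algorithm 2)."""
    x = [rng.gauss(0.0, 1.0) for _ in range(n_pixels)]      # start from pure noise
    for t in range(T, 0, -1):
        a, ab, b = ALPHAS[t - 1], ALPHA_BARS[t - 1], BETAS[t - 1]
        pred = eps_model(x, t)
        coef = b / math.sqrt(1.0 - ab)
        mean = [(xi - coef * pi) / math.sqrt(a) for xi, pi in zip(x, pred)]
        if t > 1:
            sigma = math.sqrt(b)                            # sigma_t^2 = beta_t
            x = [m + sigma * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean                                        # no noise at the last step
    return x
```

With a trained model, `sample(model, n_pixels, random.Random(0))` would return a new image. With an untrained model it returns noise of the right shape.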

- 11. U-Net. Ronneberger, O., Fischer, P. and Brox, T., 2015. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, October 5-9, 2015, Proceedings, Part III (pp. 234-241). Springer International Publishing.

- 12. How to generate new data? Diagram: the same chain as before (original image X0, latents Z1, Z2, Z3, …, ZT, pure noise), with the fixed forward process and the neural-network reverse process. To generate new data, we start from pure noise and run only the reverse process.

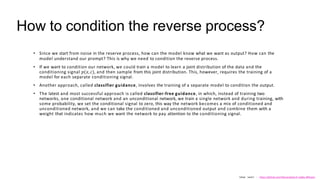

- 13. How to condition the reverse process?
  - Since we start from noise in the reverse process, how can the model know what we want as output? How can the model understand our prompt? This is why we need to condition the reverse process.
  - To condition our network, we could train a model to learn a joint distribution of the data and the conditioning signal, p(x, c), and then sample from this joint distribution. This, however, requires training a model for each separate conditioning signal.
  - Another approach, called classifier guidance, involves training a separate model (a classifier) to condition the output.
  - The latest and most successful approach is called classifier-free guidance. Instead of training two networks, one conditional and one unconditional, we train a single network, and during training we set the conditioning signal to zero with some probability. This way the network becomes a mix of a conditioned and an unconditioned network. We can then take the conditioned and unconditioned outputs and combine them with a weight that indicates how much we want the network to pay attention to the conditioning signal.
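The training-time half of classifier-free guidance can be sketched as a random "drop" of the conditioning signal. The drop probability 0.1 and the toy embedding are illustrative assumptions, not values from the slides. This sketch follows the slide and uses zeros; some implementations use the embedding of an empty prompt instead.

```python
import random


def maybe_drop_condition(context, p_uncond, rng):
    """With probability p_uncond, replace the conditioning signal (e.g. prompt
    embeddings) with zeros, so the same network also learns to denoise
    without any condition."""
    if rng.random() < p_uncond:
        return [0.0] * len(context)
    return context


rng = random.Random(0)
prompt_embedding = [0.3, -1.2, 0.8]        # hypothetical conditioning vector
batch = [maybe_drop_condition(prompt_embedding, 0.1, rng) for _ in range(10_000)]
dropped = sum(1 for c in batch if c == [0.0, 0.0, 0.0])
print(f"unconditional fraction: {dropped / len(batch):.3f}")
```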

- 14. Classifier-Free Guidance (Training). (Diagram based on the U-Net of Ronneberger, Fischer and Brox, 2015.)

- 15. Classifier-Free Guidance (Inference). (Diagram based on the U-Net of Ronneberger, Fischer and Brox, 2015.)

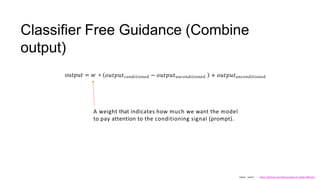

- 16. Classifier-Free Guidance (Combine output): $\text{output} = w \cdot (\text{output}_{\text{conditioned}} - \text{output}_{\text{unconditioned}}) + \text{output}_{\text{unconditioned}}$, where $w$ is a weight that indicates how much we want the model to pay attention to the conditioning signal (prompt).

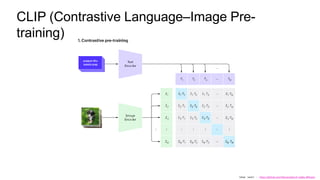
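The combination formula translates directly into an element-wise operation. The `model(x, t, context)` signature is a hypothetical stand-in for the U-Net. The weight 7.5 is an arbitrary example value.

```python
def cfg_combine(cond, uncond, w):
    """output = w * (cond - uncond) + uncond, element-wise.
    w = 1 gives the conditioned output, w = 0 the unconditioned one, and w > 1
    pushes the result further in the direction of the prompt."""
    return [w * (c - u) + u for c, u in zip(cond, uncond)]


def guided_noise_prediction(model, x, t, context, uncond_context, w):
    """Run the (hypothetical) model twice, with and without the prompt,
    then combine the two predictions."""
    cond = model(x, t, context)
    uncond = model(x, t, uncond_context)
    return cfg_combine(cond, uncond, w)


print(cfg_combine([1.0, 2.0], [0.5, 0.5], w=7.5))  # [4.25, 11.75]
```

The price of guidance is two model evaluations per denoising step. Implementations often batch the conditioned and unconditioned inputs together to run them in a single pass.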

- 17. CLIP (Contrastive Language–Image Pre-training)

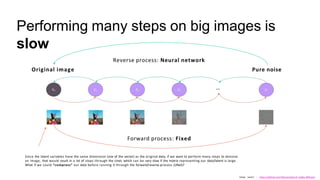
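As a rough illustration of the "contrastive" part of CLIP, here is a sketch of a symmetric contrastive objective over a batch of matching (image, text) embedding pairs. Cosine similarities are divided by a temperature, and each image must pick its own caption (and each caption its own image) via cross-entropy. The temperature 0.07 and the toy 2-D embeddings are example values. Stable Diffusion only uses the pretrained CLIP text encoder, to turn the prompt into embeddings.

```python
import math


def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cross_entropy_diagonal(logits):
    """Mean cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)                                     # for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(v - m) for v in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def clip_contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss: image i should match text i and no other."""
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    # logits[i][j] = cosine similarity(image i, text j) / temperature
    logits = [[sum(a * b for a, b in zip(img, txt)) / temperature for txt in txts]
              for img in imgs]
    logits_t = [list(col) for col in zip(*logits)]       # text-to-image direction
    return (cross_entropy_diagonal(logits) + cross_entropy_diagonal(logits_t)) / 2


aligned = clip_contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
swapped = clip_contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(f"aligned: {aligned:.6f}  swapped: {swapped:.2f}")
```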

- 18. Performing many steps on big images is slow. Diagram: the chain X0, Z1, Z2, Z3, …, ZT from the original image to pure noise, with the fixed forward process and the neural-network reverse process. Since the latent variables have the same dimension (size of the vector) as the original data, denoising an image over many steps means many passes through the U-Net. Each pass can be very slow if the matrix representing our data/latent is large. What if we could "compress" our data before running it through the forward/reverse process (U-Net)?

- 19. Latent Diffusion Model
  - Stable Diffusion is a latent diffusion model. We don't learn the distribution p(x) of our data set of images, but rather the distribution of a latent representation of our data, obtained with a Variational Autoencoder.
  - This reduces the computation needed to perform the steps required to generate a sample, because each data item is represented not by a 512x512 image but by its latent representation, which is 64x64.
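A quick back-of-the-envelope check of the saving. It assumes an RGB 512x512 image (3 channels) and the 4-channel 64x64 latent used by Stable Diffusion's VAE; the channel counts are not stated on the slide.

```python
image_values = 3 * 512 * 512    # RGB image: channels x height x width
latent_values = 4 * 64 * 64     # VAE latent: channels x height x width
print(image_values, latent_values, image_values // latent_values)  # 786432 16384 48
```

Every U-Net pass therefore works on about 48 times fewer values, and this saving applies at every denoising step.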

- 20. What is an Autoencoder? Diagram: Input X → Encoder → Code Z → Decoder → Reconstructed input X', with example vectors such as [1.2, 3.65, …], [1.6, 6.00, …], [10.1, 9.0, …] and [2.5, 7.0, …]. *The values are random and have no meaning.

- 21. What's the problem with Autoencoders? The code learned by the model makes no sense. That is, the model can assign any vector to the inputs without the numbers in the vector representing any pattern. The model doesn't capture any semantic relationship between the data. (Diagram: Input X → Encoder → Code Z → Decoder → Reconstructed input X'.)

- 22. Introducing the Variational Autoencoder. Instead of learning a code, the variational autoencoder learns a "latent space". The latent space represents the parameters of a (multivariate) distribution. (Diagram: Input X → Encoder → Latent space Z → Decoder → Reconstructed input X'.)

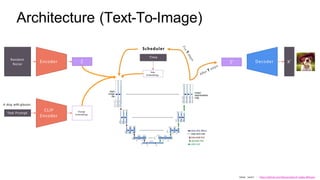
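A minimal sketch of what "learning the parameters of a distribution" means in practice, assuming the usual diagonal-Gaussian VAE. The encoder outputs a mean and a log-variance per latent dimension. A latent is sampled with the reparameterization trick, and a KL term keeps the latent distribution close to N(0, I). The function names and toy numbers are illustrative, and no encoder network is shown.

```python
import math
import random


def sample_latent(mean, log_var, rng):
    """Reparameterization trick: z = mean + std * eps, eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mean, log_var)]


def kl_to_standard_normal(mean, log_var):
    """KL( N(mean, exp(log_var)) || N(0, 1) ), summed over latent dimensions."""
    return sum(0.5 * (math.exp(lv) + m * m - 1.0 - lv) for m, lv in zip(mean, log_var))


# Hypothetical encoder output for one input: 3 latent dimensions.
mean, log_var = [0.5, -1.0, 0.0], [0.0, -2.0, 0.0]
z = sample_latent(mean, log_var, random.Random(0))
print(z, kl_to_standard_normal(mean, log_var))
```

Sampling through `mean + std * eps` keeps the randomness in `eps`, so gradients can flow back into the mean and log-variance during training.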

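- 23. Architecture (Text-To-Image). Diagram: random noise provides the starting latent Z. The text prompt ("A dog with glasses") goes through the CLIP Encoder to produce prompt embeddings. The time step is turned into time embeddings and drives the scheduler. The final latent Z' goes through the Decoder to produce the output image X'. The Encoder drawn in the diagram is not needed here, because we start from random noise rather than from an input image.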

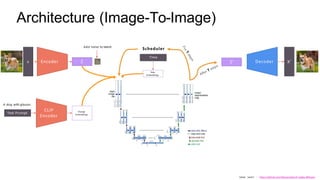
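The text-to-image flow can be sketched as a short loop. Every component here (`clip_encode`, `unet`, `scheduler_step`, `decode`) is a hypothetical callable passed in as a parameter, not the API of the linked repository. Classifier-free guidance combines the conditioned and unconditioned predictions with the formula from slide 16. Latents are flat lists of floats.

```python
import random


def text_to_image(prompt, clip_encode, unet, scheduler_step, decode,
                  timesteps, latent_size, cfg_scale, rng):
    """Sketch of the text-to-image pipeline with hypothetical components."""
    context = clip_encode(prompt)           # prompt embeddings
    uncond_context = clip_encode("")        # embeddings of an empty prompt
    # Start from random noise in latent space.
    latents = [rng.gauss(0.0, 1.0) for _ in range(latent_size)]
    for t in timesteps:                     # from most to least noisy
        cond = unet(latents, context, t)    # predicted noise with the prompt
        uncond = unet(latents, uncond_context, t)
        noise_pred = [cfg_scale * (c - u) + u for c, u in zip(cond, uncond)]
        latents = scheduler_step(latents, noise_pred, t)   # remove some noise
    return decode(latents)                  # Z' -> output image X'


# Toy components, only to show the call shape.
image = text_to_image(
    "A dog with glasses",
    clip_encode=lambda text: [float(len(text))],             # toy "embedding"
    unet=lambda z, ctx, t: [0.1 * v for v in z],             # toy noise predictor
    scheduler_step=lambda z, eps, t: [v - e for v, e in zip(z, eps)],
    decode=lambda z: [2.0 * v for v in z],                   # toy decoder
    timesteps=range(50, 0, -1),
    latent_size=16,
    cfg_scale=7.5,
    rng=random.Random(0),
)
print(len(image))
```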

- 24. Architecture (Image-To-Image). Same components as text-to-image, but the input image X goes through the Encoder to obtain the latent Z, and noise is added to this latent before denoising. The prompt ("A dog with glasses") is encoded by the CLIP Encoder, and the final latent Z' is decoded into X'.

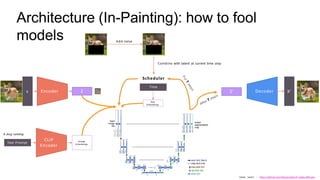
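One common way to control how much of the input image survives is a "strength" in [0, 1]. The encoded latent is noised up to an intermediate step, and denoising starts from there instead of from pure noise. The sketch below assumes the DDPM linear schedule and its closed-form forward noising. `strength` and the helper names are illustrative assumptions, not taken from the slides.

```python
import math
import random

T = 1000
BETAS = [1e-4 + (0.02 - 1e-4) * i / (T - 1) for i in range(T)]


def alpha_bar(t):
    """Product of (1 - beta_s) for s = 1..t (t is 1-indexed)."""
    prod = 1.0
    for beta in BETAS[:t]:
        prod *= 1.0 - beta
    return prod


def image_to_image_start(encoded_latent, strength, rng):
    """Add noise to the encoded input latent Z so denoising starts at step t_start.

    strength = 1.0 -> start from (almost) pure noise, input mostly ignored;
    strength near 0 -> start close to the input latent, few steps to run.
    """
    t_start = max(1, round(strength * T))
    ab = alpha_bar(t_start)
    noisy = [math.sqrt(ab) * z + math.sqrt(1.0 - ab) * rng.gauss(0.0, 1.0)
             for z in encoded_latent]
    return noisy, t_start    # run the reverse process from t_start down to 1


latent = [0.4, -0.3, 1.1, 0.2]          # hypothetical output of the VAE encoder
noisy, t_start = image_to_image_start(latent, strength=0.8, rng=random.Random(0))
print(t_start, noisy)
```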

- 25. Architecture (In-Painting): how to fool models. Same components, with the prompt "A dog running". The input image X is encoded into Z. At each time step, noise is added to the original latent and the result is combined with the latent at the current time step. Finally, the latent Z' is decoded into X'.

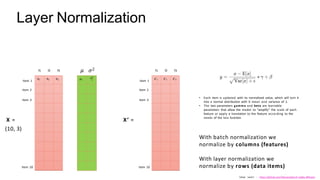
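The "combine with latent at current time step" operation can be sketched as a mask blend. The sketch assumes a mask in latent space where 1 marks the region to regenerate and 0 the region to keep. At each step, the kept region is replaced by the original latent noised to the current time step. The model therefore only invents content inside the mask, while treating the whole latent as its own output; presumably this is the sense in which the model is "fooled". The names, the mask convention and the DDPM linear schedule are assumptions.

```python
import math
import random

T = 1000
BETAS = [1e-4 + (0.02 - 1e-4) * i / (T - 1) for i in range(T)]
ALPHA_BARS = []
_prod = 1.0
for _b in BETAS:
    _prod *= 1.0 - _b
    ALPHA_BARS.append(_prod)


def inpaint_combine(current_latent, original_latent, mask, t, rng):
    """Keep the model's latent where mask == 1; elsewhere use the original latent
    noised to the same time step t (1-indexed), so both parts match in noise level."""
    ab = ALPHA_BARS[t - 1]
    noised_original = [math.sqrt(ab) * z + math.sqrt(1.0 - ab) * rng.gauss(0.0, 1.0)
                       for z in original_latent]
    return [m * c + (1.0 - m) * o
            for c, o, m in zip(current_latent, noised_original, mask)]


out = inpaint_combine([9.0, 9.0, 9.0, 9.0], [0.1, 0.2, 0.3, 0.4],
                      mask=[1, 1, 0, 0], t=1, rng=random.Random(0))
print(out)
```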

- 26. Layer Normalization. Diagram: an input X of shape (10, 3), i.e. 10 items (Item 1 to Item 10) with three features f1, f2, f3 (values a1, a2, a3). For each item we compute its mean $\mu$ and variance $\sigma^2$, and produce the normalized output X' with values a'1, a'2, a'3.
  - Each item is updated with its normalized value, $a'_j = \gamma \frac{a_j - \mu}{\sqrt{\sigma^2 + \epsilon}} + \beta$. Before gamma and beta are applied, this gives each item mean 0 and variance 1. Normalization shifts and rescales the values; it does not make them normally distributed.
  - The two parameters gamma and beta are learnable parameters that allow the model to "amplify" the scale of each feature or apply a translation to the feature according to the needs of the loss function.
  - With batch normalization we normalize by columns (features); with layer normalization we normalize by rows (data items).

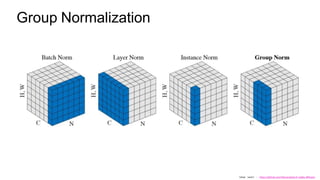
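A minimal sketch of the two normalizations on a 10x3 matrix like the one in the diagram, where rows are items and columns are features. For brevity it uses a scalar gamma = 1 and beta = 0; real layers learn one pair per feature. It includes a small epsilon for numerical stability. The data are made-up numbers.

```python
import math


def normalize(values, eps=1e-5):
    """Shift and scale a list of numbers to mean 0 and variance ~1."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [(v - mean) / math.sqrt(var + eps) for v in values]


def layer_norm(X, gamma=1.0, beta=0.0):
    """Normalize each row (data item) across its features."""
    return [[gamma * v + beta for v in normalize(row)] for row in X]


def batch_norm(X, gamma=1.0, beta=0.0):
    """Normalize each column (feature) across the items in the batch."""
    cols = [normalize(list(col)) for col in zip(*X)]
    return [[gamma * v + beta for v in row] for row in zip(*cols)]


# 10 items x 3 features (f1, f2, f3) of arbitrary values.
X = [[float(i), float(2 * i + 1), float(i * i)] for i in range(1, 11)]
ln, bn = layer_norm(X), batch_norm(X)
print(ln[0], bn[0])
```

Layer normalization does not depend on the other items in the batch, so it behaves the same for any batch size.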

- 27. Group Normalization
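The slide does not spell out group normalization, so the following is a sketch of its standard definition. The channels of a single sample are split into groups, and each group is normalized over all its channels and spatial positions together. One group containing every channel recovers layer norm; one channel per group is instance norm. PyTorch provides this as `torch.nn.GroupNorm(num_groups, num_channels)`. The shapes and values below are illustrative, and gamma and beta are omitted.

```python
import math


def group_norm(x, num_groups, eps=1e-5):
    """x: one sample as a list of channels, each a flat list of spatial values.
    Channels are split into num_groups equal groups; each group is normalized
    with its own mean and variance."""
    num_channels = len(x)
    assert num_channels % num_groups == 0, "channels must divide evenly into groups"
    per_group = num_channels // num_groups
    out = []
    for g in range(num_groups):
        group = x[g * per_group:(g + 1) * per_group]
        values = [v for channel in group for v in channel]
        mean = sum(values) / len(values)
        var = sum((v - mean) ** 2 for v in values) / len(values)
        std = math.sqrt(var + eps)
        out.extend([[(v - mean) / std for v in channel] for channel in group])
    return out


# 4 channels, each with 3 spatial positions; 2 groups of 2 channels.
x = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [10.0, 20.0, 30.0], [40.0, 50.0, 60.0]]
y = group_norm(x, num_groups=2)
print(y)
```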

- 28. The full code is available on GitHub: https://github.com/hkproj/pytorch-stable-diffusion
  - Special thanks to:
    1. https://github.com/CompVis/stable-diffusion/
    2. https://github.com/divamgupta/stable-diffusion-tensorflow
    3. https://github.com/kjsman/stable-diffusion-pytorch
    4. https://github.com/huggingface/diffusers/

- 29. Thanks for watching! Don't forget to subscribe for more amazing content on AI and Machine Learning!
