Storage virtualisation loughtec

- 1. Storage Virtualization Software Technical Feature Descriptions

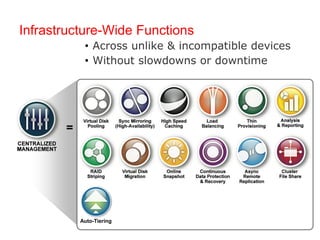

- 2. Infrastructure-Wide Functions
  - Across unlike & incompatible devices
  - Without slowdowns or downtime
  - Diagram label: Auto-Tiering

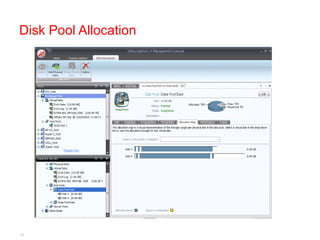

- 4. Virtual Disk Pooling: Consolidate like or unlike disk resources
  - Split pool into tiers of different price/performance/capacity
  - Create and assign virtual disks of desired sizes
  - Define access rights
  - Explicitly assign virtual disks to hosts or groups of hosts
  - Expand capacity without downtime
  - Eliminate stranded disk space

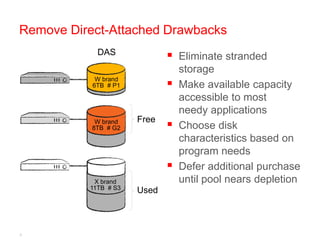

- 5. Remove Direct-Attached Drawbacks
  - Eliminate stranded storage
  - Make available capacity accessible to the applications that need it most
  - Choose disk characteristics based on program needs
  - Defer additional purchases until the pool nears depletion
  - Diagram: DAS devices, each showing used and free space: W brand P1 (6TB), W brand G2 (8TB), X brand S3 (11TB)

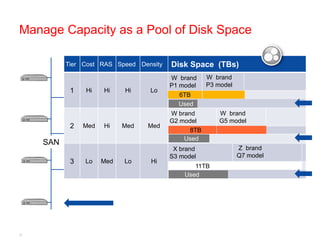

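- 6. Manage Capacity as a Pool of Disk Space

  | Tier | Devices in the SAN pool | Cost | RAS | Speed | Density | Disk space |
  |---|---|---|---|---|---|---|
  | 1 | W brand P1 model, W brand P3 model | Hi | Hi | Hi | Lo | 6TB |
  | 2 | W brand G2 model, W brand G5 model | Med | Hi | Med | Med | 8TB |
  | 3 | X brand S3 model, Z brand Q7 model | Lo | Med | Lo | Hi | 11TB |

  In the diagram, each tier's bar marks its used portion.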

- 7. SAN-wide Centralized Management: Control / monitor all pooled resources from one console
  - Intuitive to set up and operate
  - Automates repetitive tasks
  - Self-guided wizards for key workflows
  - Comprehensive diagnostics & troubleshooting tips
  - Configurable views of system behavior and performance
  - Role-based administrative permissions

- 8. Auto-Tiering: Intelligent trade-offs between cost and performance
  - No special disk hardware required
  - You select which disks make up each tier
  - Adapts to give the most demanding workloads the fastest response

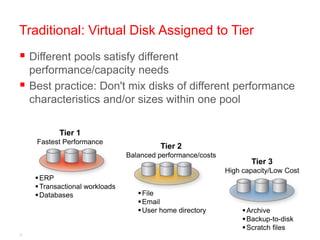

- 9. Traditional: Virtual Disk Assigned to Tier
  - Different pools satisfy different performance/capacity needs
  - Best practice: Don't mix disks of different performance characteristics and/or sizes within one pool
  - Tier 1, fastest performance: ERP, transactional workloads, databases
  - Tier 2, balanced performance/cost: file, email, user home directories
  - Tier 3, high capacity/low cost: archive, backup-to-disk, scratch files

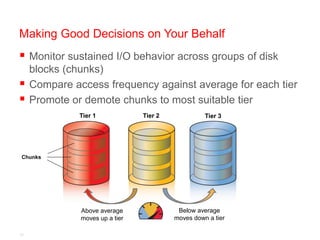

- 10. Making Good Decisions on Your Behalf
  - Monitor sustained I/O behavior across groups of disk blocks (chunks)
  - Compare access frequency against the average for each tier
  - Promote or demote chunks to the most suitable tier
  - Diagram: across Tier 1, Tier 2 and Tier 3, a chunk accessed above average moves up a tier; a chunk accessed below average moves down a tier
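
The promote/demote rule on this slide can be sketched in a few lines of Python. This is a minimal illustration, not the product's actual algorithm: the `Chunk` type, the `rebalance` function, and the access counts are hypothetical, and tier 1 is assumed to be the fastest tier.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Chunk:
    name: str
    tier: int       # 1 = fastest, 3 = highest capacity (assumed numbering)
    accesses: int   # sustained I/O count over the monitoring window


def rebalance(chunks, lowest_tier=3):
    """Move each chunk at most one tier, based on its tier's average access count."""
    # Compute the averages first so moves in this pass do not skew the comparison.
    averages = {
        tier: mean(c.accesses for c in chunks if c.tier == tier)
        for tier in {c.tier for c in chunks}
    }
    for chunk in chunks:
        avg = averages[chunk.tier]
        if chunk.accesses > avg and chunk.tier > 1:
            chunk.tier -= 1   # above average: promote to a faster tier
        elif chunk.accesses < avg and chunk.tier < lowest_tier:
            chunk.tier += 1   # below average: demote to a cheaper tier
    return chunks
```

Moving a chunk by only one tier per pass is a design choice made here to keep the sketch stable; the slide does not say how far a chunk may move at once.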

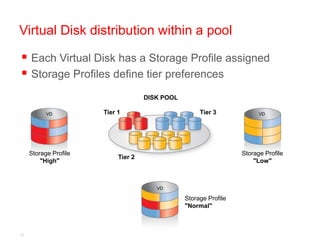

- 11. Virtual Disk Distribution within a Pool
  - Each Virtual Disk (VD) has a Storage Profile assigned
  - Storage Profiles define tier preferences
  - Diagram: a disk pool with Tier 1, Tier 2 and Tier 3 holds three VDs with Storage Profiles "High", "Normal" and "Low"

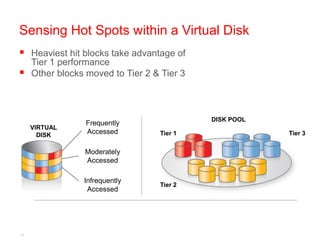

- 12. Sensing Hot Spots within a Virtual Disk
  - Heaviest-hit blocks take advantage of Tier 1 performance
  - Other blocks are moved to Tier 2 & Tier 3
  - Diagram: within one virtual disk in the disk pool, frequently accessed blocks sit on Tier 1, moderately accessed blocks on Tier 2, and infrequently accessed blocks on Tier 3

- 13. High-Speed Caching: Speeds up performance
  - Accelerates disk I/O response from existing storage
  - Uses x86-64 CPUs and memory from DataCore nodes as powerful, inexpensive "mega caches"
  - Anticipates next blocks to be read, and groups writes to avoid waiting on disks
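
The "anticipates next blocks to be read" idea can be illustrated with a toy read-ahead cache. This is a sketch under stated assumptions, not DataCore's implementation: the `ReadAheadCache` class, the `prefetch` parameter, and the use of a Python list as the "disk" are all hypothetical.

```python
class ReadAheadCache:
    """On a miss, fetch the requested block plus the next few in one disk trip."""

    def __init__(self, disk, prefetch=2):
        self.disk = disk          # list of blocks standing in for slow storage
        self.prefetch = prefetch  # how many following blocks to fetch (assumed)
        self.cache = {}
        self.disk_reads = 0       # number of trips to the slow disk

    def read(self, index):
        if index not in self.cache:
            # Miss: read this block and the following ones together.
            end = min(index + 1 + self.prefetch, len(self.disk))
            for i in range(index, end):
                self.cache[i] = self.disk[i]
            self.disk_reads += 1
        return self.cache[index]
```

With `prefetch=2`, a sequential scan of blocks 0, 1, 2 costs one disk trip instead of three, which is the effect the slide describes for reads. Write grouping is not modelled here.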

- 14. Load Balancing: Improve response and throughput
  - Overcome typical storage-related bottlenecks
  - Spread load on physical devices using different channels for different virtual disks
  - Automatically bypasses failed or offline channels
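
One simple way to picture "different channels for different virtual disks" with automatic bypass of failed channels is a round-robin assignment over the channels that are still online. The function name `assign_channels` and the channel names are hypothetical; the slide does not state which balancing policy the product uses.

```python
def assign_channels(virtual_disks, channels, offline=()):
    """Round-robin virtual disks over the channels that are still online."""
    usable = [c for c in channels if c not in offline]
    if not usable:
        raise RuntimeError("no online channels")
    # Disk i goes to usable channel i modulo the number of usable channels.
    return {vd: usable[i % len(usable)] for i, vd in enumerate(virtual_disks)}
```

Calling it again with a channel listed in `offline` yields a new assignment that skips that channel, which mirrors the bypass behavior on the slide.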

- 15. Thin Provisioning: Allocate just enough space, just-in-time
  - Appears to computers as very large drives (e.g. 2 TB disks)
  - Takes up only the space actually being written to
  - Dynamically allocates more disk space when required
  - Reduces need to resize LUNs
  - Reclaims zeroed-out disk space

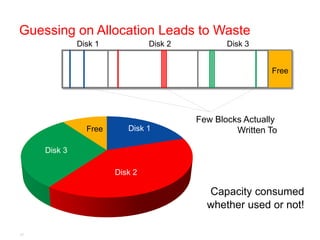

- 16. Guessing on Allocation Leads to Waste
  - Diagram: Disk 1, Disk 2 and Disk 3 are each fully pre-allocated; only a few blocks are actually written to and the rest is free
  - Capacity consumed whether used or not!

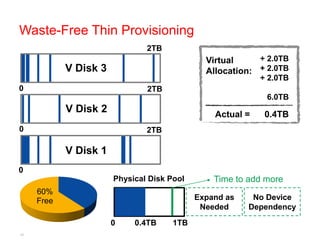

- 17. Waste-Free Thin Provisioning
  - Virtual allocation: V Disk 1 + V Disk 2 + V Disk 3 = 2.0TB + 2.0TB + 2.0TB = 6.0TB, each mostly free
  - Actual = 0.4TB written, drawn from a 1TB physical disk pool (60% free)
  - Expand as needed; the pool gauge (0 to 1TB) marks when it is time to add more
  - No device dependency
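
The numbers on this slide can be reproduced with a toy model of a thin-provisioned pool. This is a minimal sketch: the `ThinPool` class and its method names are hypothetical, sizes are in whole GB to keep the arithmetic exact, and the split of the 0.4TB across the three virtual disks is an assumption (the slide gives only the total).

```python
class ThinPool:
    """Physical pool that hands out space only when a virtual disk is written."""

    def __init__(self, physical_gb):
        self.physical_gb = physical_gb
        self.virtual_gb = {}   # disk name -> advertised size
        self.written_gb = {}   # disk name -> space actually consumed

    def create_disk(self, name, size_gb):
        # Creating a virtual disk consumes no physical space.
        self.virtual_gb[name] = size_gb
        self.written_gb[name] = 0

    def write(self, name, amount_gb):
        if self.written_gb[name] + amount_gb > self.virtual_gb[name]:
            raise ValueError("write exceeds virtual disk size")
        if self.used_gb() + amount_gb > self.physical_gb:
            raise RuntimeError("pool depleted: time to add more capacity")
        self.written_gb[name] += amount_gb

    def used_gb(self):
        return sum(self.written_gb.values())

    def free_percent(self):
        return 100 * (self.physical_gb - self.used_gb()) // self.physical_gb


pool = ThinPool(physical_gb=1000)              # 1TB physical pool
for name in ("V Disk 1", "V Disk 2", "V Disk 3"):
    pool.create_disk(name, 2000)               # three 2TB virtual disks
pool.write("V Disk 1", 200)                    # assumed split of the 0.4TB
pool.write("V Disk 2", 100)
pool.write("V Disk 3", 100)
print(sum(pool.virtual_gb.values()), pool.used_gb(), pool.free_percent())
# prints: 6000 400 60
```

The hosts see 6TB in total, yet only 0.4TB of the 1TB pool is consumed, leaving the 60% free shown on the slide.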

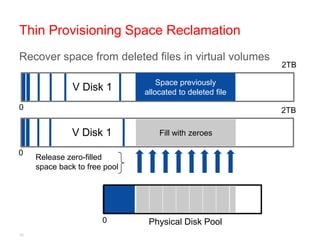

- 19. Thin Provisioning Space Reclamation: Recover space from deleted files in virtual volumes
  - Start: V Disk 1 (2TB, mostly free) still holds space previously allocated to a deleted file
  - Fill that space with zeroes
  - Release the zero-filled space back to the free pool in the physical disk pool
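
The "fill with zeroes, then release" step can be sketched as a scan over allocated chunks. The allocation map (a dict of chunk index to bytes), the `CHUNK_SIZE` value, and the `reclaim_zeroed` function are hypothetical stand-ins used only to illustrate the idea.

```python
CHUNK_SIZE = 4  # bytes per chunk; tiny on purpose, for illustration only


def reclaim_zeroed(allocated):
    """Drop all-zero chunks from the allocation map; return how many were freed."""
    zero = bytes(CHUNK_SIZE)
    freed = [index for index, data in allocated.items() if data == zero]
    for index in freed:
        del allocated[index]   # this space goes back to the free pool
    return len(freed)
```

For example, an allocation map `{0: b"data", 1: bytes(4), 2: b"more"}` loses chunk 1 and keeps chunks 0 and 2.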

- 20. RAID Striping: Better protection & performance
  - Circumvents drive failures
  - Spreads I/O across multiple spindles
  - Offloads RAID 0 & 1
  - Supports popular RAID devices in pool
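
How striping spreads I/O across spindles can be shown with the standard RAID 0 address mapping. The sketch assumes a stripe unit of one block and a hypothetical function name, `stripe_location`; real arrays use larger stripe units.

```python
def stripe_location(logical_block, num_disks):
    """Return (disk index, block offset on that disk) for a RAID 0 layout."""
    if num_disks < 1:
        raise ValueError("need at least one disk")
    # Consecutive logical blocks land on consecutive disks.
    return logical_block % num_disks, logical_block // num_disks
```

With three disks, logical blocks 0, 1, 2 land on disks 0, 1, 2 at offset 0, and block 3 wraps back to disk 0 at offset 1, so a sequential transfer keeps all spindles busy.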

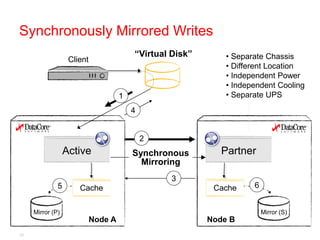

- 21. Synchronous Mirroring: Real-time I/O replication for High-Availability
  - Eliminates storage as a single point of failure
  - Enhances survivability using physically separate nodes
  - Updates two distributed copies simultaneously
  - Mirrored virtual disks behave as one multi-ported shared drive

- 22. Synchronously Mirrored Writes
  - Separate chassis
  - Different location
  - Independent power
  - Independent cooling
  - Separate UPS
  - Diagram: a client writes to the "Virtual Disk" in numbered steps 1 to 6 across Node A (Active, Cache, Mirror (P)) and Node B (Partner, Cache, Mirror (S)), joined by synchronous mirroring
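
The defining property of a synchronous mirror is that the client is acknowledged only once both nodes hold the write. The sketch below shows that ordering in simplified form; the `Node` class and `mirrored_write` function are hypothetical, and the exact six steps in the diagram are not spelled out in the slide text, so they are not reproduced here.

```python
class Node:
    def __init__(self, name):
        self.name = name
        self.online = True
        self.cache = {}

    def store(self, block, data):
        if not self.online:
            raise ConnectionError(f"{self.name} is offline")
        self.cache[block] = data


def mirrored_write(active, partner, block, data):
    """Acknowledge the client only after both nodes hold the block."""
    active.store(block, data)    # write lands in the active node's cache
    partner.store(block, data)   # same write is mirrored to the partner's cache
    return "ack"                 # reached only if both stores succeeded
```

If the partner is offline, `mirrored_write` raises instead of acknowledging; a real system would then switch to the failover behavior described on the following slides.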

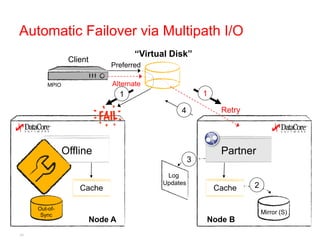

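- 23. Automatic Failover via Multipath I/O
  - Diagram: the client's MPIO driver has a preferred and an alternate path to the "Virtual Disk" on Node A and Node B (each with a cache and a mirror, Mirror (P) and Mirror (S))
  - Diagram labels for the numbered steps: Retry, Offline Partner, Log Updates, Out-of-Sync mirror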

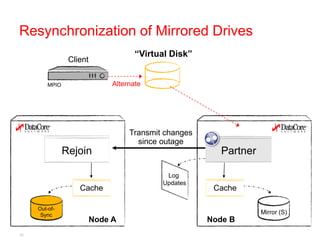

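- 24. Resynchronization of Mirrored Drives
  - Partner rejoins
  - Transmit changes since outage (from the logged updates)
  - Diagram: the client's MPIO driver uses the alternate path to the "Virtual Disk" while Node A and Node B (each with a cache and a mirror, Mirror (P) and Mirror (S)) bring the out-of-sync mirror up to date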
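
The failover and resynchronization slides describe logging updates while the partner is offline and later transmitting only the changes since the outage. A minimal sketch of that bookkeeping follows; the `MirrorPair` class and its fields are hypothetical, and the log tracks changed block numbers rather than every individual write.

```python
class MirrorPair:
    def __init__(self):
        self.primary = {}
        self.secondary = {}
        self.partner_online = True
        self.change_log = set()   # blocks written while the partner was offline

    def write(self, block, data):
        self.primary[block] = data
        if self.partner_online:
            self.secondary[block] = data   # normal synchronous mirroring
        else:
            self.change_log.add(block)     # log the update for later

    def resync(self):
        """Partner rejoins: transmit only the blocks changed since the outage."""
        sent = sorted(self.change_log)
        for block in sent:
            self.secondary[block] = self.primary[block]
        self.change_log.clear()
        self.partner_online = True
        return sent
```

Because only logged blocks are copied, the resynchronization cost depends on how much changed during the outage, not on the size of the virtual disk.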

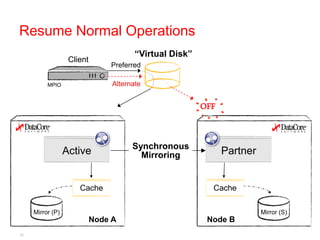

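- 25. Resume Normal Operations
  - Diagram: the client's MPIO driver again has its preferred and alternate paths to the "Virtual Disk"; synchronous mirroring is restored between Node A and Node B (Active and Partner, each with a cache and a mirror, Mirror (P) and Mirror (S)); one label reads OFF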

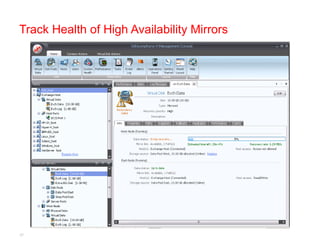

- 26. Track Health of High Availability Mirrors

- 27. Virtual Disk Migration: Transparently move contents from one disk to another
  - Allows non-disruptive hardware disk upgrades
  - Clears & reclaims space occupied by original
  - Provides pass-through access to drives previously used on other systems

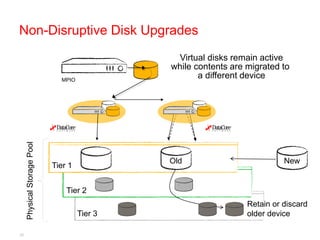

- 28. Non-Disruptive Disk Upgrades: Virtual disks remain active while contents are migrated to a different device
  - Retain or discard the older device
  - Diagram: hosts connect via MPIO to a physical storage pool (Tier 1, Tier 2, Tier 3) while contents move from the old device to the new one

- 29. Online Snapshots: Capture point-in-time images without tying up much disk space, or make complete clones
  - Recover quickly at disk speeds to a known good state
  - Eliminate the back-up window
  - Provide a "live" environment for analysis, development & testing
  - Save snapshots in lower-tier, thin-provisioned disks without taking up space on premium storage devices

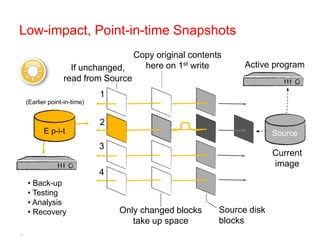

- 30. Low-impact, Point-in-time Snapshots
  - Snapshot area is empty when the snapshot is enabled
  - Copy original contents here on 1st write
  - If unchanged, read from Source
  - Only changed blocks take up space
  - Uses for the earlier point-in-time image: Back-up, Testing, Analysis, Recovery
  - Diagram: an active program works on the Source disk blocks (current image) while numbered steps 1 to 4 show how the earlier point-in-time image is served
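
The copy-on-first-write behavior on this slide can be sketched as follows. The `Snapshot` class and its method names are hypothetical; a Python list stands in for the source disk's blocks.

```python
class Snapshot:
    def __init__(self, source):
        self.source = source   # live blocks, still used by the active program
        self.preserved = {}    # empty when the snapshot is enabled

    def write_source(self, index, data):
        # On the first write to a block, copy the original contents aside.
        if index not in self.preserved:
            self.preserved[index] = self.source[index]
        self.source[index] = data

    def read(self, index):
        # Changed blocks come from the preserved copy; unchanged ones from the source.
        return self.preserved.get(index, self.source[index])
```

Writing the same block twice preserves only the contents from before the first write, so the snapshot keeps showing the earlier point in time while occupying space only for blocks that changed.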

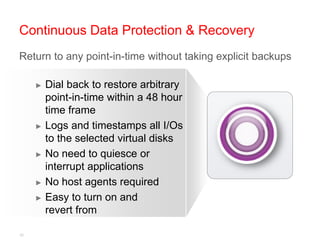

- 31. Continuous Data Protection & Recovery: Return to any point-in-time without taking explicit backups
  - Dial back to restore an arbitrary point-in-time within a 48-hour time frame
  - Logs and timestamps all I/Os to the selected virtual disks
  - No need to quiesce or interrupt applications
  - No host agents required
  - Easy to turn on and revert from

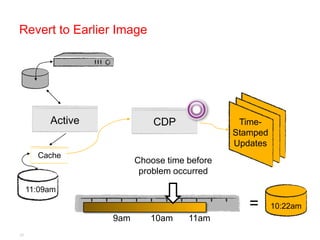

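- 32. Revert to Earlier Image
  - Choose a time before the problem occurred
  - Diagram: the active virtual disk feeds time-stamped updates through the cache into the CDP log; a timeline marked 9am, 10am, 11am carries the example 11:09am = 10:22am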
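
Reverting to an earlier image amounts to replaying the time-stamped log up to the chosen moment. The sketch below assumes the example on the slide means rolling back at 11:09am to the 10:22am state; the `CdpLog` class, the `base` image argument, and the block contents are hypothetical, and entries are assumed to be recorded in time order.

```python
from datetime import datetime, timedelta


class CdpLog:
    def __init__(self, retention=timedelta(hours=48)):
        self.retention = retention   # 48-hour window, as stated in the deck
        self.entries = []            # (timestamp, block, data) in arrival order

    def record(self, when, block, data):
        self.entries.append((when, block, data))

    def image_at(self, base, when, now):
        """Rebuild the disk image as of `when` by replaying logged writes."""
        if now - when > self.retention:
            raise ValueError("requested time is outside the retention window")
        image = dict(base)   # contents before logging started (assumed known)
        for stamp, block, data in self.entries:
            if stamp <= when:
                image[block] = data
        return image


day = datetime(2024, 1, 1)   # arbitrary date for the example
log = CdpLog()
log.record(day.replace(hour=9), 0, "v1")
log.record(day.replace(hour=10), 0, "v2")
log.record(day.replace(hour=11), 0, "corrupt")
restored = log.image_at({0: "v0"},
                        when=day.replace(hour=10, minute=22),
                        now=day.replace(hour=11, minute=9))
print(restored)   # prints: {0: 'v2'}
```

The write logged at 11am is skipped because it falls after the chosen 10:22am restore point.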

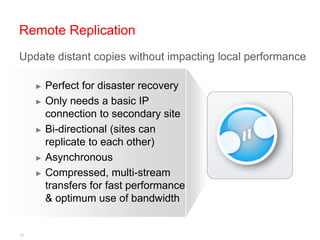

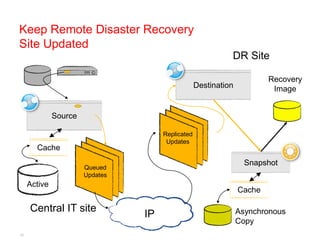

- 33. Remote Replication: Update distant copies without impacting local performance
  - Perfect for disaster recovery
  - Only needs a basic IP connection to secondary site
  - Bi-directional (sites can replicate to each other)
  - Asynchronous
  - Compressed, multi-stream transfers for fast performance & optimum use of bandwidth

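- 34. Keep Remote Disaster Recovery Site Updated
  - Central IT site: the active source passes updates through its cache into a queue (queued updates)
  - Asynchronous IP copy carries the queued updates to the DR site
  - DR site: replicated updates pass through its cache to the destination image; a snapshot serves as the recovery image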
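
The queue-then-copy flow above can be sketched with a simple in-memory queue. The `AsyncReplicator` class and its method names are hypothetical, and compression and multi-stream transfer are left out.

```python
from collections import deque


class AsyncReplicator:
    def __init__(self):
        self.source = {}        # image at the central IT site
        self.destination = {}   # image at the DR site
        self.queue = deque()    # updates waiting to cross the IP link

    def write(self, block, data):
        # The local write completes immediately; the update is only queued.
        self.source[block] = data
        self.queue.append((block, data))

    def drain(self, max_updates=None):
        """Apply queued updates to the DR copy in order; return how many were sent."""
        sent = 0
        while self.queue and (max_updates is None or sent < max_updates):
            block, data = self.queue.popleft()
            self.destination[block] = data
            sent += 1
        return sent
```

Because `write` never waits on `drain`, local performance does not depend on the link, at the cost of the DR copy lagging behind by whatever is still queued.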

- 35. Advanced Site Recovery: Expedite central site restoration
  - Reverses direction of replication from the disaster recovery (DR) site to the primary datacenter
  - Same automated process for virtual & physical systems

- 36. THANK YOU!