Tensorflowv5.0

- 1. Sanjib Basak, Digital River Inc.

- 2. • Working as a director of data science at Digital River • Working in the analytics field for 15+ years • Built analytical applications in retail and healthcare • Fascinated by machine learning and deep learning • Playing with TensorFlow for 6-7 months • Host of another meetup, “Twin Cities Big Data Analytics”

- 3. • Introduction to TensorFlow • A basic model with TensorFlow • Language models • RNNs with TensorFlow • Advantages of TensorFlow over Python NumPy • Questions and answers

- 4. • TensorFlow™ is an open source software library for numerical computation using data flow graphs • Open-sourced by Google in November 2015 • Its flexible architecture lets you deploy computation to one or more CPUs or GPUs in a desktop, server, or mobile device with a single API • One of the most popular projects in the open source community, with 30,000+ stars and 13,000+ forks on GitHub

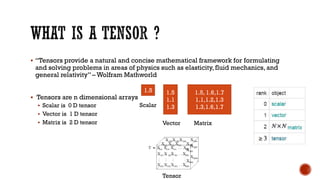

- 5. • “Tensors provide a natural and concise mathematical framework for formulating and solving problems in areas of physics such as elasticity, fluid mechanics, and general relativity” – Wolfram MathWorld • Tensors are n-dimensional arrays • A scalar is a 0-D tensor • A vector is a 1-D tensor • A matrix is a 2-D tensor • (Figure: a scalar, a vector, and a matrix as tensors; scalar 1.5; vector 1.5, 1.1, 1.3; matrix with rows 1.5, 1.6, 1.7 / 1.1, 1.2, 1.3 / 1.3, 1.6, 1.7)
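As a quick illustration of rank and shape, the sketch below builds the slide's example scalar, vector, and matrix as NumPy arrays. NumPy is an assumption chosen to keep the example dependency-light; `tf.constant` accepts the same nested lists, and `tf.rank` reports the rank of the resulting tensor.

```python
import numpy as np

scalar = np.array(1.5)                    # rank 0: a single number
vector = np.array([1.5, 1.1, 1.3])        # rank 1: a list of numbers
matrix = np.array([[1.5, 1.6, 1.7],
                   [1.1, 1.2, 1.3],
                   [1.3, 1.6, 1.7]])      # rank 2: rows and columns

for name, t in [("scalar", scalar), ("vector", vector), ("matrix", matrix)]:
    # ndim is the rank, shape is the size of each dimension, dtype is the element type
    print(name, "rank:", t.ndim, "shape:", t.shape, "dtype:", t.dtype)
```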

- 6. • Node: represents an operation, such as addition or multiplication • Edges: carry the data (tensors) that flow between operations • Kernels: implementations of an operation for a particular device type (CPU, GPU) • Session: created when a client program establishes communication with the TensorFlow runtime • TensorFlow data structure: Rank: the number of dimensions, as defined above; Shape: the size of each dimension (rows and columns for a matrix); Type: the element data type, e.g. int32, float32
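To make the node/edge/session vocabulary concrete, here is a toy data-flow graph in plain Python. It is a hypothetical illustration, not the TensorFlow API: `Node`, `constant`, `add`, `mul`, and `Session` are invented names. It mirrors the TensorFlow 1.x style in which building the graph computes nothing and values are produced only when a session runs a node.

```python
class Node:
    """A graph node: an operation plus its incoming edges (the input nodes)."""
    def __init__(self, op, *inputs):
        self.op = op
        self.inputs = inputs

def constant(value):
    return Node(lambda: value)            # no incoming edges

def add(a, b):
    return Node(lambda x, y: x + y, a, b)

def mul(a, b):
    return Node(lambda x, y: x * y, a, b)

class Session:
    """Evaluates a node by first evaluating every node its edges depend on."""
    def run(self, node, cache=None):
        if cache is None:
            cache = {}
        if node not in cache:
            values = [self.run(n, cache) for n in node.inputs]  # data flowing along edges
            cache[node] = node.op(*values)                     # compute this node's operation
        return cache[node]

a = constant(2.0)
b = constant(3.0)
c = add(a, b)          # building the graph does not compute anything yet
d = mul(c, b)
result = Session().run(d)
print(result)          # (2.0 + 3.0) * 3.0
```

The cache ensures a node that feeds several others (here `b`) is evaluated only once per `run` call.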

- 7. A linear regression model using an IPython notebook
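The notebook itself is not reproduced here. As a rough stand-in, this NumPy sketch fits y = w·x + b by gradient descent on mean squared error. The synthetic data (true w = 3, b = 2), learning rate, and step count are assumptions chosen for illustration. In TensorFlow 1.x the same model would typically use `tf.placeholder` for the inputs, `tf.Variable` for w and b, and `tf.train.GradientDescentOptimizer` to minimize the loss.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=100)
y = 3.0 * x + 2.0 + rng.normal(scale=0.1, size=100)  # synthetic data: true w=3, b=2

w, b = 0.0, 0.0        # trainable parameters
learning_rate = 0.5

for step in range(200):
    y_hat = w * x + b                  # model prediction
    err = y_hat - y
    loss = np.mean(err ** 2)           # mean squared error
    grad_w = 2.0 * np.mean(err * x)    # d(loss)/dw
    grad_b = 2.0 * np.mean(err)        # d(loss)/db
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(f"w={w:.2f}, b={b:.2f}, loss={loss:.4f}")
```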

- 8. • Language models compute the probability of a sequence of words occurring • Widely used in speech recognition and machine translation systems • The model chooses the best word ordering from several possibilities, e.g. “The world is small” vs. “small is the world”

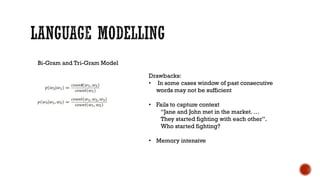
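Formally, a language model scores a whole word sequence with the chain rule of probability. This is the standard decomposition, written out here for the slide's example sentence:

```latex
P(w_1, \dots, w_n) = \prod_{i=1}^{n} P(w_i \mid w_1, \dots, w_{i-1})

P(\text{the world is small}) = P(\text{the})\, P(\text{world} \mid \text{the})\, P(\text{is} \mid \text{the world})\, P(\text{small} \mid \text{the world is})
```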

- 9. Bigram and trigram models. Drawbacks: • In some cases a window of past consecutive words may not be sufficient • Fails to capture context: “Jane and John met in the market. … They started fighting with each other.” Who started fighting? • Memory intensive

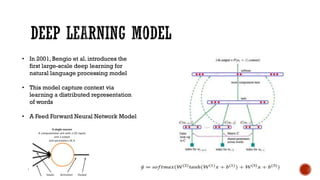
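A bigram model approximates each conditional probability by conditioning only on the previous word, P(w_i | w_{i-1}), estimated from counts. Below is a minimal maximum-likelihood sketch on a hypothetical three-sentence corpus (not data from the talk), with `<s>` and `</s>` as assumed sentence-boundary markers.

```python
from collections import Counter

# Hypothetical toy corpus, for illustration only.
corpus = [
    "the world is small",
    "the world is big",
    "the sky is blue",
]

def tokens(sentence):
    return ["<s>"] + sentence.lower().split() + ["</s>"]

history_counts = Counter()   # how often each word appears as the previous word
bigram_counts = Counter()    # how often each (previous, next) pair appears
for s in corpus:
    toks = tokens(s)
    history_counts.update(toks[:-1])
    bigram_counts.update(zip(toks[:-1], toks[1:]))

def bigram_prob(sentence):
    """MLE bigram probability: product of count(prev, word) / count(prev)."""
    p = 1.0
    toks = tokens(sentence)
    for prev, word in zip(toks[:-1], toks[1:]):
        if history_counts[prev] == 0:
            return 0.0
        p *= bigram_counts[(prev, word)] / history_counts[prev]
    return p

print(bigram_prob("The world is small"))
print(bigram_prob("small is the world"))
```

The reordered sentence gets probability 0 because the pair (`<s>`, `small`) never occurs in the toy corpus. This sparsity is one reason practical n-gram models add smoothing, and storing counts for every observed n-gram is what makes them memory intensive.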

- 10. • In 2001, Bengio et al. introduced the first large-scale deep learning model for natural language processing • The model captures context by learning a distributed representation of words • A feed-forward neural network model

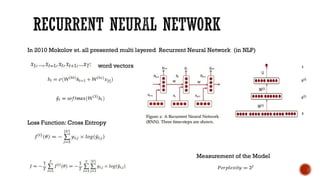
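Here is a heavily simplified forward pass in the spirit of Bengio et al.'s feed-forward model: look up a learned vector for each previous word, concatenate the vectors, pass them through a tanh hidden layer, and apply a softmax over the vocabulary. The sizes and random weights are hypothetical, and the original model's direct input-to-output connections are omitted.

```python
import numpy as np

rng = np.random.default_rng(0)
V, d, n_context, H = 10, 4, 3, 8   # hypothetical: vocab size, embedding size, context words, hidden units

C = rng.normal(scale=0.1, size=(V, d))               # word-vector table shared by all positions
W_h = rng.normal(scale=0.1, size=(n_context * d, H))
b_h = np.zeros(H)
W_o = rng.normal(scale=0.1, size=(H, V))
b_o = np.zeros(V)

def softmax(z):
    z = z - z.max()            # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

def next_word_probs(context_ids):
    x = C[context_ids].reshape(-1)     # look up and concatenate the context word vectors
    h = np.tanh(x @ W_h + b_h)         # hidden layer
    return softmax(h @ W_o + b_o)      # probability distribution over the next word

probs = next_word_probs([1, 5, 7])
print(probs.argmax(), probs.max())
```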

- 11. • Word vectors • Loss function: cross entropy • Measurement of the model • In 2010, Mikolov et al. presented a multi-layered recurrent neural network (RNN) for NLP

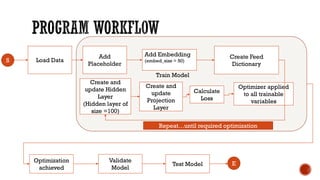
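A minimal NumPy sketch of a simple recurrent language model scored with cross entropy. At each step the hidden state is updated from the current word vector and the previous hidden state, and the step is penalized by the negative log-probability of the actual next word. The tanh activation, sizes, and random weights are assumptions for illustration, not details of Mikolov et al.'s setup.

```python
import numpy as np

def softmax(z):
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()

def rnn_cross_entropy(token_ids, E, W_xh, W_hh, W_hy):
    """Mean cross-entropy of predicting token_ids[t + 1] from token_ids[: t + 1]."""
    h = np.zeros(W_hh.shape[0])
    losses = []
    for current, target in zip(token_ids[:-1], token_ids[1:]):
        x = E[current]                          # vector for the current word
        h = np.tanh(x @ W_xh + h @ W_hh)        # hidden state carries context forward
        p = softmax(h @ W_hy)                   # predicted next-word distribution
        losses.append(-np.log(p[target]))       # cross entropy for this step
    return float(np.mean(losses))

rng = np.random.default_rng(0)
V, d, H = 10, 4, 8                              # hypothetical sizes
E = rng.normal(scale=0.1, size=(V, d))
W_xh = rng.normal(scale=0.1, size=(d, H))
W_hh = rng.normal(scale=0.1, size=(H, H))
W_hy = rng.normal(scale=0.1, size=(H, V))

loss = rnn_cross_entropy([1, 4, 2, 7, 3], E, W_xh, W_hh, W_hy)
print(loss)
```

With all output weights set to zero the prediction is uniform, so the loss equals log(V); training should push it below that value.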

- 12. Workflow (flowchart): Load data → Add placeholders → Create feed dictionary → Add embedding (embed_size = 50) → Create and update hidden layer (hidden layer size = 100) → Create and update projection layer → Calculate loss → Apply optimizer to all trainable variables → Repeat until the required optimization is achieved (Train model) → Validate model → Test model
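The workflow above can be sketched end to end in NumPy. This is not the talk's code. The toy data (predict the next word id from the previous one), vocabulary size, learning rate, and epoch count are hypothetical; only embed_size = 50 and the hidden size of 100 come from the slide. The gradients are written out by hand, which is the work a TensorFlow optimizer does automatically for every trainable variable. In TensorFlow 1.x, `inputs` and `targets` would be fed into placeholders through a feed dictionary.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy data: each input word id should predict the next word id.
V = 6                               # hypothetical vocabulary size
embed_size, hidden_size = 50, 100   # sizes quoted on the slide
inputs = np.array([0, 1, 2, 3, 4, 5])
targets = np.array([1, 2, 3, 4, 5, 0])

# All trainable variables: embedding, hidden layer, projection layer.
params = {
    "E": rng.normal(scale=0.1, size=(V, embed_size)),
    "W1": rng.normal(scale=0.1, size=(embed_size, hidden_size)),
    "b1": np.zeros(hidden_size),
    "W2": rng.normal(scale=0.1, size=(hidden_size, V)),
    "b2": np.zeros(V),
}

def forward_backward(params, x, y):
    """Return the mean cross-entropy loss and its gradient for every parameter."""
    n = len(y)
    e = params["E"][x]                                   # embedding lookup, (n, embed_size)
    h = np.tanh(e @ params["W1"] + params["b1"])         # hidden layer, (n, hidden_size)
    logits = h @ params["W2"] + params["b2"]             # projection layer, (n, V)
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    p = np.exp(logits)
    p /= p.sum(axis=1, keepdims=True)                    # softmax probabilities
    loss = -np.mean(np.log(p[np.arange(n), y]))          # mean cross entropy

    # Backpropagation, layer by layer.
    d_logits = p.copy()
    d_logits[np.arange(n), y] -= 1.0
    d_logits /= n
    grads = {"W2": h.T @ d_logits, "b2": d_logits.sum(axis=0)}
    d_h = (d_logits @ params["W2"].T) * (1.0 - h ** 2)   # derivative of tanh
    grads["W1"] = e.T @ d_h
    grads["b1"] = d_h.sum(axis=0)
    d_e = d_h @ params["W1"].T
    grads["E"] = np.zeros_like(params["E"])
    np.add.at(grads["E"], x, d_e)                        # accumulate gradients per word id
    return loss, grads

learning_rate = 0.5
history = []
for epoch in range(200):       # a fixed epoch count stands in for "repeat until optimized"
    loss, grads = forward_backward(params, inputs, targets)
    for name in params:        # apply the update to all trainable variables
        params[name] -= learning_rate * grads[name]
    history.append(loss)

print(f"loss: {history[0]:.3f} -> {history[-1]:.3f}")
```

Validation and testing would reuse `forward_backward` for the loss only, on held-out data, without applying the updates.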

- 13. • Problem definition • Test 1: trained on 20 sections of the Wall Street Journal (WSJ) data in the Penn Treebank • Test 2: trained on Harry Potter and the Deathly Hallows

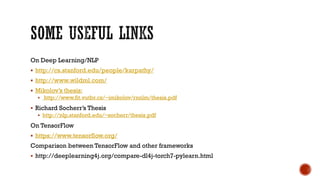

- 16. On deep learning/NLP: • http://cs.stanford.edu/people/karpathy/ • http://www.wildml.com/ • Mikolov’s thesis: http://www.fit.vutbr.cz/~imikolov/rnnlm/thesis.pdf • Richard Socher’s thesis: http://nlp.stanford.edu/~socherr/thesis.pdf • On TensorFlow: https://www.tensorflow.org/ • Comparison between TensorFlow and other frameworks: http://deeplearning4j.org/compare-dl4j-torch7-pylearn.html

- 17. Q&A