The Five Tribes of Machine Learning Explainers

- 1. The Five Tribes of ML Explainers (and what you can learn from each), Michał Łopuszyński, PyData Berlin, 07.07.2018

- 3. Intro: Looking at performance metrics for your model is great, but... why? Why does your model predict what it predicts?

- 5. The Featurists. Idea: Find the features that are important to your model. Feature importance: importances built into models (RF, ExtraTrees, boosted trees, linear models); model-agnostic feature importance (e.g. permutation importance from ELI5). Feature selection: filters (e.g. filtering the most correlated features); wrappers (e.g. forward/backward selection); embedded methods (e.g. lasso). See also: Model Class Reliance: Variable Importance Measures for any Machine Learning Model Class, from the "Rashomon" Perspective (2018).
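The permutation-importance idea can be sketched from scratch: shuffle one column at a time and measure how much the score drops. This is a minimal illustration, not ELI5's implementation; `permutation_importance`, `r2` and `model_predict` are hypothetical names, and the "model" is a known function standing in for a fitted black box.

```python
import numpy as np


def permutation_importance(predict, X, y, metric, n_repeats=5, seed=0):
    """Mean drop in `metric` when each column of X is shuffled independently."""
    rng = np.random.default_rng(seed)
    baseline = metric(y, predict(X))
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        drops = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            # Shuffle column j only, breaking its link to the target
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            drops.append(baseline - metric(y, predict(X_perm)))
        importances[j] = np.mean(drops)
    return importances


def r2(y_true, y_pred):
    """Coefficient of determination."""
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return 1.0 - ss_res / ss_tot


# Hypothetical fitted model: uses x0 strongly, x1 weakly, ignores x2
def model_predict(X):
    return 3.0 * X[:, 0] + 0.5 * X[:, 1]


rng = np.random.default_rng(42)
X = rng.normal(size=(500, 3))
y = 3.0 * X[:, 0] + 0.5 * X[:, 1]
imp = permutation_importance(model_predict, X, y, r2)
print(imp)  # x0 >> x1 > x2; x2 is exactly 0 because the model ignores it
```

Because only the model's predictions are used, the same function works for any estimator that exposes a predict-like callable, which is what makes the approach model agnostic.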

- 7. The Speculators. Idea: Check how your model responds to a change in one variable. Answer 1: partial dependence plots. Example from the free book Interpretable ML by Christoph Molnar.

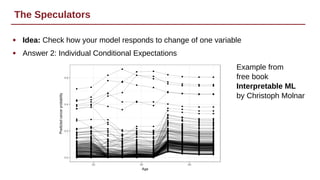
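A partial dependence curve can be sketched in a few lines: force the chosen feature to each grid value for every row and average the model's predictions. The function and the toy model below are hypothetical, chosen to contain an interaction term.

```python
import numpy as np


def partial_dependence(predict, X, feature, grid):
    """Average prediction when `feature` is set to each grid value for all rows."""
    values = []
    for v in grid:
        X_mod = X.copy()
        X_mod[:, feature] = v  # every row gets the same value for this feature
        values.append(predict(X_mod).mean())
    return np.array(values)


# Hypothetical black box with an x0 * x1 interaction
def model_predict(X):
    return X[:, 0] ** 2 + X[:, 0] * X[:, 1]


rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 2))
grid = np.linspace(-2.0, 2.0, 9)
pdp = partial_dependence(model_predict, X, feature=0, grid=grid)
print(np.round(pdp, 2))
```

Since x1 has a mean close to zero in this sample, the curve comes out close to x0 squared: the interaction averages away, which is exactly the kind of information a single averaged curve can hide.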

- 8. The Speculators. Idea: Check how your model responds to a change in one variable. Answer 2: individual conditional expectation (ICE) plots. Example from the free book Interpretable ML by Christoph Molnar.
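ICE keeps one curve per instance instead of averaging them. A minimal sketch, assuming a hypothetical model in which the effect of x0 flips sign depending on x1; the per-instance curves reveal this, while their average (the partial dependence curve) is flat.

```python
import numpy as np


def ice_curves(predict, X, feature, grid):
    """One curve per instance: prediction as `feature` sweeps over `grid`."""
    curves = np.empty((X.shape[0], len(grid)))
    for k, value in enumerate(grid):
        X_mod = X.copy()
        X_mod[:, feature] = value
        curves[:, k] = predict(X_mod)
    return curves


# Hypothetical black box: the effect of x0 flips sign depending on x1
def model_predict(X):
    return X[:, 0] * np.sign(X[:, 1])


X = np.array([[0.0, 1.0], [0.0, -1.0], [0.0, 2.0], [0.0, -2.0]])
grid = np.array([-1.0, 0.0, 1.0])
curves = ice_curves(model_predict, X, feature=0, grid=grid)
print(curves)              # rising lines for x1 > 0, falling lines for x1 < 0
print(curves.mean(axis=0)) # averaging the ICE curves gives the flat PDP
```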

- 10. The Localizers. Idea: Fit an interpretable model that is locally correct. Simple model = linear: LIME (Why Should I Trust You? Explaining the Predictions of Any Classifier, Ribeiro, Singh, Guestrin). Simple model = rules: Anchors (Anchors: High-Precision Model-Agnostic Explanations, Ribeiro, Singh, Guestrin).
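The "simple model = linear" case can be sketched as a weighted linear fit around one instance: perturb the point, query the black box, weight samples by proximity, and solve weighted least squares. This is a simplified from-scratch illustration in the spirit of LIME, not the `lime` package; the Gaussian perturbation, the exponential kernel and all names and parameter values are assumptions.

```python
import numpy as np


def local_linear_explanation(predict, x, n_samples=2000, scale=0.5,
                             kernel_width=0.75, seed=0):
    """LIME-style sketch: weighted linear surrogate of `predict` around x."""
    rng = np.random.default_rng(seed)
    # 1. Perturb the instance with Gaussian noise (assumed sampling scheme)
    Z = x + rng.normal(scale=scale, size=(n_samples, x.size))
    y = predict(Z)
    # 2. Weight samples by proximity to x (exponential kernel on distance)
    d = np.linalg.norm(Z - x, axis=1)
    w = np.exp(-(d ** 2) / kernel_width ** 2)
    # 3. Weighted least squares on [1, z]
    A = np.hstack([np.ones((n_samples, 1)), Z])
    sw = np.sqrt(w)
    coef, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return coef[0], coef[1:]  # intercept, local feature weights


# Hypothetical black box
def model_predict(Z):
    return np.sin(Z[:, 0]) + Z[:, 1] ** 2


x = np.array([0.0, 1.0])
intercept, weights = local_linear_explanation(model_predict, x)
print(weights)  # roughly the local gradient (cos(0), 2 * 1) = (1, 2)
```

The surrogate's weights are only meaningful near x; the kernel width controls how "local" the explanation is.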

- 11. The Convoluters

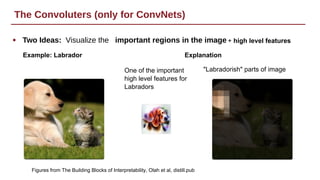

- 12. The Convoluters (only for ConvNets). Two ideas: visualize the important regions of the image, and visualize the important high-level features. Example (Labrador): one of the important high-level features for Labradors; high-level features + "Labradorish" parts of the image = explanation. Figures from The Building Blocks of Interpretability, Olah et al., distill.pub.
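The figures on this slide come from the attribution methods discussed by Olah et al. As a simpler, swapped-in way to "visualize the important regions in the image", here is occlusion sensitivity: mask one patch at a time and record how much the class score drops. A minimal sketch, assuming a 2-D grayscale array and a hypothetical `score` callable standing in for a ConvNet's class output.

```python
import numpy as np


def occlusion_map(score, image, patch=2, fill=0.0):
    """Drop in class score when each patch of the image is masked out."""
    h, w = image.shape
    base = score(image)
    heat = np.zeros((h, w))
    for i in range(0, h, patch):
        for j in range(0, w, patch):
            occluded = image.copy()
            occluded[i:i + patch, j:j + patch] = fill
            # Large values mark regions the score depends on
            heat[i:i + patch, j:j + patch] = base - score(occluded)
    return heat


# Hypothetical "classifier" that only looks at the top-left 2x2 corner
def toy_score(img):
    return img[:2, :2].sum()


image = np.ones((6, 6))
heat = occlusion_map(toy_score, image)
print(heat)  # nonzero only in the top-left 2x2 block
```

Occlusion needs only forward passes, so it works on any image model, at the cost of one evaluation per patch.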

- 13. The Trainalyzers

- 14. The Trainalyzers. Idea: Which training examples contributed most to a given prediction? Sample approach: influence functions (Understanding Black-box Predictions via Influence Functions, P. W. Koh, P. Liang).
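For a small model the influence of a training point z_i on the loss at a test point is $\mathcal{I}_{\text{up,loss}}(z_i, z_{\text{test}}) = -\nabla_\theta L(z_{\text{test}})^\top H^{-1} \nabla_\theta L(z_i)$, and the Hessian $H$ can be inverted directly. A minimal sketch, assuming ridge regression with squared loss (chosen because its Hessian is explicit); the function name, the data and the mislabelled point are hypothetical. For large networks an explicit inverse is not feasible and approximations are needed.

```python
import numpy as np


def influence_on_test_loss(X, y, x_test, y_test, lam=1e-2):
    """I_up,loss(z_i, z_test) for every training point of a ridge regression.

    Objective: (1/n) * sum_i 0.5 * (x_i . theta - y_i)**2 + lam/2 * ||theta||**2
    """
    n, d = X.shape
    H = X.T @ X / n + lam * np.eye(d)            # Hessian of the objective
    theta = np.linalg.solve(H, X.T @ y / n)      # exact minimizer
    grads = (X @ theta - y)[:, None] * X         # per-example loss gradients
    g_test = (x_test @ theta - y_test) * x_test  # gradient of the test loss
    # I_up,loss = -g_test^T H^{-1} grad_i  (H is symmetric)
    return -grads @ np.linalg.solve(H, g_test), theta


rng = np.random.default_rng(1)
w_true = np.array([1.0, -2.0])
X = rng.normal(size=(50, 2))
X[0] = [2.0, 2.0]
y = X @ w_true + 0.1 * rng.normal(size=50)
y[0] += 10.0  # hypothetical mislabelled training point
x_test = np.array([2.0, 2.0])
y_test = x_test @ w_true

infl, theta = influence_on_test_loss(X, y, x_test, y_test)
# Removing z_i means upweighting it by eps = -1/n, so the predicted
# change in test loss is -infl / n
predicted_change = -infl / len(y)
print(np.argmin(predicted_change))  # the mislabelled point should rank first
```

The estimate avoids retraining the model once per training point, which is the main practical appeal of influence functions.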

- 15. There is a lot more! I collect links to interesting papers & software: @lopusz, github.com/lopusz/awesome-interpretable-machine-learning