Titanic survivor prediction by machine learning

- 1. Titanic Survivor Prediction by Machine Learning. Ding Li, 2018.05. Online store: costumejewelry1.com

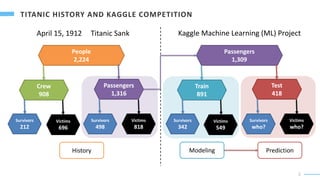

- 2. April 15, 1912: Titanic sank
    - History: 2,224 people on board
        - Crew: 908 (survivors 212, victims 696)
        - Passengers: 1,316 (survivors 498, victims 818)
    - Kaggle Machine Learning (ML) project: 1,309 passengers
        - Train: 891 (survivors 342, victims 549), used for modeling
        - Test: 418 (survivors who? victims who?), used for prediction

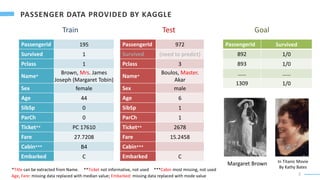

- 3. Train and test examples, and the goal

    | Field | Train example | Test example |
    |---|---|---|
    | PassengerId | 195 | 972 |
    | Survived | 1 | (need to predict) |
    | Pclass | 1 | 3 |
    | Name\* | Brown, Mrs. James Joseph (Margaret Tobin) | Boulos, Master. Akar |
    | Sex | female | male |
    | Age | 44 | 6 |
    | SibSp | 0 | 1 |
    | ParCh | 0 | 1 |
    | Ticket\*\* | PC 17610 | 2678 |
    | Fare | 27.7208 | 15.2458 |
    | Cabin\*\*\* | B4 | |
    | Embarked | C | C |

    - The train example is Margaret Brown, played by Kathy Bates in the Titanic movie.
    - \* Title can be extracted from Name.
    - \*\* Ticket is not informative and is not used.
    - \*\*\* Cabin is mostly missing and is not used.
    - Age, Fare: missing data replaced with the median value. Embarked: missing data replaced with the mode value.
    - Goal: predict Survived for every test passenger.

    | PassengerId | Survived |
    |---|---|
    | 892 | 1/0 |
    | 893 | 1/0 |
    | ... | ... |
    | 1309 | 1/0 |
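
The preprocessing notes on this slide (title extracted from Name, median for missing Age and Fare, mode for missing Embarked) might look roughly like the following in pandas. This is a minimal sketch, not the author's code: the regular expression is one plausible way to pull out the title, and the third row ("Doe, Mr. John") is a hypothetical passenger added only so that there is something to impute.

```python
import pandas as pd

# Two rows come from the slide; the third is a hypothetical passenger
# with missing values, added only to demonstrate the imputation.
df = pd.DataFrame({
    "Name": [
        "Brown, Mrs. James Joseph (Margaret Tobin)",
        "Boulos, Master. Akar",
        "Doe, Mr. John",  # hypothetical
    ],
    "Age": [44.0, 6.0, None],
    "Fare": [27.7208, 15.2458, None],
    "Embarked": ["C", "C", None],
})

# Title is the text between the comma and the first period in Name.
df["Title"] = df["Name"].str.extract(r",\s*([^.]+)\.", expand=False).str.strip()

# Numeric gaps are filled with the column median.
df["Age"] = df["Age"].fillna(df["Age"].median())
df["Fare"] = df["Fare"].fillna(df["Fare"].median())

# Categorical gaps are filled with the most frequent value (the mode).
df["Embarked"] = df["Embarked"].fillna(df["Embarked"].mode()[0])

print(df[["Title", "Age", "Fare", "Embarked"]])
```

In a real run the median and mode would be computed on the Kaggle training data rather than on a toy frame like this one.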

- 4. Field definitions and derived features
    - Embarked from: S = Southampton, C = Cherbourg, Q = Queenstown
    - SibSp: number of siblings or spouses
    - ParCh: number of parents or children
    - Family Size = SibSp + ParCh + 1
    - Is Alone = 1 if Family Size = 1; 0 if Family Size > 1
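
The two derived features on this slide can be sketched in pandas as below, assuming Family Size is SibSp + ParCh + 1 (the passenger plus relatives on board). The column name `Parch` follows the Kaggle CSV spelling, and the two rows reuse the SibSp and ParCh values of the train and test examples shown earlier.

```python
import pandas as pd

# SibSp / Parch values of the two example passengers (Brown, Boulos).
df = pd.DataFrame({"SibSp": [0, 1], "Parch": [0, 1]})

# Family size counts the passenger plus siblings/spouses and parents/children.
df["FamilySize"] = df["SibSp"] + df["Parch"] + 1

# IsAlone is 1 when the passenger travels without family, else 0.
df["IsAlone"] = (df["FamilySize"] == 1).astype(int)

print(df)
```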

- 5. 5

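- 6. Correlations
    - Survived vs. Sex: -0.54
    - Survived vs. P Class: -0.34
    - Survived vs. Fare bin: 0.3
    - Survived vs. Embarked: -0.17
    - Fare bin vs. P Class: -0.63
    - Fare bin vs. Family Size: 0.47
    - Age bin vs. P Class: -0.36
    - Age bin vs. Title code: 0.32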

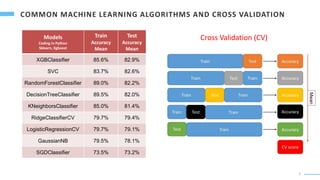

- 7. Models, coded in Python with Sklearn and Xgboost, compared by cross validation (CV)

    | Model | Train Accuracy Mean | Test Accuracy Mean |
    |---|---|---|
    | XGBClassifier | 85.6% | 82.9% |
    | SVC | 83.7% | 82.6% |
    | RandomForestClassifier | 89.0% | 82.2% |
    | DecisionTreeClassifier | 89.5% | 82.0% |
    | KNeighborsClassifier | 85.0% | 81.4% |
    | RidgeClassifierCV | 79.7% | 79.4% |
    | LogisticRegressionCV | 79.7% | 79.1% |
    | GaussianNB | 79.5% | 78.1% |
    | SGDClassifier | 73.5% | 73.2% |
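
A comparison of mean train and test accuracy like the one above could be produced with scikit-learn's `cross_validate`. The sketch below is only an illustration under stated assumptions: it uses synthetic data from `make_classification` as a stand-in for the prepared Titanic features, 5-fold CV rather than whatever split the author used, and three of the listed model families (with plain `LogisticRegression` in place of `LogisticRegressionCV` to keep it fast).

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_validate
from sklearn.naive_bayes import GaussianNB
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the prepared Titanic features (an assumption).
X, y = make_classification(n_samples=300, n_features=8, random_state=0)

models = {
    "DecisionTreeClassifier": DecisionTreeClassifier(random_state=0),
    "LogisticRegression": LogisticRegression(max_iter=1000),
    "GaussianNB": GaussianNB(),
}

results = {}
for name, model in models.items():
    # return_train_score=True also reports accuracy on the training folds.
    scores = cross_validate(
        model, X, y, cv=5, scoring="accuracy", return_train_score=True
    )
    results[name] = (scores["train_score"].mean(), scores["test_score"].mean())

# Sort by mean test accuracy, best first.
for name, (train_acc, test_acc) in sorted(
    results.items(), key=lambda kv: kv[1][1], reverse=True
):
    print(f"{name:<24} train={train_acc:.1%}  test={test_acc:.1%}")
```

A large gap between the train and test columns, as with the unrestricted decision tree, is the usual sign of overfitting.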

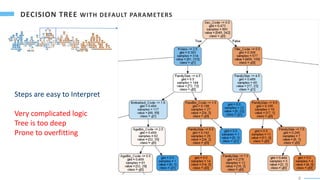

- 8. Steps are easy to interpret, but the logic is very complicated: the tree is too deep and prone to overfitting.

- 9. Hand-made models on the training set (Train 891)
    - No split
        - Observation: survivors 342 (38%), victims 549 (62%)
        - Model: predict all 891 as victims
        - Accuracy: 549 / 891 = 62%
    - Split by sex
        - Observation: male 577 (65%), of whom survivors 109 (19%) and victims 468 (81%); female 314 (35%), of whom survivors 234 (74%) and victims 80 (26%)
        - Model: predict all 577 males as victims and all 314 females as survivors
        - Accuracy: (468 + 234) / 891 = 702 / 891 = 79%
    - With one more layer, a hand-made tree can reach 82% accuracy
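
The accuracy arithmetic for the two hand-made rules can be checked in a few lines of plain Python. The counts are copied from the slide; the variable names are only illustrative.

```python
# Counts as given on the slide (training set).
total = 891
victims_total = 549
male = {"survived": 109, "died": 468}
female = {"survived": 234, "died": 80}

# Rule 1: predict that every passenger died.
acc_all_victims = victims_total / total

# Rule 2: predict that every male died and every female survived.
acc_by_sex = (male["died"] + female["survived"]) / total

print(f"all victims: {acc_all_victims:.0%}")  # all victims: 62%
print(f"split by sex: {acc_by_sex:.0%}")      # split by sex: 79%
```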

- 10. Tuning to alleviate the overfitting
    - Before tuning: training score = 89.5%, test score = 82.05%
    - After tuning (best max_depth = 4): training score = 89.4%, test score = 87.4%
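
A search for the best `max_depth` could be run with scikit-learn's `GridSearchCV`, as in the sketch below. It is an illustration only: the data is synthetic (`make_classification` with some label noise), and the candidate depths and 5-fold CV are assumptions rather than the author's settings, so the selected depth will not necessarily be 4.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.tree import DecisionTreeClassifier

# Synthetic, slightly noisy stand-in for the Titanic features (an assumption).
X, y = make_classification(
    n_samples=300, n_features=8, flip_y=0.1, random_state=0
)

# Candidate depths; None means the tree grows until its leaves are pure.
param_grid = {"max_depth": [2, 3, 4, 6, 8, None]}

search = GridSearchCV(
    DecisionTreeClassifier(random_state=0),
    param_grid,
    cv=5,
    scoring="accuracy",
    return_train_score=True,
)
search.fit(X, y)

best = search.best_index_
print("best max_depth:", search.best_params_["max_depth"])
print("mean train accuracy:", search.cv_results_["mean_train_score"][best])
print("mean test accuracy:", search.cv_results_["mean_test_score"][best])
```

Limiting the depth trades a little training accuracy for a model that generalizes better to held-out folds.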

- 11. Summary
    - Kaggle is a convenient platform to study and practice machine learning.
    - Python code can be executed directly on the host server from the browser.
    - Numerous datasets are provided on the site, including training and test data.
    - Once the prediction file is submitted, a score is returned to evaluate your model.
    - Many developers share runnable code with detailed explanations.
    - Applying artificial intelligence blindly, without human intelligence, is dangerous.
    - Some ML models can be too complicated, leading to overfitting.
    - Some ML models can perform worse than a simple hand-made model.
    - Combining AI and human logic can make the analytical process enjoyable and reliable.
    - Python code of the project at Kaggle: https://www.kaggle.com/dingli/titanic-survivor-prediction-machine-learning