Topic Extraction using Machine Learning

- 1. Topic Extraction using Machine Learning Sanjib Basak Director of Data Science, Digital River Jan,2016 Twin cities Big Data Analytics and Apache Spark user group meet up

- 2. Agenda ? History of Topic Models ? A Use Case ? Demo using R ? Demo using Spark ? Conclusion

- 3. History ofTopic Modeling ? TF-IDF model (Salton and McGill, 1983) ? A basic vocabulary of ˇ°wordsˇ± or ˇ°termsˇ± is chosen, and, for each document in the corpus, a count is formed of the number of occurrences of each word. (TF) ? After suitable normalization, this term frequency count is compared to an Inverse Document Frequency (IDF) count, which measures the number of occurrences of a word in the entire corpus. ? Not a generative model TF-IDF

- 4. History ofTopic Modeling ? To address the shortcomings ofTF-IDF Deerwester et al. 1990 came up with LSI(Latent Semantic Indexing) model. ? LSI uses a singular value decomposition of term document matrix to identify a linear subspace in the space ofTF-IDF features that captures most of the variance in the collection ? They claim that the model can capture some aspects of basic linguistic notions such as synonymy and polysemy ? Still not a useful model to capture distribution of words LSI

- 5. PLSI ? Hofmann (1999), presented the Probabilistic Latent Semantic Analysis (pLSI) model, also known as the aspect model, as an alternative to LSI. ? Models each word in a document as a sample from a mixture model, where the mixture components are multinomial random variables that can be viewed as representations of ˇ°topics.ˇ± ? The model is still incomplete ? Not a probabilistic model at the level of documents ? Each document is represented as a list of numbers (the mixing proportions for topics) History ofTopic Modeling

- 6. ? De Finetti (1990) establishes that any collection of exchangeable random variables has a representation as a mixture distributionˇŞin general an infinite mixture.. This line of thinking leads to the latent Dirichlet allocation (LDA) model ? Blei, Ng and Jordon 2003 explained LDA ? Hierarchical Bayesian Model - Each item or word is modeled as a finite mixture over an underlying set of topics. Each topic is, in turn, modeled as an infinite mixture over an underlying set of topic probabilities. LDA History ofTopic Modeling Taken fromWikipedia

- 7. LDA ? The original paper used a variational Bayes approximation of the posterior distribution ? Alternative inference techniques use Gibbs sampling, Expectation Maximization Algorithm, OnlineVariation and many more.

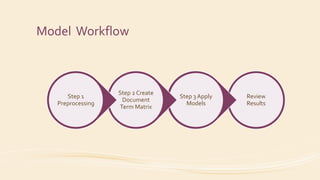

- 8. Model Workflow Review Results Step 3 Apply Models Step 2 Create Document Term Matrix Step 1 Preprocessing

- 9. K-Means ? Choose number of clusters (K) ? Initialize the clusters. Make one observation as centroid ? Determine observations that are closest to the centroid and assign them part of the cluster ? Revise the cluster center as mean of the assigned observation ? Repeat above steps until convergence

- 10. Demo in R ? Use Case ? Model with K-Means ? Model with LDA and visualization ? Github Code Location - https://github.com/sanjibb/R-Code

- 11. K-Means Result

- 12. Experimentation with Spark MLLib ? Work with dataset and in Scala ? 2 variations of optimization model ¨C ? EMVariation Optimizer ? online variational inference - http://www.cs.columbia.edu/~blei/papers/WangPaisleyBlei2011.pdf Github code Location ? https://github.com/sanjibb/spark_example

- 13. Conclusion 1. LDA provides mixture of topics on the words vs K-Means provides distinct topics 1. In real-life topics may not be distinctively separated 2. Unsupervised LDA model may require to work with SMEs to get better representation of topics 1. There is a supervised LDA model (sLDA) as well, which I have not covered in this presentation)

Editor's Notes

- Thus, if we wish to consider exchangeable representations for documents and words, we need to consider mixture models that capture the exchangeability of both words and documents