Traveling salesman problem

- 1. Tackling the travelling salesman problem Using Hill Climbing search algorithm

- 2. Agenda •What is the TSP problem ? •stochastic optimization •hill-climbing

- 3. What is the TSP problem ? The traveling salesman problem (TSP) asks for the shortest route to visit a collection of cities and return to the starting point. Despite an intensive study by mathematicians, computer scientists, operations researchers, and others, over the past 50 years, it remains an open question whether or not an efficient general solution method exists.

- 4. TSP is an NP-Hard Problem That does not necessarily mean any one instance of the problem will be hard to solve, it just means that we do not currently have an algorithm that can give us the guaranteed best solution for all problems in “polynomial time”.

- 5. Stochastic optimization Stochastic optimization (SO) methods are optimization methods that generate and use random variables. For stochastic problems, the random variables appear in the formulation of the optimization problem itself, which involve random objective functions or random constraints, for example. Stochastic optimization methods also include methods with random iterates.

- 6. We take randomly generated routes through the cities and incrementally improve/change them in some fashion to search for a better route. How these changes are made depends on the algorithm being used, but there are a couple of simple approaches we can take, that I will outline here: • swapping the position of two cities on a route • reversing the order of an entire section of a route

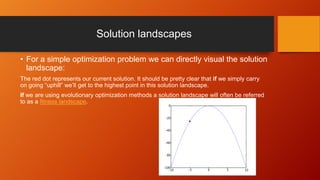

- 8. Solution landscapes • A common way to visualize searching for solutions in an optimization problem, such as the TSP, is to think of the solutions existing within a “landscape”. Better solutions exist higher up and we can take a step from one solution to another in search of better solutions. How we make steps will depend on the “move operators” we have available and will therefore also affect how the landscape “looks”. It will change which solutions are “adjacent” to each other.

- 9. Solution landscapes • For a simple optimization problem we can directly visual the solution landscape: The red dot represents our current solution. It should be pretty clear that if we simply carry on going “uphill” we’ll get to the highest point in this solution landscape. If we are using evolutionary optimization methods a solution landscape will often be referred to as a fitness landscape.

- 10. Hill-climbing • Hill-climbing, pretty much the simplest of the stochastic optimisation methods, works like this: • pick a place to start • take any step that goes “uphill” • if there are no more uphill steps, stop; otherwise carry on taking uphill steps • Metaphorically the algorithm climbs up a hill one step at a time. It is a “greedy” algorithm and only ever takes steps that take it uphill (though it can be adapted to behave differently). This means that it is pretty quick to get to the top of a hill

- 11. Hill-climbing As you can see our current solution (the red dot) can only go downhill from it’s current position – yet it is not at the highest point in the solution landscape.

- 12. Hill-climbing The “biggest” hill in the solution landscape is known as the global maximum. The top of any other hill is known as a local maximum (it’s the highest point in the local area). Standard hill-climbing will tend to get stuck at the top of a local maximum, so we can modify our algorithm to restart the hill-climb if need be. This will help hill-climbing find better hills to climb – though it’s still a random search of the initial starting points.

- 13. Thanks