Unsupervised sentence-embeddings by manifold approximation and projection

- 1. Unsupervised Sentence-embeddings by Manifold Approximation and Projection Deep Kayal deep.kayal@pm.me

- 2. Setting the tone: Modern NLP systems are increasingly powered by transfer learning.

- 4. Setting the tone: Often, however, the downstream task is not known a priori, or adaptation is not possible, for example in search.

- 9. Setting the tone: In these cases we need universal sentence encoders. A pretrained model maps each sentence to a vector: "Who are you?" → [0.2 0.3 -0.01 0.4...], "Where is this?" → [0.8 0.1 -0.5 0.4...], "This is Amsterdam." → [0.5 0.9 0.9 0.3 ...], ...

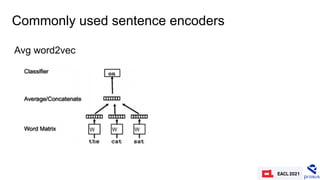

- 10. Commonly used sentence encoders: averaged word2vec
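
As a rough illustration of the averaging baseline named on this slide, the sketch below averages per-word vectors into one sentence vector. The three-dimensional vectors in `TOY_VECTORS` are made-up stand-ins for real word2vec vectors, and the whitespace tokenizer and the function name `average_embedding` are assumptions for the example, not something taken from the talk.

```python
from typing import Dict, List

# Hypothetical 3-dimensional word vectors standing in for real word2vec output.
TOY_VECTORS: Dict[str, List[float]] = {
    "this": [0.1, 0.3, 0.5],
    "is": [0.2, 0.0, 0.4],
    "amsterdam": [0.9, 0.6, 0.0],
}


def average_embedding(sentence: str, vectors: Dict[str, List[float]]) -> List[float]:
    """Average the vectors of all known words in a whitespace-tokenized sentence."""
    tokens = [token.strip(".,?!").lower() for token in sentence.split()]
    known = [vectors[token] for token in tokens if token in vectors]
    if not known:
        raise ValueError("no known words in sentence")
    dim = len(known[0])
    # Component-wise mean over the word vectors.
    return [sum(vec[i] for vec in known) / len(known) for i in range(dim)]


sentence_vector = average_embedding("This is Amsterdam.", TOY_VECTORS)
```

Every sentence ends up with the same dimensionality regardless of its length, which is what makes this baseline convenient, at the cost of discarding word order.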

- 12. Commonly used sentence encoders: Doc2vec

- 13. Commonly used sentence encoders: Sentence-BERT (BERT fine-tuned on the SNLI dataset)

- 14. Related work: Word Mover's Distance, Matt Kusner et al.

- 15. Related work: Word Mover's Embedding, Lingfei Wu et al.

- 18. Contributions of this work. Observation: Word Mover's Distance (WMD) is one of many ways to compute the distance between sets of words. Contribution 1: Test and compare common set-distance metrics: WMD, Hausdorff distance, and energy distance.
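
To make the idea of a set distance concrete, here is a minimal sketch of two of the metrics listed above, treating each sentence as a set of word vectors. It assumes Euclidean distance between word vectors and the standard textbook definitions of the Hausdorff and energy distances; the slides do not say which exact variants or ground metric were used, so the function names and choices here are illustrative only.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def euclidean(a: Vector, b: Vector) -> float:
    """Euclidean distance between two word vectors (assumed ground metric)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def hausdorff_distance(A: Sequence[Vector], B: Sequence[Vector]) -> float:
    """Largest distance from any point in one set to its nearest point in the other."""
    def directed(S: Sequence[Vector], T: Sequence[Vector]) -> float:
        return max(min(euclidean(s, t) for t in T) for s in S)
    return max(directed(A, B), directed(B, A))


def mean_pairwise(S: Sequence[Vector], T: Sequence[Vector]) -> float:
    """Mean distance over all pairs (s, t), including identical pairs."""
    return sum(euclidean(s, t) for s in S for t in T) / (len(S) * len(T))


def energy_distance(A: Sequence[Vector], B: Sequence[Vector]) -> float:
    """Square root of 2*E|a-b| - E|a-a'| - E|b-b'| over the two sets."""
    squared = 2 * mean_pairwise(A, B) - mean_pairwise(A, A) - mean_pairwise(B, B)
    return math.sqrt(max(squared, 0.0))  # clamp tiny negative rounding errors


# Two toy "sentences", each a set of 2-dimensional word vectors.
sentence_a = [[0.0, 0.0], [1.0, 0.0]]
sentence_b = [[0.0, 0.0], [4.0, 0.0]]
h = hausdorff_distance(sentence_a, sentence_b)
e = energy_distance(sentence_a, sentence_b)
```

Both functions accept sets of different sizes, which is the property that lets them compare sentences of different lengths.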

- 21. Contributions of this work. Observation: Using a set-distance metric, we can construct a neighbourhood graph from sentences and these distances. Contribution 2: Generate fixed-dimensional embeddings such that they preserve the above neighbourhood graph, using Uniform Manifold Approximation and Projection (UMAP).

- 25. Steps to generate embeddings: Make an approximate nearest-neighbours graph.

- 26. Steps to generate embeddings: Generate an initial low-dimensional graph and minimize the cross entropy between the two representations.

- 27. Steps to generate embeddings: The points in the low-dimensional graph are the desired embeddings.
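
The steps above can be sketched in simplified form. The code below builds a nearest-neighbour graph from a precomputed matrix of set distances and evaluates the fuzzy cross entropy that UMAP minimizes between two sets of edge weights. Several things are assumptions: the search is exact brute force rather than approximate, the edge weights are supplied directly instead of being derived from distances as UMAP does internally, and the names `knn_graph` and `fuzzy_cross_entropy` are hypothetical. It is not the authors' implementation.

```python
import math
from typing import Dict, List, Tuple

Edge = Tuple[int, int]


def knn_graph(distances: List[List[float]], k: int) -> Dict[int, List[Tuple[int, float]]]:
    """For each item, keep its k nearest other items as (index, distance) pairs."""
    graph: Dict[int, List[Tuple[int, float]]] = {}
    for i, row in enumerate(distances):
        others = [(j, d) for j, d in enumerate(row) if j != i]
        others.sort(key=lambda pair: pair[1])  # nearest first
        graph[i] = others[:k]
    return graph


def fuzzy_cross_entropy(high: Dict[Edge, float], low: Dict[Edge, float],
                        eps: float = 1e-12) -> float:
    """Sum over edges of v*log(v/w) + (1-v)*log((1-v)/(1-w)).

    `high` holds edge weights v of the original graph, `low` holds the
    weights w implied by the low-dimensional layout; both lie in [0, 1].
    """
    total = 0.0
    for edge, v in high.items():
        v = min(max(v, eps), 1 - eps)
        w = min(max(low.get(edge, 0.0), eps), 1 - eps)
        total += v * math.log(v / w) + (1 - v) * math.log((1 - v) / (1 - w))
    return total


# Toy symmetric matrix of set distances between four sentences.
toy_distances = [
    [0.0, 1.0, 4.0, 5.0],
    [1.0, 0.0, 2.0, 6.0],
    [4.0, 2.0, 0.0, 3.0],
    [5.0, 6.0, 3.0, 0.0],
]
graph = knn_graph(toy_distances, k=2)

# Hypothetical edge weights: a layout that matches the graph, and one that does not.
high_weights = {(0, 1): 0.9, (1, 2): 0.5}
matching_layout = {(0, 1): 0.9, (1, 2): 0.5}
poor_layout = {(0, 1): 0.1, (1, 2): 0.5}
loss_matching = fuzzy_cross_entropy(high_weights, matching_layout)
loss_poor = fuzzy_cross_entropy(high_weights, poor_layout)
```

The loss is zero when the low-dimensional weights reproduce the original graph and grows as they diverge, which is why minimizing it yields points that preserve the neighbourhood structure.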

- 28. Evaluation: Sentence classification task on 6 datasets

- 30. Experimental settings, first test: Use kNN with the set distances to classify sentences directly, versus our method of generating embeddings from the neighbourhood graph. We use a linear SVM with the generated embeddings.
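
The kNN baseline in this first test can be illustrated with a small sketch: a query sentence is labelled by majority vote among the training sentences that are closest under a set distance. The Hausdorff distance is used here only as one possible choice, and the toy two-dimensional word vectors, the labels, and the function name `knn_predict` are invented for the example.

```python
import math
from collections import Counter
from typing import Callable, List, Sequence

Vector = Sequence[float]
WordSet = Sequence[Vector]


def euclidean(a: Vector, b: Vector) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def hausdorff_distance(A: WordSet, B: WordSet) -> float:
    """Set distance used for the example; any other set distance could be passed instead."""
    def directed(S: WordSet, T: WordSet) -> float:
        return max(min(euclidean(s, t) for t in T) for s in S)
    return max(directed(A, B), directed(B, A))


def knn_predict(query: WordSet, train_sets: List[WordSet], train_labels: List[str],
                k: int, distance: Callable[[WordSet, WordSet], float]) -> str:
    """Label the query by majority vote among its k nearest training sentences."""
    ranked = sorted(range(len(train_sets)),
                    key=lambda i: distance(query, train_sets[i]))
    votes = Counter(train_labels[i] for i in ranked[:k])
    return votes.most_common(1)[0][0]


# Toy training data: each sentence is a set of made-up 2-dimensional word vectors.
train_sets = [
    [[0.0, 0.0], [1.0, 0.0]],
    [[0.0, 1.0], [1.0, 1.0]],
    [[10.0, 10.0], [11.0, 10.0]],
    [[10.0, 11.0], [11.0, 11.0]],
]
train_labels = ["sports", "sports", "politics", "politics"]

prediction_a = knn_predict([[0.5, 0.5]], train_sets, train_labels, 3, hausdorff_distance)
prediction_b = knn_predict([[10.5, 10.5]], train_sets, train_labels, 3, hausdorff_distance)
```

Note that this baseline recomputes set distances to every training sentence at query time, whereas fixed-dimensional embeddings can be fed to an ordinary classifier such as the linear SVM mentioned on the slide.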

- 31. Experimental settings, second test: Test 6 other popular approaches to producing sentence embeddings, versus our method of generating embeddings from the neighbourhood graph.

- 32. Results: Embeddings + classifier vs kNN

- 33. Results: Comparison of various embeddings

- 38. Takeaways: We propose a novel sentence embedding mechanism, using set distances and neighbourhood graph approximation. The embeddings are better at capturing information than the distance metric alone. The embeddings perform favourably compared to various other efficient mechanisms.

![Slide 9: a pretrained model maps example sentences to embedding vectors](https://image.slidesharecdn.com/eacl-21-deep-unsup-sent-embed-210516111746/85/Unsupervised-sentence-embeddings-by-manifold-approximation-and-projection-9-320.jpg)