Using fairness metrics to solve ethical dilemmas of machine learning

- 1. Using fairness metrics to solve ethical dilemmas of machine learning. Kovács László, laszlo@peak1014.com

- 3. Trust & Fairness ● Machine learning systems are not inherently fair ● Data requirements from the Probably Approximately Correct learning theory: plenty of representative data ● Bias in → bias out ● Algorithms are objective, success criteria are not

- 4. Bias vs bias

- 5. The letter of the law. European Union: Policy and investment recommendations for trustworthy Artificial Intelligence (guideline) ... 5. Measure and monitor the societal impact of AI. 28. Consider the need for new regulation to ensure adequate protection from adverse impacts. 12. Safeguard fundamental rights in AI-based public services and protect societal infrastructures. United States of America: Algorithmic Accountability Act of 2019. A bill to direct the Federal Trade Commission to require entities that use, store, or share personal information to conduct automated decision system impact assessments (ADSIA). [... an ADSIA] means a study evaluating an automated decision system and the automated decision system's development process, including the design and training data of the automated decision system, for impacts on accuracy, fairness, bias, discrimination, privacy

- 6. The spirit of the law Be fair, do not discriminate along sensitive attributes. Be transparent, explainable, interpretable

- 7. Explainability (local scope): What features, and by what amount, lead to the prediction for a single case? Gaining the trust of the user. Interpretability (global scope): What features, and by what amount, does the model generally use to make the predictions? Gaining the trust of the analyst/regulator.
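The local/global distinction can be illustrated with a linear model, where one common convention takes the contribution of a feature to be its weight times the feature's deviation from the dataset mean. The sketch below is only an illustration under that assumption; the feature names, weights, and rows are hypothetical and do not come from the talk.

```python
# Hypothetical linear model weights and toy rows, invented for illustration.
weights = {"income": -0.03, "debt": 0.40}
rows = [
    {"income": 20, "debt": 0},
    {"income": 40, "debt": 2},
    {"income": 30, "debt": 4},
]

def feature_means(rows):
    """Average value of every feature over the dataset."""
    return {f: sum(r[f] for r in rows) / len(rows) for f in rows[0]}

def local_contributions(row, weights, means):
    """Local scope: how much each feature moves THIS prediction
    away from the prediction for an average row."""
    return {f: w * (row[f] - means[f]) for f, w in weights.items()}

def global_importance(rows, weights, means):
    """Global scope: mean absolute contribution over all rows."""
    return {
        f: sum(abs(w * (r[f] - means[f])) for r in rows) / len(rows)
        for f, w in weights.items()
    }

means = feature_means(rows)
local = local_contributions(rows[2], weights, means)
overall = global_importance(rows, weights, means)
print("local:", local)
print("global:", overall)
```

The local result answers the user's question about one case; the global result summarises what the model relies on in general, which is the view an analyst or regulator would look at.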

- 8. This is not true.

- 9. This is not true.

- 10. The problem of fairness: error distribution. Confusion matrix of Reality (Positive/Negative) against Prediction (Pos./Neg.): True Positive, False Negative, False Positive, True Negative. Discrimination or unfairness: systematic over- or underestimation for members of a group

- 11. Fairness metrics. The confusion matrix of Reality against Prediction (True Positive, False Negative, False Positive, True Negative) is drawn for the whole Population and separately for Group A and Group B.
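Splitting the population's confusion matrix by group can be sketched as follows. This is a minimal example on invented toy data; the function names are hypothetical and labels are assumed to be encoded as 1 (positive) and 0 (negative).

```python
from collections import Counter

def confusion_counts(y_true, y_pred):
    """Count TP, FN, FP, TN for binary labels (1 = positive, 0 = negative)."""
    counts = Counter(zip(y_true, y_pred))
    return {
        "TP": counts[(1, 1)],
        "FN": counts[(1, 0)],
        "FP": counts[(0, 1)],
        "TN": counts[(0, 0)],
    }

def confusion_by_group(y_true, y_pred, groups):
    """Build one confusion matrix per group label."""
    result = {}
    for g in sorted(set(groups)):
        idx = [i for i, label in enumerate(groups) if label == g]
        result[g] = confusion_counts(
            [y_true[i] for i in idx], [y_pred[i] for i in idx]
        )
    return result

# Toy data, invented for illustration only.
y_true = [1, 1, 0, 0, 1, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 1, 0, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

population = confusion_counts(y_true, y_pred)
by_group = confusion_by_group(y_true, y_pred, groups)
print("population:", population)
print("by group:", by_group)
```

Fairness metrics are then computed from each group's counts and compared across groups.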

- 12. Fairness metrics: not just for sensitive decisions

- 13. COMPAS

- 14. COMPAS

- 15. A critique by ProPublica

- 16. A critique by ProPublica. Overall, Northpointe's assessment tool correctly predicts recidivism 61 percent of the time. But blacks are almost twice as likely as whites to be labeled a higher risk but not actually re-offend. It makes the opposite mistake among whites: They are much more likely than blacks to be labeled lower risk but go on to commit other crimes.

- 17. Results from ProPublica ● They calculated the False Positive and False Negative rate. These are not the definition of the FPR/FNR!

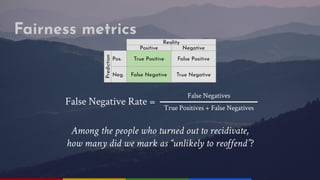

- 18. Fairness metrics. Confusion matrix of Reality against Prediction: True Positive, False Negative, False Positive, True Negative. $\text{False Negative Rate} = \frac{\text{False Negatives}}{\text{True Positives} + \text{False Negatives}}$. Among the people who turned out to recidivate, how many did we mark as "unlikely to reoffend"?

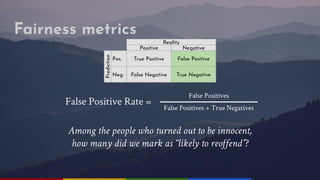

- 19. Fairness metrics. Confusion matrix of Reality against Prediction: True Positive, False Negative, False Positive, True Negative. $\text{False Positive Rate} = \frac{\text{False Positives}}{\text{False Positives} + \text{True Negatives}}$. Among the people who turned out to be innocent, how many did we mark as "likely to reoffend"?

- 20. Fairness metrics. Confusion matrix of Reality against Prediction: True Positive, False Negative, False Positive, True Negative. $\text{Precision} = \frac{\text{True Positives}}{\text{True Positives} + \text{False Positives}}$. Among the people who we marked as "likely to reoffend", how many did actually do so? Precision is also known as Positive Predictive Value.
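The three definitions on slides 18 to 20 translate directly into code. The sketch below uses hypothetical function names and invented counts; returning NaN for an empty denominator is a design choice of this example, not something stated in the talk.

```python
def safe_div(num, den):
    """Divide, returning NaN when the denominator is zero."""
    return num / den if den else float("nan")

def rates(tp, fn, fp, tn):
    """Compute the three metrics from confusion-matrix counts."""
    return {
        # Among actual positives, the share predicted negative.
        "FNR": safe_div(fn, tp + fn),
        # Among actual negatives, the share predicted positive.
        "FPR": safe_div(fp, fp + tn),
        # Among predicted positives, the share that is actually positive.
        "PPV": safe_div(tp, tp + fp),
    }

# Invented counts for illustration only.
example = rates(tp=30, fn=10, fp=20, tn=40)
print(example)
```

Note that FNR and FPR are conditioned on reality (the denominator is a row of actual outcomes), while precision is conditioned on the prediction.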

- 21. Results from ProPublica. With proper False Discovery Rate (1 - Precision) & False Omission Rate calculations: [table comparing the rates of the two groups; values not preserved]
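The False Discovery Rate and False Omission Rate are conditioned on the prediction rather than on reality, which is what distinguishes them from FPR and FNR. A minimal sketch with a hypothetical function name and invented counts:

```python
def discovery_and_omission(tp, fn, fp, tn):
    """Rates whose denominators are PREDICTED classes, not actual ones."""
    return {
        # Among predicted positives, the share that is actually negative.
        "FDR": fp / (tp + fp),
        # Among predicted negatives, the share that is actually positive.
        "FOR": fn / (fn + tn),
    }

# Invented counts for illustration only.
tp, fn, fp, tn = 30, 10, 20, 40
pred_based = discovery_and_omission(tp, fn, fp, tn)

# The reality-conditioned rates use the same numerators
# but different denominators, so they generally differ.
fpr = fp / (fp + tn)
fnr = fn / (tp + fn)
precision = tp / (tp + fp)

print(pred_based, "FPR:", fpr, "FNR:", fnr)
```

On these counts FDR is 0.4 while FPR is one third, and FOR is 0.2 while FNR is 0.25, so mixing up the two families changes the reported numbers.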

- 22. ● There was a mistake in the calculation. ● Can we have parity for all metrics?

- 23. The impossibility of fairness metrics. In real-life situations where ● the rates of positive cases in the groups are not equal and ● the model is not perfect, it is impossible to satisfy all of these: False Negative Rate Parity, False Positive Rate Parity, Positive Predictive Value (precision) Parity

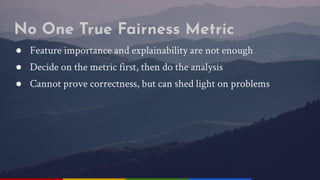
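The impossibility result follows from an identity between the confusion-matrix rates, given in the Chouldechova paper listed under Other Sources: with prevalence $p$ (the rate of positive cases in a group), $\text{FPR} = \frac{p}{1-p} \cdot \frac{1-\text{PPV}}{\text{PPV}} \cdot (1-\text{FNR})$. The sketch below plugs invented numbers into that identity; the function name is hypothetical.

```python
def implied_fpr(prevalence, ppv, fnr):
    """FPR forced by the identity once prevalence, PPV and FNR are fixed."""
    return prevalence / (1 - prevalence) * (1 - ppv) / ppv * (1 - fnr)

# Two groups with the SAME precision and the SAME false negative rate,
# but different rates of positive cases (numbers invented for illustration).
fpr_a = implied_fpr(prevalence=0.5, ppv=0.6, fnr=0.25)
fpr_b = implied_fpr(prevalence=0.3, ppv=0.6, fnr=0.25)

print("group A FPR:", fpr_a)
print("group B FPR:", fpr_b)
```

With equal PPV and FNR, the differing prevalence forces the two false positive rates apart, so all three parities cannot hold at once unless the prevalences match or the model is perfect.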

- 24. No One True Fairness Metric ● Feature importance and explainability are not enough ● Decide on the metric first, then do the analysis ● Cannot prove correctness, but can shed light on problems

- 25. Tools for calculating fairness ● Aequitas Bias report ● AI Fairness 360 by IBM

- 26. What can I do? ● Pre-processing: Transform features in a way so that the new features are not correlated with the sensitive variable. Drawback: the newly created features will be meaningless. ● Fair models: Use models which apply a penalty for unfair behaviour. Drawback: it's a hard optimization task, and some existing models cannot be modified. ● Post-processing: Use different thresholds for the different groups. Drawback: only makes corrections at the marginal cases.
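The post-processing option, using different decision thresholds for different groups, can be sketched as below. The scores, group labels, and threshold values are invented for illustration, and the function name is hypothetical; how the thresholds are chosen (for example, to equalise one of the rates above) is left out.

```python
def predict_with_group_thresholds(scores, groups, thresholds):
    """Turn model scores into 0/1 decisions using a per-group cut-off."""
    return [int(s >= thresholds[g]) for s, g in zip(scores, groups)]

# Toy scores from some already-trained model (invented values).
scores = [0.20, 0.55, 0.90, 0.20, 0.55, 0.90]
groups = ["A", "A", "A", "B", "B", "B"]

single = predict_with_group_thresholds(scores, groups, {"A": 0.5, "B": 0.5})
adjusted = predict_with_group_thresholds(scores, groups, {"A": 0.5, "B": 0.6})

# Indices whose decision changed after the adjustment.
changed = [i for i, (a, b) in enumerate(zip(single, adjusted)) if a != b]
print(single, adjusted, changed)
```

Only the case whose score lies between the old and the new threshold flips, which matches the slide's remark that post-processing corrects only the marginal cases.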

- 27. Transparency can help ● Audit decision-making systems ● Do not store or use sensitive data ● Rethink contract strategy: some algorithms are proprietary

- 29. Image credits ● Mountains under white mist at daytime by Ivana Cajina, https://unsplash.com/photos/HDd-NQ_AMNQ ● Overfitting by Chabacano, own work, CC BY-SA 4.0, https://commons.wikimedia.org/w/index.php?curid=3610704 ● In an antique shop window by Pattie, https://www.flickr.com/photos/piratealice/3082374723 ● Motorcycle wheel and tire by Oliver Walthard, https://unsplash.com/photos/mbL0XQy-d3o ● Woman wearing white and red blouse buying some veggies by Renate Vanaga, https://unsplash.com/photos/2pV2LwPVP9A ● Turned-on monitor by Blake Wisz, https://unsplash.com/photos/tE6th1h6Bfk ● https://worldvectorlogo.com/logo/the-washington-post, https://worldvectorlogo.com/logo/the-guardian-new-2018 ● Shallow focus photo of compass by Aaron Burden, https://unsplash.com/photos/NXt5PrOb_7U

- 30. Other Sources ● https://www.congress.gov/bill/116th-congress/house-bill/2231/text ● https://eur-lex.europa.eu/eli/reg/2016/679/oj ● https://www.theguardian.com/technology/2019/nov/10/apple-card-issuer-investigated-after-claims-of-sexist-credit-checks ● https://www.washingtonpost.com/health/2019/10/24/racial-bias-medical-algorithm-favors-white-patients-over-sicker-black-patients/ ● https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing ● Alexandra Chouldechova: Fair prediction with disparate impact: A study of bias in recidivism prediction instruments, https://arxiv.org/pdf/1610.07524.pdf
