You and Your Research -- LLMs Perspective

- 1. You and Your Research: LLMs Perspective. Dr Mohamed Elawady, Department of Computer and Information Sciences, University of Strathclyde. 4th ML/AI Workshop, 14th Sep 2023

- 2. Agenda
  - Introduction: LLMs
  - History of LLMs
  - LLMs + Chatbots
  - LLMs + Research
  - "I visualise a time when we will be to robots what dogs are to humans, and I'm rooting for the machines." Claude Shannon (1916-2001)
  - https://www.reddit.com/r/ChatGPTMemes/comments/102mvys/yours_sincerely_chatgpt/?rdt=43569

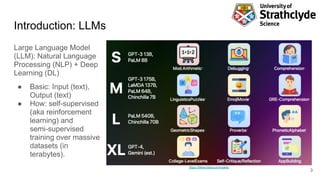

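- 3. Introduction: LLMs
  - Large Language Model (LLM): Natural Language Processing (NLP) + Deep Learning (DL)
  - Basic: Input (text), Output (text)
  - How: self-supervised training (the targets come from the text itself, e.g. predicting the next token, so no manual labels are needed) and semi-supervised training over massive datasets (in terabytes). This is distinct from reinforcement learning, which some chat models add later as a fine-tuning step.
  - https://lifearchitect.ai/models/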
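To make the "text in, text out" and self-supervised points concrete, here is a minimal sketch in Python. It is a toy under stated assumptions: a simple count table stands in for the neural network, and the function names (`make_training_pairs`, `train_counts`, `predict_next`) and the tiny corpus are hypothetical, not taken from the slides. The point it illustrates is that the training targets are cut out of the raw text itself.

```python
from collections import Counter, defaultdict


def make_training_pairs(text, context_size=2):
    """Turn raw text into (context, next_token) pairs.

    No manual labels are involved: each target is simply the token
    that follows its context in the text (self-supervision).
    """
    tokens = text.lower().split()
    pairs = []
    for i in range(context_size, len(tokens)):
        context = tuple(tokens[i - context_size:i])
        pairs.append((context, tokens[i]))
    return pairs


def train_counts(pairs):
    """Toy 'model': count how often each token follows each context."""
    model = defaultdict(Counter)
    for context, target in pairs:
        model[context][target] += 1
    return model


def predict_next(model, context):
    """Return the most frequent continuation, or None for an unseen context."""
    counts = model.get(tuple(context))
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical miniature corpus; real LLMs train on terabytes of text.
corpus = "the cat sat on the mat . the cat sat on the sofa . the cat sat on the mat ."
pairs = make_training_pairs(corpus)
model = train_counts(pairs)

print(pairs[0])                             # (('the', 'cat'), 'sat')
print(predict_next(model, ["on", "the"]))   # mat
```

A real LLM replaces the count table with a Transformer and predicts a probability distribution over a large vocabulary, but the way the training pairs are built from unlabelled text is the same idea.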

- 4. History of LLMs
  - What's behind
    - Transformers
    - Massive data
    - GPUs
  - Popular
    - OpenAI GPT-3/4
    - Google Bard
    - Meta LLaMA
    - Google T5
    - BLOOM
  - Coming Soon!
    - DeepMind Gemini
    - OpenAI GPT-5
  - Zhao, Wayne Xin, et al. "A survey of large language models." arXiv preprint arXiv:2303.18223 (2023).

- 5. LLMs + Chatbots
  - GPT-3.5/4 + ChatGPT (OpenAI)
  - LaMDA + Bard (Google)
  - GPT-4 + Bing (Microsoft)
  - GPT-4 + YouChat (You.com)
  - Claude + Claude AI (Anthropic)
  - GPT-4 + ChatSonic (Writesonic)

- 6. LLMs + Research
  - Sentence-BERT / T5 / GPT-3 + Elicit
  - SciBERT + Scite Assistant
  - GPT-4 + Consensus
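The slide pairs sentence-embedding models such as Sentence-BERT with literature tools but does not say how the tools use them. One common pattern, assumed here purely for illustration, is to embed a query and each paper and rank the papers by cosine similarity. The sketch below uses a bag-of-words count vector as a stand-in for a real embedding model; `embed`, `cosine`, and `rank` are hypothetical names, and the sample titles are borrowed from the deck's reference list.

```python
import math
from collections import Counter


def embed(text):
    """Toy stand-in for a sentence embedding: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank(query, documents):
    """Return (score, document) pairs, most similar to the query first."""
    q = embed(query)
    scored = [(cosine(q, embed(d)), d) for d in documents]
    return sorted(scored, key=lambda s: s[0], reverse=True)


# Sample data: paper titles taken from the reference list.
papers = [
    "language models are few-shot learners",
    "a pretrained language model for scientific text",
    "training compute-optimal large language models",
]

query = "pretrained model for scientific text"
for score, title in rank(query, papers):
    print(f"{score:.2f}  {title}")
```

A learned embedding would also match paraphrases that share no words with the query, which word counts cannot do; that is the main reason such tools rely on models like Sentence-BERT rather than keyword overlap.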

- 7. More Resources
  - LLM Introduction: Learn Language Models, GitHub Gist: https://gist.github.com/rain-1/eebd5e5eb2784feecf450324e3341c8d
  - Awesome-LLM: a curated list of Large Language Models, GitHub: https://github.com/Hannibal046/Awesome-LLM
  - Demos over Hugging Face platform (signup required)
    - Text-to-Text Generation: https://huggingface.co/google/flan-t5-base
    - Text Summarization: https://huggingface.co/facebook/bart-large-cnn
    - Text Generation: https://huggingface.co/bigscience/bloom

- 8. References
  - (GPT-3) Brown, Tom, et al. "Language models are few-shot learners." Advances in Neural Information Processing Systems 33 (2020): 1877-1901.
  - (GPT-4) OpenAI. "GPT-4 technical report." arXiv preprint arXiv:2303.08774 (2023).
  - (LaMDA) Thoppilan, Romal, et al. "LaMDA: Language models for dialog applications." arXiv preprint arXiv:2201.08239 (2022).
  - (SciBERT) Beltagy, Iz, Kyle Lo, and Arman Cohan. "SciBERT: A pretrained language model for scientific text." arXiv preprint arXiv:1903.10676 (2019).
  - (Sentence-BERT) Reimers, Nils, and Iryna Gurevych. "Sentence-BERT: Sentence embeddings using siamese BERT-networks." arXiv preprint arXiv:1908.10084 (2019).
  - (T5) Raffel, Colin, et al. "Exploring the limits of transfer learning with a unified text-to-text transformer." The Journal of Machine Learning Research 21.1 (2020): 5485-5551.
  - (LLaMA) Touvron, Hugo, et al. "LLaMA: Open and efficient foundation language models." arXiv preprint arXiv:2302.13971 (2023).
  - (BLOOM) Scao, Teven Le, et al. "BLOOM: A 176B-parameter open-access multilingual language model." arXiv preprint arXiv:2211.05100 (2022).
  - (PaLM) Chowdhery, Aakanksha, et al. "PaLM: Scaling language modeling with pathways." arXiv preprint arXiv:2204.02311 (2022).
  - (Chinchilla) Hoffmann, Jordan, et al. "Training compute-optimal large language models." arXiv preprint arXiv:2203.15556 (2022).