SDC20 ScaleFlux.pptx

- 1. 2020 Storage Developer Conference. © ScaleFlux. All Rights Reserved. The True Value of Storage Drives with Built-in Transparent Compression: Far Beyond Lower Storage Cost. Tong Zhang, ScaleFlux Inc., San Jose, CA

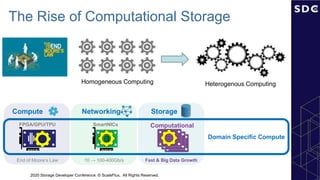

- 2. The Rise of Computational Storage
  - Shift from homogeneous computing to heterogeneous computing.
  - Compute: domain-specific compute (FPGA/GPU/TPU), driven by the end of Moore's Law.
  - Networking: SmartNICs, driven by link speeds going from 10 to 100-400 Gb/s.
  - Storage: computational storage, driven by fast and big data growth.

- 3. Computational Storage: A Very Simple Idea
  - End of Moore's Law → heterogeneous computing.
  - Low-hanging fruits: FPGA/GPU/TPU, SmartNICs, computational storage.
  - Figure: Computational Storage Drive (CSD) with data path transparent compression. Labels: flash control, NAND flash, FPGA, in-line per-4KB zlib compression and decompression, HW, SW.
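
To make "in-line per-4KB zlib compression" concrete, here is a minimal software sketch that compresses each 4KB sector independently using Python's standard `zlib` module. It only illustrates the idea; the drive does this in hardware on the data path, and the helper names (`compress_sectors`, `decompress_sectors`) and the sample data are assumptions made for this example.

```python
import os
import zlib

SECTOR_SIZE = 4096  # 4KB sector, the compression unit named on the slide


def compress_sectors(data):
    """Compress every 4KB sector on its own, so each can be read back independently."""
    if len(data) % SECTOR_SIZE != 0:
        raise ValueError("data length must be a multiple of 4KB")
    return [
        zlib.compress(data[off:off + SECTOR_SIZE])
        for off in range(0, len(data), SECTOR_SIZE)
    ]


def decompress_sectors(blocks):
    """Inverse of compress_sectors: restore the original byte stream."""
    return b"".join(zlib.decompress(block) for block in blocks)


# Three sectors with very different compressibility (illustrative data only).
zeros = bytes(SECTOR_SIZE)
text = (b"computational storage " * 200)[:SECTOR_SIZE]
random_bytes = os.urandom(SECTOR_SIZE)

payload = zeros + text + random_bytes
compressed = compress_sectors(payload)
sizes = [len(block) for block in compressed]
print(sizes)  # one compressed length per sector; the lengths differ widely
```

The point to notice is that fixed-size logical sectors turn into variable-size compressed blocks, which is what the later slides on FTL complexity are about.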

- 4. ScaleFlux Computational Storage Drive: CSD 2000
  - Complete, validated solution
  - Pre-programmed FPGA
  - Hardware
  - Software
  - Firmware
  - No FPGA knowledge or coding
  - Field upgradeable
  - Standard U.2 and AIC form factors
  - Figure: multiple discrete components for compute and SSD functions (CPU, FPGA, flash controller, flash) compared with the CSD, where a single FPGA combines compute and SSD functions.

- 5. CSD 2000: Data Path Transparent Compression
  - Figure: two bar charts, "FIO: 4K Random R/W IOPS" and "FIO: 16K Random R/W IOPS", comparing CSD 2000 with an NVMe SSD. Y-axis: IOPS (k). X-axis: read/write mix from 100% reads to 100% writes.
  - Callouts: 170% (70/30 R/W), 230% (100% write), 220% (70/30 R/W), 220% (100% write).
  - Test conditions: 2.5:1 compressible data, 8 jobs, queue depth 32, steady state after preconditioning.

- 6. Comparing Compression Options
  - Scalable CSD-based compression reduces cost/GB without choking the CPU.

  | | No Compression | Host-Based | Offload Card | CSD 2000 |
  |---|---|---|---|---|
  | No CPU overhead | ✓ | ✗ | ✓ | ✓ |
  | Reduced $/user GB | ✗ | ✓ | ✓ | ✓ |
  | Performance scales with capacity | ✓ | ✗ | ✗ | ✓ |
  | Transparent app integration | - | ✗ | ✗ | ✓ |
  | Zero app latency | ✓ | ✗ | ✗ | ✓ |
  | No incremental power usage | ✓ | ✗ | ✗ | ✓ |
  | No incremental physical footprint | ✓ | ✓ | ✗ | ✓ |

- 7. In-Storage Transparent Compression: Why Is It Hard to Build?
  - Flash translation layer (FTL) without compression: 4KB LBA → 4KB flash block mapping.
  - Regularity and uniformity:
    - Relatively simple FTL implementation
    - Relatively easy to achieve high speed
    - Relatively easy to ensure storage stability
  - Figure: Proc. 1 through Proc. n, logical block addresses (LBAs), and flash memory.

- 8. In-Storage Transparent Compression: Why Is It Hard to Build?
  - Flash translation layer (FTL) with compression: 4KB LBA → variable-length flash block mapping.
  - Irregularity and randomness:
    - Much more complicated FTL implementation
    - Much harder to achieve high speed
    - Much harder to ensure storage stability
  - Figure: Proc. 1 through Proc. n, logical block addresses (LBAs), and flash memory.
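
The variable-length mapping can be illustrated with a toy model. The sketch below is an assumption-laden simplification (an append-only byte array standing in for flash, a Python dict as the mapping table, and software `zlib`), not a description of the CSD 2000's actual FTL; the class name `ToyCompressingFTL` is hypothetical.

```python
import zlib

SECTOR_SIZE = 4096


class ToyCompressingFTL:
    """Toy log-structured mapping: LBA -> (offset, length) of the compressed sector."""

    def __init__(self):
        self.flash = bytearray()  # stand-in for physical flash, append-only
        self.mapping = {}         # lba -> (byte offset, compressed length)
        self.garbage_bytes = 0    # stale bytes left behind by overwrites

    def write(self, lba, sector):
        if len(sector) != SECTOR_SIZE:
            raise ValueError("sector must be exactly 4KB")
        blob = zlib.compress(sector)
        if lba in self.mapping:
            # The old extent has an arbitrary length, so reclaiming it later
            # leaves an irregular hole rather than one free 4KB slot.
            self.garbage_bytes += self.mapping[lba][1]
        self.mapping[lba] = (len(self.flash), len(blob))
        self.flash += blob

    def read(self, lba):
        if lba not in self.mapping:
            return bytes(SECTOR_SIZE)  # unwritten LBAs read back as zeros
        offset, length = self.mapping[lba]
        return zlib.decompress(bytes(self.flash[offset:offset + length]))


ftl = ToyCompressingFTL()
ftl.write(7, b"A" * SECTOR_SIZE)
ftl.write(8, (b"log record " * 400)[:SECTOR_SIZE])
ftl.write(7, b"B" * SECTOR_SIZE)  # overwrite: the first extent becomes garbage
print(ftl.mapping, ftl.garbage_bytes)
```

Without compression every mapping entry would point at a fixed 4KB slot; here each entry needs both an offset and a length, and garbage accumulates in odd-sized pieces, which hints at why the slide calls the implementation much more complicated.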

- 9. CSD 2000: Highest OLTP TPS, Lowest $/User GB
  - Flexible drive capacity enables the best performance and cost. Performance: 150% TPS. Cost: 50% less $/user GB.
  - CSD 2000 delivers 30% higher read-write TPS in this cost comparison.
  - 2.4TB dataset: physical flash consumed on NVMe A; 0.9TB on CSD 2000.
  - 4.8TB dataset: physical flash consumed on NVMe A; 1.6TB on CSD 2000.
  - Test setup:
    - Sysbench (MySQL 5.7.25, InnoDB)
    - 50M records, 64 threads
    - 1-hour test run
    - Intel(R) Xeon(R) CPU E5-2667 v4 @ 3.20GHz, 256GB DRAM

- 10. Open a Door for System Innovation
  - OS/applications can purposely waste logical storage space to gain benefits.
  - Unnecessary to fill each 4KB sector with user data: a sector holding valid user data plus 0's is reduced to compressed data by transparent compression.
  - Unnecessary to use all the LBAs: an SSD whose FTL has transparent compression can expose an LBA space (e.g., 32TB) larger than its NAND flash (e.g., 4TB).
  - Figure labels: logical storage space utilization efficiency, physical storage space utilization efficiency.

- 11. Case Study 1: PostgreSQL
  - Fillfactor (FF): each 8KB page is filled with data only up to the fillfactor; the rest is reserved for future updates.
  - FF trades performance against storage space.
  - Commodity SSD: the reserved space consumes physical storage.
  - SFX CSD 2000: the reserved space in each 8KB page is 0's, and transparent compression stores only the compressed data.
  - Figure: normalized performance (100%, 200%) and physical storage usage (300GB, 600GB, 1.2TB) for a commodity SSD and the SFX CSD 2000.
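
A rough way to see why reserved page space costs little on a compressing drive is to build synthetic 8KB pages at two fillfactors and compare logical size with post-compression size. This is a sketch under stated assumptions: the rows are random hex strings of a hypothetical fixed size, pages have no PostgreSQL headers, and each whole page is compressed with software `zlib` rather than by the drive. The numbers it prints are not the slide's measurements.

```python
import random
import zlib

PAGE_SIZE = 8192  # 8KB page, as on the slide
ROW_SIZE = 64     # hypothetical fixed row size for this sketch


def build_pages(rows, fillfactor):
    """Pack rows into 8KB pages, leaving (100 - fillfactor)% of each page as zeros."""
    rows_per_page = (PAGE_SIZE * fillfactor // 100) // ROW_SIZE
    pages = []
    for i in range(0, len(rows), rows_per_page):
        body = b"".join(rows[i:i + rows_per_page])
        pages.append(body.ljust(PAGE_SIZE, b"\x00"))
    return pages


def usage(pages):
    """Return (logical bytes, bytes after compressing each page with zlib)."""
    logical = len(pages) * PAGE_SIZE
    physical = sum(len(zlib.compress(page)) for page in pages)
    return logical, physical


rng = random.Random(0)  # fixed seed so the run is repeatable
rows = [bytes(rng.choices(b"0123456789abcdef", k=ROW_SIZE)) for _ in range(12288)]

logical_100, physical_100 = usage(build_pages(rows, 100))
logical_75, physical_75 = usage(build_pages(rows, 75))

print("FF100:", logical_100, "logical bytes,", physical_100, "after compression")
print("FF75: ", logical_75, "logical bytes,", physical_75, "after compression")
```

In this model the logical footprint grows by one third when the fillfactor drops from 100 to 75, while the compressed footprint grows only slightly, because the reserved region is all zeros.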

- 12. Case Study 1: PostgreSQL
  - Figure: normalized TPS for Vendor-A and CSD 2000 at FF100 and FF75, for a 740GB dataset and a 1.4TB dataset. Bars are labeled with physical size: Vendor-A FF100 (740GB), CSD 2000 FF100 (178GB), Vendor-A FF75 (905GB), CSD 2000 FF75 (189GB); Vendor-A FF100 (1,433GB), CSD 2000 FF100 (342GB), Vendor-A FF75 (1,762GB), CSD 2000 FF75 (365GB).

  740GB dataset:

  | Fillfactor | Drive | Logical size (GB) | Physical size (GB) | Comp ratio |
  |---|---|---|---|---|
  | 100 | Vendor-A | 740 | 740 | 1.00 |
  | 100 | CSD 2000 | | 178 | 4.12 |
  | 75 | Vendor-A | 905 | 905 | 1.00 |
  | 75 | CSD 2000 | | 189 | 4.75 |

  1.4TB dataset:

  | Fillfactor | Drive | Logical size (GB) | Physical size (GB) | Comp ratio |
  |---|---|---|---|---|
  | 100 | Vendor-A | 1,433 | 1,433 | 1.00 |
  | 100 | CSD 2000 | | 342 | 4.19 |
  | 75 | Vendor-A | 1,762 | 1,762 | 1.00 |
  | 75 | CSD 2000 | | 365 | 4.82 |

- 13. Case Study 2: Sparse Write-Ahead Logging
  - Write-ahead logging (WAL): universally used by data management systems to achieve atomicity and durability.
  - Figure: conventional WAL. The in-memory WAL buffer holds TRX-1 at commit @ t1, TRX-1 and TRX-2 at commit @ t2, and TRX-1, TRX-2, and TRX-3 at commit @ t3, padded with 0's. Each fsync (@ t1, @ t2, @ t3) writes the buffer to the same on-storage WAL sector, LBA x0001, through transparent compression to NAND flash memory.

- 14. Case Study 2: Sparse Write-Ahead Logging
  - Figure: conventional WAL. The in-memory WAL buffer holds TRX-1 at commit @ t1, TRX-1 and TRX-2 at commit @ t2, and TRX-1, TRX-2, and TRX-3 at commit @ t3, padded with 0's. Each fsync (@ t1, @ t2, @ t3) writes the buffer to the same on-storage WAL sector, LBA x0001, through transparent compression to NAND flash memory.
  - Consequences:
    - Write amplification
    - More interference with other IOs
    - Shorter NAND flash memory lifetime

- 15. Case Study 2: Sparse Write-Ahead Logging
  - Sparse WAL: allocate a new 4KB sector per transaction commit.
    - Waste logical storage space → reduce WAL-induced write amplification.
  - Figure: sparse WAL. The in-memory WAL buffer holds only TRX-1 at commit @ t1, only TRX-2 at commit @ t2, and only TRX-3 at commit @ t3, each padded with 0's. The fsyncs @ t1, @ t2, and @ t3 write to LBA x0001, LBA x0002, and LBA x0003 respectively, through transparent compression to NAND flash memory.
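
The difference between the two logging schemes can be estimated with a small simulation. The sketch below counts post-compression bytes written for a conventional WAL (rewriting the same partially filled sector on every commit) and a sparse WAL (one fresh zero-padded sector per commit). It assumes incompressible 128-byte records, one fsync per commit, and software `zlib` as a stand-in for the drive; the function names are hypothetical and the output is not the measured result from the talk.

```python
import random
import zlib

SECTOR_SIZE = 4096


def pad(data):
    """Zero-pad a partially filled sector to 4KB."""
    return data.ljust(SECTOR_SIZE, b"\x00")


def conventional_wal(records):
    """Each commit rewrites the current, partially filled sector at the same LBA."""
    written, lbas, current, lba = 0, set(), b"", 0
    for rec in records:
        if len(current) + len(rec) > SECTOR_SIZE:
            current, lba = b"", lba + 1  # sector full: move on to the next LBA
        current += rec
        written += len(zlib.compress(pad(current)))  # bytes reaching flash
        lbas.add(lba)
    return written, len(lbas)


def sparse_wal(records):
    """Each commit gets a fresh sector holding only its own record (record <= 4KB)."""
    written = sum(len(zlib.compress(pad(rec))) for rec in records)
    return written, len(records)


rng = random.Random(0)  # fixed seed so the run is repeatable
records = [bytes(rng.getrandbits(8) for _ in range(128)) for _ in range(256)]

conv_bytes, conv_lbas = conventional_wal(records)
sparse_bytes, sparse_lbas = sparse_wal(records)

print("conventional:", conv_bytes, "bytes to flash,", conv_lbas, "LBAs used")
print("sparse:      ", sparse_bytes, "bytes to flash,", sparse_lbas, "LBAs used")
```

The sparse variant consumes far more LBAs, but each record reaches flash only once instead of being rewritten with every later commit that shares its sector.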

- 16. Case Study 2: Sparse Write-Ahead Logging
  - Sparse WAL: allocate a new 4KB sector per transaction commit.
    - Waste logical storage space → reduce WAL-induced write amplification.
  - Figure: normalized write volume (0% to 100%) versus data size per transaction (128B, 256B, 512B, 1024B, 2048B). Callout: 94% reduction.

- 17. Case Study 3: Table-less Hash-based KV Store
  - Very simple idea:
    - Hash the key space directly onto the logical storage space → eliminate the in-memory hash table.
    - Transparent compression eliminates the "unoccupied space" from the physical storage space.
  - Figure, conventional design: a hash function f: K → T maps key space K to an in-memory hash table, and KV pairs are tightly packed in LBA space L.
  - Figure, table-less design: a hash function f: K → L maps key space K directly to 4KB sectors in LBA space L, KV pairs are loosely packed in L, and transparent compression removes the unoccupied space before the data reaches NAND flash.
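
A minimal sketch of the table-less idea follows: the key is hashed straight to an LBA and the KV pair is stored in that 4KB sector, so no in-memory index is kept. Everything specific here is an assumption made for illustration: the BLAKE2b hash, the length-prefixed record layout, the temporary file standing in for the drive, the small `NUM_LBAS`, and the choice to raise on bucket overflow. It is not the KallaxDB design.

```python
import hashlib
import struct
import tempfile

SECTOR_SIZE = 4096
NUM_LBAS = 1 << 14  # hypothetical size of the logical space for this sketch


def lba_of(key):
    """Hash the key straight to a logical block address; no lookup table."""
    digest = hashlib.blake2b(key, digest_size=8).digest()
    return int.from_bytes(digest, "big") % NUM_LBAS


def unpack(sector):
    """Decode length-prefixed KV records; a zero key length marks the end."""
    pairs, pos = {}, 0
    while pos + 4 <= SECTOR_SIZE:
        klen, vlen = struct.unpack_from("<HH", sector, pos)
        if klen == 0:
            break
        pos += 4
        pairs[sector[pos:pos + klen]] = sector[pos + klen:pos + klen + vlen]
        pos += klen + vlen
    return pairs


def pack(pairs):
    """Encode KV records into one sector; the unused tail stays zero."""
    body = b"".join(struct.pack("<HH", len(k), len(v)) + k + v for k, v in pairs.items())
    if len(body) > SECTOR_SIZE:
        raise OverflowError("bucket overflow; a real store needs an overflow policy")
    return body.ljust(SECTOR_SIZE, b"\x00")


class TablelessKV:
    def __init__(self, device):
        self.device = device  # any seekable binary file standing in for the drive

    def _read_sector(self, lba):
        self.device.seek(lba * SECTOR_SIZE)
        return self.device.read(SECTOR_SIZE).ljust(SECTOR_SIZE, b"\x00")

    def put(self, key, value):
        if not key:
            raise ValueError("keys must be non-empty in this record format")
        lba = lba_of(key)
        pairs = unpack(self._read_sector(lba))
        pairs[key] = value
        self.device.seek(lba * SECTOR_SIZE)
        self.device.write(pack(pairs))

    def get(self, key):
        return unpack(self._read_sector(lba_of(key))).get(key)


device = tempfile.TemporaryFile()
store = TablelessKV(device)
store.put(b"user:42", b"alice")
store.put(b"user:43", b"bob")
print(store.get(b"user:42"), store.get(b"user:43"), store.get(b"missing"))
```

Most of each sector stays zero in this layout. On an ordinary drive that wastes physical space; the slide's argument is that transparent compression removes the unoccupied space, so the loose packing costs logical space only.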

- 18. Case Study 3: Table-less Hash-based KV Store
  - Eliminate the in-memory hash table:
    - Very small memory footprint
    - High operational parallelism
    - Short data access path
    - Very simple code base
  - Under-utilize logical storage space:
    - Obviate frequent background operations (e.g., GC and compaction)
  - Result: high performance, low memory cost, and low CPU usage.
  - Figure: a hash function f: K → L maps key space K to 4KB sectors in LBA space L; KV pairs are loosely packed in L, leaving unoccupied space.

- 19. Case Study 3: Table-less Hash-based KV Store
  - Experimental setup:
    - 24-core 2.6GHz Intel CPU, 32GB DDR4 DRAM, and a 3.2TB SFX CSD 2000
    - RocksDB 6.10 (12 compaction threads and 4 flush threads)
    - 400-byte KV pair size, 1 billion KVs → 400GB raw data
    - Memory usage: RocksDB (5GB), KallaxDB (600MB)

  | Configuration | Storage usage |
  |---|---|
  | RocksDB (no compression) | 428GB |
  | RocksDB (LZ4-only) | 235GB |
  | RocksDB (LZ4+ZSTD) | 201GB |
  | KallaxDB | 216GB |

  | Workload | Mix |
  |---|---|
  | YCSB A | 50% reads, 50% updates |
  | YCSB B | 95% reads, 5% updates |
  | YCSB C | 100% reads |
  | YCSB D | 95% reads, 5% inserts |
  | YCSB F | 50% reads, 50% read-modify-writes |

- 20. Case Study 3: Experimental Results (24 clients)
  - Figure: four bar charts over YCSB A, B, C, D, and F, comparing RocksDB (no compression), RocksDB (LZ4-only), RocksDB (LZ4+ZSTD), and KallaxDB. Panels: average ops/s, average read latency (us), 99.9% read tail latency (us), and Ccycle/Op (K).

- 21. Open a Door for System Innovation
  - OS/applications can purposely waste logical storage space to gain benefits.
  - Unnecessary to fill each 4KB sector with user data: a sector holding valid user data plus 0's is reduced to compressed data by transparent compression.
  - Unnecessary to use all the LBAs: an SSD whose FTL has transparent compression can expose an LBA space (e.g., 32TB) larger than its NAND flash (e.g., 4TB).
  - Figure labels: logical storage space utilization efficiency, physical storage space utilization efficiency.

- 22. Open a Door for System Innovation
  - Page space reserved for future update (8KB/page): reserve more space for future updates to improve performance at zero storage overhead.
  - Sparse WAL: reduce WAL-induced write amplification at zero storage overhead.
  - Table-less hash-based KV store (hash function f: K → L, KV pairs loosely packed in LBA space L): high performance and low memory/CPU usage at zero storage overhead.

- 23. Thank You. www.scaleflux.com, info@scaleflux.com, tong.zhang@scaleflux.com