Load webinar dissemination

- 1. Welcome! Life of a Dataset: Disseminate Wednesday, January 30, 2013

- 2. Program Outline • Life of a Dataset, short introduction • Dissemination – Goal of Dissemination – Metadata – Release – Means of Dissemination – Post-Dissemination Enhancements

- 3. Life of a Dataset - the Web site • www.icpsr.umich.edu/icpsrweb/content/datamanagement/life-of-dataset.html

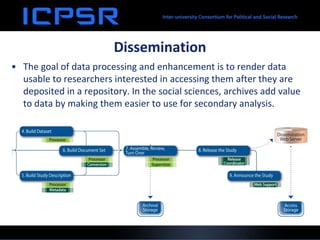

- 4. Dissemination • The goal of data processing and enhancement is to render data usable to researchers interested in accessing them after they are deposited in a repository. In the social sciences, archives add value to data by making them easier to use for secondary analysis.

- 5. Metadata • Technical documentation or the codebook – XML – eXtensible Markup Language • Developed by W3C, the governing body for all Web standards • For example: <question>Do you own your own home?</question> – DDI – Data Documentation Initiative (ddialliance.org) • Written in XML • Has rules specifically for describing social, behavioral and economic data • Offers authoring tools on Web site

- 8. Metadata Reviewer’s Duties • Build study-level and dataset-level metadata – Works cooperatively with the Processor to complete tasks needed to provide quality documentation to support data • Build document set – Performs quality checks on all documentation – Digitizes any hard copy documentation and converts it to PDF format – Assembles and conveys documentation to Processor

- 9. Release Manager’s Duties • Prepares data to be released through the Web site, SDA, and/or restricted data use agreements.

- 10. Web Manager’s Duties • Announce the new study – Register DOI – Post announcement to ICPSR blog and email list about recent updates and new studies

- 11. Means of Dissemination • Public and Members-Only Studies

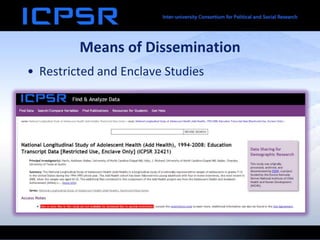

- 12. Means of Dissemination • Restricted and Enclave Studies

- 13. Post-Dissemination Enhancements • Instructional Materials – Data-Driven Learning Guides – Exercise Sets • Bibliography of Data-Related Literature

- 14. Email Lists

- 15. Contacts • Matthew Richardson, Web Project Manager, matvey@umich.edu, 734-615-7901 • Sue Hodge, Instructional Resources, shodge@umich.edu, 734-615-7850

- 16. Thank you! Life of a Dataset: Disseminate Wednesday, January 30, 2013

Editor's Notes

- #3: Welcome to the Life of a Dataset Webinar Series. This is the second in the series, Dissemination. In this webinar, you will learn how ICPSR works to protect data and the confidentiality of a study’s respondents. We will talk about the tools we use to secure data, the methods employed to ensure confidentiality, and the steps taken with the data and associated files to bring a codebook, a data file, and data in multiple usable formats to our website. We will be joined by Matthew Richardson, Web Project Manager for ICPSR.

- #4: The name of this webinar series is derived from a tour of ICPSR’s home during the OR Meeting in 2011. This meeting is held every other year on the campus of the University of Michigan in Ann Arbor. ICPSR invites its campus representatives from around the world to visit here for training. These representatives are known as Official Representatives, or ORs for short. The Life of a Dataset Tour was transformed into a poster session for the 50th Anniversary Open House held last year at ICPSR. The staff from each of the units in the tour created posters on their unit’s role in the data life cycle. As the ORs requested, a Web site has been built with a short description of the Life of a Dataset and the posters, which are downloadable there. The brochure from the tour is also available on the site. This series of webinars was also created in response to requests from the ORs, who wish to be able to share this information with faculty and students on their campuses. When completed, the webinars will reside on the ICPSR YouTube channel and will be linked from the Life of a Dataset page. Each webinar focuses on one of the three stages of the data life cycle: Deposit, Process, and Dissemination. The second page of the brochure, which is downloadable from this page, shows the pipeline through which data travel from deposit to dissemination. We’ll be looking at a processing piece of it shortly. If you need help with this page, please feel free to contact me. My contact information will be on a slide at the end of the webinar.

- #5: Access and Dissemination. ICPSR disseminates data to researchers, students, policymakers, and journalists around the world. Those downloading data or analyzing them online are expected to comply with standards of responsible use. Before gaining access to data, users are asked to read a Responsible Use Statement that says the following:
  - The datasets are to be used solely for statistical analysis and reporting aggregated information.
  - The confidentiality of research participants is to be guarded in all ways.
  - Anything that can potentially breach participants' confidentiality is to be reported promptly to ICPSR.
  - The data are not to be redistributed or sold to others without the written agreement of ICPSR.
  - The user will inform ICPSR of the use of the data in books, articles, and other forms of publication.

  ICPSR's commitment to access has the following objectives:
  - Support the repository's Designated Community: researchers, students, practitioners, and other users who need to obtain access to the ICPSR archive of social science data.
  - Ensure uninterrupted access to valuable data resources over time through technology changes.
  - Provide access to digital holdings in a manner consistent with the protection of the privacy of survey participants.
  - Support the objectives of sponsoring agencies that contract with ICPSR to make their data resources available to a wide audience.

  Let’s take a step back to the work that the Metadata Reviewers do in preparing documentation prior to the release of the data.

- #6: Metadata (often called technical documentation or the codebook) are critical to effective data use, as they convey information that is necessary to fully exploit the analytic potential of the data. ICPSR recommends using XML to create structured documentation compliant with the Data Documentation Initiative (DDI) metadata specification, an international standard for the content and exchange of documentation. XML stands for eXtensible Markup Language and was developed by the W3C, the governing body for all Web standards. Structured, XML-based metadata are ideal for documenting research data because the structure provides machine-actionability and the potential for metadata reuse.

  XML defines structured rules for tagging text in a way that allows the author to express semantic meaning in the markup. Thus, question text (for example, <question>Do you own your own home?</question>) can be tagged separately from the answer categories. This type of tagging embeds “intelligence” in the metadata and permits flexibility in rendering the information for display on the Web.

  Data Documentation Initiative (DDI). We encourage data producers to generate documentation that is tagged according to the DDI metadata specification, an emerging international standard for the content, presentation, transport, and preservation of documentation. The DDI specification is written in XML, which permits the markup, or tagging, of technical documentation content for retrieval and repurposing across the data life cycle. DDI provides a set of XML rules specifically for describing social, behavioral, and economic data. It is designed to encourage the use of a comprehensive set of elements to describe social science datasets, thereby providing the potential data analyst with broader knowledge about a given collection. In addition, DDI supports a life cycle orientation to data that is crucial for thorough understanding of a dataset. DDI enables the documentation of a project from its earliest stages through questionnaire development, data collection, archiving and dissemination, and beyond, with no metadata loss.

  Several XML authoring tools are available to facilitate the creation of DDI metadata. With a generic XML editor, the user imports the DDI rules (i.e., the DDI XML Schema) into the software and is then able to enter text for specific DDI elements and attributes. The resulting document is a valid DDI instance or file. There are also DDI-specific tools, such as Nesstar Publisher and Colectica, which produce DDI-compliant XML markup automatically. For more information on DDI and a list of tools and other XML resources, please consult the DDI Web site.

- #7: Since most standard computer programs will produce frequency distributions that show counts and percentages for each value of numeric variables, it may seem logical to use that information as the basis for documentation, but there are several reasons why this is not recommended. First, the output typically does not show the exact form of the question or item. Second, it does not contain other important information such as skip patterns, derivations of constructed variables, etc.

  A list of the most important items to include in social science metadata is presented below, with the corresponding Dublin Core Metadata Initiative (DCMI) element noted where applicable. The DCMI is a standard aimed at making it easier to describe and to find resources using the Internet. Some of the most important metadata are:
  - Principal investigator(s) [Dublin Core -- Creator]. Principal investigator name(s), and affiliation(s) at time of data collection.
  - Title [Dublin Core -- Title]. Official title of the data collection.
  - Funding sources. Names of funders, including grant numbers and related acknowledgments.
  - Data collector/producer. Persons or organizations responsible for data collection, and the date and location of data production.

- #8: The metadata editor is used by both the processor and metadata reviewer to ensure that the fields are as complete and correct as possible and that ICPSR naming and style conventions are observed. The citation for the study is drawn from the completed metadata editor and presented in the codebook and on the study’s homepage.

- #9: The reviewer is the person who receives the draft metadata record from the Processor and copy edits it for format, style, consistency, and compliance with ICPSR standards. This person creates the metadata database record and creates study description files in XML and PDF formats. In building the document set, she/he receives files from the Processor to be included in the codebook and documentation. The Reviewer prepares files by digitizing (if necessary), formatting, and converting them to PDF format. Quality checks are performed on all documents to ensure that all pages are present, that pagination and page layout are correct, and that all bookmarks are present and functional. Finally, the complete and correct documentation set is assembled and sent to the Processor for inclusion in the release of the study.

- #10: The Release Manager reviews the study submitted for release and, using the manifest file (a part of each download), ensures that all of the files are present and that all links and bookmarks function. The site is then re-indexed. For restricted-use studies that are being released, all documentation files will be present but there will be no link to the dataset.

- #11: The very last step in the life of a dataset is the announcement of its presence on the website. It is given a DOI (digital object identifier) that permanently points to the study, by which we mean the data and all of the documentation associated with it. The announcement is broadcast to those who have signed up for the email list on new and updated studies and is posted to the blog, which appears on our main page. Announcements are generally made weekly.

- #12: Studies that are held in externally funded archives such as NACJD or SAMHDA are publicly available, but access to datasets held in the General Archive is limited to students, faculty, or others affiliated with member institutions.

- #13: Data that are restricted in any way from download from our website will have this note attached to them and will require an application for access. This includes Enclave data.

- #14: Data-Driven Learning Guides are standardized exercises that include a statement of learning goals, a description of the focal concept in language similar to that found in an introductory textbook, a short description of the dataset, a series of data outputs and accompanying questions for students, a guide to interpreting the output, and a bibliography related to the topic discussed. They are categorized by the highest level of statistical analysis to assist with selecting appropriate exercises, but there is no assumption that any one activity must be used prior to any other.

  Exercise Sets are made up of sequenced activities. While assignments may be created using a few of the exercises in a set, the full package must be used to meet the stated learning objectives for each. Exercise Sets are often appropriate for Research Methods courses and more substantively focused courses. To name a few of these resources, we have:
  - Exploring Data Through Research Literature, developed by Rachael Barlow, Social Science Research and Data Coordinator at Trinity College
  - Investigating Community and Social Capital, developed by Lori M. Weber, professor of political science at CSU, Chico
  - Voting Behavior in the 2008 Election (SETUPS), developed by Charles Prysby, professor of political science at the University of North Carolina at Greensboro, and Carmine Scavo, associate professor of political science at East Carolina University in Greenville, North Carolina

  The ICPSR Bibliography of Data-related Literature is a searchable, continuously updated database that contains over 60,000 citations of known published and unpublished works resulting from analyses of data held in the ICPSR archive. The works include journal articles, books, book chapters, government and agency reports, working papers, dissertations, conference papers, meeting presentations, unpublished manuscripts, magazine and newspaper articles, and audiovisual materials. The Bibliography facilitates literature searches by social scientists, students, journalists, policymakers, and funding agencies.

- #15: If you are interested in receiving email about updates to studies or new studies in the archives, you can subscribe to our email lists. The lists are used primarily by the Official Representatives and other staff at member institutions, but the public is free to subscribe to them. Each of the lists has a primary focus, and the “Recent Updates and Additions List” may be useful for someone with an eye out for a change in a dataset currently held in one of our archives or for a new study to become available. We announce all new and updated studies to this email list, and that message is posted on the main page as an announcement.
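
As an illustration of the XML tagging described in note #6, here is a minimal Python sketch of how question text can be tagged separately from its answer categories and then pulled out by software. The element and attribute names (`variable`, `category`, `value`, `name`) and the variable name `OWNHOME` are assumptions made up for this example; they are not taken from the DDI schema, which should be consulted for the real element set. Only the `<question>` element comes from the slides.

```python
import xml.etree.ElementTree as ET

# Hypothetical markup for illustration only; these are NOT actual DDI elements.
SAMPLE = """
<variable name="OWNHOME">
  <question>Do you own your own home?</question>
  <category value="1">Yes</category>
  <category value="2">No</category>
</variable>
"""


def parse_variable(xml_text):
    """Return the question text and the answer categories as separate pieces."""
    root = ET.fromstring(xml_text.strip())
    # The question wording lives in its own element...
    question = root.findtext("question")
    # ...and each answer category is tagged separately, keyed by its code.
    categories = {c.get("value"): c.text for c in root.findall("category")}
    return {
        "name": root.get("name"),
        "question": question,
        "categories": categories,
    }


record = parse_variable(SAMPLE)
print(record["question"])
for value, label in record["categories"].items():
    print(f"  {value} = {label}")
```

Because the question and the categories are tagged separately, the same file could be rendered as a codebook page, searched by question wording, or reused in another study without retyping, which is the kind of flexibility the note refers to.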