
YUI

- 1. YUI

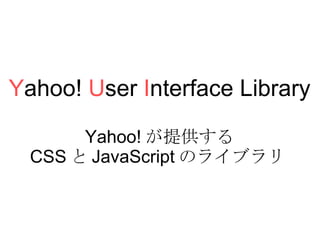

- 3. Yahoo! User Interface Library: a CSS and JavaScript library provided by Yahoo!

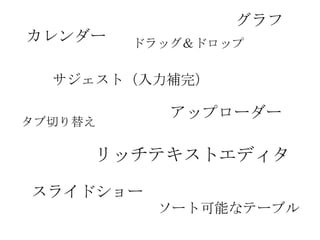

- 5. Calendar, rich text editor, uploader, drag & drop, tab switching, slideshow, suggest (autocomplete), graphs, sortable tables

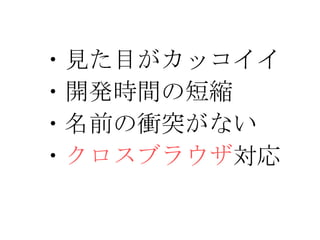

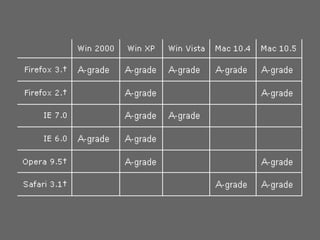

- 6. Advantages

- 7. Looks cool; shorter development time; no name collisions; cross-browser support
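The "no name collisions" advantage comes from YUI keeping everything under a single global object, `YAHOO`. YUI 2 also provides `YAHOO.namespace` for creating nested namespaces. The following is a minimal, hypothetical re-implementation of that idea in plain JavaScript. `MYAPP` and `createNamespace` are illustrative names, not YUI's code. Each level of a dotted path is created only if it is missing, so separate scripts can share one root object without overwriting each other.

```javascript
// Hypothetical sketch of the single-global-namespace idea behind YUI's
// "no name collisions" advantage; not YUI's own source code.
const MYAPP = {}; // the only global name this library would claim

// Walk a dotted path such as "widget" or "util.dom", creating each level only if it is missing
function createNamespace(root, path) {
  let current = root;
  for (const part of path.split(".")) {
    current[part] = current[part] || {};
    current = current[part];
  }
  return current;
}

// Two independent scripts register under the same root without overwriting each other
createNamespace(MYAPP, "widget").Calendar = function Calendar() {};
createNamespace(MYAPP, "widget").TabView = function TabView() {};

console.log(Object.keys(MYAPP.widget)); // [ 'Calendar', 'TabView' ]
```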


- 9. Disadvantages

- 11. Let's try it out!

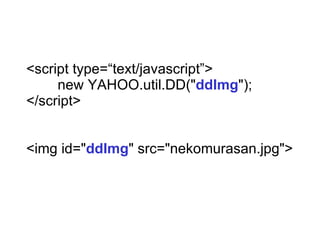

- 13.

```html
<script type="text/javascript">
  // Make the element with id "ddImg" draggable
  new YAHOO.util.DD("ddImg");
</script>
<img id="ddImg" src="nekomurasan.jpg">
```
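Any drag-and-drop control, including the one in the drag-and-drop example above, has to keep the element at a fixed offset from the pointer while it moves. The following is a hypothetical, framework-free sketch of that arithmetic. It does not show how `YAHOO.util.DD` is written internally, and `grabOffset` and `dragTo` are illustrative names. It records the offset at mousedown, then subtracts it from every new pointer position.

```javascript
// Hypothetical sketch of the position math behind drag and drop (not YUI's DD code).

// At mousedown: remember where inside the element the user grabbed it
function grabOffset(pointer, elementPos) {
  return { x: pointer.x - elementPos.x, y: pointer.y - elementPos.y };
}

// At each mousemove: place the element so the grab point stays under the pointer
function dragTo(pointer, offset) {
  return { x: pointer.x - offset.x, y: pointer.y - offset.y };
}

// Element at (100, 50) grabbed at pointer (110, 60); the pointer then moves to (200, 120)
const offset = grabOffset({ x: 110, y: 60 }, { x: 100, y: 50 });
const newPos = dragTo({ x: 200, y: 120 }, offset);
console.log(newPos); // { x: 190, y: 110 }
```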

- 14. Tab switching

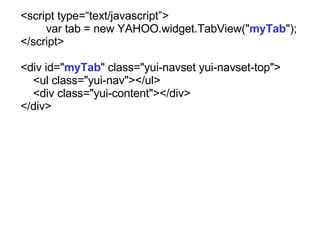

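- 15.

```html
<script type="text/javascript">
  // Build a tab control from the markup inside #myTab
  var tab = new YAHOO.widget.TabView("myTab");
</script>
<div id="myTab" class="yui-navset yui-navset-top">
  <ul class="yui-nav"></ul>       <!-- tab labels go here -->
  <div class="yui-content"></div> <!-- one content div per tab -->
</div>
```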

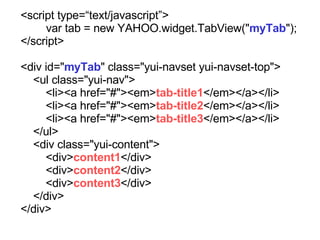

- 16.

```html
<script type="text/javascript">
  var tab = new YAHOO.widget.TabView("myTab");
</script>
<div id="myTab" class="yui-navset yui-navset-top">
  <ul class="yui-nav">
    <li><a href="#"><em>tab-title1</em></a></li>
    <li><a href="#"><em>tab-title2</em></a></li>
    <li><a href="#"><em>tab-title3</em></a></li>
  </ul>
  <div class="yui-content">
    <div>content1</div>
    <div>content2</div>
    <div>content3</div>
  </div>
</div>
```

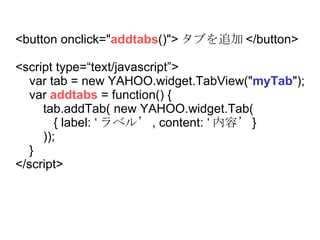

- 17.

```html
<button onclick="addtabs()">Add tab</button>
<script type="text/javascript">
  var tab = new YAHOO.widget.TabView("myTab");
  // Append a new tab each time the button is clicked
  var addtabs = function() {
    tab.addTab(new YAHOO.widget.Tab({
      label: 'Label',
      content: 'Content'
    }));
  };
</script>
```
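Conceptually, a tab control like the `TabView` in the tab examples above holds two pieces of state: a list of tabs and the index of the active tab. `addTab` appends to that list, and the markup pairs the i-th list item with the i-th content `div`. The following is a hypothetical, DOM-free model of that state. `TabModel`, `select` and `activeContent` are illustrative names, not YUI's API. Starting on the first tab is this sketch's own choice.

```javascript
// Hypothetical, DOM-free model of tab-switching state; not YUI's TabView source.
class TabModel {
  constructor(labels, contents) {
    // Pair the i-th label with the i-th content block, mirroring the markup
    this.tabs = labels.map((label, i) => ({ label, content: contents[i] }));
    // This sketch simply starts on the first tab (or none if there are no tabs)
    this.activeIndex = this.tabs.length > 0 ? 0 : -1;
  }

  // Append a tab at the end and return its index
  addTab(label, content) {
    this.tabs.push({ label, content });
    return this.tabs.length - 1;
  }

  // Switch to another tab
  select(index) {
    if (index < 0 || index >= this.tabs.length) {
      throw new RangeError("No tab at index " + index);
    }
    this.activeIndex = index;
  }

  activeContent() {
    return this.activeIndex >= 0 ? this.tabs[this.activeIndex].content : null;
  }
}

const model = new TabModel(
  ["tab-title1", "tab-title2", "tab-title3"],
  ["content1", "content2", "content3"]
);
const added = model.addTab("Label", "Content");
model.select(added);
console.log(model.activeContent()); // Content
```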

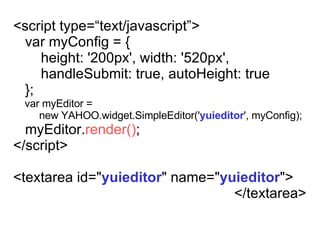

- 19.

```html
<script type="text/javascript">
  var myConfig = {
    height: '200px',
    width: '520px',
    handleSubmit: true, // save the editor's HTML into the textarea when the form is submitted
    autoHeight: true    // let the editing area grow to fit its content
  };
  var myEditor = new YAHOO.widget.SimpleEditor('yuieditor', myConfig);
  myEditor.render();
</script>
<textarea id="yuieditor" name="yuieditor"></textarea>
```

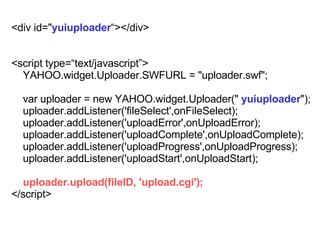

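- 21.

```html
<div id="yuiuploader"></div>
<script type="text/javascript">
  // Location of the Flash movie the Uploader control embeds
  YAHOO.widget.Uploader.SWFURL = "uploader.swf";
  var uploader = new YAHOO.widget.Uploader("yuiuploader");

  uploader.addListener('fileSelect', onFileSelect);
  uploader.addListener('uploadError', onUploadError);
  uploader.addListener('uploadComplete', onUploadComplete);
  uploader.addListener('uploadProgress', onUploadProgress);
  uploader.addListener('uploadStart', onUploadStart);

  // A file ID only exists after the user picks a file, so upload from the fileSelect handler
  function onFileSelect(event) {
    for (var item in event.fileList) {
      if (YAHOO.lang.hasOwnProperty(event.fileList, item)) {
        uploader.upload(event.fileList[item].id, 'upload.cgi');
      }
    }
  }
  // Placeholder handlers; replace with real UI updates (e.g. a progress bar)
  function onUploadStart(event) {}
  function onUploadProgress(event) {}
  function onUploadComplete(event) {}
  function onUploadError(event) {}
</script>
```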
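According to the YUI 2 Uploader documentation, the `uploadProgress` event that the uploader example listens for carries `bytesLoaded` and `bytesTotal` for the file being sent. Those two numbers are enough to drive a progress bar. The following is a minimal sketch that assumes that event shape. `progressPercent` and the `#progress` element are hypothetical names. The function itself is plain JavaScript, so it runs outside a browser.

```javascript
// Minimal sketch: turn an uploadProgress event into a whole-number percentage.
// Assumes the event carries bytesLoaded and bytesTotal (YUI 2 Uploader's event shape).
function progressPercent(event) {
  if (!event.bytesTotal) return 0; // avoid dividing by zero before the size is known
  const ratio = event.bytesLoaded / event.bytesTotal;
  return Math.min(100, Math.round(ratio * 100));
}

// Example wiring (browser only), using a hypothetical <div id="progress"> bar:
// function onUploadProgress(event) {
//   document.getElementById("progress").style.width = progressPercent(event) + "%";
// }

console.log(progressPercent({ bytesLoaded: 512, bytesTotal: 2048 })); // 25
```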

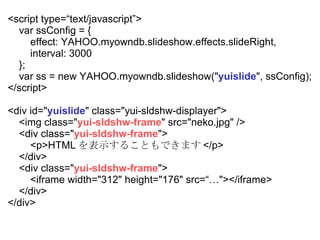

- 23. Slideshow (note: this uses a separate script, slideshow.js)

- 24.

```html
<script type="text/javascript">
  var ssConfig = {
    effect: YAHOO.myowndb.slideshow.effects.slideRight, // transition between frames
    interval: 3000                                      // time between frames (presumably ms)
  };
  var ss = new YAHOO.myowndb.slideshow("yuislide", ssConfig);
</script>
<div id="yuislide" class="yui-sldshw-displayer">
  <img class="yui-sldshw-frame" src="neko.jpg" />
  <div class="yui-sldshw-frame">
    <p>You can display HTML, too</p>
  </div>
  <div class="yui-sldshw-frame">
    <iframe width="312" height="176" src="…"></iframe>
  </div>
</div>
```
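With `interval: 3000` in the slideshow configuration, and assuming the interval is in milliseconds and the show loops, the slideshow moves to the next frame every three seconds and wraps back to the first frame after the last one. The following is a hypothetical sketch of that timing logic in plain JavaScript. It does not reproduce `slideshow.js` or its `slideRight` effect, and `nextFrame` and `startSlideshow` are illustrative names.

```javascript
// Hypothetical sketch of slideshow timing (not slideshow.js itself):
// advance one frame per interval and wrap around after the last frame.
function nextFrame(current, frameCount) {
  return (current + 1) % frameCount;
}

// Shows frame 0 immediately, then calls show(index) every intervalMs milliseconds.
// Returns a function that stops the show.
function startSlideshow(frameCount, intervalMs, show) {
  let current = 0;
  show(current);
  const timer = setInterval(() => {
    current = nextFrame(current, frameCount);
    show(current);
  }, intervalMs);
  return () => clearInterval(timer);
}

// Usage (three frames: image, HTML, iframe; one every 3000 ms):
// const stop = startSlideshow(3, 3000, (i) => console.log("showing frame", i));
// ...later: stop();
```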

- 25. Summary

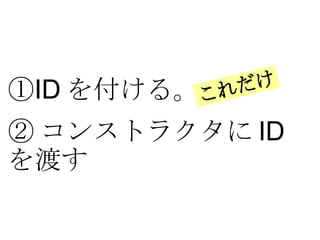

- 26. ① Give the element an ID. ② Pass that ID to the constructor. That's all!
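The two-step recipe in the summary works because each constructor receives an element ID and looks the element up itself. YUI 2 provides `YAHOO.util.Dom.get` for this lookup, and it accepts either an ID string or an element. The following is a minimal, hypothetical sketch of such a lookup. `resolveElement` and `Widget` are illustrative names. The document object is passed in as a parameter so the logic can run outside a browser.

```javascript
// Hypothetical sketch of the "pass an ID to the constructor" pattern.
// Accepts either an ID string or an element object, similar to YAHOO.util.Dom.get.
function resolveElement(elOrId, doc) {
  if (typeof elOrId === "string") {
    const el = doc.getElementById(elOrId);
    if (!el) throw new Error('No element with id "' + elOrId + '"');
    return el;
  }
  return elOrId; // already an element
}

// A toy widget constructor following the same convention
function Widget(elOrId, doc) {
  this.element = resolveElement(elOrId, doc);
}

// In a browser: new Widget("myTab", document);
// Outside a browser, a stand-in document object is enough to try it:
const fakeDoc = { getElementById: (id) => (id === "myTab" ? { id: "myTab" } : null) };
console.log(new Widget("myTab", fakeDoc).element.id); // myTab
```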

- 27. YUI++: use YUI to build cool-looking web pages in no time!