CNN-RNN: A Unified Framework for Multi-label Image Classification @ CV Study Group #35 (CVPR 2016 reading session)


35th Computer Vision Study Group @ Kanto (part 2)




- 1. CNN-RNN: A Unified Framework for Multi-label Image Classification
  - 2016/7/24 @ CV Study Group (CV勉強会)
  - Toshiki Sakai (酒井 俊樹)

- 2. About me
  - Name: Toshiki Sakai (@104kisakai)
  - Affiliation: NTT DOCOMO
  - Work: R&D of image recognition APIs and services (NTT)
    - Image recognition using local features: https://www.nttdocomo.co.jp/binary/pdf/corporate/technology/rd/technical_journal/bn/vol23_1/vol23_1_004jp.pdf
    - Image recognition using deep learning: https://www.nttdocomo.co.jp/binary/pdf/corporate/technology/rd/technical_journal/bn/vol24_1/vol24_1_007jp.pdf
    - Image recognition API: https://dev.smt.docomo.ne.jp
  - This talk is given in a personal capacity and is not affiliated with my employer.

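- 3. Overview of the paper
  - CNN-RNN: A Unified Framework for Multi-label Image Classification
    - Authors: Jiang Wang et al.
    - Work from Baidu Research (several of the authors have since changed jobs)
  - Summary
    - Proposes a multi-label image classification framework that combines a CNN and an RNN
      - Accuracy exceeds the state of the art (SOTA)
    - Visualizes which part of the image each label attends to (attention)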

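- 4. Multi-label Image Classification
  - Images taken by smartphone users
    - Various objects and scenes are mixed together
    - Images that were not taken "for image recognition" are hard to recognize and tag
  - Image recognition with CNNs (personal view)
    - Single-label classification
      - Accuracy is high
    - Instance-based recognition
      - Often more than is actually needed
      - Cannot recognize the scene or activity of the image as a whole
  - Figure labels: sheep, dog, grass, ranch, sky, travel (multi-label image classification)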

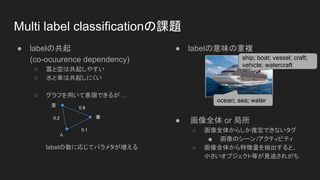

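- 5. Challenges in multi-label classification
  - Label co-occurrence (co-occurrence dependency)
    - Cloud and sky tend to co-occur
    - Water and car rarely co-occur
    - This can be represented with a graph, but the number of parameters grows with the number of labels
  - Overlapping label semantics
  - Whole image or local region
    - Some tags can only be inferred from the whole image
      - The scene or activity of the image
    - Extracting features from the whole image tends to miss small objects
  - Figure labels: a co-occurrence graph over sky, cloud, and person with edge weights 0.8, 0.1, 0.2; overlapping label groups "ocean; sea; water" and "ship; boat; vessel; craft; vehicle; watercraft"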

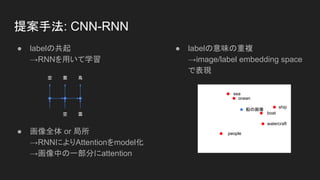

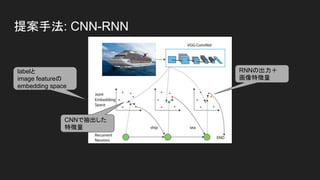

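- 6. Proposed method: CNN-RNN
  - Label co-occurrence
    - Learned with an RNN
  - Whole image or local region
    - Attention is modeled by the RNN
    - The model attends to one part of the image
  - Overlapping label semantics
    - Represented in a joint image/label embedding space
  - Figure labels: sky, cloud, sky, cloud, bird, ocean, sea, ship, boat, watercraft, people, image of a ship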

- 8. Proposed method: CNN-RNN
  - The one-hot vector of a label is converted to a vector in the embedding space using the matrix $U_l$
  - Vector sum
  - Converted back to labels

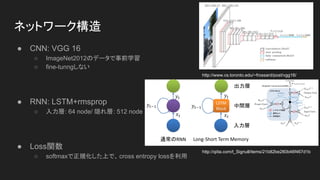
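The three steps on slide 8 (embed a one-hot label, take a vector sum, map back to labels) can be sketched in plain Python as follows. This is only an illustrative sketch: the toy embedding table `U`, the two-dimensional vectors, and the helper names are assumptions made for the example, and the dot-product scoring against the same embedding table is one plausible reading of "converted back to labels", not a statement of the authors' implementation.

```python
from typing import List

Vector = List[float]


def one_hot(index: int, num_labels: int) -> Vector:
    """One-hot vector e_k for label k."""
    return [1.0 if i == index else 0.0 for i in range(num_labels)]


def embed_label(e_k: Vector, label_embeddings: List[Vector]) -> Vector:
    """Multiply the one-hot vector by the embedding table (selects one row)."""
    dim = len(label_embeddings[0])
    return [
        sum(e_k[k] * label_embeddings[k][d] for k in range(len(e_k)))
        for d in range(dim)
    ]


def vector_sum(a: Vector, b: Vector) -> Vector:
    """Element-wise sum of two vectors in the embedding space."""
    return [x + y for x, y in zip(a, b)]


def label_scores(x: Vector, label_embeddings: List[Vector]) -> List[float]:
    """Score each label by the dot product of its embedding with x."""
    return [sum(w_d * x_d for w_d, x_d in zip(w, x)) for w in label_embeddings]


# Toy values, invented for illustration only.
LABELS = ["sky", "cloud", "ship"]
U = [[1.0, 0.0], [0.8, 0.2], [0.0, 1.0]]  # one embedding row per label

w_sky = embed_label(one_hot(0, len(LABELS)), U)

recurrent_part = [0.5, 0.1]  # hypothetical projected RNN output
image_part = [0.4, 0.1]      # hypothetical projected CNN feature
x = vector_sum(recurrent_part, image_part)

scores = label_scores(x, U)
best_label = LABELS[max(range(len(LABELS)), key=scores.__getitem__)]
print(w_sky, best_label)
```

Because the one-hot vector has a single non-zero entry, the multiplication simply selects one row of the table, which is why embedding lookups are usually implemented as indexing.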

- 9. Network architecture
  - CNN: VGG 16
    - Pre-trained on ImageNet 2012 data
    - Not fine-tuned
  - RNN: LSTM + RMSProp
    - Input layer: 64 nodes / hidden layer: 512 nodes
  - Loss function
    - Cross-entropy loss applied after softmax normalization
  - Links: http://www.cs.toronto.edu/~frossard/post/vgg16/ and http://qiita.com/t_Signull/items/21b82be280b46f467d1b
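The loss on slide 9 (softmax normalization followed by cross entropy) can be written out as a small sketch. It assumes one target label per time step, which is an assumption of this example rather than something the slide states; the function names are hypothetical.

```python
import math
from typing import List


def softmax(scores: List[float]) -> List[float]:
    """Normalize raw label scores into probabilities."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(scores: List[float], target_index: int) -> float:
    """Negative log-probability of the target label under the softmax."""
    return -math.log(softmax(scores)[target_index])


# Toy scores for three labels; the target is label 0.
loss_good = cross_entropy([2.0, 1.0, 0.1], target_index=0)
loss_bad = cross_entropy([0.1, 1.0, 2.0], target_index=0)
print(loss_good < loss_bad)
```

The loss is small when the target label already has the highest score and grows as probability mass moves to other labels.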

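- 10. In what order should labels be predicted?
  - There is no single correct order in which to predict labels, so the labels are predicted greedily
  - Problem with greedy prediction
    - If the very first prediction is wrong, everything after it goes wrong as well
    - The method therefore predicts the top-K labels at each time step t and runs a beam search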
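The beam search idea on slide 10 can be sketched as below. This is a generic beam search over label sequences, not the authors' code: the `END` token, the rule that a label appears at most once, and the toy scoring function `toy_model` are all assumptions made for illustration. In a real system `step_log_probs` would be the RNN's softmax output given the labels predicted so far.

```python
import math
from typing import Callable, Dict, List, Tuple

END = "<end>"  # hypothetical end-of-sequence token
Sequence = Tuple[str, ...]


def beam_search(
    step_log_probs: Callable[[Sequence], Dict[str, float]],
    beam_width: int,
    max_len: int,
) -> List[Tuple[Sequence, float]]:
    """Keep the beam_width best partial label sequences at each step."""
    beams: List[Tuple[Sequence, float]] = [((), 0.0)]
    finished: List[Tuple[Sequence, float]] = []
    for _ in range(max_len):
        candidates = []
        for prefix, score in beams:
            for label, logp in step_log_probs(prefix).items():
                if label in prefix:
                    continue  # assume each label is emitted at most once
                candidates.append((prefix + (label,), score + logp))
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for seq, score in candidates[:beam_width]:
            if seq[-1] == END:
                finished.append((seq[:-1], score))  # drop the END token
            else:
                beams.append((seq, score))
        if not beams:
            break
    finished.extend(beams)  # sequences cut off at max_len
    return sorted(finished, key=lambda c: c[1], reverse=True)


def toy_model(prefix: Sequence) -> Dict[str, float]:
    """Invented probabilities where the greedy first choice is a dead end."""
    table = {
        (): {"cloud": 0.5, "sky": 0.4, END: 0.1},
        ("cloud",): {"sky": 0.3, "bird": 0.3, END: 0.4},
        ("sky",): {"cloud": 0.05, END: 0.95},
    }
    probs = table.get(prefix, {END: 1.0})
    return {label: math.log(p) for label, p in probs.items()}


greedy_best = beam_search(toy_model, beam_width=1, max_len=3)[0][0]
beam_best = beam_search(toy_model, beam_width=2, max_len=3)[0][0]
print(greedy_best, beam_best)
```

With a beam width of 1 the search is greedy and commits to the locally best first label; widening the beam lets a sequence with a weaker first step win on total score.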

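- 11. Data
  - Three benchmarks are used
    - NUS-WIDE: 269,648 images from Flickr, 1000 tags + 81 tags from human annotators
    - MS COCO: 123,000 images, 80 classes
    - PASCAL VOC 2007: 9,963 images
  - Order in which labels are fed in during training
    - Determined by how frequently each label occurs in the training data (the intent is to predict easy, typical labels first)
    - Randomizing the order, or using a more sophisticated ordering method [28], made no particular difference
    - Randomizing the order for every mini-batch prevented training from converging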

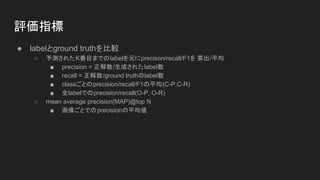
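The frequency-based label ordering described on slide 11 could look like the following sketch. The function names and the toy training set are hypothetical, and breaking ties alphabetically is an assumption added so the order is deterministic; the slide does not say how ties are handled.

```python
from collections import Counter
from typing import Dict, Iterable, List, Set


def frequency_rank(train_label_sets: Iterable[Set[str]]) -> Dict[str, int]:
    """Rank labels from most to least frequent in the training data."""
    counts = Counter(label for labels in train_label_sets for label in labels)
    # Ties are broken alphabetically (an assumption of this sketch).
    ordered = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return {label: rank for rank, (label, _) in enumerate(ordered)}


def order_labels(labels: Set[str], rank: Dict[str, int]) -> List[str]:
    """Turn an unordered label set into a training sequence."""
    return sorted(labels, key=lambda label: rank[label])


# Toy training annotations, invented for illustration.
train = [{"sky", "cloud"}, {"sky", "person"}, {"sky", "cloud", "bird"}]
rank = frequency_rank(train)
sequence = order_labels({"bird", "cloud", "sky"}, rank)
print(sequence)
```

Using one fixed global ranking means every image presents its labels in a consistent order, which matches the observation on the slide that reshuffling the order per mini-batch did not converge.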

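- 12. Evaluation metrics
  - Compare the predicted labels with the ground truth
    - Compute and average precision/recall/F1 from the top-K predicted labels
      - precision = number of correct labels / number of generated labels
      - recall = number of correct labels / number of ground-truth labels
      - Per-class average of precision/recall/F1 (C-P, C-R)
      - Precision/recall over all labels (O-P, O-R)
    - Mean average precision (MAP) @ top N
      - The mean of the per-image precision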

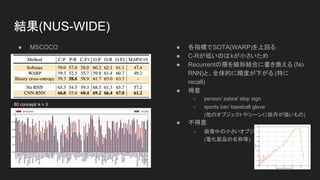
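The per-class and overall metrics from slide 12 can be computed as in this sketch. The function names and the toy predictions are invented for illustration, and returning 0.0 when a denominator is zero is an assumption of the example, since the slide does not cover that case.

```python
from typing import Dict, List, Set


def ratio(numerator: int, denominator: int) -> float:
    """Safe division; returns 0.0 for an empty denominator (assumption)."""
    return numerator / denominator if denominator else 0.0


def multilabel_scores(
    predictions: List[Set[str]],
    ground_truths: List[Set[str]],
    classes: List[str],
) -> Dict[str, float]:
    """Per-class (C-P, C-R) and overall (O-P, O-R) precision and recall."""
    correct = {c: 0 for c in classes}
    predicted = {c: 0 for c in classes}
    actual = {c: 0 for c in classes}
    for pred, truth in zip(predictions, ground_truths):
        for c in pred:
            predicted[c] += 1
            if c in truth:
                correct[c] += 1
        for c in truth:
            actual[c] += 1
    n = len(classes)
    return {
        "C-P": sum(ratio(correct[c], predicted[c]) for c in classes) / n,
        "C-R": sum(ratio(correct[c], actual[c]) for c in classes) / n,
        "O-P": ratio(sum(correct.values()), sum(predicted.values())),
        "O-R": ratio(sum(correct.values()), sum(actual.values())),
    }


def f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return ratio(2 * precision * recall, precision + recall) if precision + recall else 0.0


# Toy predictions (top-K label sets) and ground truth for three images.
classes = ["sky", "cloud", "person"]
preds = [{"sky", "cloud"}, {"sky", "person"}, {"cloud", "person"}]
truths = [{"sky", "cloud"}, {"sky"}, {"person", "sky"}]
scores = multilabel_scores(preds, truths, classes)
print(scores, f1(scores["O-P"], scores["O-R"]))
```

Per-class averaging gives every class equal weight regardless of how often it occurs, while the overall figures are dominated by frequent labels, which is why the two sets of numbers can differ noticeably.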

- 13. Results
  - NUS-WIDE
  - MS COCO

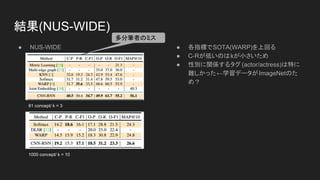

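- 14. Results (NUS-WIDE)
  - NUS-WIDE: 81 concepts with k = 3; 1000 concepts with k = 10
  - Exceeds the SOTA (WARP) on every metric
  - C-R is low because k is small
  - Gender-related tags (actor/actress) were especially hard, possibly because the training data was ImageNet (probably a mistake by the authors)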

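- 15. Results (MS COCO)
  - MS COCO: 80 concepts with k = 3
  - Exceeds the SOTA (WARP) on every metric
  - C-R is low because k is small
  - Replacing the recurrent layer with a linear combination (No RNN) lowers accuracy across the board, especially recall
  - Strong on
    - person / zebra / stop sign
    - sports bar / baseball glove (labels that depend strongly on other objects or on the scene)
  - Weak on
    - Fine distinctions between small objects in the image (for example, names of electrical appliances)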

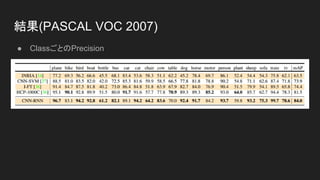

- 16. Results (PASCAL VOC 2007)
  - Precision per class

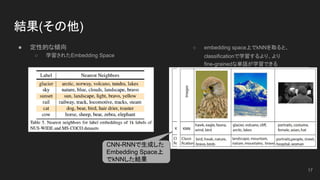

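- 17. Results (other)
  - Qualitative trends
    - The learned embedding space
    - Taking the k nearest neighbors (kNN) in the embedding space yields more fine-grained words than training with classification does
  - Figure caption: kNN results in the embedding space generated by CNN-RNN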

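- 18. Results (other)
  - Visualizing attention
    - Attention is visualized with a deconvolutional network, a network that reconstructs which parts of the image produced a strong response [Zeiler et al 2010]
    - The figure shows how attention changes when the model predicts elephant and then zebra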

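- 19. Summary
  - Proposes a multi-label image classification framework that combines a CNN and an RNN
    - Accuracy exceeds the SOTA
    - Duplicate labels are less likely to be output
    - Attention can also be visualized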
