Dimensionality Reduction : Above PCA

Topics Covered : 1. SVD 2. Non-Linear Dimensionality Reduction and important types 3. ISOMAP 4. SNE 5. t-SNE
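As a rough illustration of the first topic (SVD), here is a minimal sketch of how the leading singular triplet of a small data matrix can be approximated by power iteration and then used to project each row onto one dimension. The function names `top_singular_triplet` and `reduce_to_1d`, the iteration count, and the toy matrix are assumptions made for this example and do not come from the slides. In practice a library routine such as `numpy.linalg.svd` would normally be used instead.

```python
import math


def top_singular_triplet(rows, iterations=200):
    """Approximate the largest singular value and its vectors by power iteration."""
    n_cols = len(rows[0])
    # Start from a uniform unit vector (an arbitrary choice for this sketch;
    # it must not be orthogonal to the true top right singular vector).
    v = [1.0 / math.sqrt(n_cols)] * n_cols
    for _ in range(iterations):
        # Apply A^T A to v without forming the product matrix: w = A^T (A v).
        av = [sum(a * b for a, b in zip(row, v)) for row in rows]
        w = [sum(rows[i][j] * av[i] for i in range(len(rows)))
             for j in range(n_cols)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break
        v = [x / norm for x in w]
    # sigma = ||A v|| and u = A v / sigma for the converged direction v.
    av = [sum(a * b for a, b in zip(row, v)) for row in rows]
    sigma = math.sqrt(sum(x * x for x in av))
    u = [x / sigma for x in av] if sigma > 0 else av
    return u, sigma, v


def reduce_to_1d(rows):
    """Project each row onto the top right singular vector."""
    _, _, v = top_singular_triplet(rows)
    return [sum(a * b for a, b in zip(row, v)) for row in rows]


# Toy matrix (hypothetical data): singular values are 3 and 1.
data = [[3.0, 0.0], [0.0, 1.0]]
u, sigma, v = top_singular_triplet(data)
coords = reduce_to_1d(data)
```

Note that this sketch does not center the data first. Centering the columns before the decomposition is what would make the projection coincide with the first principal component of PCA.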

Recommended

Principle Component Analysis

Utkarsh Sharma

This document discusses principal component analysis (PCA), a dimensionality reduction technique. It explains that PCA calculates the covariance matrix of the data, finds the eigenvectors and eigenvalues, and uses the top eigenvectors as principal components to represent the data in a lower-dimensional space while preserving as much information as possible. The original data can then be approximately reconstructed from the lower-dimensional representation.

SVD.ppt

cmpt cmpt

Dimensionality reduction techniques like principal component analysis (PCA) and singular value decomposition (SVD) are important for analyzing high-dimensional data by finding patterns in the data and expressing the data in a lower-dimensional space. PCA and SVD decompose a data matrix into orthogonal principal components/singular vectors that capture the maximum variance in the data, allowing the data to be represented in fewer dimensions without losing much information. Dimensionality reduction is useful for visualization, removing noise, discovering hidden correlations, and storing and processing the data more efficiently.

Machine Learning Foundations for Professional Managers

Albert Y. C. Chen

20180526@Taiwan AI Academy, Professional Managers Class.

Covering important concepts of classical machine learning, in preparation for deep learning topics to follow. Topics include regression (linear, polynomial, Gaussian and sigmoid basis functions), dimension reduction (PCA, LDA, ISOMAP), clustering (K-means, GMM, Mean-Shift, DBSCAN, Spectral Clustering), classification (Naive Bayes, Logistic Regression, SVM, kNN, Decision Tree, Classifier Ensembles, Bagging, Boosting, AdaBoost) and semi-supervised learning techniques. Emphasis on sampling, probability, the curse of dimensionality, decision theory and classifier generalizability.

Mini_Project

Ashish Yadav

This document discusses data analysis and dimensionality reduction techniques including PCA and LDA. It provides an overview of feature transformation and why it is needed for dimensionality reduction. It then describes the steps of PCA: standardizing the data, obtaining eigenvalues and eigenvectors, selecting principal components, building the projection matrix, and projecting into the feature space. The steps of LDA are also outlined: computing mean vectors, scatter matrices, eigenvectors and eigenvalues, selecting linear discriminants, and transforming samples. Examples applying PCA and LDA to iris and web datasets are presented.

Parallel Algorithms for Geometric Graph Problems (at Stanford)

Grigory Yaroslavtsev

This document summarizes work on developing parallel algorithms for approximating problems on geometric graphs. Specifically, it presents algorithms for computing a (1+ε)-approximate minimum spanning tree (MST) and earth-mover distance in O(1) rounds of parallel computation using a "solve-and-sketch" framework. The MST algorithm imposes a randomly shifted grid tree and computes MSTs within cells, using only short edges and representative points between cells. This achieves an approximation ratio of 1+O(ε) in O(1) rounds. The framework is also extended to compute a (1+ε)-approximate transportation cost.

Automated attendance system based on facial recognition

Dhanush Kasargod

A MATLAB-based system to take attendance in a classroom automatically using a camera. This project was carried out as a final-year project in our Electronics and Communications Engineering course. I have uploaded the entire MATLAB code to mathworks.com. The entire report will also be available on my academia.edu page. I would be delighted to hear from you.

machine learning.pptx

AbdusSadik

- Dimensionality reduction techniques assign instances to vectors in a lower-dimensional space while approximately preserving similarity relationships. Principal component analysis (PCA) is a common linear dimensionality reduction technique.

- Kernel PCA performs PCA in a higher-dimensional feature space implicitly defined by a kernel function. This allows PCA to find nonlinear structure in data. Kernel PCA computes the principal components by finding the eigenvectors of the normalized kernel matrix.

- For a new data point, its representation in the lower-dimensional space is given by projecting it onto the principal components in feature space using the kernel trick, without explicitly computing features.

ch10-graphs2.pptx

arkian3

The document describes a generative model for networks called the Affiliation Graph Model (AGM). The AGM models how communities in a network "generate" the edges between nodes. It represents each node's membership in multiple communities as strengths in a membership matrix. The probability of an edge between two nodes depends on the product of their membership strengths in common communities. Maximum likelihood estimation can be used to estimate the community membership strength matrix that best explains a given network.

DimensionalityReduction.pptx

36rajneekant

This document provides an overview of dimensionality reduction techniques. It discusses how increasing dimensionality can negatively impact classification accuracy due to the curse of dimensionality. Dimensionality reduction aims to select an optimal set of features of lower dimensionality to improve accuracy. Feature extraction and feature selection are two common approaches. Principal component analysis (PCA) is described as a popular linear feature extraction method that projects data to a lower-dimensional space while preserving as much variance as possible.

Data Mining Lecture_9.pptx

Subrata Kumer Paul

Lecture 9: Dimensionality Reduction, Singular Value Decomposition (SVD), Principal Component Analysis (PCA). (ppt, pdf)

Appendices A, B from the book “Introduction to Data Mining” by Tan, Steinbach, Kumar.

Lect4 principal component analysis-I

hktripathy

Principal Component Analysis (PCA) is a technique used to reduce the dimensionality of data by transforming it to a new coordinate system. It works by finding the principal components (linear combinations of variables with the highest variance) and using those to project the data to a lower-dimensional space. PCA is useful for visualizing high-dimensional data, reducing dimensions without much loss of information, and finding patterns. It involves calculating the covariance matrix and solving the eigenvalue problem to determine the principal components.

Explainable algorithm evaluation from lessons in education

CSIRO

How can we evaluate a portfolio of algorithms to extract meaningful interpretations about them? Suppose we have a set of algorithms. These can be classification, regression, clustering or any other type of algorithm. And suppose we have a set of problems that these algorithms can work on. We can evaluate these algorithms on the problems and get the results. From these results, can we explain the algorithms in a meaningful way? The easy option is to find which algorithm performs best for each problem and find the algorithm that performs best on the greatest number of problems. But there is a limitation with this approach. We are only looking at the overall best! Suppose a certain algorithm gives the best performance on hard problems, but not on easy problems. We would miss this algorithm by using the “overall best” approach. How do we obtain a salient set of algorithm features?

Predictive Modelling

Rajiv Advani

Predictive modeling aims to generate accurate estimates of future outcomes by analyzing current and historical data using statistical and machine learning techniques. It involves gathering data, exploring the data, building predictive models using algorithms like regression, decision trees, and neural networks, and evaluating the models. Some common predictive modeling techniques include time series analysis, regression analysis, and clustering algorithms.

Distributed Architecture of Subspace Clustering and Related

Pei-Che Chang

Distributed Architecture of Subspace Clustering and Related

Sparse Subspace Clustering

Low-Rank Representation

Least Squares Regression

Multiview Subspace Clustering

Data Science and Machine Learning with Tensorflow

Shubham Sharma

Importance of Machine Learning and AI - Emerging applications, end-use

Pictures (Amazon recommendations, Driverless Cars)

Relationship between Data Science and AI

Overall structure and components

What tools can be used - technologies, packages

List of tools and their classification

List of frameworks

Artificial Intelligence and Neural Networks

Basics Of ML,AI,Neural Networks with implementations

Machine Learning Depth : Regression Models

Linear Regression : Math Behind

Non Linear Regression : Math Behind

Machine Learning Depth : Classification Models

Decision Trees : Math Behind

Deep Learning

Mathematics Behind Neural Networks

Terminologies

What are the opportunities for data analytics professionals

cnn.pptx

sghorai

Computer vision uses machine learning techniques to recognize objects in large numbers of images. A key development was the use of deep neural networks, which can recognize new images not in the training data as well as or better than humans. Graphics processing units (GPUs) enabled this breakthrough due to their ability to accelerate deep learning algorithms. Computer vision tasks involve both unsupervised learning, such as clustering visually similar images, and supervised learning, where algorithms are trained on labeled image data to learn visual classifications and recognize objects.

Elhabian lda09

Mr. Neelamegam D

This document provides an overview of Linear Discriminant Analysis (LDA) for dimensionality reduction. LDA seeks to perform dimensionality reduction while preserving as much class-discriminatory information as possible, unlike PCA, which does not consider class labels. LDA finds a linear combination of features that separates classes best by maximizing the between-class variance while minimizing the within-class variance. This is achieved by solving the generalized eigenvalue problem involving the within-class and between-class scatter matrices. The document provides mathematical details and an example to illustrate LDA for a two-class problem.

Paper study: Learning to solve circuit sat

ChenYiHuang5

My own slides for a meeting presenting "Learning to Solve Circuit-SAT: an Unsupervised Differentiable Approach".

Aaa ped-17-Unsupervised Learning: Dimensionality reduction

AminaRepo

This document provides an overview of dimensionality reduction techniques including PCA and manifold learning. It discusses the objectives of dimensionality reduction, such as eliminating noise and unnecessary features to enhance learning. PCA and manifold learning are described as the two main approaches, with PCA using projections to maximize variance and manifold learning assuming the data lies on a lower-dimensional manifold. Specific techniques covered include LLE, Isomap, MDS, and implementations in scikit-learn.

Part2

khawarbashir

The document summarizes object and face detection techniques including:

- Lowe's SIFT descriptor for specific object recognition using histograms of edge orientations.

- Viola and Jones' face detector, which uses boosted classifiers with Haar-like features and an attentional cascade for fast rejection of non-faces.

- Earlier face detection work including eigenfaces, neural networks, and distribution-based methods.

Deep learning paper review ppt sourece -Direct clr

taeseon ryu

Self-supervised learning is one approach to deep learning image classification tasks. It trains without labels using methods such as context prediction or jigsaw puzzles, but these self-supervision tasks cause a chronic problem called Dimensional Collapse, in which learning happens only in a particular subset of dimensions instead of being distributed across all of them. Among self-supervision methods, Contrastive Learning trains positive pairs to be close together and negative pairs to be far apart. Intuitively this should make it robust to Dimensional Collapse, but that turned out not to be the case. We introduce Direct CLR, a method proposed to solve this problem.

From the background of the paper to a detailed explanation of the Direct CLR paper,

Jaeyoon Lee of the Fundamental team kindly provided a detailed review.

Thank you in advance for your interest today as well!

مدخل إلى تعلم الآلة (Introduction to Machine Learning)

Fares Al-Qunaieer

This document provides an overview of regression analysis and linear regression. It explains that regression analysis estimates relationships among variables to predict continuous outcomes. Linear regression finds the best-fitting line by minimizing error. It describes modeling with multiple features, representing data in vector and matrix form, and using gradient descent optimization to learn the weights through iterative updates. The goal is to minimize a cost function measuring error between predictions and true values.

TPDM Presentation Slide (ICCV23)

Suhyeon Lee

Presentation slides from the paper "Improving 3D Imaging with Pre-Trained Perpendicular 2D Diffusion Models" (ICCV23).

Declarative data analysis

South West Data Meetup

May 2015 talk to SW Data Meetup by Professor Hendrik Blockeel from KU Leuven & Leiden University.

With increasing amounts of ever more complex forms of digital data becoming available, the methods for analyzing these data have also become more diverse and sophisticated. With this comes an increased risk of incorrect use of these methods, and a greater burden on the user to be knowledgeable about their assumptions. In addition, the user needs to know about a wide variety of methods to be able to apply the most suitable one to a particular problem. This combination of broad and deep knowledge is not sustainable.

The idea behind declarative data analysis is that the burden of choosing the right statistical methodology for answering a research question should no longer lie with the user, but with the system. The user should be able to simply describe the problem, formulate a question, and let the system take it from there. To achieve this, we need to find answers to questions such as: what languages are suitable for formulating these questions, and what execution mechanisms can we develop for them? In this talk, I will discuss recent and ongoing research in this direction. The talk will touch upon query languages for data mining and for statistical inference, declarative modeling for data mining, meta-learning, and constraint-based data mining. What connects these research threads is that they all strive to put intelligence about data analysis into the system, instead of assuming it resides in the user.

Hendrik Blockeel is a professor of computer science at KU Leuven, Belgium, and part-time associate professor at Leiden University, The Netherlands. His research interests lie mostly in machine learning and data mining. He has made a variety of research contributions in these fields, including work on decision tree learning, inductive logic programming, predictive clustering, probabilistic-logical models, inductive databases, constraint-based data mining, and declarative data analysis. He is an action editor for Machine Learning and serves on the editorial board of several other journals. He has chaired or organized multiple conferences, workshops, and summer schools, including ILP, ECMLPKDD, IDA and ACAI, and he has been vice-chair, area chair, or senior PC member for ECAI, IJCAI, ICML, KDD, ICDM. He was a member of the board of the European Coordinating Committee for Artificial Intelligence from 2004 to 2010, and currently serves as publications chair for the ECMLPKDD steering committee.

Understanding Basics of Machine Learning

Pranav Ainavolu

This document provides an overview of machine learning concepts including:

1. It defines data science and machine learning, distinguishing machine learning's focus on letting systems learn from data rather than being explicitly programmed.

2. It describes the two main areas of machine learning - supervised learning which uses labeled examples to predict outcomes, and unsupervised learning which finds patterns in unlabeled data.

3. It outlines the typical machine learning process of obtaining data, cleaning and transforming it, applying mathematical models, and using the resulting models to make predictions. Popular models like decision trees, neural networks, and support vector machines are also briefly introduced.

Machine Learning on Azure - AzureConf

Seth Juarez

Machine Learning can often be a daunting subject to tackle, much less utilize in a meaningful manner. In this session, attendees will learn how to take their existing data, shape it, and create models that can automatically make principled business decisions directly in their applications. The discussion will include explanations of the data acquisition and shaping process. Additionally, attendees will learn the basics of machine learning, primarily the supervised learning problem.

Industry_Use_Cases.ppt Industry_Use_Cases.ppt

ssuser3e8ddb


╠²

The digital landscape is constantly evolving. Understanding what works in SEO and content marketing today will be critical for success in 2025 and beyond. Let's delve into the key trends shaping the future of online content.Kaggle & Datathons: A Practical Guide to AI Competitions

Kaggle & Datathons: A Practical Guide to AI Competitionsrasheedsrq

╠²

Kaggle & Datathons: A Practical Guide to AI Competitions9th Edition of International Research Awards

9th Edition of International Research Awardssciencereviewerview

╠²

9th Edition of International Research Awards |28-29 March 2025 | San Francisco, United States

The International Research Awards recognize exceptional research contributions, innovation, and excellence across various fields. This prestigious award honors outstanding researchers, scientists, and scholars who have made significant impacts in their respective disciplines, fostering a culture of innovation and discovery.Leveraging-Virtual-Reality-(VR)-and-Augmented-Reality-(AR)-for-Enhanced-Touri...

Leveraging-Virtual-Reality-(VR)-and-Augmented-Reality-(AR)-for-Enhanced-Touri...Krishna Khanal

╠²

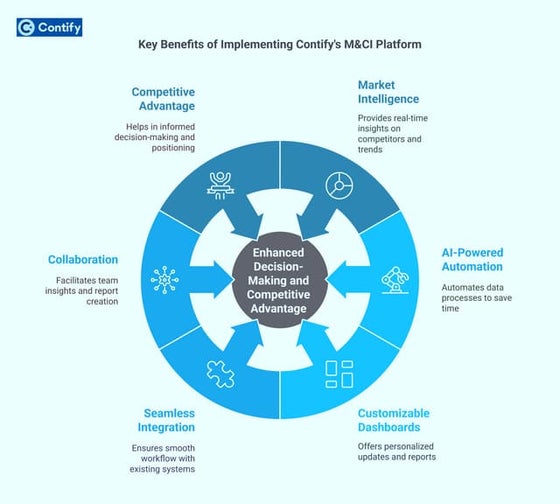

This chapter proposes to explore the intersection of virtual reality (VR), augmented reality (AR), data analytics, and marketing in the context of the tourism and events industry. As technology continues to advance, organizations within this sector are seeking innovative and transformative ways to engage and attract tourists and event attendees. VR and AR have emerged as powerful tools for creating immersive experiences, while data analytics provides insights into consumer behavior and preferences. This chapter will examine how these technologies can be integrated into marketing strategies to enhance engagement, visitor experiences, and decision- making processes. By discussing real-world case studies, ethical considerations, and future trends, this chapter aims to provide a comprehensive overview of the subject and offer practical insights for professionals and researchers in the field.Key Benefits of Implementing Contify's M&CI Platform

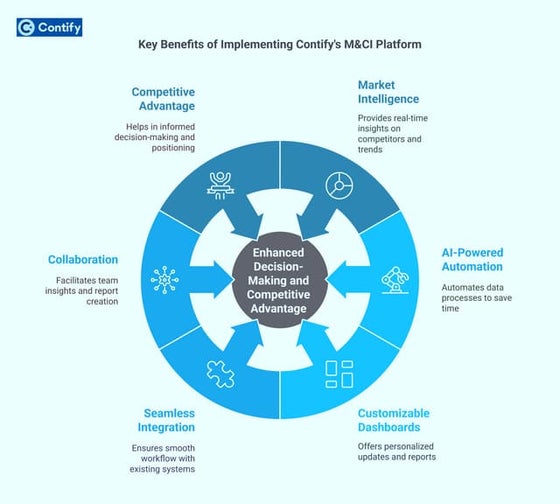

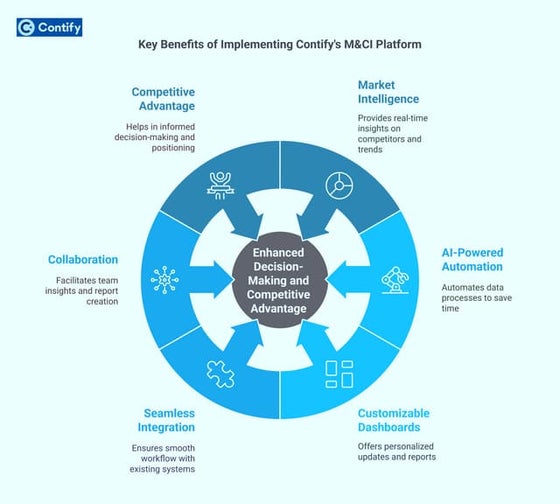

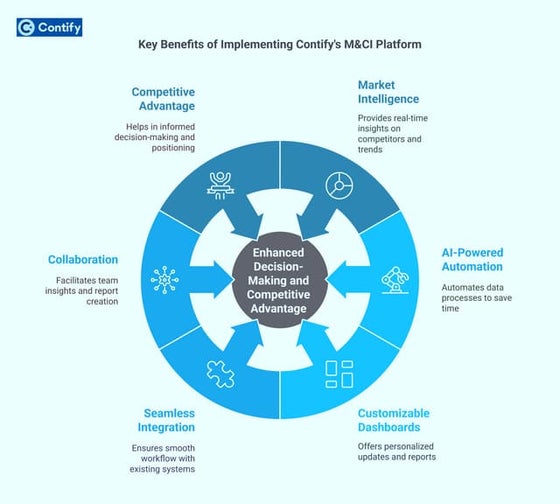

Key Benefits of Implementing Contify's M&CI PlatformContify

╠²

Contify's Market & Competitive Intelligence platform empowers businesses with real-time insights, automated intelligence gathering, and AI-driven analytics. It enhances decision-making, streamlines competitor tracking, and delivers personalized intelligence reports. With Contify, organizations gain a strategic edge by identifying trends, mitigating risks, and seizing growth opportunities in dynamic market landscapes.

For more information please visit here https://www.contify.com/platform/Herding behavior Experimental Studies --AlisonLo

Herding behavior Experimental Studies --AlisonLoAlisonKL

╠²

My experimental studies on information cascades and herding behavior, comparing Bayesian belief updating with other behavioral heuristics that account for the observed behaviorsPlant Disease Prediction with Image Classification using CNN.pdf

Plant Disease Prediction with Image Classification using CNN.pdfTheekshana Wanniarachchi

╠²

Plant diseases pose a significant threat to agricultural productivity. Early detection and classification of plant diseases can help mitigate losses. This project focuses on building a Plant Disease Prediction system using Convolutional Neural Networks (CNNs). The system leverages NumPy, TensorFlow, and Streamlit to develop a model and deploy a web-based application. The final model is also containerized using Docker for efficient deployment.Analyzing Consumer Spending Trends and Purchasing Behavior

Analyzing Consumer Spending Trends and Purchasing Behavioromololaokeowo1

╠²

Dimensionality Reduction : Above PCA

- 2. Singular Value Decomposition
  - Singular Value Decomposition (SVD) is sometimes loosely called spectral decomposition, a term that more strictly refers to eigendecomposition.
  - Instead of using two coordinates $(x, y)$ to describe point locations, let's use only one coordinate $z$.
  - A point's position is its location along the vector $v_1$, the first right singular vector.
  - How to choose $v_1$? Minimize the reconstruction error.

  Source: J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

- 3. Singular Value Decomposition
  - Goal: minimize the sum of reconstruction errors:

    $$\sum_{i=1}^{N} \sum_{j=1}^{D} \lVert x_{ij} - z_{ij} \rVert^2$$

    where $x_{ij}$ are the "old" and $z_{ij}$ are the "new" coordinates.
  - SVD gives the "best" axis to project on, where "best" means the axis that minimizes the sum of reconstruction errors.
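
The reconstruction error above can be made concrete with a small sketch. It assumes 2-D points, a candidate direction `direction`, and a reconstruction of each point as its projection onto that direction; the function name and the sample points are illustrative and not taken from the slides.

```python
import math

def reconstruction_error(points, direction):
    """Sum of squared distances between each point and its projection onto `direction`."""
    norm = math.sqrt(sum(c * c for c in direction))
    v = [c / norm for c in direction]  # unit vector along the candidate axis
    total = 0.0
    for x in points:
        z = sum(xi * vi for xi, vi in zip(x, v))  # the single "new" coordinate
        recon = [z * vi for vi in v]              # point rebuilt from z alone
        total += sum((xi - ri) ** 2 for xi, ri in zip(x, recon))
    return total

# Hypothetical points lying close to the diagonal y = x.
points = [(1.0, 1.1), (2.0, 1.9), (3.0, 3.2), (4.0, 3.9)]
err_diag = reconstruction_error(points, (1.0, 1.0))  # axis along the data
err_axis = reconstruction_error(points, (1.0, 0.0))  # axis along x only
print(err_diag, err_axis)
```

For these points the diagonal axis loses far less information than the x axis, which is the sense in which one axis is "better" than another.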

- 4. Singular Value Decomposition
  - $A = U \Sigma V^T$, example:
    - $U$: "user-to-concept" matrix
    - $V$: "movie-to-concept" matrix

    $$
    \begin{bmatrix}
    1 & 1 & 1 & 0 & 0 \\
    3 & 3 & 3 & 0 & 0 \\
    4 & 4 & 4 & 0 & 0 \\
    5 & 5 & 5 & 0 & 0 \\
    0 & 2 & 0 & 4 & 4 \\
    0 & 0 & 0 & 5 & 5 \\
    0 & 1 & 0 & 2 & 2
    \end{bmatrix}
    =
    \begin{bmatrix}
    0.13 & 0.02 & -0.01 \\
    0.41 & 0.07 & -0.03 \\
    0.55 & 0.09 & -0.04 \\
    0.68 & 0.11 & -0.05 \\
    0.15 & -0.59 & 0.65 \\
    0.07 & -0.73 & -0.67 \\
    0.07 & -0.29 & 0.32
    \end{bmatrix}
    \times
    \begin{bmatrix}
    12.4 & 0 & 0 \\
    0 & 9.5 & 0 \\
    0 & 0 & 1.3
    \end{bmatrix}
    \times
    \begin{bmatrix}
    0.56 & 0.59 & 0.56 & 0.09 & 0.09 \\
    0.12 & -0.02 & 0.12 & -0.69 & -0.69 \\
    0.40 & -0.80 & 0.40 & 0.09 & 0.09
    \end{bmatrix}
    $$

  - [Figure: plot with axes "Movie 1 rating" and "Movie 2 rating"; annotation: variance ("spread") on the $v_1$ axis]

- 5. Singular Value Decomposition
  - [Figure: $A = U \Sigma V^T$ next to $B = U \Sigma V^T$, where $B$ uses $\Sigma$ with the smallest singular values set to zero] $B$ is the best approximation of $A$.
  - How many singular values should we retain?
    - A useful rule of thumb is to retain enough singular values to make up 90% of the energy in $\Sigma$.
    - That is, the sum of the squares of the retained singular values should be at least 90% of the sum of the squares of all the singular values.
    - Example: the total energy is $(12.4)^2 + (9.5)^2 + (1.3)^2 = 245.70$, while the retained energy is $(12.4)^2 + (9.5)^2 = 244.01$.
    - We have retained over 99% of the energy. However, were we to eliminate the second singular value, 9.5, the retained energy would be only $(12.4)^2 / 245.70$, or about 63%.
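
The 90% energy rule can be checked with a few lines of Python. This is a minimal sketch: the helper names `retained_energy` and `values_to_retain` are illustrative, and the code assumes the singular values are already sorted in decreasing order.

```python
def retained_energy(singular_values, k):
    """Fraction of total energy (sum of squares) kept by the first k singular values."""
    total = sum(s * s for s in singular_values)
    return sum(s * s for s in singular_values[:k]) / total

def values_to_retain(singular_values, threshold=0.9):
    """Smallest k whose retained energy reaches the threshold."""
    for k in range(1, len(singular_values) + 1):
        if retained_energy(singular_values, k) >= threshold:
            return k
    return len(singular_values)

sigma = [12.4, 9.5, 1.3]  # singular values from the example above
k = values_to_retain(sigma)
print(k, retained_energy(sigma, 2), retained_energy(sigma, 1))
```

With the example values, two singular values are enough to pass the threshold, while keeping only the first one falls well short of it.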

- 6. Relation to Eigen-decomposition
  - SVD gives us $A = U \Sigma V^T$.
  - Eigen-decomposition: $A = X \Lambda X^T$, where
    - $A$ is symmetric,
    - $U$, $V$, $X$ are orthonormal ($U^T U = I$),
    - $\Lambda$, $\Sigma$ are diagonal.
  - Now let's calculate:

    $$A A^T = U \Sigma V^T (U \Sigma V^T)^T = U \Sigma V^T (V \Sigma^T U^T) = U \Sigma \Sigma^T U^T$$

    $$A^T A = V \Sigma^T U^T (U \Sigma V^T) = V \Sigma \Sigma^T V^T$$

    Both results have the form $X \Lambda^2 X^T$.
  - This shows how to compute the SVD using eigenvalue decomposition.
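
As an illustration of this relation, the sketch below recovers the largest singular value and the first right singular vector of the ratings matrix from the earlier example by finding the top eigenpair of $A^T A$. Power iteration is used here only because it needs no external library; it is an assumption of this sketch, not the method prescribed by the slides, and the function names are illustrative.

```python
import math

# Ratings matrix from the SVD example (7 users x 5 movies).
A = [
    [1, 1, 1, 0, 0],
    [3, 3, 3, 0, 0],
    [4, 4, 4, 0, 0],
    [5, 5, 5, 0, 0],
    [0, 2, 0, 4, 4],
    [0, 0, 0, 5, 5],
    [0, 1, 0, 2, 2],
]

def gram(a):
    """Return A^T A as a list of lists."""
    cols = list(zip(*a))
    return [[sum(x * y for x, y in zip(ci, cj)) for cj in cols] for ci in cols]

def top_eigenpair(m, n_iter=200):
    """Power iteration: largest eigenvalue and unit eigenvector of a symmetric matrix."""
    n = len(m)
    v = [1.0] * n  # arbitrary non-zero start vector
    for _ in range(n_iter):
        w = [sum(m[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient v^T M v gives the eigenvalue for the unit vector v.
    eigval = sum(v[i] * sum(m[i][j] * v[j] for j in range(n)) for i in range(n))
    return eigval, v

eigval, v1 = top_eigenpair(gram(A))
sigma1 = math.sqrt(eigval)  # singular value = square root of eigenvalue of A^T A
print(round(sigma1, 2), [round(x, 2) for x in v1])
```

The square root of the top eigenvalue lands close to the 12.4 shown in $\Sigma$, and the eigenvector is close to the first row of $V^T$, up to the rounding used on the slide.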

- 8. Brainstorming
  - What is the dimensionality of data?
  - What are the degrees of freedom of data?
  - Does data always exist in a high-dimensional space?
  - What is the rank of a matrix?
  - What motivates non-linear dimensionality reduction?
  - Can deep learning's popular MNIST dataset problem be solved by a simple machine learning model?

- 9. Why do we need dimensionality reduction?
  - You need to visualize the data for non-technical board members who are probably not familiar with terms like cosine similarity.
  - You need to work under a constraint, such as preserving 80% of the information in the data.
  - You need to reduce the data you have and any new data as it comes in. Which method would you choose?

- 10. Non-Linear Dimensionality Reduction
  - We are given a low-dimensional surface embedded non-linearly in a high-dimensional space. Such a surface is called a manifold.
  - A good way to represent data points is by their low-dimensional coordinates.
  - The low-dimensional representation of the data should capture information about high-dimensional pairwise distances.
  - Non-linear dimensionality reduction is also called manifold learning.
  - Idea: recover the low-dimensional surface.

- 12. NLDR over PCA
  - [Figure: two panels, "NLDR" and "PCA". Source: https://en.wikipedia.org/wiki/Nonlinear_dimensionality_reduction]

- 13. Isomap
  - Isomap uses the same core ideas as the MDS algorithm:
    - Obtain a matrix of proximities (distances between points in a dataset).
    - Convert this distance matrix into a matrix of inner products (in classical MDS, by double centering the squared distances).
    - An eigendecomposition of this matrix gives us the lower-dimensional embedding.
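
The three steps can be sketched in plain Python for a 1-D embedding. Several details are assumptions of this sketch rather than content of the slides: proximities are geodesic distances (shortest paths in a k-nearest-neighbour graph, as in the usual Isomap formulation), the neighbourhood graph is assumed to be connected, only the top eigenvector is extracted (by power iteration), and the name `isomap_1d` and the semicircle test data are illustrative.

```python
import math

def isomap_1d(points, n_neighbors=2, n_iter=200):
    """1-D Isomap-style embedding: kNN graph -> geodesics -> double centering -> top eigenvector."""
    n = len(points)
    inf = float("inf")
    dist = [[math.dist(p, q) for q in points] for p in points]

    # Step 1: proximities. Keep only edges to the nearest neighbours (symmetrised).
    geo = [[0.0 if i == j else inf for j in range(n)] for i in range(n)]
    for i in range(n):
        order = sorted(range(n), key=lambda j: dist[i][j])
        for j in order[1:n_neighbors + 1]:  # order[0] is the point itself
            geo[i][j] = geo[j][i] = dist[i][j]
    # Floyd-Warshall shortest paths approximate distances along the manifold.
    for k in range(n):
        for i in range(n):
            for j in range(n):
                via_k = geo[i][k] + geo[k][j]
                if via_k < geo[i][j]:
                    geo[i][j] = via_k

    # Step 2: double centering turns squared distances into inner products.
    sq = [[geo[i][j] ** 2 for j in range(n)] for i in range(n)]
    row_mean = [sum(r) / n for r in sq]
    total_mean = sum(row_mean) / n
    gram = [[-0.5 * (sq[i][j] - row_mean[i] - row_mean[j] + total_mean)
             for j in range(n)] for i in range(n)]

    # Step 3: top eigenpair of the inner-product matrix via power iteration.
    v = [float(i + 1) for i in range(n)]  # arbitrary start vector
    for _ in range(n_iter):
        w = [sum(gram[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    eigval = sum(v[i] * sum(gram[i][j] * v[j] for j in range(n)) for i in range(n))
    return [math.sqrt(eigval) * x for x in v]

# Twelve points on a unit semicircle: a 1-D manifold embedded in 2-D.
points = [(math.cos(math.pi * i / 11), math.sin(math.pi * i / 11)) for i in range(12)]
coords = isomap_1d(points)
print([round(c, 2) for c in coords])
```

The embedding orders the points along the arc, and the two endpoints end up farther apart than their straight-line distance of 2, because distances are measured along the curve rather than across it.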

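- 14. Stochastic Neighbor Embedding (SNE)
  - High-dimensional space:

    $$P_{j|i} = \frac{\exp\left(-\lVert x_i - x_j \rVert^2 / 2\sigma_i^2\right)}{\sum_{k \neq i} \exp\left(-\lVert x_i - x_k \rVert^2 / 2\sigma_i^2\right)}$$

  - Low-dimensional space (2-D):

    $$Q_{j|i} = \frac{\exp\left(-\lVert y_i - y_j \rVert^2\right)}{\sum_{k \neq i} \exp\left(-\lVert y_i - y_k \rVert^2\right)}$$

    This form of $Q_{j|i}$ corresponds to a fixed bandwidth $\sigma = 1/\sqrt{2}$ in the low-dimensional space.
  - Minimization function:

    $$\min_{y_i, y_j} KL(P \| Q), \qquad KL(P \| Q) = \sum_i \sum_j P_{j|i} \log \frac{P_{j|i}}{Q_{j|i}}$$

  - Behaviour of the cost:
    1. A large $P_{j|i}$ modeled by a low $Q_{j|i}$ → high cost.
    2. A small $P_{j|i}$ modeled by a high $Q_{j|i}$ → low cost.
  - SNE versus t-SNE:
    1. SNE uses asymmetric conditional probabilities, whereas t-SNE uses symmetric probabilities.
    2. The symmetric form gives a simpler gradient, which makes t-SNE faster.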
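
A small sketch of the SNE cost follows. It assumes a single shared bandwidth `sigma` for every point (SNE itself tunes $\sigma_i$ per point), uses $2\sigma^2 = 1$ for the low-dimensional map, and assumes no probability underflows to zero; the function names and the four sample points are illustrative.

```python
import math

def conditional_probs(points, two_sigma_sq):
    """Row i holds p(j|i), proportional to exp(-||a_i - a_j||^2 / two_sigma_sq)."""
    n = len(points)
    probs = []
    for i in range(n):
        weights = [0.0 if i == j else
                   math.exp(-math.dist(points[i], points[j]) ** 2 / two_sigma_sq)
                   for j in range(n)]
        total = sum(weights)
        probs.append([w / total for w in weights])
    return probs

def kl_cost(p, q):
    """Sum over i of KL(P_i || Q_i); terms with p == 0 contribute nothing."""
    n = len(p)
    return sum(p[i][j] * math.log(p[i][j] / q[i][j])
               for i in range(n) for j in range(n)
               if i != j and p[i][j] > 0.0)

sigma = 1.0  # assumed shared bandwidth in the high-dimensional space
X = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (5.0, 5.0, 5.0), (5.1, 5.0, 5.0)]
P = conditional_probs(X, 2.0 * sigma ** 2)

good_map = [(0.0, 0.0), (0.1, 0.0), (3.0, 3.0), (3.1, 3.0)]  # neighbours stay together
bad_map = [(0.0, 0.0), (3.0, 3.0), (0.1, 0.0), (3.1, 3.0)]   # neighbours pulled apart
kl_good = kl_cost(P, conditional_probs(good_map, 1.0))
kl_bad = kl_cost(P, conditional_probs(bad_map, 1.0))
print(kl_good, kl_bad)
```

The map that separates true neighbours pays a large cost, because large $P_{j|i}$ values are matched with very small $Q_{j|i}$ values, while the map that keeps them together costs almost nothing.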

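- 15. t-Distributed Stochastic Neighbor Embedding (t-SNE)
  - High-dimensional space:

    $$P_{ji} = \frac{\exp\left(-\lVert x_i - x_j \rVert^2 / 2\sigma^2\right)}{\sum_{k \neq i} \exp\left(-\lVert x_i - x_k \rVert^2 / 2\sigma^2\right)}$$

  - Low-dimensional space (2-D):

    $$Q_{ji} = \frac{\left(1 + \lVert y_i - y_j \rVert^2\right)^{-1}}{\sum_{k \neq i} \left(1 + \lVert y_i - y_k \rVert^2\right)^{-1}}$$

    In the standard t-SNE formulation, the normalization of $Q$ runs over all pairs $k \neq l$, and $P$ is symmetrized as $(P_{j|i} + P_{i|j}) / 2N$.
  - Notes:
    1. The t-distribution has longer tails, which leaves more room to embed points from the high-dimensional space in the low-dimensional space.
    2. There are some heuristics underlying t-SNE.
    3. It develops an intuition for what is going on in the high-dimensional data.
    4. It finds structure where other dimensionality-reduction algorithms cannot.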
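
The low-dimensional similarities of t-SNE can be sketched as follows. This sketch normalizes over all pairs of points, as in the standard t-SNE formulation, which differs from a per-point sum; the function name and the three-point embedding are illustrative.

```python
import math

def student_t_affinities(embedding):
    """q[i][j] proportional to (1 + ||y_i - y_j||^2)^-1, normalised over all pairs i != j."""
    n = len(embedding)
    weights = [[0.0 if i == j else
                1.0 / (1.0 + math.dist(embedding[i], embedding[j]) ** 2)
                for j in range(n)] for i in range(n)]
    total = sum(sum(row) for row in weights)
    return [[w / total for w in row] for row in weights]

embedding = [(0.0, 0.0), (1.0, 0.0), (0.0, 3.0)]  # hypothetical 2-D map points
q = student_t_affinities(embedding)

# Compare the two kernels at distance 3: the Student-t kernel decays far more slowly.
gaussian_at_3 = math.exp(-(3.0 ** 2))
student_at_3 = 1.0 / (1.0 + 3.0 ** 2)
print(q[0][1], gaussian_at_3, student_at_3)
```

The comparison at distance 3 shows the longer tail: the Student-t kernel still assigns noticeable similarity where the Gaussian kernel is close to zero, so moderately distant points are not forced to collapse together in the map.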