Dsirnlp#7

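- 1. Unsupervised Morphological Analysis with a Hidden Semi-Markov Model. Kei Uchiumi (kuchiumi@d-itlab.co.jp) and Hiroshi Tsukahara (htsukahara@d-itlab.co.jp), Denso IT Laboratory; Daichi Mochihashi (daichi@ism.ac.jp), The Institute of Statistical Mathematics. 2015/04/30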

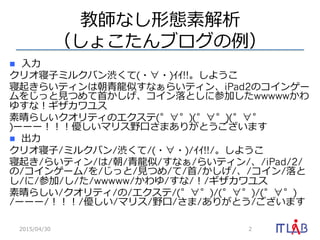

- 2. Unsupervised morphological analysis (example from the Shokotan blog)
  - Input: クリオ寝子ミルクバン渋くて(???)??!!。しようこ 寝起きらいティンは朝青龍似すなぁらいティン、iPad2のコインゲームをじっと見つめて首かしげ、コイン落としに参加したwwwwwかわゆすな!ギザカワユス 素晴らしいクオリティのエクステ(゜?゜)(゜?゜)(゜?゜)ーーー!!!優しいマリス野口さまありがとうございます
  - Output: クリオ寝子/ミルクバン/渋くて/(???)/??!!/。しようこ 寝起き/らいティン/は/朝/青龍似/すなぁ/らいティン/、/iPad/2/の/コインゲーム/を/じっと/見つめ/て/首/かしげ/、/コイン/落とし/に/参加/し/た/wwwww/かわゆ/すな/!/ギザカワユス 素晴らしい/クオリティ/の/エクステ/(゜?゜)/(゜?゜)/(゜?゜)/ーーー/!!!/優しい/マリス/野口/さま/ありがとう/ございます

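- 3. What is morphological analysis?
  - A fundamental technology in natural language processing.
  - It splits text into morphemes.
    - Morpheme: the smallest meaningful unit.
  - Morphological analysis result for 「吾輩は猫である。」 (MeCab):

  | Surface | Reading | Part of speech |
  | --- | --- | --- |
  | 我輩 | ワガハイ | 代名詞 (pronoun) |
  | は | ワ | 助詞-系助詞 (particle) |
  | 猫 | ネコ | 名詞-普通名詞-一般 (noun, common, general) |
  | で | デ | 助動詞-ダ (auxiliary verb, da) |
  | ある | アル | 動詞-非自立可能 (verb, possibly non-independent) |
  | 。 | 。 | 補助記号-句点 (supplementary symbol, period) |
  | EOS | | |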

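- 4. Supervised morphological analysis
  - Input sentence: 我輩は猫である
  - Look up the morpheme dictionary and build a lattice from the morphemes that are found: 文頭 (BOS), 我, 輩, 我輩, は, 猫, で, ある, である, 文末 (EOS).
  - Lattice edge probabilities shown in the figure: 0.3, 0.3, 0.3, 0.1, 0.1, 0.2.
  - Find the path with the highest probability.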
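The lattice search on this slide can be sketched in a few lines. This is a minimal illustration, not the analyzer's actual implementation: `TOY_DICT`, `build_lattice`, and `best_path` are hypothetical names, the weights are made up, and each word carries a single probability instead of the separate transition and emission probabilities introduced on the next slide.

```python
import math

# Hypothetical dictionary: word -> probability (illustrative values only).
TOY_DICT = {"我": 0.1, "輩": 0.1, "我輩": 0.3, "は": 0.3,
            "猫": 0.3, "で": 0.1, "ある": 0.2, "である": 0.01}

def build_lattice(sentence, dictionary):
    """edges[end] lists (start, word) for every dictionary word ending at `end`."""
    edges = {end: [] for end in range(1, len(sentence) + 1)}
    for start in range(len(sentence)):
        for end in range(start + 1, len(sentence) + 1):
            word = sentence[start:end]
            if word in dictionary:
                edges[end].append((start, word))
    return edges

def best_path(sentence, dictionary):
    """Viterbi search for the highest-probability path through the lattice."""
    edges = build_lattice(sentence, dictionary)
    n = len(sentence)
    best = [(-math.inf, None)] * (n + 1)  # (log probability, back-pointer)
    best[0] = (0.0, None)
    for end in range(1, n + 1):
        for start, word in edges[end]:
            score = best[start][0] + math.log(dictionary[word])
            if score > best[end][0]:
                best[end] = (score, (start, word))
    if n > 0 and best[n][1] is None:
        return None  # no dictionary path covers the whole sentence
    words, pos = [], n
    while pos > 0:
        start, word = best[pos][1]
        words.append(word)
        pos = start
    return words[::-1]

print(best_path("我輩は猫である", TOY_DICT))
```

Returning `None` when no path reaches the end of the sentence makes the dependence on dictionary coverage visible, which is the limitation the later slides address.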

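- 5. Emission and transition probabilities
  - Transition probability: P(名詞 | 名詞) = 0.2 (名詞 = noun)
  - Emission probability: P(我輩 | 名詞) = 0.1
  - Lattice fragment in the figure: 文頭 (BOS), 我, 輩, 我輩, with edge weights 0.3, 0.3, 0.3, 0.1, 0.2.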

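- 6. Computing the probabilities
  - Gold-standard data (annotated by hand):

  | Word | Part of speech |
  | --- | --- |
  | 我々 | 名詞 (noun) |
  | と | 助詞 (particle) |
  | して | 動詞 (verb) |
  | は | 助詞 (particle) |
  | まだ | 副詞 (adverb) |
  | 希望 | 名詞 (noun) |
  | は | 助詞 (particle) |
  | 捨てて | 動詞 (verb) |
  | い | 接尾辞 (suffix) |
  | ない | 接尾辞 (suffix) |
  | 。 | 特殊 (special) |

  - Transition probability: the number of times a noun is followed by a noun, divided by the number of noun occurrences.

    $$P(\text{noun} \mid \text{noun}) = \frac{c(\text{noun}, \text{noun})}{c(\text{noun})}$$

  - Emission probability: the number of times 我輩 occurs as a noun, divided by the number of noun occurrences.

    $$P(\text{我輩} \mid \text{noun}) = \frac{c(\text{我輩}, \text{noun})}{c(\text{noun})}$$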
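The two count ratios above can be computed directly from the hand-annotated sample. The following is a small sketch under that reading of the slide; the function name `estimate` and the decision to return 0.0 for an unseen tag are assumptions made for illustration.

```python
from collections import Counter

# The hand-annotated sample from the slide: (word, part of speech).
TAGGED = [("我々", "名詞"), ("と", "助詞"), ("して", "動詞"), ("は", "助詞"),
          ("まだ", "副詞"), ("希望", "名詞"), ("は", "助詞"), ("捨てて", "動詞"),
          ("い", "接尾辞"), ("ない", "接尾辞"), ("。", "特殊")]

def estimate(tagged):
    """Return (transition, emission) functions estimated by relative frequency."""
    tag_count = Counter(tag for _, tag in tagged)
    emit_count = Counter(tagged)  # counts of (word, tag) pairs
    trans_count = Counter((prev[1], cur[1]) for prev, cur in zip(tagged, tagged[1:]))

    def transition(cur_tag, prev_tag):
        # P(cur_tag | prev_tag) = c(prev_tag, cur_tag) / c(prev_tag)
        total = tag_count[prev_tag]
        return trans_count[(prev_tag, cur_tag)] / total if total else 0.0

    def emission(word, tag):
        # P(word | tag) = c(word, tag) / c(tag)
        total = tag_count[tag]
        return emit_count[(word, tag)] / total if total else 0.0

    return transition, emission

transition, emission = estimate(TAGGED)
print(transition("動詞", "助詞"))  # particle followed by verb
print(emission("は", "助詞"))
print(transition("名詞", "名詞"))  # never observed in this sample
```

In this sample no noun is followed by a noun, so the last estimate is exactly zero, which previews the zero-frequency problem discussed later in the deck.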

- 7. Creating gold-standard data and maintaining dictionaries
  - Doing this by hand is painful, for text such as: クリオ寝子ミルクバン渋くて(???)??!!。しようこ 寝起きらいティンは朝青龍似すなぁらいティン、iPad2のコインゲームをじっと見つめて首かしげ、コイン落としに参加したwwwwwかわゆすな!ギザカワユス 素晴らしいクオリティのエクステ(゜?゜)(゜?゜)(゜?゜)ーーー!!!優しいマリス野口さまありがとうございます
  - Unsupervised morphological analysis is therefore desirable (no dictionary, no gold annotation).

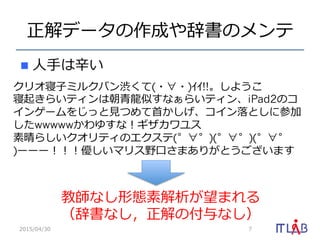

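- 8. Previous work on unsupervised morphological analysis
  - Methods based on the minimum description length (MDL) principle
    - The segmentation procedure is heuristic.
    - Figure (吾 輩 は 猫 で あ る): at a word boundary, the variety of adjacent character strings increases (high entropy). The entropy threshold is set so that the description length becomes small.
  - Bayesian learning methods
    - Word boundaries are estimated as hidden variables.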
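The remark that the variety of following characters grows at word boundaries is usually quantified as branching entropy. Below is a minimal sketch of that quantity only; the function name, the ASCII toy corpus, and the fixed context length are assumptions, and the MDL-based choice of threshold mentioned on the slide is not implemented.

```python
import math
from collections import Counter, defaultdict

def branching_entropy(corpus, order=1):
    """Entropy (in bits) of the next character after each context of `order` characters."""
    followers = defaultdict(Counter)
    for text in corpus:
        for i in range(order, len(text)):
            followers[text[i - order:i]][text[i]] += 1
    entropy = {}
    for context, counts in followers.items():
        total = sum(counts.values())
        entropy[context] = -sum((c / total) * math.log2(c / total)
                                for c in counts.values())
    return entropy

h = branching_entropy(["abab", "abac"])
# "b" is always followed by "a" (entropy 0); "a" is followed by "b" or "c".
print(h["a"], h["b"])
```

A segmenter in this family would place a boundary after contexts whose entropy exceeds a threshold.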

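- 9. Previous work on unsupervised morphological analysis
  - Previous unsupervised methods handle word segmentation only.
  - Part of speech is not taken into account.
  - If part-of-speech information is needed, the segmenter has to be combined with a separate unsupervised part-of-speech induction method.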

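- 10. Unsupervised part-of-speech induction
  - Basically done with a Hidden Markov Model.
    - Many variants of priors and inference methods exist.
  - It assumes that the word segmentation is given.
  - Figure: an HMM with hidden states $y_{i-1}, y_i, y_{i+1}$ and observations $x_{i-1}, x_i, x_{i+1}$, where $y$ is the part of speech and $x$ is the word.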

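- 11. Does part of speech affect word segmentation accuracy?
  - Consider the example sentence 「この先生きのこれるのか?」
  - Case 1: analysis without grammatical knowledge.
  - Case 2: analysis with the knowledge that a noun rarely connects to a verbal suffix.
    - Grammatically, "きのこ/れる" is then recognized as unlikely.
  - Segmentations shown on the slide: この/先生/きのこ/れる/の/か/? and この/先/生き/のこれる/の/か/? (MeCab analysis result)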

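- 13. Formulation of morphological analysis
  - A morphological analysis: $\mathbf{w} = \{w_1, w_2, \ldots, w_M, z_1, z_2, \ldots, z_M\}$
  - $w_n$: word, $z_n$: part of speech, $c_n$: character, $s$: sentence, with $s = c_1, c_2, \ldots, c_N$
  - The task is to estimate the $\mathbf{w}$ that maximizes the probability $p(\mathbf{w} \mid s)$:

    $$\hat{\mathbf{w}} = \operatorname*{argmax}_{\mathbf{w}} \; p(\mathbf{w} \mid s)$$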

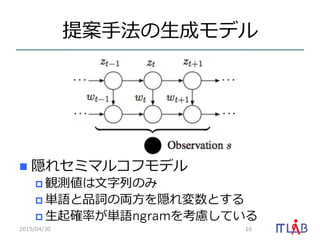

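- 14. Splitting into subproblems
  - Define the probability of a morphological analysis $\mathbf{w}$ as

    $$P(\mathbf{w} \mid s) = \prod_{i=1}^{M} P(w_i, z_i \mid h_{i-1}), \qquad h_i = \{w_1, w_2, \ldots, w_i, z_1, z_2, \ldots, z_i\}$$

  - Rewrite each factor as

    $$P(w_i, z_i \mid h_{i-1}) = P(w_i \mid z_i, h_{i-1})\, P(z_i \mid h_{i-1})$$

    $$P(w_i \mid z_i, h_{i-1}) = P(w_i \mid w_{i-N+1}^{i-1}, z_i) \quad \text{(word n-gram per part of speech)}$$

    $$P(z_i \mid h_{i-1}) = P(z_i \mid z_{i-N+1}^{i-1}) \quad \text{(part-of-speech n-gram)}$$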

- 15. n-gram model
  - Lattice in the figure: 文頭 (BOS), 我, 輩, 我輩, は, 猫, で, ある, である, 文末 (EOS).
  - Word bigram: P(我輩 | 文頭, 名詞) = 0.1
  - Part-of-speech bigram: P(名詞 | 名詞) = 0.2
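With bigrams (N = 2), the factorization from slide 14 turns the probability of a tagged sequence into a product of per-tag word bigram and tag bigram probabilities. The sketch below assumes both models are plain lookup tables; the table contents, the `<BOS>` marker, and the function name are illustrative assumptions.

```python
import math

BOS = "<BOS>"

# Hypothetical probability tables (values are made up for illustration).
WORD_BIGRAM = {  # (previous word, tag of current word, current word) -> probability
    (BOS, "名詞", "我輩"): 0.1,
    ("我輩", "助詞", "は"): 0.5,
}
TAG_BIGRAM = {  # (previous tag, current tag) -> probability
    (BOS, "名詞"): 0.4,
    ("名詞", "助詞"): 0.5,
}

def sequence_log_prob(words, tags, word_bigram, tag_bigram):
    """log P(w, z) = sum_i [ log P(w_i | w_{i-1}, z_i) + log P(z_i | z_{i-1}) ]."""
    prev_word, prev_tag = BOS, BOS
    log_prob = 0.0
    for word, tag in zip(words, tags):
        p_word = word_bigram.get((prev_word, tag, word), 0.0)
        p_tag = tag_bigram.get((prev_tag, tag), 0.0)
        if p_word == 0.0 or p_tag == 0.0:
            return -math.inf  # an unseen n-gram zeroes out the whole sequence
        log_prob += math.log(p_word) + math.log(p_tag)
        prev_word, prev_tag = word, tag
    return log_prob

lp = sequence_log_prob(["我輩", "は"], ["名詞", "助詞"], WORD_BIGRAM, TAG_BIGRAM)
print(math.exp(lp))
```

Any n-gram missing from the tables drives the result to minus infinity, which is why smoothing is needed.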

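- 17. The zero-frequency problem
  - The probability of an n-gram that has never been seen becomes 0.

    $$P(\text{猫} \mid \text{は}, \text{名詞}) = \frac{c(\text{猫} \mid \text{は}, \text{名詞})}{c(\text{は}, \text{名詞})} = 0 \quad\Rightarrow\quad P(\text{我輩}, \text{は}, \text{猫}, \text{で}, \text{ある}) = 0$$

  - Unobserved n-grams must also be given an appropriate probability.
  - In unsupervised learning the words are unknown at the start, so almost every n-gram has zero frequency.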

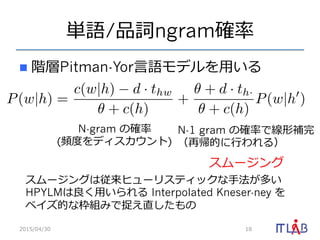

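- 18. Word and part-of-speech n-gram probabilities
  - A hierarchical Pitman-Yor language model (HPYLM) is used.

    $$P(w \mid h) = \frac{c(w \mid h) - d \cdot t_{hw}}{\theta + c(h)} + \frac{\theta + d \cdot t_{h\cdot}}{\theta + c(h)}\, P(w \mid h')$$

  - First term: the n-gram probability, with discounted counts.
  - Second term: linear interpolation with the (n-1)-gram probability $P(w \mid h')$, applied recursively.
  - Smoothing: traditional smoothing methods are mostly heuristic. The HPYLM is widely used; it recasts interpolated Kneser-Ney in a Bayesian framework.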
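The predictive formula can be evaluated recursively once the counts $c$ and table counts $t$ are known. The sketch below treats them as fixed dictionaries, which is a simplification: in an actual HPYLM the table counts are latent and sampled, and lower-order counts are derived from them. The function name, the uniform base distribution over `vocab_size` words, and all numbers are assumptions.

```python
def pitman_yor_prob(word, context, counts, tables, d, theta, vocab_size):
    """P(word | context) with recursive back-off; `context` is a tuple of preceding words."""
    if context is None:
        return 1.0 / vocab_size  # base distribution below the unigram level
    shorter = context[1:] if context else None  # drop the oldest word
    backoff = pitman_yor_prob(word, shorter, counts, tables, d, theta, vocab_size)
    c_h = sum(counts.get(context, {}).values())
    if c_h == 0:
        return backoff  # nothing observed in this context
    c_hw = counts[context].get(word, 0)
    t_hw = tables.get(context, {}).get(word, 0)
    t_h = sum(tables.get(context, {}).values())
    return ((c_hw - d * t_hw) / (theta + c_h)
            + (theta + d * t_h) / (theta + c_h) * backoff)

# Toy statistics: () is the unigram context, ("は",) a bigram context.
COUNTS = {(): {"は": 2, "犬": 1, "猫": 1}, ("は",): {"犬": 2}}
TABLES = {(): {"は": 1, "犬": 1, "猫": 1}, ("は",): {"犬": 1}}
VOCAB = ["は", "犬", "猫", "鳥"]

p = pitman_yor_prob("猫", ("は",), COUNTS, TABLES, d=0.5, theta=1.0, vocab_size=len(VOCAB))
print(p)  # nonzero although the bigram (は, 猫) was never observed
```

Summing the returned values over the four toy vocabulary items gives 1 for both the unigram and the bigram context, a quick sanity check that the discounted mass is redistributed rather than lost.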

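- 19. Nested Pitman-Yor Language Model (NPYLM) [Mochihashi, 2009]
  - The proposed method with the number of parts of speech set to 1 coincides with the NPYLM.
  - In other words, the proposed method is an extension of the NPYLM.
  - Character n-grams are used to smooth the word unigram.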

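- 20. Parameter estimation
  - A method that combines dynamic programming with MCMC
    - blocked Gibbs sampling
  - Quantities to estimate: the word n-gram language model and the parameters of the part-of-speech n-gram model, that is, the parameters on which $P(\mathbf{w} \mid s)$ is conditioned.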

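- 21. Learning algorithm
  1. Assign a random part of speech to each sentence.
  2. Treat each sentence as a single word and update the word and part-of-speech HPYLMs.
  3. Repeat the following until convergence:
     1. Select a sentence $s$ at random and remove its morphological analysis $\mathbf{w}(s)$ from the parameters.
     2. Sample a new analysis $\mathbf{w}'(s)$ from $P(\mathbf{w} \mid s)$ using the parameters after the removal.
     3. Update the parameters with $\mathbf{w}'(s)$.
  - For the language model update, see Y. W. Teh, A Bayesian Interpretation of Interpolated Kneser-Ney, Technical Report TRA2/06, School of Computing, NUS.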
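The control flow of steps 1 to 3 can be sketched independently of the language models. In the sketch below `ToyModel` is a hypothetical stand-in that only keeps (word, tag) counts, and its `sample` method draws a random split instead of sampling from $P(\mathbf{w} \mid s)$, so only the remove, resample, add loop is illustrated. A fixed number of sweeps over a shuffled sentence order replaces "until convergence", which is also an assumption.

```python
import random
from collections import Counter

class ToyModel:
    """Hypothetical stand-in for the word/POS HPYLMs: it only keeps (word, tag) counts."""

    def __init__(self, num_tags, rng):
        self.counts = Counter()
        self.num_tags = num_tags
        self.rng = rng

    def add(self, analysis):
        self.counts.update(analysis)

    def remove(self, analysis):
        self.counts.subtract(analysis)

    def sample(self, sentence):
        # Placeholder for sampling from P(w | s): one random cut, uniform tags.
        cut = self.rng.randint(1, len(sentence))
        words = [sentence[:cut]] + ([sentence[cut:]] if cut < len(sentence) else [])
        return [(w, self.rng.randrange(self.num_tags)) for w in words]

def blocked_gibbs(sentences, model, sweeps, rng):
    # Steps 1-2: treat every sentence as one word with a random tag.
    analyses = [[(s, rng.randrange(model.num_tags))] for s in sentences]
    for analysis in analyses:
        model.add(analysis)
    # Step 3: repeatedly remove, resample, and add back one sentence at a time.
    for _ in range(sweeps):
        for i in rng.sample(range(len(sentences)), len(sentences)):
            model.remove(analyses[i])                 # step 3.1
            analyses[i] = model.sample(sentences[i])  # step 3.2
            model.add(analyses[i])                    # step 3.3
    return analyses

rng = random.Random(0)
model = ToyModel(num_tags=2, rng=rng)
sentences = ["諸行無常の響", "我輩は猫である"]
analyses = blocked_gibbs(sentences, model, sweeps=3, rng=rng)
print(analyses)
```

The point of removing a sentence before resampling it is that the sample is then drawn conditioned on all other sentences only, which is what makes the procedure a Gibbs sampler over whole-sentence blocks.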

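- 22. Sampling a morphological analysis
  - Input: 「諸行無常の響」
  - Draw one sample according to the probability of each morphological analysis, for example:
    - P(諸行, 無常, の, 響, 1, 1, 2, 1) = 0.1
    - P(諸, 行, 無常, の響, 1, 1, 1, 2) = 0.01
    - ...
  - The number of combinations is enormous, so enumerating them is inefficient. Dynamic programming solves this efficiently.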

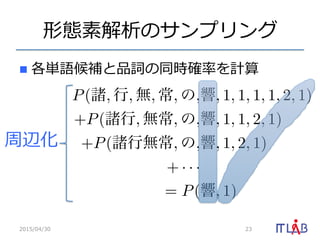

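- 23. Sampling a morphological analysis
  - Compute the joint probability of each word candidate and part of speech by marginalization:

    $$P(\text{諸}, \text{行}, \text{無}, \text{常}, \text{の}, \text{響}, 1, 1, 1, 1, 2, 1) + P(\text{諸行}, \text{無常}, \text{の}, \text{響}, 1, 1, 2, 1) + P(\text{諸行無常}, \text{の}, \text{響}, 1, 2, 1) + \cdots = P(\text{響}, 1)$$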

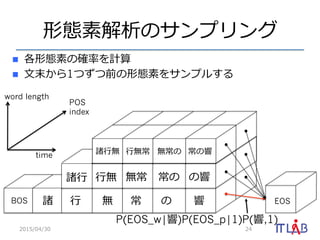

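- 24. Sampling a morphological analysis
  - Compute the probability of each morpheme.
  - Starting from the end of the sentence, sample the preceding morpheme one at a time.
  - Figure: a lattice from BOS to EOS over 諸 行 無 常 の 響, with candidate words of length 1 (諸, 行, 無, 常, の, 響), length 2 (諸行, 行無, 無常, 常の, の響), and length 3 (諸行無, 行無常, 無常の, 常の響). The axes are time, word length, and part-of-speech index.
  - The final step is weighted by $P(\mathrm{EOS}_w \mid \text{響})\, P(\mathrm{EOS}_p \mid 1)\, P(\text{響}, 1)$.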

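- 25. Computing the forward probabilities

    $$\alpha[t][k][z] = \sum_{j=1}^{t-k} \sum_{r=0}^{Z} P(c_{t-k}^{t} \mid c_{t-k-j+1}^{t-k}, z)\, P(z \mid r)\, \alpha[t-k][j][r]$$

  - Figure: the same lattice as on the previous slide (BOS to EOS over 諸 行 無 常 の 響, indexed by time, word length, and part of speech).
  - Example: $\alpha[6][1][1]$, that is, $\alpha[\text{響}][1]$.
  - In other words, the recursion marginalizes over the preceding segmentations to obtain $P(\text{響}, 1)$.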
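The forward table and the backward sampling pass from slides 22 to 25 can be sketched as follows. This is an illustration under assumptions, not the authors' implementation: `word_prob(word, prev_word, z)` and `tag_prob(z, r)` are hypothetical callables standing in for the word and part-of-speech bigram models, tags are indexed from 0 to `num_tags - 1` with separate `<BOS>` and `<EOS>` markers, and the toy scores are not normalized distributions.

```python
import random

BOS, EOS = "<BOS>", "<EOS>"

def forward(chars, num_tags, max_len, word_prob, tag_prob):
    """alpha[t][k][z]: score of chars[:t] summed over segmentations ending in a word of length k with tag z."""
    n = len(chars)
    alpha = [[[0.0] * num_tags for _ in range(max_len + 1)] for _ in range(n + 1)]
    for t in range(1, n + 1):
        for k in range(1, min(max_len, t) + 1):
            word = chars[t - k:t]
            for z in range(num_tags):
                if t == k:  # first word of the sentence
                    alpha[t][k][z] = word_prob(word, BOS, z) * tag_prob(z, BOS)
                    continue
                total = 0.0
                for j in range(1, min(max_len, t - k) + 1):
                    prev = chars[t - k - j:t - k]
                    for r in range(num_tags):
                        total += (word_prob(word, prev, z) * tag_prob(z, r)
                                  * alpha[t - k][j][r])
                alpha[t][k][z] = total
    return alpha

def sample_backward(chars, alpha, num_tags, max_len, word_prob, tag_prob, rng=random):
    """Sample (word, tag) pairs from the end of the sentence back to the start."""
    t, next_word, next_tag = len(chars), EOS, EOS
    result = []
    while t > 0:
        candidates, weights = [], []
        for k in range(1, min(max_len, t) + 1):
            word = chars[t - k:t]
            for z in range(num_tags):
                candidates.append((k, z))
                weights.append(alpha[t][k][z] * word_prob(next_word, word, next_tag)
                               * tag_prob(next_tag, z))
        k, z = rng.choices(candidates, weights=weights)[0]
        next_word, next_tag = chars[t - k:t], z
        result.append((next_word, z))
        t -= k
    return result[::-1]

# Toy scores (assumptions): a few favoured words, everything else penalized by length.
TOY_WORDS = {"諸行": 0.2, "無常": 0.2, "の": 0.3, "響": 0.2}

def toy_word_prob(word, prev_word, z):
    return 1.0 if word == EOS else TOY_WORDS.get(word, 0.01 ** len(word))

def toy_tag_prob(z, r):
    return 1.0 if z == EOS else 0.5

text = "諸行無常の響"
alpha = forward(text, 2, 4, toy_word_prob, toy_tag_prob)
sample = sample_backward(text, alpha, 2, 4, toy_word_prob, toy_tag_prob, random.Random(0))
print(sample)
```

Filling the table costs time proportional to the sentence length times the squares of the maximum word length and the number of tags, instead of enumerating every segmentation and tagging explicitly.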

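- 26. Evaluation
  - The proposed method is evaluated on several languages.
  - Datasets:

  | Language | Data | Training data | Test data |
  | --- | --- | --- | --- |
  | Japanese | Kyoto Corpus | 27,400 | 1,000 |
  | Japanese | BCCWJ OC | 20,000 | 1,000 |
  | Chinese | SIGHAN MSR | 86,924 | 3,985 |
  | Chinese | SIGHAN CITYU | 53,019 | 1,492 |
  | Chinese | SIGHAN PKU | 19,056 | 1,945 |
  | Thai | InterBEST Novel | 1,000 | 1,000 |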

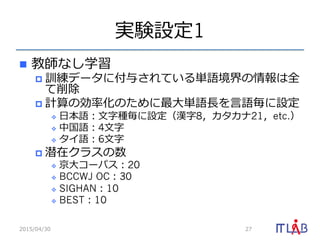

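- 27. Experimental setting 1
  - Unsupervised learning
    - All word boundary information attached to the training data is removed.
    - For computational efficiency, a maximum word length is set per language:
      - Japanese: set per character type (kanji 8, katakana 21, etc.)
      - Chinese: 4 characters
      - Thai: 6 characters
    - Number of latent classes:
      - Kyoto Corpus: 20
      - BCCWJ OC: 30
      - SIGHAN: 10
      - BEST: 10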

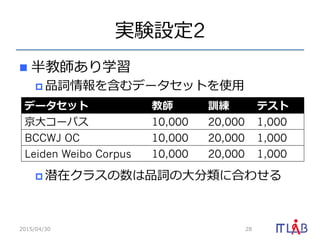

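- 28. Experimental setting 2
  - Semi-supervised learning
    - Datasets that include part-of-speech information are used.
    - The number of latent classes is matched to the coarse part-of-speech categories.

  | Dataset | Supervised | Training | Test |
  | --- | --- | --- | --- |
  | Kyoto Corpus | 10,000 | 20,000 | 1,000 |
  | BCCWJ OC | 10,000 | 20,000 | 1,000 |
  | Leiden Weibo Corpus | 10,000 | 20,000 | 1,000 |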

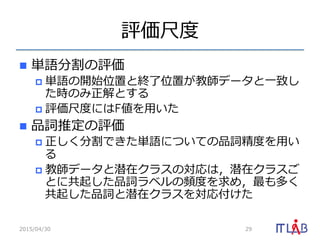

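- 29. Evaluation metrics
  - Word segmentation
    - A word counts as correct only when both its start and end positions match the gold data.
    - The F-measure is used as the metric.
  - Part-of-speech induction
    - Part-of-speech accuracy is measured on the correctly segmented words.
    - Latent classes are mapped to gold labels as follows: for each latent class, count the part-of-speech labels it co-occurs with, and map the class to the label it co-occurs with most often.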
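Both metrics are straightforward to compute from character spans. The sketch below scores a single sentence pair for brevity, whereas a corpus-level evaluation would accumulate the counts over all test sentences; the function names are assumptions.

```python
from collections import Counter, defaultdict

def spans(words):
    """Convert a word sequence into a set of (start, end) character spans."""
    out, start = set(), 0
    for w in words:
        out.add((start, start + len(w)))
        start += len(w)
    return out

def segmentation_f1(gold_words, pred_words):
    """F-measure where a word is correct only if both boundaries match."""
    gold, pred = spans(gold_words), spans(pred_words)
    correct = len(gold & pred)
    if correct == 0:
        return 0.0
    precision, recall = correct / len(pred), correct / len(gold)
    return 2 * precision * recall / (precision + recall)

def many_to_one_accuracy(gold, pred):
    """gold: [(word, POS)], pred: [(word, latent class)]; scored on matching spans only."""
    gold_tag, start = {}, 0
    for word, tag in gold:
        gold_tag[(start, start + len(word))] = tag
        start += len(word)
    pairs, start = [], 0
    for word, cls in pred:
        span = (start, start + len(word))
        start += len(word)
        if span in gold_tag:
            pairs.append((cls, gold_tag[span]))
    cooccurrence = defaultdict(Counter)
    for cls, tag in pairs:
        cooccurrence[cls][tag] += 1
    # Map each latent class to the gold label it co-occurs with most often.
    mapping = {cls: c.most_common(1)[0][0] for cls, c in cooccurrence.items()}
    correct = sum(mapping[cls] == tag for cls, tag in pairs)
    return correct / len(pairs) if pairs else 0.0

f1 = segmentation_f1(["この", "先", "生き", "のこれる"], ["この", "先生", "きのこ", "れる"])
acc = many_to_one_accuracy([("我輩", "名詞"), ("は", "助詞"), ("猫", "名詞")],
                           [("我輩", 3), ("は", 2), ("猫", 3)])
print(f1, acc)
```

Because the mapping is many-to-one, several latent classes may map to the same gold label, so the accuracy tends to rise as the number of latent classes grows.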

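- 30. Evaluation of unsupervised word segmentation

  | Data | PYHSMM | NPYLM | BE+MDL | HDP+HMM |
  | --- | --- | --- | --- | --- |
  | Kyoto Corpus | 0.715 | 0.621 | 0.713 | - |
  | BCCWJ | 0.705 | - | - | - |
  | MSR | 0.829 | 0.802 | 0.782 | 0.817 |
  | CITYU | 0.817 | 0.824 | 0.787 | - |
  | PKU | 0.816 | - | 0.808 | 0.811 |
  | BEST | 0.821 | - | 0.821 | - |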

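- 31. Evaluation of unsupervised part-of-speech induction
  - The results are better than when the correct word segmentation is given.

  | Data | PYHSMM | NPYLM+BHMM | Gold segmentation+BHMM |
  | --- | --- | --- | --- |
  | Kyoto Corpus | 0.574 | 0.538 | 0.495 |
  | BCCWJ | 0.502 | 0.441 | 0.442 |
  | LWC | 0.330 | 0.309 | 0.329 |

  - Note: the gold labels for LWC were assigned by an existing Chinese morphological analyzer, so these figures are for reference only.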

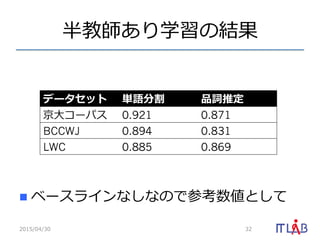

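- 32. Results of semi-supervised learning
  - No baseline is available, so the figures are given for reference.

  | Dataset | Word segmentation | Part-of-speech induction |
  | --- | --- | --- |
  | Kyoto Corpus | 0.921 | 0.871 |
  | BCCWJ | 0.894 | 0.831 |
  | LWC | 0.885 | 0.869 |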

- 33. Examples of analysis results
  - Mikawa dialect example (K=50), shown as word/latent class: ウェーブスタジアム/34 刈谷/28 に/2 FC/1 刈谷/28 の/2 試合/31 を/2 観/35 に/2 行/27 って/40 み/35 りん/3 フォロバ/17 ありがと/19 ございます/19 !/2 よろしく/19 頼む/35 のん/3 。/10 これ/20 ぎし/37 しか/37 ない/12 だ/12 かん/3 ?/10 あけおめ/19 だ/12 ぞん/3 !!/10 今年/18 も/2 よろしく/19 頼む/35 ぞん/3 !!/10 おま/13 ー/5 の/2 頭/25 、/2 ちんじゅう/35 だのん/3 !!/8 w/8 ぐろ/36 と/24 も/2 言/15 う/12 のん/3 !!/8 とちんこで/35 結んで/19 まったもん/12 で/12 、/2 と/37 れ/12 や/45 せん/13 に/13 ー/5 (^_^;)/10 のんほい/12 は/2 若い/24 人/20 は/2 あんまし/30 使/15 わん/12 ぞん/3 !/10 じいさん/24 、/2 ばあさん/37 世代/25 の/2 言葉/27 だ/12 のん/3 !/8 /6

- 37. References
  - Miaohong Chen, et al. 2014. A Joint Model for Unsupervised Chinese Word Segmentation. In EMNLP 2014, pages 854–863.
  - Sharon Goldwater, et al. A Fully Bayesian Approach to Unsupervised Part-of-Speech Tagging. In Proceedings of ACL 2007, pages 744–751.
  - Sharon Goldwater, et al. Contextual Dependencies in Unsupervised Word Segmentation. In Proceedings of ACL/COLING 2006, pages 673–680.
  - Matthew J. Johnson, et al. Bayesian Nonparametric Hidden Semi-Markov Models. Journal of Machine Learning Research, 14:673–701.
  - Pierre Magistry, et al. Can MDL Improve Unsupervised Chinese Word Segmentation? In Proceedings of the Seventh SIGHAN Workshop on Chinese Language Processing, pages 2–10.
  - Daichi Mochihashi, et al. Bayesian Unsupervised Word Segmentation with Nested Pitman-Yor Language Modeling. In Proceedings of ACL-IJCNLP 2009, pages 100–108.
  - Yee Whye Teh. A Bayesian Interpretation of Interpolated Kneser-Ney. Technical Report TRA2/06, School of Computing, NUS.
  - Valentin Zhikov, et al. An Efficient Algorithm for Unsupervised Word Segmentation with Branching Entropy and MDL. In EMNLP 2010, pages 832–842.
