Evaluation of 3D gesture interfaces

For many years, interaction with computers was limited to the keyboard and mouse. More recently, touchscreens have become popular, especially in mobile computing. However, accurate three-dimensional gestures are still difficult to recognize. One approach is to use depth cameras, which have attracted renewed attention in both academia and industry since the release of inexpensive consumer devices such as Microsoft's Kinect in 2010. This presentation reviews depth camera technologies and how gestures can be used in new interface designs. The first part establishes a framework for evaluating the suitability of depth cameras for gesture recognition. The second part focuses on interaction in three-dimensional virtual spaces: I developed a gesture interface for an existing application for navigating virtual spaces and constructing LEGO models, and performed a user study comparing gesture input with traditional mouse and keyboard input. Finally, I discuss the implications of the findings for future research on gesture interfaces.

![Results: times for traditional and gesture input (time in minutes; mouse vs. gesture)](https://image.slidesharecdn.com/presentationpdf-140211141942-phpapp02/85/Evaluation-of-3d-gesture-interfaces-29-320.jpg)

- 1. Evaluation of Gesture Interfaces, 24 June 2013, Alexander Schulze (as2271@cam.ac.uk)

- 6. hand gesture

- 8. Hand gesture recognition: depth image sequence → position tracking → gesture recognition → gesture description (e.g., "hand 1: U move", "hand 2: show 5")

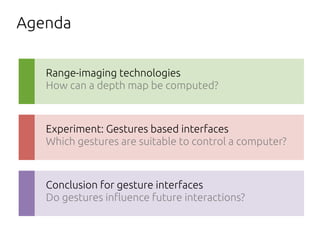
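Slide 8's chain from a depth image sequence through position tracking and gesture recognition to a gesture description can be illustrated with a deliberately simplified sketch. It assumes, hypothetically, that each depth frame is a 2D list of millimetre readings, that the hand is the object nearest the camera, and that a "U move" is a trajectory that dips down, comes back up, and moves sideways. The function names (`track_hand`, `recognize`, `describe`, `make_frame`) and thresholds are illustrative, not taken from the presentation; static poses such as "show 5" would need hand-shape analysis that this sketch omits.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]   # depth in millimetres per pixel; 0 means "no reading"
Point = Tuple[int, int]   # (x, y) pixel coordinates; y grows downward


def track_hand(frame: Frame) -> Optional[Point]:
    """Hypothetical tracker: the valid pixel nearest the camera is taken as the hand."""
    best: Optional[Point] = None
    best_depth: Optional[int] = None
    for y, row in enumerate(frame):
        for x, depth in enumerate(row):
            if depth > 0 and (best_depth is None or depth < best_depth):
                best, best_depth = (x, y), depth
    return best


def recognize(trajectory: List[Point], min_dip: int = 2, min_width: int = 2) -> str:
    """Label a trajectory 'U move' if it dips down, comes back up, and moves sideways."""
    if len(trajectory) < 3:
        return "unknown"
    xs = [x for x, _ in trajectory]
    ys = [y for _, y in trajectory]
    lowest = max(ys)  # image y grows downward, so the largest y is the lowest point
    dipped = lowest - ys[0] >= min_dip and lowest - ys[-1] >= min_dip
    moved_sideways = abs(xs[-1] - xs[0]) >= min_width
    return "U move" if dipped and moved_sideways else "unknown"


def describe(frames: List[Frame], hand_id: int) -> str:
    """Depth image sequence -> position tracking -> gesture recognition -> description."""
    trajectory = [p for p in map(track_hand, frames) if p is not None]
    return f"hand {hand_id}: {recognize(trajectory)}"


def make_frame(hand: Point, size: int = 5, background_mm: int = 2000) -> Frame:
    """Synthetic test frame: flat background with one nearer 'hand' pixel."""
    frame = [[background_mm] * size for _ in range(size)]
    x, y = hand
    frame[y][x] = 800
    return frame


path = [(0, 0), (1, 2), (2, 4), (3, 2), (4, 0)]
print(describe([make_frame(p) for p in path], hand_id=1))  # hand 1: U move
```

Real trackers segment the whole hand region rather than a single pixel; the nearest-point assumption only keeps the example short.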

- 9. Agenda: Range-imaging technologies (How can a depth map be computed?); Experiment: gesture-based interfaces (Which gestures are suitable for controlling a computer?); Conclusion for gesture interfaces (Do gestures influence future interactions?)

- 11-15. Range-imaging for gesture interfaces: What devices are suitable for gesture interfaces to be widely used? Affordable, compact, mobile, markerless

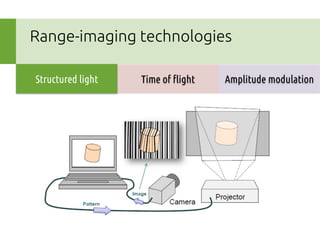

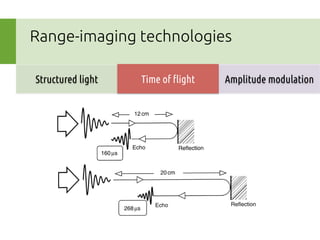

- 16. Range-imaging technologies Structured light Time of "ight Amplitude modulation

- 17. Range-imaging technologies Structured light Time of "ight Amplitude modulation

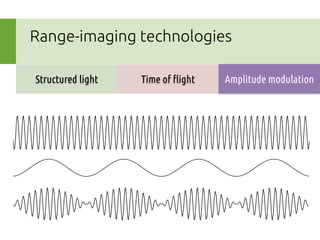

- 18. Range-imaging technologies Structured light Time of "ight Amplitude modulation

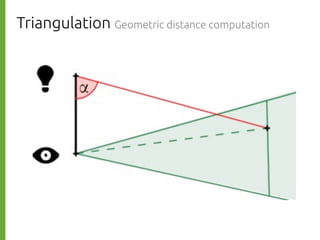

- 19. Triangulation: geometric distance computation

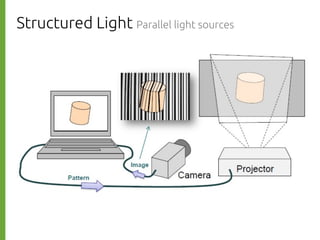
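The geometric distance computation behind triangulation can be made concrete with the standard pinhole relation for a rectified emitter/camera (or camera/camera) pair: depth $Z = f b / d$, where $f$ is the focal length in pixels, $b$ the baseline and $d$ the disparity. Differentiating gives a depth step of roughly $Z^2 / (f b)$ per pixel of disparity, which is why depth resolution (a comparison criterion on slide 24) degrades quadratically with range. The focal length and baseline below are hypothetical, illustrative values, not specifications of any particular camera.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Triangulated depth Z = f * b / d for a rectified pinhole setup."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_resolution(focal_px: float, baseline_m: float, depth_m: float,
                     disparity_step_px: float = 1.0) -> float:
    """Approximate depth change for one disparity step: dZ ~= Z^2 / (f * b) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_step_px


f, b = 580.0, 0.075  # hypothetical focal length (px) and baseline (m)
print(round(depth_from_disparity(f, b, 29.0), 3))  # 1.5
print(round(depth_resolution(f, b, 1.5), 4))       # 0.0517
print(round(depth_resolution(f, b, 3.0), 4))       # 0.2069
```

Doubling the distance from 1.5 m to 3 m quadruples the depth step, from about 5 cm to about 21 cm per pixel of disparity in this example.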

- 20. Structured light: parallel light sources

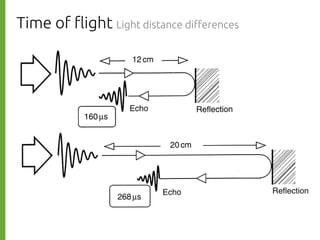
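One widely used structured-light scheme (not necessarily the one drawn on the slide, nor what any given consumer camera uses) projects a sequence of parallel binary stripe patterns whose columns are labelled in Gray code. Each camera pixel then observes a lit/unlit bit sequence that identifies the projector column illuminating it, and that correspondence is what gets triangulated. The sketch assumes ideal thresholding of each captured image into 0/1; `make_patterns` and `decode_column` are hypothetical helper names.

```python
from typing import List


def gray_encode(n: int) -> int:
    """Binary-reflected Gray code: adjacent integers differ in exactly one bit."""
    return n ^ (n >> 1)


def gray_decode(g: int) -> int:
    """Invert gray_encode by XOR-folding all right shifts of g."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def make_patterns(num_columns: int) -> List[List[int]]:
    """One stripe pattern per bit (most significant first); 1 means column c is lit."""
    bits = max(1, (num_columns - 1).bit_length())
    return [
        [(gray_encode(c) >> (bits - 1 - k)) & 1 for c in range(num_columns)]
        for k in range(bits)
    ]


def decode_column(observed_bits: List[int]) -> int:
    """Recover the projector column from the bit sequence seen at one camera pixel."""
    g = 0
    for bit in observed_bits:
        g = (g << 1) | bit
    return gray_decode(g)


patterns = make_patterns(8)
for row in patterns:
    print(row)
# A camera pixel lit by projector column 5 sees one bit per pattern:
print(decode_column([p[5] for p in patterns]))  # 5
```

Gray code is chosen over plain binary because neighbouring columns differ in a single bit, so a thresholding error at a stripe boundary shifts the decoded column by at most one.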

- 21. Time of flight: light distance differences

- 22. Amplitude modulation: sending light waves (coherent light source, signal, amplitude-modulated light)

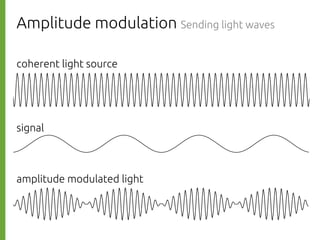
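Slides 21 and 22 rest on two textbook relations. With pulsed time of flight the light travels to the object and back, so $d = c\,t/2$. With amplitude-modulated (continuous-wave) light, the distance is recovered from the phase shift $\varphi$ between emitted and received modulation, $d = c\,\varphi / (4\pi f_{\text{mod}})$, which is unambiguous only up to $c / (2 f_{\text{mod}})$. The sketch below also uses a common four-sample phase estimate and assumes idealized samples $c_k = A\cos(\varphi + k\pi/2) + B$; the 20 MHz modulation frequency and the 2 m target are hypothetical values chosen for illustration.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def pulsed_tof_distance(round_trip_s: float) -> float:
    """Pulsed time of flight: light goes out and back, so halve the path length."""
    return C * round_trip_s / 2.0


def phase_from_samples(c0: float, c1: float, c2: float, c3: float) -> float:
    """Four-sample phase estimate, assuming c_k = A*cos(phi + k*pi/2) + B."""
    return math.atan2(c3 - c1, c0 - c2) % (2 * math.pi)


def am_distance(phase_rad: float, mod_freq_hz: float) -> float:
    """Amplitude modulation: phi = 4*pi*f*d/c, so d = c*phi / (4*pi*f)."""
    return C * phase_rad / (4 * math.pi * mod_freq_hz)


def ambiguity_range(mod_freq_hz: float) -> float:
    """Beyond c / (2*f) the phase wraps around and distances alias."""
    return C / (2 * mod_freq_hz)


f_mod = 20e6   # hypothetical modulation frequency: 20 MHz
true_d = 2.0   # simulated target distance in metres
phi = 4 * math.pi * f_mod * true_d / C
samples = [math.cos(phi + k * math.pi / 2) + 1.5 for k in range(4)]

print(round(pulsed_tof_distance(10e-9), 3))                        # 1.499
print(round(am_distance(phase_from_samples(*samples), f_mod), 6))  # 2.0
print(round(ambiguity_range(f_mod), 3))                            # 7.495
```

A 10 ns round trip already corresponds to about 1.5 m, which illustrates why pulsed time of flight needs very fast timing electronics, whereas the phase-based approach trades that for a limited unambiguous range.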

- 23. Comparison of suitable depth cameras: time of flight, amplitude modulation and structured light ranked (<) by range, accuracy and image resolution

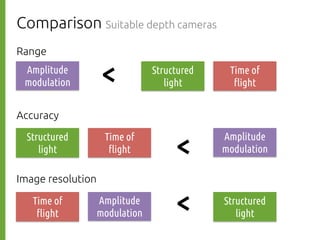

- 24. Comparison of suitable depth cameras: depth resolution and range; update rate and latency; robustness; spatial resolution of the depth image

- 25. 0.01 mm

- 26. Experiment: gesture-based interfaces

- 29. Results: times for traditional and gesture input (time in minutes; mouse vs. gesture)

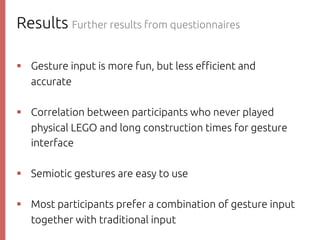
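The presentation does not state which statistical test, if any, was applied to the completion times on slide 29. If each participant completed the task with both inputs (a within-subjects design, which the slides do not confirm), one assumption-light option is an exact paired permutation (sign-flip) test on the per-participant time differences. The function name `paired_permutation_test` is hypothetical and no study data is included here.

```python
from itertools import product
from statistics import mean
from typing import Sequence


def paired_permutation_test(times_a: Sequence[float], times_b: Sequence[float]) -> float:
    """Exact two-sided sign-flip test on per-participant differences (a - b).

    Under the null hypothesis the sign of each difference is arbitrary, so every
    one of the 2**n sign assignments is equally likely. The p-value is the share
    of assignments whose mean difference is at least as extreme as observed.
    """
    if len(times_a) != len(times_b) or not times_a:
        raise ValueError("need equal-length, non-empty paired samples")
    diffs = [a - b for a, b in zip(times_a, times_b)]
    observed = abs(mean(diffs))
    extreme = 0
    total = 0
    for signs in product((1, -1), repeat=len(diffs)):
        total += 1
        # Small tolerance so the observed assignment itself always counts.
        if abs(mean(s * d for s, d in zip(signs, diffs))) >= observed - 1e-12:
            extreme += 1
    return extreme / total
```

Enumerating all $2^n$ assignments is only practical for small groups (roughly up to 20 participants); larger studies would sample random sign flips instead.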

- 30. Results: further results from questionnaires: gesture input is more fun, but less efficient and accurate; there is a correlation between never having played with physical LEGO and long construction times with the gesture interface; semiotic gestures are easy to use; most participants prefer a combination of gesture input and traditional input

- 31. Conclusion for gesture interfaces

- 32. Navigation (accuracy and precision): imprecise actions can be controlled by gestures; gestures might support creativity

- 33. Manipulation (accuracy and precision): gesture input cannot replace precise mouse and keyboard input

- 34. Conclusion: gesture interpretation. Most current gesture interfaces are built for controlling the computer; however, gestures are also a subconscious expression of human feelings. Experience with haptic feedback in physical (ergotic) gestures helps when simulating haptic feedback for related pseudo-ergotic gestures

- 35. Questions?