INSTRUCTION LEVEL PARALLELISM

This document discusses instruction-level parallelism (ILP): the ability to execute multiple instructions of a program simultaneously. It places ILP in a hierarchy of parallelism, from bit level through instruction and loop level to thread level, where each level exploits operations that do not depend on one another. The document gives an example to illustrate ILP and explains that both compilers and processors aim to maximize it. It outlines several microarchitectural ILP techniques: instruction pipelining, superscalar execution, out-of-order execution, register renaming, speculative execution, and branch prediction. Pipelining and superscalar processing are explained in more detail.
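The summary mentions an example of ILP without reproducing it. A common textbook illustration (not necessarily the one used in the slides) is the sequence e = a + b, f = c + d, m = e * f: the first two operations are independent and can issue in the same cycle, while the third must wait for both, so three operations finish in two cycles and the ILP is 3/2 = 1.5. The Python sketch below computes that idealized schedule. The names Instr, schedule_asap and ilp are hypothetical, and the model assumes unlimited functional units, one-cycle latency for every operation, and that register renaming has removed all false (write-after-read and write-after-write) dependences.

```python
from dataclasses import dataclass


@dataclass
class Instr:
    text: str               # human-readable form, e.g. "e = a + b"
    dest: str               # name of the value this instruction writes
    srcs: tuple[str, ...]   # names of the values it reads


def schedule_asap(program: list[Instr]) -> list[int]:
    """Earliest issue cycle (1-based) for each instruction, in program order.

    Idealized model: unlimited functional units, one-cycle latency, and only
    true read-after-write dependences (false dependences assumed renamed away).
    """
    ready_after: dict[str, int] = {}  # value -> cycle in which it is produced
    cycles: list[int] = []
    for ins in program:
        # An instruction issues one cycle after its latest-produced input;
        # inputs not produced inside the program are available from the start.
        start = 1 + max((ready_after.get(s, 0) for s in ins.srcs), default=0)
        cycles.append(start)
        ready_after[ins.dest] = start
    return cycles


def ilp(program: list[Instr]) -> float:
    """Instructions divided by the critical-path length of the schedule."""
    return len(program) / max(schedule_asap(program))


program = [
    Instr("e = a + b", "e", ("a", "b")),
    Instr("f = c + d", "f", ("c", "d")),
    Instr("m = e * f", "m", ("e", "f")),  # needs both results above
]

for cycle, ins in zip(schedule_asap(program), program):
    print(f"cycle {cycle}: {ins.text}")
print(f"ILP = {ilp(program):.2f}")
```

Running it lists both additions in cycle 1, the multiplication in cycle 2, and then prints ILP = 1.50. If every instruction instead depended on the one before it, the schedule would collapse to one instruction per cycle (ILP = 1.0), which is why compilers and processors try to find or create independent operations.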

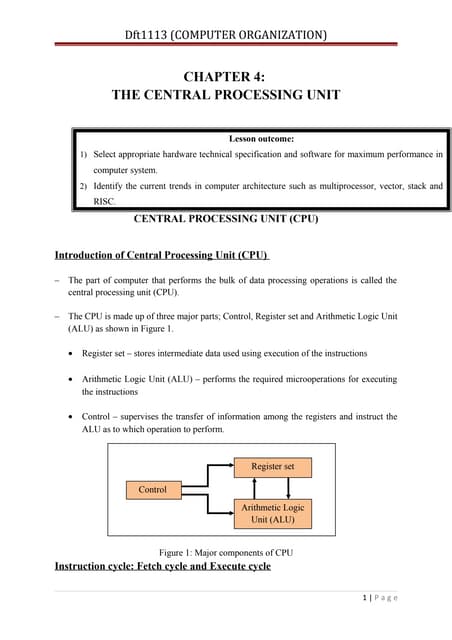

![WHAT IS A PARALLEL INSTRUCTION?](https://image.slidesharecdn.com/f2ccb870-9ddf-4739-aef7-8e12d9256a14-150413000941-conversion-gate01/85/INSTRUCTION-LEVEL-PARALLALISM-3-320.jpg)

WHAT IS A PARALLEL INSTRUCTION?

- Parallel instructions are a set of instructions that do not depend on each other to be executed.
- Hierarchy
  - Bit-level parallelism
    - 16-bit add on an 8-bit processor
  - Instruction-level parallelism
  - Loop-level parallelism
    - for (i=1; i<=1000; i=i+1) x[i] = x[i] + y[i];
  - Thread-level parallelism
    - multi-core computers
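The slide's two concrete examples can be made executable. The Python sketch below is an illustration under stated assumptions, not code from the slides: add8 models a hypothetical 8-bit ALU, add16_on_8bit shows why a 16-bit add takes two dependent steps on an 8-bit processor (the extra step that a wider datapath, i.e. bit-level parallelism, removes), and loop_chunked shows that the iterations of x[i] = x[i] + y[i] are independent, so they can be split up and run in any order. All function names are hypothetical, and the code models only data dependences, not timing.

```python
MASK8 = 0xFF


# --- Bit-level parallelism: a 16-bit add on an 8-bit datapath ---

def add8(a: int, b: int, carry_in: int = 0) -> tuple[int, int]:
    """Model one 8-bit ALU add; return (8-bit sum, carry-out)."""
    total = (a & MASK8) + (b & MASK8) + carry_in
    return total & MASK8, total >> 8


def add16_on_8bit(x: int, y: int) -> int:
    """Add two 16-bit values with two dependent 8-bit adds.

    The high-byte add needs the carry from the low-byte add, so an 8-bit
    processor takes two steps where a 16-bit datapath would take one.
    """
    lo, carry = add8(x, y)               # add8 keeps only the low bytes
    hi, _ = add8(x >> 8, y >> 8, carry)  # carry out of bit 15 is dropped
    return (hi << 8) | lo


# --- Loop-level parallelism: x[i] = x[i] + y[i] ---

N = 1000  # the slide's loop runs i = 1..1000; Python lists index 0..999


def loop_sequential(x: list[int], y: list[int]) -> list[int]:
    x = list(x)  # work on a copy so the caller's list is unchanged
    for i in range(len(x)):
        x[i] = x[i] + y[i]
    return x


def loop_chunked(x: list[int], y: list[int], n_chunks: int = 4) -> list[int]:
    """Run the same loop as independent chunks, last chunk first.

    No iteration reads a value that another iteration writes, so any chunk
    order (or one chunk per core) produces the same result.
    """
    x = list(x)
    size = max(1, (len(x) + n_chunks - 1) // n_chunks)
    for lo in reversed(range(0, len(x), size)):
        for i in range(lo, min(lo + size, len(x))):
            x[i] = x[i] + y[i]
    return x


x = list(range(N))
y = [2 * v for v in range(N)]
print(hex(add16_on_8bit(0x12FF, 0x0001)))
print(loop_sequential(x, y) == loop_chunked(x, y))
```

Running it prints 0x1300 and True. By contrast, a loop such as x[i] = x[i-1] + y[i] carries a dependence from one iteration to the next, so its chunks could not be reordered or spread across cores without changing the result.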


What is simultaneous multithreadingFraboni Ec

╠²

Simultaneous multithreading (SMT) allows multiple independent threads to issue and execute instructions simultaneously each clock cycle by sharing the functional units of a superscalar processor. This improves performance over conventional multithreading approaches like coarse-grained and fine-grained multithreading. SMT provides good performance across a wide range of workloads by utilizing instruction issue slots and execution resources that would otherwise go unused when a single thread is limited by dependencies or cache misses. Implementing SMT requires minimal additional hardware like multiple program counters and per-thread scheduling structures.POLITEKNIK MALAYSIA

POLITEKNIK MALAYSIAAiman Hud

╠²

The CPU is the central processing unit of a computer and consists of three main parts - the control unit, register set, and ALU. The control unit directs operations between the register set and ALU. The register set stores intermediate data and the ALU performs arithmetic and logic operations. The CPU follows a fetch-execute cycle where it fetches instructions from memory and stores them in the instruction register before executing them. Common instruction types include processor-memory operations, I/O operations, data processing, and control operations.Design pipeline architecture for various stage pipelines

Design pipeline architecture for various stage pipelinesMahmudul Hasan

╠²

This document discusses the concepts of single-cycle control, multi-cycle control, and pipelining in processors. It explains that single-cycle control has a low CPI but a long clock period, while multi-cycle control has a short clock period but high CPI. Pipelining allows overlapping the execution of instructions to improve throughput. The document presents diagrams of 5-stage instruction pipelines and describes the fetch, decode, execute, memory, and write-back stages. It also discusses pipeline hazards and performance improvements from pipelining over single-cycle and multi-cycle designs.Basic structure of computers by aniket bhute

Basic structure of computers by aniket bhuteAniket Bhute

╠²

The document summarizes the basic structure and functional units of computers. It discusses how computers handle information through instructions and data. The main functional units that process information are the memory unit, arithmetic and logic unit (ALU), and control unit. It also describes the number representation systems used in computers, focusing on the two's complement system for signed integers. Addition and subtraction are performed through two's complement arithmetic.Implementing True Zero Cycle Branching in Scalar and Superscalar Pipelined Pr...

Implementing True Zero Cycle Branching in Scalar and Superscalar Pipelined Pr...IDES Editor

╠²

In this paper, we have proposed a novel architectural

technique which can be used to boost performance of modern

day processors. It is especially useful in certain code constructs

like small loops and try-catch blocks. The technique is aimed

at improving performance by reducing the number of

instructions that need to enter the pipeline itself. We also

demonstrate its working in a scalar pipelined soft-core

processor developed by us. Lastly, we present how a superscalar

microprocessor can take advantage of this technique and

increase its performance.Pipeline Computing by S. M. Risalat Hasan Chowdhury

Pipeline Computing by S. M. Risalat Hasan ChowdhuryS. M. Risalat Hasan Chowdhury

╠²

The document discusses pipeline computing and its various types and applications. It defines pipeline computing as a technique to decompose a sequential process into parallel sub-processes that can execute concurrently. There are two main types - linear and non-linear pipelines. Linear pipelines use a single reservation table while non-linear pipelines use multiple tables. Common applications of pipeline computing include instruction pipelines in CPUs, graphics pipelines in GPUs, software pipelines using pipes, and HTTP pipelining. The document also discusses implementations of pipeline computing and its advantages like reduced cycle time and increased instruction throughput.Debate on RISC-CISC

Debate on RISC-CISCkollatiMeenakshi

╠²

This document discusses the differences between Complex Instruction Set Computers (CISCs) and Reduced Instruction Set Computers (RISCs). CISCs use multi-step instructions to reduce the number of instructions needed, but this can decrease performance if the complex instructions take significantly longer to execute. RISCs aim to improve performance by limiting memory access to load and store instructions, keeping most data in registers to reduce processing time. While CISCs may have smaller programs, RISCs can have faster execution speeds by optimizing the number of clock cycles needed per instruction.More from Kamran Ashraf (6)

The Maximum Subarray Problem

The Maximum Subarray ProblemKamran Ashraf

╠²

The document discusses the maximum subarray problem and different solutions to solve it. It defines the problem as finding a contiguous subsequence within a given array that has the largest sum. It presents a brute force solution with O(n2) time complexity and a more efficient divide and conquer solution with O(nlogn) time complexity. The divide and conquer approach recursively finds maximum subarrays in the left and right halves of the array and the maximum crossing subarray to return the overall maximum.Ubiquitous Computing

Ubiquitous ComputingKamran Ashraf

╠²

Ubiquitous computing refers to technology that is integrated into everyday life to the extent that it is indistinguishable from it. The vision is for computing services to be available anytime and anywhere through devices that are increasingly more powerful, smaller, and cheaper. Ubiquitous computing is changing daily activities by allowing people to communicate and interact with hundreds of computing devices in new ways. However, it also presents challenges in systems design, security and privacy, and how teaching and learning can take advantage of ubiquitous access to resources and tools.Application programming interface sockets

Application programming interface socketsKamran Ashraf

╠²

The document discusses application programming interfaces (APIs) and how they allow network applications to communicate. It explains that APIs provide an interface between operating systems and network protocols. The socket interface API, defined in Unix, became widely adopted and allowed applications to work across different operating systems. The document then describes the typical functions used in client and server applications to create sockets, establish connections, send/receive data, and close sockets. These include functions like socket(), bind(), listen(), accept(), connect(), send(), and recv().Error Detection types

Error Detection typesKamran Ashraf

╠²

Certain types of errors cannot be detected by error detection algorithms.

1. Two-dimensional parity cannot detect few 4-bit errors or errors of 5 bits or more. CRC can detect errors up to 32 bits.

2. Checksums cannot detect errors where two data items are exchanged or a data item value is increased and another is decreased by the same amount.

3. CRC cannot detect all burst errors affecting an even number of bits, or burst errors longer than the polynomial degree. More complex algorithms like CRC are needed for safe transmission.VIRTUAL MEMORY

VIRTUAL MEMORYKamran Ashraf

╠²

Virtual memory allows programs to execute without requiring their entire address space to be resident in physical memory. It uses virtual addresses that are translated to physical addresses by the hardware. This translation occurs via page tables managed by the operating system. When a virtual address is accessed, its virtual page number is used as an index into the page table to obtain the corresponding physical page frame number. If the page is not in memory, a page fault occurs and the OS handles loading it from disk. Paging partitions both physical and virtual memory into fixed-sized pages to address fragmentation issues. Segmentation further partitions the virtual address space into logical segments. Hardware support for segmentation involves a segment table containing base/limit pairs for each segment. Translation lookaside buffersGraphic Processing Unit

Graphic Processing UnitKamran Ashraf

╠²

This document discusses graphics processing units (GPUs) and GPU rendering. It describes how GPUs assist CPUs in performing complex rendering calculations more quickly. The document outlines some of the latest and most powerful GPU models from AMD and Nvidia, such as the Radeon R9 295X2 and GeForce GTX Titan Z. It also discusses challenges in GPU rendering like realistic lighting simulations and high power consumption. GPU-accelerated computing is increasing performance across many applications by offloading compute-intensive tasks to thousands of GPU cores.INSTRUCTION LEVEL PARALLALISM

- 1. INSTRUCTION LEVEL PARALLELISM
  - Presented by Kamran Ashraf (13-NTU-4009)

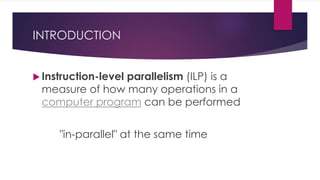

- 2. INTRODUCTION
  - Instruction-level parallelism (ILP) is a measure of how many of the operations in a computer program can be performed in parallel, that is, at the same time.

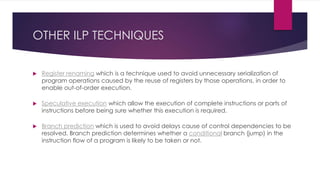

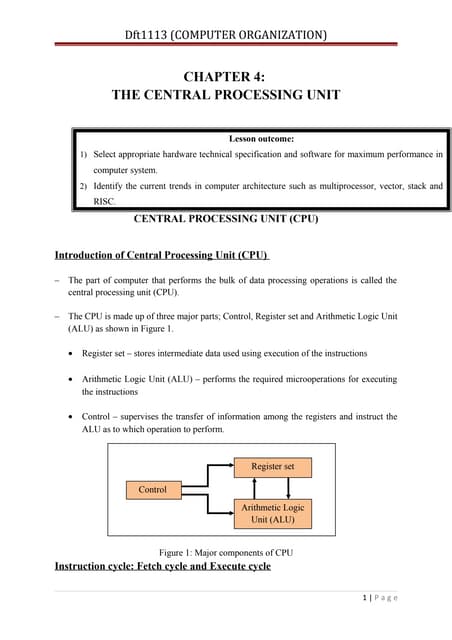

- 3. WHAT IS A PARALLEL INSTRUCTION?
  - Parallel instructions are a set of instructions that do not depend on each other to be executed.
  - Hierarchy of parallelism:
    - Bit-level parallelism
      - e.g., a 16-bit addition on an 8-bit processor needs two instructions, where a 16-bit processor needs one
    - Instruction-level parallelism
    - Loop-level parallelism
      - e.g., `for (i=1; i<=1000; i=i+1) x[i] = x[i] + y[i];`, where every iteration is independent of the others
    - Thread-level parallelism
      - e.g., multi-core computers
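The bit-level example above can be made concrete with a small sketch. It assumes unsigned 16-bit operands and models an 8-bit ALU that can only add bytes, so the 16-bit sum takes two additions (low bytes, then high bytes plus the carry), while a 16-bit ALU needs one. The function names are hypothetical, chosen for illustration.

```python
def add16_on_8bit_alu(a: int, b: int) -> tuple[int, int]:
    """Add two unsigned 16-bit values using only 8-bit additions.

    Returns (sum modulo 2**16, number of ALU operations used).
    """
    lo = (a & 0xFF) + (b & 0xFF)        # 1st addition: low bytes
    carry = lo >> 8                     # carry out of the low byte (0 or 1)
    hi = (a >> 8) + (b >> 8) + carry    # 2nd addition: high bytes plus carry
    result = ((hi & 0xFF) << 8) | (lo & 0xFF)
    return result, 2


def add16_on_16bit_alu(a: int, b: int) -> tuple[int, int]:
    """The same addition on a 16-bit ALU: a single operation."""
    return (a + b) & 0xFFFF, 1


print(add16_on_8bit_alu(0x12FF, 0x0001))   # two operations
print(add16_on_16bit_alu(0x12FF, 0x0001))  # one operation, same sum
```

Both functions produce the same sum; the wider ALU simply does the work in half as many operations, which is the gain bit-level parallelism refers to.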

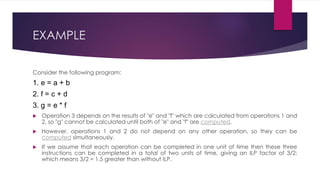

- 4. EXAMPLE
  - Consider the following program:
    1. `e = a + b`
    2. `f = c + d`
    3. `g = e * f`
  - Operation 3 depends on the results "e" and "f", which are calculated by operations 1 and 2, so "g" cannot be calculated until both "e" and "f" have been computed.
  - However, operations 1 and 2 do not depend on any other operation, so they can be computed simultaneously.
  - If we assume that each operation completes in one unit of time, these three instructions can be completed in a total of two units of time instead of three, giving an ILP of 3/2 = 1.5: the program runs 1.5 times as fast as it would without ILP.
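The ILP figure in the example can be reproduced by scheduling each operation as soon as its inputs are ready. This is a minimal sketch under the slide's assumptions (every operation takes one unit of time, unlimited functional units), plus one more: each value is written only once. The operator itself (+ or *) does not matter here, only which values each operation reads. The function `ilp_factor` is a hypothetical name.

```python
def ilp_factor(program: list[tuple[str, str, str]]) -> tuple[int, float]:
    """program: list of (dest, src1, src2) operations in program order.

    Returns (units of time needed with ILP, ILP factor = operations / time).
    """
    ready_at: dict[str, int] = {}   # time at which each computed value is available
    finish = 0
    for dest, src1, src2 in program:
        # An operation starts once both inputs exist; program inputs
        # (a, b, c, d) are not in the table and count as ready at time 0.
        start = max(ready_at.get(src1, 0), ready_at.get(src2, 0))
        ready_at[dest] = start + 1  # unit latency
        finish = max(finish, ready_at[dest])
    return finish, len(program) / finish


example = [
    ("e", "a", "b"),   # 1. e = a + b
    ("f", "c", "d"),   # 2. f = c + d
    ("g", "e", "f"),   # 3. g = e * f (needs both e and f)
]
print(ilp_factor(example))
```

Operations 1 and 2 both finish at time 1 and operation 3 at time 2, so the sketch reports two units of time and an ILP of 1.5. A fully dependent chain of three operations would report 3 units and an ILP of 1.0.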

- 5. WHY ILP?
  - One of the goals of compiler and processor designers is to exploit as much ILP as possible.
  - Ordinary programs are written to execute instructions in sequence, one after the other, in the order written by the programmer.
  - ILP allows the compiler and the processor to overlap the execution of multiple instructions, or even to change the order in which instructions are executed.

- 6. ILP TECHNIQUES
  - Micro-architectural techniques that use ILP include:
    - Instruction pipelining
    - Superscalar
    - Out-of-order execution
    - Register renaming
    - Speculative execution
    - Branch prediction

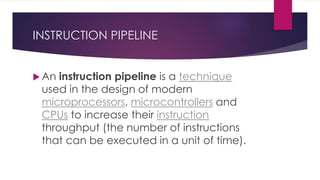

- 7. INSTRUCTION PIPELINE
  - An instruction pipeline is a technique used in the design of modern microprocessors, microcontrollers, and CPUs to increase their instruction throughput (the number of instructions that can be executed in a unit of time).

- 8. PIPELINING
  - The main idea is to divide the processing of a CPU instruction into a series of independent steps ("micro-instructions"), with storage at the end of each step.
  - This allows the CPU's control logic to handle instructions at the processing rate of the slowest step, which is much faster than the time needed to process the whole instruction as a single step.
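The claim that a pipeline runs at the rate of its slowest step can be checked with a back-of-the-envelope model. This sketch assumes an ideal pipeline (no hazards, no pipeline-register delay) and uses made-up stage latencies; `unpipelined_time` and `pipelined_time` are hypothetical helper names.

```python
# Hypothetical stage latencies in picoseconds for IF, ID, EX, MEM, WB.
# The numbers are illustrative only; they do not come from the slides.
STAGE_TIMES_PS = [200, 100, 200, 200, 100]


def unpipelined_time(stage_times: list[int], n: int) -> int:
    """Total time when each instruction finishes every stage before the next starts."""
    return n * sum(stage_times)


def pipelined_time(stage_times: list[int], n: int) -> int:
    """Total time for an ideal pipeline.

    The clock period is set by the slowest stage. The first instruction needs
    one cycle per stage to fill the pipeline; after that, one instruction
    completes every cycle.
    """
    k = len(stage_times)
    return (k + n - 1) * max(stage_times)


for n in (4, 1000):
    slow = unpipelined_time(STAGE_TIMES_PS, n)
    fast = pipelined_time(STAGE_TIMES_PS, n)
    print(f"{n} instructions: {slow} ps unpipelined, {fast} ps pipelined, "
          f"speedup {slow / fast:.2f}")
```

With these illustrative numbers, four instructions take 3200 ps unpipelined versus 1600 ps pipelined, and for long instruction streams the speedup approaches sum/max = 800/200 = 4. Real pipelines fall short of this because of hazards and pipeline-register overhead.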

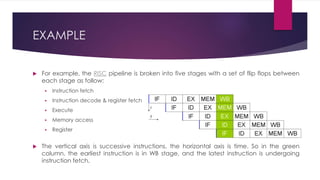

- 9. EXAMPLE
  - For example, the classic RISC pipeline is broken into five stages, with a set of flip-flops between each stage:
    - Instruction fetch (IF)
    - Instruction decode & register fetch (ID)
    - Execute (EX)
    - Memory access (MEM)
    - Register write back (WB)
  - In the pipeline diagram, the vertical axis is successive instructions and the horizontal axis is time. So in the green column, the earliest instruction is in the WB stage, and the latest instruction is undergoing instruction fetch.
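The pipeline diagram described on this slide can be regenerated as a text table. A minimal sketch, assuming an ideal five-stage pipeline with no stalls; `pipeline_chart` is a hypothetical helper.

```python
STAGES = ["IF", "ID", "EX", "MEM", "WB"]


def pipeline_chart(n_instructions: int) -> list[list[str]]:
    """Rows are instructions, columns are clock cycles (ideal pipeline, no stalls)."""
    n_cycles = len(STAGES) + n_instructions - 1
    chart = []
    for i in range(n_instructions):
        row = [""] * n_cycles
        for s, name in enumerate(STAGES):
            row[i + s] = name   # instruction i is in stage s during cycle i + s
        chart.append(row)
    return chart


for i, row in enumerate(pipeline_chart(5)):
    print(f"I{i + 1}: " + " ".join(f"{cell:>3}" for cell in row))
```

Column 5 (index 4) of the chart corresponds to the green column: the first instruction is in WB while the fifth is in IF, and the three in between occupy MEM, EX, and ID.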

- 10. SUPERSCALAR
  - A superscalar CPU architecture implements ILP inside a single processor, which allows higher CPU throughput at the same clock rate.

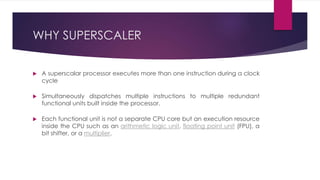

- 11. WHY SUPERSCALAR?
  - A superscalar processor can execute more than one instruction during a clock cycle.
  - It simultaneously dispatches multiple instructions to multiple redundant functional units built inside the processor.
  - Each functional unit is not a separate CPU core but an execution resource inside the CPU, such as an arithmetic logic unit (ALU), a floating-point unit (FPU), a bit shifter, or a multiplier.

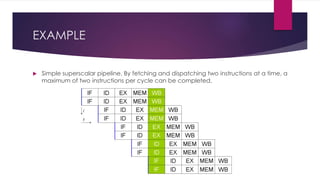

- 12. EXAMPLE
  - A simple superscalar pipeline: by fetching and dispatching two instructions at a time, a maximum of two instructions per cycle can be completed.
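The "two instructions at a time" idea can be modelled by counting issue cycles for a scalar (width 1) and a two-wide in-order pipeline. This is a rough sketch, not a description of any real processor: it assumes unit latency, in-order issue, and enough functional units for any instruction mix; `inorder_issue_cycles` is a hypothetical name.

```python
def inorder_issue_cycles(program: list[tuple[str, tuple[str, ...]]], width: int) -> int:
    """Cycles needed to issue every instruction, assuming unit latency,
    in-order issue of at most `width` instructions per cycle, and enough
    functional units for any mix of instructions (no structural hazards)."""
    ready_at: dict[str, int] = {}   # value -> first cycle in which it can be read
    cycle = 0
    i = 0
    while i < len(program):
        issued = 0
        while i < len(program) and issued < width:
            dest, srcs = program[i]
            if any(ready_at.get(s, 0) > cycle for s in srcs):
                break               # operand not ready: in-order issue stops here
            ready_at[dest] = cycle + 1
            issued += 1
            i += 1
        cycle += 1
    return cycle


program = [
    ("e", ("a", "b")),   # e = a + b
    ("f", ("c", "d")),   # f = c + d
    ("g", ("e", "f")),   # g = e * f
]
for width in (1, 2):
    print(f"width {width}: {inorder_issue_cycles(program, width)} cycles")
```

For the three-operation program from the earlier example, the scalar pipeline needs 3 issue cycles and the two-wide one needs 2, because `e` and `f` can issue together but `g` must wait for both. Four independent instructions would reach the full two instructions per cycle.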

- 13. OUT-OF-ORDER EXECUTION
  - Out-of-order execution (OoOE) is a technique used in most high-performance microprocessors.
  - The key concept is to allow the processor to avoid a class of delays (stalls) that occur when the data needed to perform an operation are unavailable: instead of waiting, the processor executes later instructions whose operands are already available.
  - Most modern CPU designs include support for out-of-order execution.
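The delay that out-of-order execution avoids can be seen in a toy simulation that issues one instruction per cycle. In-order mode may only issue the oldest pending instruction; out-of-order mode issues the oldest instruction whose operands are ready. The 4-cycle load latency, the register names, and the `simulate` function are all hypothetical, and each register is assumed to be written only once.

```python
def simulate(program: list[tuple[str, tuple[str, ...], int]], out_of_order: bool) -> int:
    """program: list of (dest, srcs, latency) in program order.

    Issues at most one instruction per cycle and returns the cycle in which
    the last result becomes available.
    """
    # Values produced by the program are unavailable until their producer issues;
    # program inputs (not in the table) are available from cycle 0.
    ready_at = {dest: float("inf") for dest, _, _ in program}
    pending = list(program)          # not yet issued, in program order
    cycle = 0
    while pending:
        limit = len(pending) if out_of_order else 1   # in-order: oldest only
        for idx in range(limit):
            dest, srcs, latency = pending[idx]
            if all(ready_at.get(s, 0) <= cycle for s in srcs):
                ready_at[dest] = cycle + latency
                del pending[idx]
                break                # at most one issue per cycle
        cycle += 1
    return max(ready_at.values(), default=0)


program = [
    ("r1", ("a",), 4),         # r1 = load a   (hypothetical 4-cycle latency)
    ("r2", ("r1", "b"), 1),    # r2 = r1 + b   (must wait for the load)
    ("r3", ("c", "d"), 1),     # r3 = c + d    (independent)
    ("r4", ("e", "f"), 1),     # r4 = e + f    (independent)
]
print("in-order:", simulate(program, out_of_order=False))
print("out-of-order:", simulate(program, out_of_order=True))
```

Here the in-order machine stalls behind the load and finishes at cycle 7, while the out-of-order machine fills the wait with the two independent additions and finishes at cycle 5.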

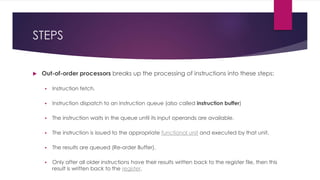

- 14. STEPS
  - Out-of-order processors break up the processing of instructions into these steps:
    - Instruction fetch.
    - Instruction dispatch to an instruction queue (also called an instruction buffer).
    - The instruction waits in the queue until its input operands are available; it may leave the queue before older instructions do.
    - The instruction is issued to the appropriate functional unit and executed by that unit.
    - The results are queued in the reorder buffer.
    - Only after all older instructions have had their results written back to the register file is this result written back to the register file. This final step is called retirement.
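The last two steps (queuing results and writing them back in program order) are the job of a reorder buffer. A minimal sketch with a hypothetical `ReorderBuffer` class; real designs also handle exceptions, branch mispredictions, and a bounded number of entries, which are omitted here.

```python
from collections import deque


class ReorderBuffer:
    """Minimal reorder-buffer sketch.

    Entries are allocated in program order at dispatch, marked done when a
    functional unit finishes (in any order), and retired, i.e. written to the
    architectural register file, strictly in program order.
    """

    def __init__(self) -> None:
        self.entries: deque = deque()           # oldest instruction at the left
        self.register_file: dict[str, int] = {}
        self.retired: list[str] = []            # tags in commit order

    def dispatch(self, tag: str, dest: str) -> None:
        self.entries.append({"tag": tag, "dest": dest, "value": None, "done": False})

    def complete(self, tag: str, value: int) -> None:
        """Record a finished result; this may happen out of program order."""
        for entry in self.entries:
            if entry["tag"] == tag:
                entry["value"] = value
                entry["done"] = True
                return
        raise KeyError(tag)

    def retire(self) -> None:
        """Commit finished entries from the head; stop at the first older
        instruction that is still executing."""
        while self.entries and self.entries[0]["done"]:
            entry = self.entries.popleft()
            self.register_file[entry["dest"]] = entry["value"]
            self.retired.append(entry["tag"])


rob = ReorderBuffer()
rob.dispatch("I1", "r1")   # e.g. a slow load
rob.dispatch("I2", "r3")
rob.dispatch("I3", "r4")
rob.complete("I2", 7)      # younger instructions finish first...
rob.complete("I3", 11)
rob.retire()               # ...but nothing retires while I1 is still running
print(rob.retired)         # []
rob.complete("I1", 42)
rob.retire()
print(rob.retired)         # ['I1', 'I2', 'I3']
```

Holding finished results until every older instruction has retired is what keeps the register file looking as if instructions had executed in program order.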

- 15. OTHER ILP TECHNIQUES
  - Register renaming: a technique used to avoid unnecessary serialization of program operations caused by the reuse of registers by those operations (false write-after-read and write-after-write dependences), in order to enable out-of-order execution.
  - Speculative execution: allows the execution of complete instructions, or parts of instructions, before it is certain whether this execution is required.
  - Branch prediction: used to avoid delays caused by waiting for control dependencies to be resolved. Branch prediction determines whether a conditional branch (jump) in the instruction flow of a program is likely to be taken or not.
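Register renaming can be sketched as giving every write a fresh physical register, so that reusing an architectural register no longer forces ordering. This sketch assumes an unlimited supply of physical registers named `p0`, `p1`, ... (real hardware recycles a finite pool), and leaves registers that are only read under their original names; `rename` is a hypothetical helper.

```python
from itertools import count


def rename(program: list[tuple[str, tuple[str, ...]]]) -> list[tuple[str, tuple[str, ...]]]:
    """program: list of (dest, srcs) using architectural register names.

    Returns the program rewritten so that every write targets a fresh
    physical register. True (read-after-write) dependences are kept;
    write-after-read and write-after-write name dependences disappear.
    """
    fresh = (f"p{i}" for i in count())   # unbounded supply of physical registers
    mapping: dict[str, str] = {}          # architectural -> latest physical register
    renamed = []
    for dest, srcs in program:
        # Read sources through the current mapping first, so each source
        # refers to the value produced by the most recent earlier write.
        new_srcs = tuple(mapping.get(s, s) for s in srcs)
        new_dest = next(fresh)            # every write gets a new register
        mapping[dest] = new_dest
        renamed.append((new_dest, new_srcs))
    return renamed


program = [
    ("r1", ("r2", "r3")),   # I1: r1 = r2 + r3
    ("r4", ("r1", "r5")),   # I2: r4 = r1 * r5  (true dependence on I1)
    ("r1", ("r6", "r7")),   # I3: r1 = r6 + r7  (reuses r1: WAW with I1, WAR with I2)
]
for instr in rename(program):
    print(instr)
```

After renaming, I3 writes `p2` instead of `r1`, so it no longer has to wait for I1 (write-after-write) or I2 (write-after-read); only the true dependence of I2 on I1 remains.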

- 16. THANKS