K-Means Clustering Presentation Slides for Machine Learning Course


KMeans Lecture Slides.


Recommended

Mathematics online: some common algorithms, by Mark Moriarty

Brief overview of some basic algorithms used online and across data-mining, and a word on where to learn them. Prepared specially for UCC Boole Prize 2012.

K means and dbscan, by Yan Xu

The document discusses K-means clustering and DBSCAN, two popular clustering algorithms. K-means clusters data by minimizing the sum of squared distances between points and their cluster centroids. It works by iteratively assigning points to the closest centroid and recalculating the centroids. DBSCAN clusters based on density rather than distance: it identifies dense regions separated by sparse regions and forms clusters without the number of clusters being specified in advance.

Evaluation of programs codes using machine learning, by Vivek Maskara

This document discusses using machine learning to detect copied code submissions. It proposes using unsupervised learning via k-means clustering and dimensionality reduction with principal component analysis (PCA) to group similar codes and reduce complexity from O(n^2) to O(n). Key steps include extracting features from codes, applying PCA to reduce dimensions, running k-means to cluster codes, and detecting copies between clusters. This approach could help identify cheating in online programming contests and evaluate student code submissions.

IOEfficientParalleMatrixMultiplication_present, by Shubham Joshi

This document presents an overview of making matrix multiplication algorithms more I/O efficient. It discusses the parallel disk model and how to incorporate locality into algorithms to minimize I/O steps. Cannon's algorithm for matrix multiplication in a 2D mesh network is described. Loop interchange is discussed as a way to improve cache efficiency when multiplying matrices by exploring different loop orderings. Results are shown for parallel I/O efficient matrix multiplication on different sized matrices, with times ranging from 0.38 to 7 seconds. References on cache-oblivious algorithms and distributed memory matrix multiplication are provided.
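Loop interchange can be illustrated with a minimal Python sketch (the function names `matmul_ijk` and `matmul_ikj` are hypothetical, and matrices are assumed to be row-major lists of lists). Both functions compute the same product; the i-k-j order makes the innermost loop walk along a row of `b` and a row of `c`, which is the contiguous direction in row-major storage and therefore usually the cache-friendlier order in languages such as C. Pure Python lists do not reproduce the cache effect faithfully, so this only shows that interchanging the loops preserves the result.

```python
from typing import List

Matrix = List[List[float]]


def matmul_ijk(a: Matrix, b: Matrix) -> Matrix:
    """Textbook i-j-k order: the inner loop strides down a column of b."""
    n, m, p = len(a), len(b), len(b[0])
    c = [[0.0] * p for _ in range(n)]
    for i in range(n):
        for j in range(p):
            for k in range(m):
                c[i][j] += a[i][k] * b[k][j]
    return c


def matmul_ikj(a: Matrix, b: Matrix) -> Matrix:
    """Interchanged i-k-j order: the inner loop scans row k of b and row i of c."""
    n, m, p = len(a), len(b), len(b[0])
    c = [[0.0] * p for _ in range(n)]
    for i in range(n):
        row_c = c[i]
        for k in range(m):
            a_ik = a[i][k]  # constant for the whole inner loop
            row_b = b[k]
            for j in range(p):
                row_c[j] += a_ik * row_b[j]
    return c


if __name__ == "__main__":
    a = [[1.0, 2.0], [3.0, 4.0]]
    b = [[5.0, 6.0], [7.0, 8.0]]
    print(matmul_ijk(a, b))  # [[19.0, 22.0], [43.0, 50.0]]
    print(matmul_ikj(a, b))  # same result
```

Each output element still accumulates its terms in the same k order, so the two versions agree exactly, even in floating point.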

Knn 160904075605-converted, by rameswara reddy venkat

This document discusses the K-nearest neighbors (KNN) algorithm, an instance-based learning method used for classification. KNN identifies the K training examples nearest to a new data point and assigns the most common class among those K neighbors to the new point. The document covers how KNN calculates distances between data points, how the value of K is chosen, and how features are normalized, and it compares the strengths and weaknesses of the approach. It also briefly discusses clustering, an unsupervised learning technique in which data is grouped based on similarity.
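The KNN procedure described above fits in a few lines. This is a minimal sketch, assuming Euclidean distance, string class labels, and majority voting; `knn_predict` and `min_max_normalize` are hypothetical helper names, not taken from the presentation. If you normalize, the query point must be rescaled with the same per-feature minimum and maximum as the training data.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

Point = Sequence[float]


def min_max_normalize(points: List[Point]) -> List[List[float]]:
    """Rescale every feature to [0, 1] so no single feature dominates the distance."""
    columns = list(zip(*points))
    lows = [min(col) for col in columns]
    highs = [max(col) for col in columns]
    return [
        [(v - lo) / (hi - lo) if hi > lo else 0.0 for v, lo, hi in zip(p, lows, highs)]
        for p in points
    ]


def knn_predict(train: List[Tuple[Point, str]], query: Point, k: int = 3) -> str:
    """Return the most common label among the k training points nearest to query."""
    if not 1 <= k <= len(train):
        raise ValueError("k must be between 1 and the number of training examples")
    # Sort by Euclidean distance and keep the k closest examples.
    neighbors = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    # Counter.most_common orders equal counts by first occurrence, so a tie
    # goes to the label that appears first in distance order.
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    train = [((1.0, 1.0), "a"), ((1.2, 0.8), "a"),
             ((5.0, 5.0), "b"), ((5.5, 4.5), "b"), ((4.8, 5.2), "b")]
    print(knn_predict(train, (1.1, 1.0), k=3))  # a
```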

machine learning - Clustering in R, by Sudhakar Chavan

The document provides an overview of clustering methods and algorithms. It defines clustering as the process of grouping objects that are similar to each other and dissimilar to objects in other groups. It discusses existing clustering methods such as K-means, hierarchical clustering, and density-based clustering, outlines the basic steps of each, and works through an example application of K-means clustering to demonstrate how the algorithm works. The document also discusses evaluating clustering results and the different measures used to assess cluster validity.

CSA 3702 machine learning module 3, by Nandhini S

This document provides an overview of clustering and k-means clustering algorithms. It begins by defining clustering as the process of grouping similar objects together and dissimilar objects separately. K-means clustering is introduced as an algorithm that partitions data points into k clusters by minimizing total intra-cluster variance, iteratively updating cluster means. The k-means algorithm and an example are described in detail. Weaknesses and applications are discussed. Finally, vector quantization and principal component analysis are briefly introduced.

Towards Accurate Multi-person Pose Estimation in the Wild (My summary), by Abdulrahman Kerim

This presentation summarizes a paper on multi-person pose estimation using a two-stage deep learning model. The approach uses a Faster R-CNN model to detect person boxes, then applies a separate ResNet model to each box to predict keypoints. It trains on the COCO dataset and evaluates on COCO test images, achieving state-of-the-art accuracy for multi-person pose estimation. Key aspects covered include the motivation, problem definition, approach using heatmap and offset predictions, model training procedure, evaluation metrics and results.

PR-132: SSD: Single Shot MultiBox Detector, by Jinwon Lee

SSD is a single-shot object detector that processes the entire image at once rather than proposing regions of interest. It uses a VGG16 base network with additional convolutional layers to predict bounding boxes and class probabilities from feature maps at multiple scales simultaneously. SSD achieves state-of-the-art accuracy while running significantly faster than two-stage detectors like Faster R-CNN. It introduces techniques like default boxes, hard negative mining, and data augmentation to address class imbalance and improve results on small objects. On PASCAL VOC 2007, SSD detects objects at 59 FPS with 74.3% mAP, comparable to Faster R-CNN but much faster.
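Default boxes only become training targets after they are matched to ground-truth boxes. The sketch below follows the matching strategy as described in the SSD paper: every ground-truth box claims the default box it overlaps best, and any other default box whose best IoU (Jaccard overlap) exceeds 0.5 is also a positive. Box encoding, scales, aspect ratios, and hard negative mining are left out, boxes are assumed to be `(x_min, y_min, x_max, y_max)` tuples, and the function names are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Jaccard overlap (intersection over union) of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_default_boxes(defaults: List[Box], truths: List[Box],
                        threshold: float = 0.5) -> List[int]:
    """For each default box, return the index of its matched ground truth, or -1 (background)."""
    matches = [-1] * len(defaults)
    if not defaults or not truths:
        return matches
    # Any default box whose best overlap exceeds the threshold becomes a positive.
    for d, box in enumerate(defaults):
        best_t = max(range(len(truths)), key=lambda t: iou(box, truths[t]))
        if iou(box, truths[best_t]) > threshold:
            matches[d] = best_t
    # Every ground-truth box also claims its single best default box,
    # so no object is left without at least one positive match.
    for t, gt in enumerate(truths):
        best_d = max(range(len(defaults)), key=lambda d: iou(defaults[d], gt))
        matches[best_d] = t
    return matches
```

Default boxes left at `-1` are the negatives; with many more negatives than positives, this is the class imbalance that hard negative mining addresses.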

k-Means Clustering.pptx, by NJYOTSHNA

K-means clustering is an unsupervised machine learning algorithm that groups unlabeled data points into a specified number of clusters (k), in which each data point belongs to the cluster with the nearest mean. The algorithm first selects k initial cluster centroids and then assigns each data point to its nearest centroid to form k clusters. It then computes the new centroids as the means of the data points in each cluster and reassigns points based on the new centroids, repeating this process until the centroids no longer change significantly. A suitable number of clusters k can be estimated with the elbow method, which plots the distortion score against k and picks the k at which the curve bends.

Fa18_P2.pptx, by Md Abul Hayat

This document discusses density-based clustering algorithms. It begins by outlining the limitations of k-means clustering, such as its inability to find non-convex clusters or determine the intrinsic number of clusters. It then introduces DBSCAN, a density-based algorithm that can identify clusters of arbitrary shapes and handle noise. The key definitions and algorithm of DBSCAN are described. While effective, DBSCAN relies on parameter selection and cannot handle varying densities well. OPTICS is then presented as an augmentation of DBSCAN that produces a reachability plot to provide insight into the underlying cluster structure and avoid specifying the cluster count.
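The DBSCAN definitions summarized above (core points, border points, noise) translate into a compact O(n²) sketch. It assumes Euclidean distance and the common convention that a point's neighbourhood count includes the point itself. Region queries are brute force here, whereas practical implementations usually rely on a spatial index; the function name is hypothetical.

```python
import math
from typing import List, Optional, Sequence

NOISE = -1


def dbscan(points: List[Sequence[float]], eps: float, min_pts: int) -> List[int]:
    """Return a cluster id (0, 1, ...) for every point, or NOISE (-1).

    A point is a core point if at least min_pts points, itself included, lie
    within distance eps. Clusters grow outward from core points; border points
    (within eps of a core point but not core themselves) join a cluster but do
    not extend it.
    """
    n = len(points)
    labels: List[Optional[int]] = [None] * n  # None = not yet visited

    def region_query(i: int) -> List[int]:
        # Brute-force neighbourhood search, O(n) per query.
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    cluster_id = 0
    for i in range(n):
        if labels[i] is not None:
            continue
        neighbours = region_query(i)
        if len(neighbours) < min_pts:
            labels[i] = NOISE  # may be relabelled later as a border point
            continue
        labels[i] = cluster_id
        frontier = [j for j in neighbours if j != i]
        while frontier:
            j = frontier.pop()
            if labels[j] == NOISE:
                labels[j] = cluster_id  # border point: claimed, not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster_id
            j_neighbours = region_query(j)
            if len(j_neighbours) >= min_pts:  # j is a core point: keep growing
                frontier.extend(j_neighbours)
        cluster_id += 1
    return labels  # every entry is an int at this point
```

Note that the number of clusters is an output, not an input; `eps` and `min_pts` are the parameters whose selection the summary mentions.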

Fast Single-pass K-means Clustering at Oxford, by MapR Technologies

This document describes fast single-pass k-means clustering algorithms. It discusses the rationale for using k-means clustering to enable fast search over large datasets. The document outlines ball k-means and surrogate clustering algorithms that can cluster data in a single pass. It discusses how these algorithms work and their implementation, including using locality sensitive hashing and projection searches to speed up clustering over high-dimensional data. Evaluation results show these algorithms can accurately cluster data much faster than traditional k-means approaches. The applications of these fast clustering algorithms include enabling fast nearest neighbor searches over large customer datasets for applications like marketing and fraud prevention.

Oxford 05-oct-2012, by Ted Dunning

This document describes a fast single-pass k-means clustering algorithm. It begins with an overview and rationale for using k-means clustering to enable fast search through large datasets. It then covers the theory behind clusterable data and k-means failure modes. The document outlines ball k-means and surrogate clustering algorithms. It discusses how to implement fast vector search methods like locality sensitive hashing. The document presents results on synthetic datasets and discusses applications like customer segmentation for a company with 100 million customers.

A Unified Framework for Computer Vision Tasks: (Conditional) Generative Model..., by Sangwoo Mo

This lab seminar introduces three recent works by Ting Chen:

- Pix2seq: A Language Modeling Framework for Object Detection (ICLR'22)

- A Unified Sequence Interface for Vision Tasks (NeurIPS'22)

- A Generalist Framework for Panoptic Segmentation of Images and Videos (submitted to ICLR'23)

Image Compression using K-Means Clustering Method, by Gyanendra Awasthi

Here, the k-means clustering method is used to compress images. The input is the number of colors required in the output image, which equals the number of clusters. The output is the compressed image with that number of colors.
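The compression idea above is colour quantization: cluster the pixel colours with k-means and repaint each pixel with its cluster's mean colour. This minimal sketch assumes the image has already been decoded into a flat list of `(r, g, b)` tuples (file loading and saving are left out), and `quantize_colors` is a hypothetical helper name; the palette size `n_colors` plays the role of k.

```python
import random
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]


def _sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize_colors(pixels: List[RGB], n_colors: int, iters: int = 20,
                    seed: int = 0) -> List[RGB]:
    """Replace every pixel by the nearest of n_colors palette colours found with k-means."""
    distinct = sorted(set(pixels))
    if n_colors >= len(distinct):
        return list(pixels)  # already within the colour budget
    rng = random.Random(seed)
    # Initial centres: n_colors distinct colours taken from the image itself.
    centers = [tuple(float(v) for v in c) for c in rng.sample(distinct, n_colors)]
    for _ in range(iters):
        # Assignment step: group pixels by nearest centre in RGB space.
        groups: List[List[RGB]] = [[] for _ in centers]
        for p in pixels:
            nearest = min(range(len(centers)), key=lambda i: _sq_dist(p, centers[i]))
            groups[nearest].append(p)
        # Update step: move each centre to the mean colour of its group.
        new_centers = [
            tuple(sum(channel) / len(g) for channel in zip(*g)) if g else centers[i]
            for i, g in enumerate(groups)
        ]
        if new_centers == centers:
            break
        centers = new_centers
    # Round centres to valid 8-bit colours and map each pixel to its nearest one.
    palette = [tuple(int(round(v)) for v in c) for c in centers]
    return [min(palette, key=lambda c: _sq_dist(p, c)) for p in pixels]


if __name__ == "__main__":
    pixels = [(250, 0, 0), (240, 10, 0), (0, 0, 250), (10, 0, 240)] * 3
    print(sorted(set(quantize_colors(pixels, 2))))  # [(5, 0, 245), (245, 5, 0)]
```

The saving comes from storing a small palette plus one palette index per pixel instead of a full colour per pixel.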

Selection K in K-means Clustering, by Junghoon Kim

This document summarizes a paper presentation on selecting the optimal number of clusters (K) for k-means clustering. The paper proposes a new evaluation measure to automatically select K without human intuition. It reviews existing methods, analyzes factors influencing K selection, describes the proposed measure, and applies it to real datasets. The method was validated on artificial and benchmark datasets. It aims to suggest multiple K values depending on the required detail level for clustering. However, it is computationally expensive for large datasets and the data used may not reflect real complexity.

K-Means Algorithm, by Carlos Castillo (ChaTo)

Part of the course "Algorithmic Methods of Data Science". Sapienza University of Rome, 2015.

http://aris.me/index.php/data-mining-ds-2015

Sergei Vassilvitskii, Research Scientist, Google at MLconf NYC - 4/15/16, by MLconf

The document discusses new techniques for improving the k-means clustering algorithm. It begins by describing the standard k-means algorithm and Lloyd's method. It then discusses issues with random initialization for k-means. It presents k-means++ as an improvement: instead of always taking the furthest point, k-means++ picks each new center at random with probability proportional to its squared distance from the nearest center already chosen. The document also discusses parallelizing k-means initialization (k-means||) and using nearest neighbor data structures to speed up the assignment of points to clusters, which allows k-means to scale to many clusters. Experimental results show these techniques provide faster and higher-quality clustering than standard k-means.
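The k-means++ seeding step can be sketched as follows, assuming Euclidean distance; `kmeans_pp_seeds` is a hypothetical name, and the returned centres are meant to be handed to an ordinary Lloyd iteration afterwards. Unlike deterministic furthest-point initialization, a single outlier only gets a proportionally larger chance of being picked rather than being picked for certain.

```python
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans_pp_seeds(points: List[Point], k: int, rng: random.Random) -> List[Point]:
    """Choose up to k initial centres with k-means++ (D^2) sampling."""
    if not 1 <= k <= len(points):
        raise ValueError("k must be between 1 and len(points)")
    # First centre: uniformly at random.
    centres = [rng.choice(points)]
    # d2[i]: squared distance from points[i] to its nearest chosen centre.
    d2 = [sq_dist(p, centres[0]) for p in points]
    while len(centres) < k:
        if sum(d2) == 0:
            break  # fewer than k distinct points; no new centre can be chosen
        # Next centre: drawn with probability proportional to d2.
        # Points at distance 0 from a centre have weight 0 and are never drawn.
        nxt = rng.choices(points, weights=d2, k=1)[0]
        centres.append(nxt)
        d2 = [min(old, sq_dist(p, nxt)) for old, p in zip(d2, points)]
    return centres


if __name__ == "__main__":
    pts = [(0.0, 0.0), (0.2, 0.1), (10.0, 0.0), (10.1, 0.3), (0.0, 10.0)]
    print(kmeans_pp_seeds(pts, 3, random.Random(42)))
```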

Training machine learning k means 2017, by Iwan Sofana

K-means clustering is an unsupervised learning algorithm that partitions observations into K clusters by minimizing the within-cluster sum of squares. It works by iteratively assigning observations to the closest cluster centroid and recalculating centroids until convergence. K-means requires the number of clusters K as an input and is sensitive to initialization but is widely used for clustering large datasets due to its simplicity and efficiency.

Unsupervised Learning in Machine Learning, by Pyingkodi Maran

The document discusses various unsupervised learning techniques including clustering algorithms like k-means, k-medoids, hierarchical clustering and density-based clustering. It explains how k-means clustering works by selecting initial random centroids and iteratively reassigning data points to the closest centroid. The elbow method is described as a way to determine the optimal number of clusters k. The document also discusses how k-medoids clustering is more robust to outliers than k-means because it uses actual data points as cluster representatives rather than centroids.
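The outlier-robustness point can be seen by comparing the two cluster representatives directly. This sketch shows only the representative step (not a full k-medoids algorithm such as PAM), assumes Euclidean distance, and uses hypothetical function names.

```python
import math
from typing import List, Tuple

Point = Tuple[float, ...]


def mean_point(cluster: List[Point]) -> Point:
    """k-means representative: the coordinate-wise mean (need not be a data point)."""
    return tuple(sum(coord) / len(cluster) for coord in zip(*cluster))


def medoid(cluster: List[Point]) -> Point:
    """k-medoids representative: the member with the smallest total distance to the others."""
    return min(cluster, key=lambda p: sum(math.dist(p, q) for q in cluster))


if __name__ == "__main__":
    cluster = [(1, 1), (2, 1), (1, 2), (2, 2), (100, 100)]
    print(mean_point(cluster))  # (21.2, 21.2): dragged towards the outlier
    print(medoid(cluster))      # (2, 2): stays inside the dense group
```

One far-away point moves the mean by roughly its distance divided by the cluster size, while the medoid can only move to another existing member.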

Fuzzy c means clustering protocol for wireless sensor networks, by mourya chandra

This document discusses clustering techniques for wireless sensor networks. It describes hierarchical routing protocols that involve clustering sensor nodes into cluster heads and non-cluster heads. It then explains fuzzy c-means clustering, which allows data points to belong to multiple clusters to different degrees, unlike hard clustering methods. Finally, it proposes using fuzzy c-means clustering as an energy-efficient routing protocol for wireless sensor networks due to its ability to handle uncertain or incomplete data.
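The "belongs to multiple clusters to different degrees" idea comes from the standard fuzzy c-means updates, sketched below with the common default fuzzifier m = 2 (an assumption). It covers only the clustering step, nothing about cluster-head selection or routing, and `fuzzy_c_means` is a hypothetical name.

```python
import math
import random
from typing import List, Sequence, Tuple

Point = Sequence[float]


def fuzzy_c_means(points: List[Point], c: int, m: float = 2.0, max_iter: int = 100,
                  tol: float = 1e-6, seed: int = 0
                  ) -> Tuple[List[Tuple[float, ...]], List[List[float]]]:
    """Return (centres, u) where u[j][i] is the degree to which point j belongs to cluster i."""
    if m <= 1.0:
        raise ValueError("fuzzifier m must be greater than 1")
    rng = random.Random(seed)
    n, dim = len(points), len(points[0])
    # Random initial memberships; each point's row sums to 1.
    u = []
    for _ in range(n):
        row = [rng.random() for _ in range(c)]
        total = sum(row)
        u.append([v / total for v in row])
    exponent = 2.0 / (m - 1.0)
    centres: List[Tuple[float, ...]] = []
    for _ in range(max_iter):
        # Centre update: mean of all points weighted by membership ** m.
        centres = []
        for i in range(c):
            w = [u[j][i] ** m for j in range(n)]
            w_sum = sum(w)
            centres.append(tuple(sum(w[j] * points[j][d] for j in range(n)) / w_sum
                                 for d in range(dim)))
        # Membership update: u_ji = 1 / sum_k (d_ji / d_jk) ** (2 / (m - 1)).
        max_change = 0.0
        for j, p in enumerate(points):
            dists = [math.dist(p, ctr) for ctr in centres]
            if min(dists) == 0.0:
                # Point sits exactly on a centre: give it full membership there.
                hits = [1.0 if d == 0.0 else 0.0 for d in dists]
                new_row = [h / sum(hits) for h in hits]
            else:
                new_row = [1.0 / sum((dists[i] / dists[k]) ** exponent for k in range(c))
                           for i in range(c)]
            max_change = max(max_change, max(abs(a - b) for a, b in zip(new_row, u[j])))
            u[j] = new_row
        if max_change < tol:
            break
    return centres, u
```

Each point's memberships always sum to 1, and a point halfway between two centres gets roughly equal membership in both instead of being forced into one cluster.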

Caustic Object Construction Based on Multiple Caustic Patterns, by Budianto Tandianus

Presented at WSCG 2012 (http://www.wscg.cz/) in Plzen, Czech Republic.

Published in Journal of WSCG, Vol. 20, No. 1, pp. 37-46, ISSN 1213-6972, Union Agency, 2012.

Neural nw k means, by Eng. Dr. Dennis N. Mwighusa

k-Means is a rather simple but well-known algorithm for grouping objects (clustering). Again, all objects need to be represented as a set of numerical features. In addition, the user has to specify the number of groups (referred to as k) to identify. Each object can be thought of as a feature vector in an n-dimensional space, where n is the number of features used to describe the objects to cluster. The algorithm then randomly chooses k points in that vector space; these points serve as the initial cluster centers. Afterwards, each object is assigned to the center it is closest to. The distance measure is usually chosen by the user and determined by the learning task. After that, a new center is computed for each cluster by averaging the feature vectors of all objects assigned to it. The process of assigning objects and recomputing centers is repeated until it converges. With squared Euclidean distance, the algorithm can be proven to converge after a finite number of iterations, because neither step ever increases the within-cluster sum of squares and there are only finitely many ways to assign the objects. Several tweaks concerning the distance measure, the choice of initial centers, and the computation of new average centers have been explored, as well as ways to estimate the number of clusters k. Yet the main principle always remains the same. In this project we discuss the k-means clustering algorithm, its implementation, and its application to unsupervised learning.
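The assign-then-average loop described above is Lloyd's algorithm. This minimal sketch assumes squared Euclidean distance (which the convergence argument relies on) and random initial centres drawn from the data; `kmeans` is a hypothetical name. It records the within-cluster sum of squares after every assignment step, so the non-increasing objective behind the finite-convergence claim can be checked directly.

```python
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points: List[Sequence[float]], k: int, max_iter: int = 100,
           seed: int = 0) -> Tuple[List[Point], List[int], List[float]]:
    """Lloyd's algorithm with k random data points as the initial centres.

    Returns (centres, labels, history); history[t] is the within-cluster sum of
    squared distances right after the assignment step of iteration t.
    """
    rng = random.Random(seed)
    centres: List[Point] = [tuple(float(v) for v in p) for p in rng.sample(points, k)]
    labels: List[int] = []
    history: List[float] = []
    for _ in range(max_iter):
        # Assignment step: every point joins its nearest centre.
        new_labels = [min(range(k), key=lambda c: sq_dist(p, centres[c])) for p in points]
        history.append(sum(sq_dist(p, centres[c]) for p, c in zip(points, new_labels)))
        if new_labels == labels:
            break  # no point changed cluster: converged
        labels = new_labels
        # Update step: every centre moves to the mean of its points.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # an empty cluster keeps its previous centre
                centres[c] = tuple(sum(coord) / len(members) for coord in zip(*members))
    return centres, labels, history


if __name__ == "__main__":
    data = [(0.0, 0.0), (0.5, 0.2), (0.1, 0.9), (8.0, 8.0), (8.5, 7.7), (7.9, 8.4)]
    centres, labels, history = kmeans(data, 2, seed=1)
    print(labels, history)  # history never increases
```

Because the result depends on the random initial centres, it is common to run the algorithm several times with different seeds and keep the run with the lowest final objective.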

Adaboost Classifier for Machine Learning Course, by ssuserfece35

Adaboost Classifier Presentation Slides

Build_Machine_Learning_System for Machine Learning Course, by ssuserfece35

A pipeline for building a machine learning system.


More from ssuserfece35


Introduction to Machine Learning Lectures, by ssuserfece35

This lecture discusses ensemble methods in machine learning. It introduces bagging, which trains multiple models on bootstrap samples of the training data (random samples drawn with replacement) and averages their predictions to reduce variance and prevent overfitting. Averaging helps most when the individual models' predictions are weakly correlated. Random forests apply bagging to decision trees while introducing more randomness by selecting a random subset of features to consider at each node, which further decorrelates the trees. The next lecture will cover boosting, which aims to reduce bias by training models sequentially so that each one focuses on examples previously misclassified.
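Bagging itself is short to write down. This sketch assumes one-dimensional regression so that predictions can simply be averaged (classification would use a majority vote instead), and it uses a deliberately high-variance 1-nearest-neighbour regressor as the base learner; both function names are hypothetical, and the random-forest feature subsampling is not shown.

```python
import random
import statistics
from typing import Callable, List, Sequence, Tuple

Example = Tuple[float, float]  # (x, y) pair for one-dimensional regression
Model = Callable[[float], float]


def fit_1nn(sample: Sequence[Example]) -> Model:
    """Hypothetical high-variance base learner: 1-nearest-neighbour regression."""
    data = list(sample)

    def predict(x: float) -> float:
        return min(data, key=lambda e: abs(e[0] - x))[1]

    return predict


def bagging(train: List[Example], fit: Callable[[Sequence[Example]], Model],
            n_models: int = 25, seed: int = 0) -> Model:
    """Fit n_models base learners on bootstrap samples and average their predictions."""
    rng = random.Random(seed)
    # Each bootstrap sample has len(train) examples drawn with replacement.
    models = [fit(rng.choices(train, k=len(train))) for _ in range(n_models)]

    def predict(x: float) -> float:
        return statistics.fmean(model(x) for model in models)

    return predict


if __name__ == "__main__":
    noisy = [(0.0, 1.0), (1.0, 5.0), (2.0, 2.0), (3.0, 8.0), (4.0, 4.0)]
    print(fit_1nn(noisy)(2.2), bagging(noisy, fit_1nn, n_models=50)(2.2))
```

A single 1-NN model jumps to whichever noisy label is closest; the bagged model blends the labels chosen across bootstrap samples, which smooths out that variance.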

5 Infervision_Intro_NV_English Intro Material, by ssuserfece35

Infervision is a company that uses artificial intelligence to help doctors by automatically recognizing symptoms on medical images and recommending treatments. Their goal is to make top medical expertise available to everyone by reducing the burden on doctors and improving access to healthcare in rural areas. They have developed powerful AI models for various diseases by combining deep learning with medical data from partner hospitals in China. Their products help generate diagnostic reports and can screen for diseases to improve efficiency and lower healthcare costs.

Transformer in Medical Imaging A brief review, by ssuserfece35

Transformers show promise for medical imaging tasks by enabling long-range modeling via self-attention. Two papers presented techniques using transformers for robust fovea localization and multi-lesion segmentation. The first used a transformer block to fuse retinal image and vessel features. The second used relation blocks modeling interactions between lesions and between lesions and vessels, improving hard-to-segment lesion detection. Efficient transformers like Swin and deformable sampling were also discussed, enabling long-range modeling with reduced complexity for 3D tasks. Overall, transformers appear well-suited for medical imaging by capturing global context but efficient techniques are needed for 3D applications.

Recently uploaded

Chapter 6. Business and Corporate Strategy Formulation.pdf, by Rommel Regala

This integrative course examines the strategic decision-making processes of top management, focusing on the formulation, implementation, and evaluation of corporate strategies and policies. Students will develop critical thinking and analytical skills by applying strategic frameworks, conducting industry and environmental analyses, and exploring competitive positioning. Key topics include corporate governance, business ethics, competitive advantage, and strategy execution. Through case studies and real-world applications, students will gain a holistic understanding of strategic management and its role in organizational success, preparing them to navigate complex business environments and drive strategic initiatives effectively.

U.S. Department of Education certification, by Mebane Rash

Request to certify compliance with civil rights laws.

Berry_Kanisha_BAS_PB1_202503 (2) (2).pdf, by KanishaBerry

Kanisha Berry's Full Sail University Personal Branding Exploration Assignment.

Knownsense 2025 Finals-U-25 General Quiz.pdf, by Pragya - UEM Kolkata Quiz Club

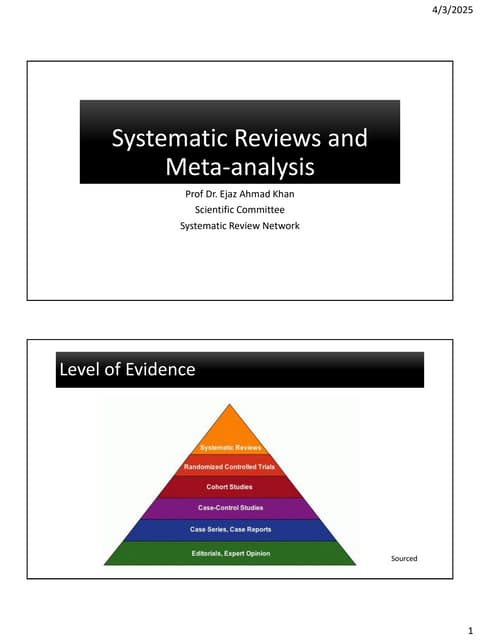

Knownsense is the General Quiz conducted by Pragya the Official Quiz Club of the University of Engineering and Management Kolkata in collaboration with Ecstasia the official cultural fest of the University of Engineering and Management Kolkata Introduction to Systematic Reviews - Prof Ejaz Khan

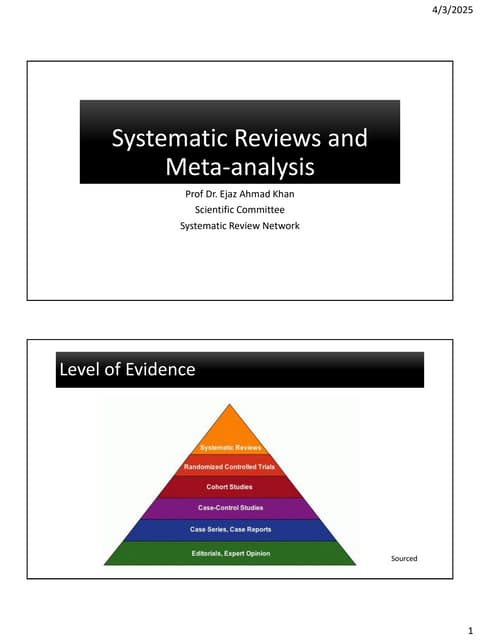

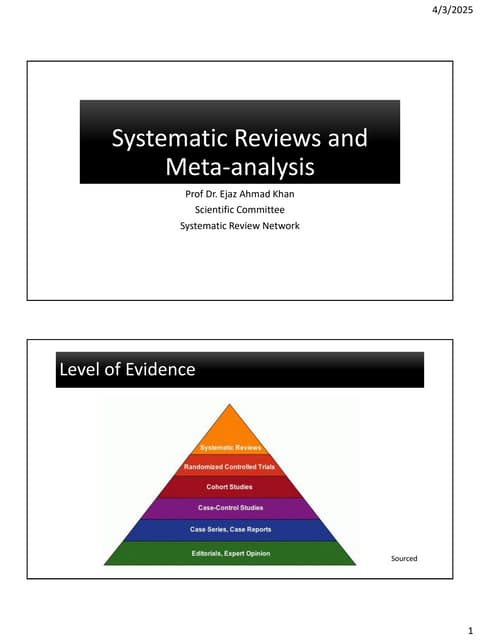

Introduction to Systematic Reviews - Prof Ejaz Khan, by Systematic Reviews Network (SRN)

A systematic review:

- Provides a clear and transparent process

- Facilitates efficient integration of information for rational decision making

- Demonstrates where the effects of health care are consistent and where they vary

- Minimizes bias (systematic errors) and reduces chance effects

- Can be readily updated, as needed

- Supports meta-analysis, which can provide more precise estimates than individual studies

- Allows decisions based on the whole of the evidence, not just part of it

Role of Teacher in the era of Generative AIProf. Neeta Awasthy

?

We need to layer the technology onto existing workflows

Follow the teachers who inspire you because that instills passion Curiosity & Lifelong Learning.

You can benefit from generative AI even when its intelligence is worse-because of the potential for cost and time savings in low-cost-of-error environments.

Bot tutors are already yielding effective results on learning and mastery.

GenAI may increase the digital divide- its gains may accrue disproportionately to those who already have domain expertise.

GenAI can be used for Coding

Complex structures

Make the content

Manage the content

Solutions to complex numerical problems

Lesson plan

Assignment

Quiz

Question bank

Report & summary of content

Creating videos

Title of abstract & summaries and much more like...

Improving Grant Writing

Learning by Teaching Chatbots

GenAI as peer Learner

Data Analysis for Non-Coders

Student Course Preparation

To reduce Plagiarism

Legal Problems for classes

Understanding Student Learning in Real Time

Simulate a poor

Faculty co-pilot chatbot

Generate fresh Assessments

Data Analysis Partner

Summarize student questions in real-time

Assess depth of students' understanding

The skills to foster are Listening

Communicating

Approaching the problem & solving

Making Real Time Decisions

Logic

Refining Memories

Learning Cultures & Syntax (Foreign Language)

Chatbots & Agentic AI can never so what a professor can do.

The need of the hour is to teach Creativity

Emotions

Judgement

Psychology

Communication

Human Emotions

ĪŁĪŁĪŁĪŁThrough various content!

Digital Electronics: Fundamentals of Combinational Circuits

Digital Electronics: Fundamentals of Combinational CircuitsGS Virdi

?

An in-depth exploration of combinational logic circuit design presented by Dr. G.S. Virdi, former Chief Scientist at CSIR-Central Electronics Engineering Research Institute, Pilani, India. This comprehensive lecture provides essential knowledge on the principles, design procedures, and practical applications of combinational circuits in digital systems.

## Key Topics:

- Fundamental differences between combinational and sequential circuits

- Combinational circuit design principles and implementation techniques

- Detailed coverage of critical components including adders, subtractors, BCD adders, and magnitude comparators

- Practical implementations of multiplexers/demultiplexers, encoders/decoders, and parity checkers

- Code converters and BCD to 7-segment decoder designs

- Logic gate interconnections for specific output requirements

This educational resource is ideal for undergraduate and graduate engineering students, electronics professionals, and digital circuit designers seeking to enhance their understanding of combinational logic implementation. Dr. Virdi draws on his extensive research experience to provide clear explanations of complex digital electronics concepts.

Perfect for classroom instruction, self-study, or professional development in electronic engineering, computer engineering, and related technical fields.CLEFT LIP AND PALATE: NURSING MANAGEMENT.pptx

CLEFT LIP AND PALATE: NURSING MANAGEMENT.pptxPRADEEP ABOTHU

?

Cleft lip, also known as cheiloschisis, is a congenital deformity characterized by a split or opening in the upper lip due to the failure of fusion of the maxillary processes. Cleft lip can be unilateral or bilateral and may occur along with cleft palate. Cleft palate, also known as palatoschisis, is a congenital condition characterized by an opening in the roof of the mouth caused by the failure of fusion of the palatine processes. This condition can involve the hard palate, soft palate, or both.How to Install Odoo 18 with Pycharm - Odoo 18 ║▌║▌▀Żs

How to Install Odoo 18 with Pycharm - Odoo 18 ║▌║▌▀ŻsCeline George

?

In this slide weĪ»ll discuss the installation of odoo 18 with pycharm. Odoo 18 is a powerful business management software known for its enhanced features and ability to streamline operations. Built with Python 3.10+ for the backend and PostgreSQL as its database, it provides a reliable and efficient system. Anti-Viral Agents.pptx Medicinal Chemistry III, B Pharm SEM VI

Anti-Viral Agents.pptx Medicinal Chemistry III, B Pharm SEM VISamruddhi Khonde

?

Antiviral agents are crucial in combating viral infections, causing a variety of diseases from mild to life-threatening. Developed through medicinal chemistry, these drugs target viral structures and processes while minimizing harm to host cells. Viruses are classified into DNA and RNA viruses, with each replicating through distinct mechanisms. Treatments for herpesviruses involve nucleoside analogs like acyclovir and valacyclovir, which inhibit the viral DNA polymerase. Influenza is managed with neuraminidase inhibitors like oseltamivir and zanamivir, which prevent the release of new viral particles. HIV is treated with a combination of antiretroviral drugs targeting various stages of the viral life cycle. Hepatitis B and C are treated with different strategies, with nucleoside analogs like lamivudine inhibiting viral replication and direct-acting antivirals targeting the viral RNA polymerase and other key proteins.

Antiviral agents are designed based on their mechanisms of action, with several categories including nucleoside and nucleotide analogs, protease inhibitors, neuraminidase inhibitors, reverse transcriptase inhibitors, and integrase inhibitors. The design of these agents often relies on understanding the structure-activity relationship (SAR), which involves modifying the chemical structure of compounds to enhance efficacy, selectivity, and bioavailability while reducing side effects. Despite their success, challenges such as drug resistance, viral mutation, and the need for long-term therapy remain.General Quiz at Maharaja Agrasen College | Amlan Sarkar | Prelims with Answer...

General Quiz at Maharaja Agrasen College | Amlan Sarkar | Prelims with Answer...Amlan Sarkar

?

Prelims (with answers) + Finals of a general quiz originally conducted on 13th November, 2024.

Part of The Maharaja Quiz - the Annual Quiz Fest of Maharaja Agrasen College, University of Delhi.

Feedback welcome at amlansarkr@gmail.comViceroys of India & Their Tenure ©C Key Events During British Rule

Viceroys of India & Their Tenure ©C Key Events During British RuleDeeptiKumari61

?

The British Raj in India (1857-1947) saw significant events under various Viceroys, shaping the political, economic, and social landscape.

**Early Period (1856-1888):**

Lord Canning (1856-1862) handled the Revolt of 1857, leading to the British Crown taking direct control. Universities were established, and the Indian Councils Act (1861) was passed. Lord Lawrence (1864-1869) led the Bhutan War and established High Courts. Lord Lytton (1876-1880) enforced repressive laws like the Vernacular Press Act (1878) and Arms Act (1878) while waging the Second Afghan War.

**Reforms & Political Awakening (1880-1905):**

Lord Ripon (1880-1884) introduced the Factory Act (1881), Local Self-Government Resolution (1882), and repealed the Vernacular Press Act. Lord Dufferin (1884-1888) oversaw the formation of the Indian National Congress (1885). Lord Lansdowne (1888-1894) passed the Factory Act (1891) and Indian Councils Act (1892). Lord Curzon (1899-1905) introduced educational reforms but faced backlash for the Partition of Bengal (1905).

**Rise of Nationalism (1905-1931):**

Lord Minto II (1905-1910) saw the rise of the Swadeshi Movement and the Muslim League's formation (1906). Lord Hardinge II (1910-1916) annulled BengalĪ»s Partition (1911) and shifted IndiaĪ»s capital to Delhi. Lord Chelmsford (1916-1921) faced the Lucknow Pact (1916), Jallianwala Bagh Massacre (1919), and Non-Cooperation Movement. Lord Reading (1921-1926) dealt with the Chauri Chaura Incident (1922) and the formation of the Swaraj Party. Lord Irwin (1926-1931) saw the Simon Commission protests, the Dandi March, and the Gandhi-Irwin Pact (1931).

**Towards Independence (1931-1947):**

Lord Willingdon (1931-1936) introduced the Government of India Act (1935), laying India's federal framework. Lord Linlithgow (1936-1944) faced WWII-related crises, including the Quit India Movement (1942). Lord Wavell (1944-1947) proposed the Cabinet Mission Plan (1946) and negotiated British withdrawal. Lord Mountbatten (1947-1948) oversaw India's Partition and Independence on August 15, 1947.

**Final Transition:**

C. Rajagopalachari (1948-1950), IndiaĪ»s last Governor-General, facilitated IndiaĪ»s transition into a republic before the position was abolished in 1950.

The British Viceroys played a crucial role in IndiaĪ»s colonial history, introducing both repressive and progressive policies that fueled nationalist movements, ultimately leading to independence.https://www.youtube.com/@DKDEducationGeneral Quiz at ChakraView 2025 | Amlan Sarkar | Ashoka Univeristy | Prelims ...

General Quiz at ChakraView 2025 | Amlan Sarkar | Ashoka Univeristy | Prelims ...Amlan Sarkar

?

Prelims (with answers) + Finals of a general quiz originally conducted on 9th February, 2025.

This was the closing quiz of the 2025 edition of ChakraView - the annual quiz fest of Ashoka University.

Feedback welcome at amlansarkr@gmail.com Different perspectives on dugout canoe heritage of Soomaa.pdf

Different perspectives on dugout canoe heritage of Soomaa.pdfAivar Ruukel

?

Sharing the story of haabjas to 1st-year students of the University of Tartu MA programme "Folkloristics and Applied Heritage Studies" and 1st-year students of the Erasmus Mundus Joint Master programme "Education in Museums & Heritage". ? Marketing is Everything in the Beauty Business! ??? Talent gets you in the ...

? Marketing is Everything in the Beauty Business! ??? Talent gets you in the ...coreylewis960

?

? Marketing is Everything in the Beauty Business! ???

Talent gets you in the gameĪ¬but visibility keeps your chair full.

TodayĪ»s top stylists arenĪ»t just skilledĪ¬theyĪ»re seen.

ThatĪ»s where MyFi Beauty comes in.

? We Help You Get Noticed with Tools That Work:

? Social Media Scheduling & Strategy

We make it easy for you to stay consistent and on-brand across Instagram, Facebook, TikTok, and more.

YouĪ»ll get content prompts, captions, and posting tools that do the work while you do the hair.

?? Your Own Personal Beauty App

Stand out from the crowd with a custom app made just for you. Clients can:

Book appointments

Browse your services

View your gallery

Join your email/text list

Leave reviews & refer friends

?? Offline Marketing Made Easy

We provide digital flyers, QR codes, and branded business cards that connect straight to your appĪ¬turning strangers into loyal clients with just one tap.

? The Result?

You build a strong personal brand that reaches more people, books more clients, and grows with you. Whether youĪ»re just starting out or trying to level upĪ¬MyFi Beauty is your silent partner in success.


- 1. DTS304TC: Machine Learning, Lecture 7: K-Means Clustering. Dr Kang Dang, D-5032, Taicang Campus, Kang.Dang@xjtlu.edu.cn, Tel: 88973341

- 2. Acknowledgements: This set of lecture notes has been adapted from materials originally provided by Christopher M. Bishop and Xin Chen.

- 3. Overview: K-means clustering; application of K-means clustering in image segmentation.

- 4. Q&A: What is clustering? What is one application of clustering?

- 5. Old Faithful data set (scatter plot; axes: duration of eruption in minutes and time between eruptions in minutes).

- 6. K-means algorithm. Goal: represent a data set in terms of K clusters, each of which is summarized by a prototype. Initialize the prototypes, then iterate between two phases. E-step (cluster assignment): assign each data point to the nearest prototype. M-step (prototype update): update each prototype to be the mean of its cluster. The simplest version is based on Euclidean distance.
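The two phases above can be sketched in plain Python as follows. This is an illustrative sketch, not the course's reference code: `kmeans` and `squared_distance` are hypothetical helper names, and initializing the prototypes by sampling K distinct data points at random is an assumption (the slide only says "initialize prototypes").

```python
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two points of equal dimension."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def kmeans(points, k, max_iters=100, seed=0):
    """Plain K-means on a list of coordinate tuples; returns (prototypes, labels).

    Assumption: prototypes are initialized by sampling k distinct data points.
    """
    rng = random.Random(seed)
    prototypes = [list(p) for p in rng.sample(points, k)]
    labels = None
    for _ in range(max_iters):
        # E-step: assign every point to its nearest prototype (Euclidean distance).
        new_labels = [
            min(range(k), key=lambda j: squared_distance(x, prototypes[j]))
            for x in points
        ]
        if new_labels == labels:
            break  # assignments unchanged, so the prototypes would not move either
        labels = new_labels
        # M-step: move each prototype to the mean of the points assigned to it.
        for j in range(k):
            members = [x for x, lab in zip(points, labels) if lab == j]
            if members:  # an empty cluster keeps its previous prototype
                prototypes[j] = [sum(c) / len(members) for c in zip(*members)]
    return prototypes, labels


# Made-up data: two well-separated groups of three points each.
data = [(1.0, 1.0), (1.5, 2.0), (1.2, 1.4), (8.0, 8.0), (8.5, 9.0), (9.0, 8.2)]
centers, labels = kmeans(data, k=2)
```

On this toy data the first three points and the last three should end up in different clusters. For real work, a library implementation such as scikit-learn's `sklearn.cluster.KMeans` is the usual choice.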

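- 16. Responsibilities: binary indicators $r_{nk} \in \{0, 1\}$ assign data points to clusters such that $\sum_{k} r_{nk} = 1$, i.e., each data point belongs to exactly one cluster. Example: 5 data points and 3 clusters (n: data point index; k: cluster index).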
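As a small illustration, the sketch below builds the N x K responsibility matrix from hard cluster labels. The labels are hypothetical (the slide's own example did not survive extraction), and `responsibilities` is an illustrative helper name.

```python
def responsibilities(labels, k):
    """Turn hard cluster labels into the N x K binary matrix r[n][k]."""
    return [[1 if label == j else 0 for j in range(k)] for label in labels]


# Hypothetical assignments of 5 data points to 3 clusters.
labels = [0, 2, 1, 0, 2]
r = responsibilities(labels, k=3)

# Each row sums to 1: every point belongs to exactly one cluster.
assert all(sum(row) == 1 for row in r)

# Summing a column counts the points assigned to that cluster.
cluster_sizes = [sum(row[j] for row in r) for j in range(3)]
print(cluster_sizes)  # [2, 1, 2]
```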

- 17. Q&A: We know $\sum_{k} r_{nk} = 1$. What does $\sum_{n} r_{nk}$ mean?

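- 19. Minimizing the cost function $J$. E-step: minimize $J$ w.r.t. $r_{nk}$, which assigns each data point to the nearest prototype. M-step: minimize $J$ w.r.t. $\boldsymbol{\mu}_k$, which gives $\boldsymbol{\mu}_k = \frac{\sum_n r_{nk}\mathbf{x}_n}{\sum_n r_{nk}}$, i.e., each prototype is set to the mean of the points in that cluster.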
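A short derivation of both updates, assuming the standard K-means cost function in Bishop's PRML notation (the cost-function slide itself is missing from the extracted text):

```latex
J = \sum_{n=1}^{N} \sum_{k=1}^{K} r_{nk} \,\lVert \mathbf{x}_n - \boldsymbol{\mu}_k \rVert^2

% E-step: with the prototypes fixed, each data point n can be handled independently
r_{nk} =
\begin{cases}
1 & \text{if } k = \arg\min_{j} \lVert \mathbf{x}_n - \boldsymbol{\mu}_j \rVert^2 \\
0 & \text{otherwise}
\end{cases}

% M-step: with r_{nk} fixed, J is quadratic in \boldsymbol{\mu}_k; set its gradient to zero
\frac{\partial J}{\partial \boldsymbol{\mu}_k} = -2 \sum_{n=1}^{N} r_{nk} \left( \mathbf{x}_n - \boldsymbol{\mu}_k \right) = 0
\quad\Longrightarrow\quad
\boldsymbol{\mu}_k = \frac{\sum_{n} r_{nk} \mathbf{x}_n}{\sum_{n} r_{nk}}
```

The denominator $\sum_n r_{nk}$ is the number of points assigned to cluster $k$, so the update is only defined for non-empty clusters.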

- 20-23. Convergence of the K-means algorithm. Q: Will the K-means objective oscillate? A: No. No iteration of the K-means algorithm increases the objective: the E-step cannot increase any data point's squared distance to its assigned prototype, and the M-step cannot increase any cluster's sum of squared distances, because the cluster mean minimizes it. The objective is also bounded below: as a sum of squared distances, its smallest possible value is 0. Therefore the K-means algorithm converges given a sufficiently large number of iterations, although only to a local minimum, not necessarily the global one.
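The convergence argument can be written compactly as below. The notation ($J$, $r$, $\boldsymbol{\mu}$, iteration index $t$) follows Bishop's PRML and is an assumption, since the original symbols did not survive extraction.

```latex
% t indexes iterations; J(r, \mu) = \sum_n \sum_k r_{nk} \lVert \mathbf{x}_n - \boldsymbol{\mu}_k \rVert^2
\begin{aligned}
J\big(r^{(t+1)}, \boldsymbol{\mu}^{(t)}\big) &\le J\big(r^{(t)}, \boldsymbol{\mu}^{(t)}\big)
  && \text{E-step: each point moves to a prototype at least as close} \\
J\big(r^{(t+1)}, \boldsymbol{\mu}^{(t+1)}\big) &\le J\big(r^{(t+1)}, \boldsymbol{\mu}^{(t)}\big)
  && \text{M-step: the mean minimizes each cluster's sum of squares} \\
J &\ge 0
  && \text{sum of squared distances}
\end{aligned}
```

A non-increasing sequence that is bounded below converges. In addition, after each M-step $J$ depends only on the assignment, and there are at most $K^N$ possible assignments, so $J$ stops decreasing after finitely many iterations.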

- 24. How to choose K? Plot the within-cluster sum of squares (WCSS) against the number of clusters (k). The WCSS decreases as k increases, but the rate of decrease sharply changes at a certain point, creating an "elbow" in the graph.
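A rough sketch of computing the WCSS curve for an elbow plot. It reimplements plain K-means so that it runs on its own; the data are made up (three well-separated groups), `kmeans_wcss` is a hypothetical name, and initializing by sampling k data points at random is an assumption.

```python
import random


def sq_dist(a, b):
    """Squared Euclidean distance."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def kmeans_wcss(points, k, max_iters=100, seed=0):
    """Run plain K-means once and return its within-cluster sum of squares."""
    rng = random.Random(seed)
    centers = [list(p) for p in rng.sample(points, k)]
    labels = []
    for _ in range(max_iters):
        # E-step: nearest center for every point.
        labels = [min(range(k), key=lambda j: sq_dist(x, centers[j])) for x in points]
        # M-step: recompute centers; an empty cluster keeps its old center.
        new_centers = []
        for j in range(k):
            members = [x for x, lab in zip(points, labels) if lab == j]
            new_centers.append(
                [sum(c) / len(members) for c in zip(*members)] if members else centers[j]
            )
        if new_centers == centers:
            break
        centers = new_centers
    return sum(sq_dist(x, centers[lab]) for x, lab in zip(points, labels))


data = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1),
        (5.0, 5.0), (5.1, 4.9), (4.8, 5.2),
        (9.0, 1.0), (9.2, 1.1), (8.8, 0.9)]
wcss = [kmeans_wcss(data, k) for k in range(1, 7)]
for k, w in enumerate(wcss, start=1):
    print(f"k={k}: WCSS={w:.3f}")
```

Plotting `wcss` against k should typically show a sharp bend around k = 3 for this data. Each value comes from a single random initialization, so a curve from real data can be bumpy; combining this with several restarts per k smooths it out.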

- 25. Application of K-Means Algorithm to Image Segmentation: First, we convert all the image pixels to the HSV color space. We then cluster the pixels based on their HSV color values. Finally, we replace each pixel with the color of its corresponding cluster center.

- 26. Application of K-Means Algorithm to Image Segmentation: nice artistic effects!

- 27-28. Limitations of K-means: sensitivity to initial centroids. The final result of K-means clustering depends on the initial random selection of cluster centers, so different runs can give different results, and some initializations lead to poor clusterings. Q&A: How can the bad-initialization issue be handled? Run K-means several times with different random initializations and keep the clustering with the lowest objective, i.e., the lowest within-cluster sum of squares (WCSS).
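A sketch of the restart strategy described above: run K-means from several seeded random initializations and keep the run with the lowest WCSS. The helper names `kmeans_once` and `kmeans_restarts`, the toy data, and the random-sample initialization are illustrative assumptions.

```python
import random


def sq_dist(a, b):
    """Squared Euclidean distance."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def kmeans_once(points, k, seed, iters=100):
    """One K-means run from a random initialization; returns (wcss, centers, labels)."""
    rng = random.Random(seed)
    centers = [list(p) for p in rng.sample(points, k)]
    labels = []
    for _ in range(iters):
        labels = [min(range(k), key=lambda j: sq_dist(x, centers[j])) for x in points]
        for j in range(k):
            members = [x for x, lab in zip(points, labels) if lab == j]
            if members:
                centers[j] = [sum(c) / len(members) for c in zip(*members)]
    wcss = sum(sq_dist(x, centers[lab]) for x, lab in zip(points, labels))
    return wcss, centers, labels


def kmeans_restarts(points, k, n_init=10):
    """Run K-means n_init times (seeds 0..n_init-1) and keep the lowest-WCSS run."""
    runs = [kmeans_once(points, k, seed=s) for s in range(n_init)]
    return min(runs, key=lambda run: run[0])


data = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1),
        (5.0, 5.0), (5.1, 4.9), (4.8, 5.2),
        (9.0, 1.0), (9.2, 1.1), (8.8, 0.9)]
best_wcss, best_centers, best_labels = kmeans_restarts(data, k=3, n_init=10)
```

scikit-learn's `KMeans` exposes the same idea through its `n_init` parameter, keeping the run with the lowest inertia (its name for WCSS).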

- 29. Limitations of K-means: assumption of spherical clusters with equal variance. K-means implicitly assumes that clusters are spherical (isotropic, i.e., with the same variance in every direction) and of similar size (variance) and density. It also has difficulty with non-convex cluster shapes.

- 30. Limitations of K-means, continued: it is not clear how to choose the value of K; the algorithm is sensitive to outliers; and it scales poorly to high-dimensional data. Gaussian mixture models (GMMs) can resolve some, but not all, of these issues.
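Sensitivity to outliers follows directly from the M-step, which sets each prototype to the mean of its cluster. A minimal illustration with made-up 1-D values (`cluster_mean` is a hypothetical helper name):

```python
def cluster_mean(values):
    """M-step update for a single 1-D cluster: the arithmetic mean."""
    return sum(values) / len(values)


inliers = [1.0, 2.0, 3.0]
print(cluster_mean(inliers))            # 2.0
print(cluster_mean(inliers + [100.0]))  # 26.5: one outlier drags the prototype far from the inliers
```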

Editor's Notes

- #6: Our goal is to organize a large set of data into K groups and to summarize each group with one representative point, its "prototype." First, we choose some starting prototypes. Then we repeat two steps. In the E-step, we put each data point into the group with the closest prototype. In the M-step, we compute the average of all the points in each group and make that average the new prototype. We repeat until the group assignments stop changing. We measure closeness with the simplest method, the straight-line distance between points, called the Euclidean distance.

- #24: Plot the within-cluster sum of squares (WCSS) against the number of clusters (k). The WCSS decreases as k increases, but the rate of decrease sharply changes at a certain point, creating an "elbow" in the graph. The elbow generally represents a point where adding more clusters doesn't explain much more variance in the data. Choose k at this point.