Lang Chain (Kitworks Team Study, presentation materials by 윤정빈)


Kitworks Team Study


Recommended

LLMOps for Your Data: Best Practices to Ensure Safety, Quality, and Cost

Aggregage

Join Shreya Rajpal, CEO of Guardrails AI, and Travis Addair, CTO of Predibase, in this exclusive webinar to learn all about leveraging the part of AI that constitutes your IP (your data) to build a defensible AI strategy for the future!

ChatGPT_Prompts.pptx

Chakrit Phain

ChatGPT is a powerful language model developed by OpenAI. It is designed to generate human-like text based on given prompts. As a prompt engineer, you can use ChatGPT to create engaging conversations, provide information, answer questions, and assist users. It's a versatile tool for natural language processing tasks, enabling more interactive and intelligent exchanges.

Build an LLM-powered application using LangChain.pdf

AnastasiaSteele10

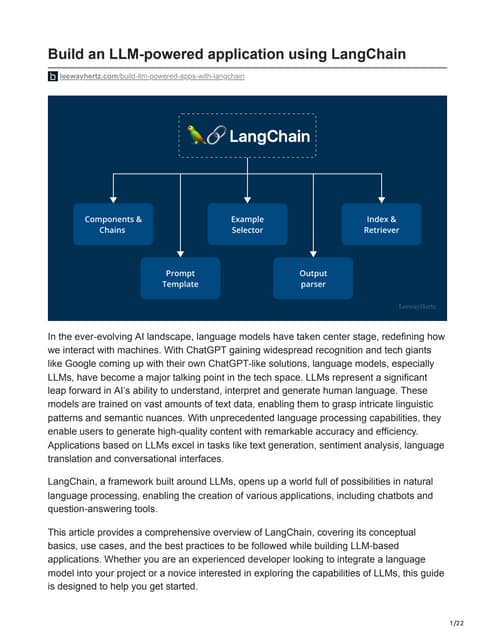

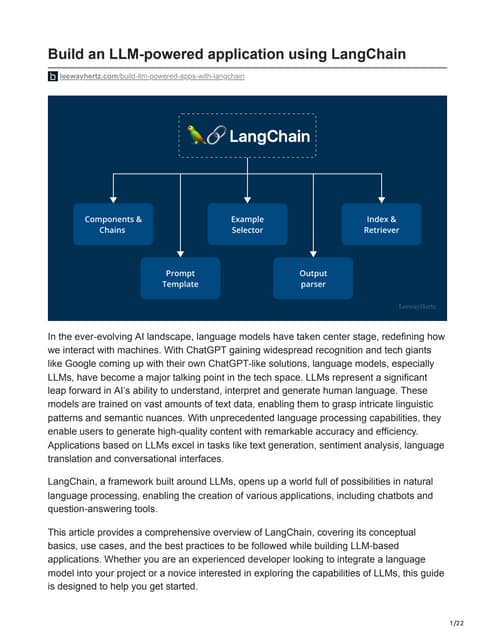

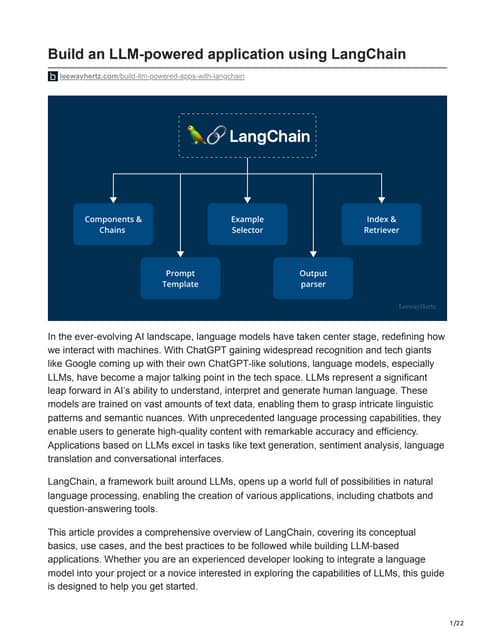

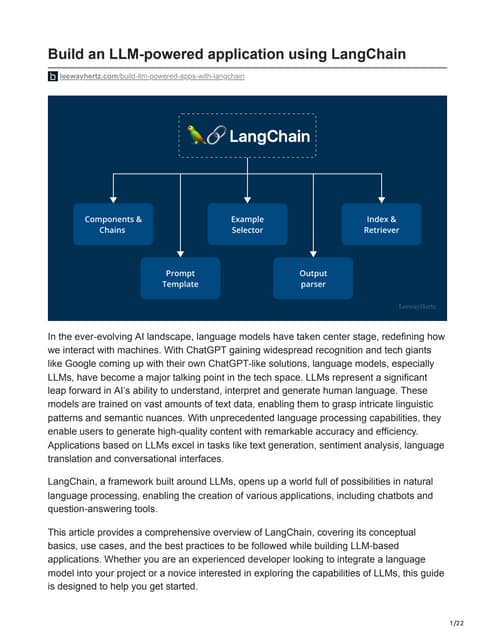

LangChain is an advanced framework that allows developers to create language model-powered applications. It provides a set of tools, components, and interfaces that make building LLM-based applications easier. With LangChain, managing interactions with language models, chaining together various components, and integrating resources like APIs and databases is a breeze. The platform includes a set of APIs that can be integrated into applications, allowing developers to add language processing capabilities without having to start from scratch.
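The "chaining" idea in this description can be illustrated in plain Python, without LangChain itself. This is a minimal sketch that assumes each component is just a function from string to string, run in sequence: a prompt template, a model call, and an output parser. `make_chain` and `fake_llm` are hypothetical names. `fake_llm` stands in for a real model and is not part of any LangChain API.

```python
from typing import Callable, List

Step = Callable[[str], str]


def make_chain(steps: List[Step]) -> Step:
    """Compose steps left to right: each step's output is the next step's input."""
    def run(text: str) -> str:
        for step in steps:
            text = step(text)
        return text
    return run


def prompt_template(question: str) -> str:
    # Wrap the raw user question in an instruction for the model.
    return f"Answer concisely.\nQuestion: {question}\nAnswer:"


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a model call: echoes the question in upper case,
    # padded with whitespace the way raw model output sometimes is.
    question = prompt.split("Question: ", 1)[1].split("\n", 1)[0]
    return f"  {question.upper()}  "


def strip_parser(output: str) -> str:
    # Output parser: clean up the raw model text.
    return output.strip()


chain = make_chain([prompt_template, fake_llm, strip_parser])
print(chain("what is langchain?"))  # WHAT IS LANGCHAIN?
```

Because each step is a plain callable, any one of them (for example, the model) can be swapped out without touching the others.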

Introducing MlFlow: An Open Source Platform for the Machine Learning Lifecycl...

DataWorks Summit

Specialized tools for machine learning development and model governance are becoming essential. MLflow is an open source platform for managing the machine learning lifecycle. Just by adding a few lines of code in the function or script that trains their model, data scientists can log parameters, metrics, artifacts (plots, miscellaneous files, etc.) and a deployable packaging of the ML model. Every time that function or script is run, the results will be logged automatically as a byproduct of those lines of code being added, even if the party doing the training run makes no special effort to record the results. MLflow application programming interfaces (APIs) are available for the Python, R and Java programming languages, and MLflow sports a language-agnostic REST API as well. Over a relatively short time period, MLflow has garnered more than 3,300 stars on GitHub, almost 500,000 monthly downloads and 80 contributors from more than 40 companies. Most significantly, more than 200 companies are now using MLflow. We will demo the MLflow Tracking, Project and Model components with Azure Machine Learning (AML) Services and show you how easy it is to get started with MLflow on-prem or in the cloud.
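The idea of logging parameters and metrics as a byproduct of a few added lines can be sketched with the standard library alone. This is not MLflow's API; MLflow itself provides calls such as `mlflow.log_param` and `mlflow.log_metric`. The `tracked` decorator and the in-memory `RUNS` store below are hypothetical, and the toy `train` function only stands in for real training.

```python
import functools
import time
from typing import Any, Callable, Dict, List

# Hypothetical in-memory run store; a real tracker would persist runs.
RUNS: List[Dict[str, Any]] = []


def tracked(train_fn: Callable[..., Dict[str, float]]) -> Callable[..., Dict[str, float]]:
    """Record keyword arguments as params and the returned dict as metrics."""
    @functools.wraps(train_fn)
    def wrapper(**params: Any) -> Dict[str, float]:
        start = time.perf_counter()
        metrics = train_fn(**params)
        RUNS.append({
            "run": train_fn.__name__,
            "params": dict(params),
            "metrics": dict(metrics),
            "seconds": time.perf_counter() - start,
        })
        return metrics
    return wrapper


@tracked
def train(learning_rate: float, epochs: int) -> Dict[str, float]:
    # Toy "training": the loss shrinks as learning_rate * epochs grows.
    return {"loss": 1.0 / (1.0 + learning_rate * epochs)}


train(learning_rate=0.5, epochs=4)
train(learning_rate=0.1, epochs=10)
for run in RUNS:
    print(run["params"], run["metrics"])
```

The wrapper accepts only keyword arguments, so that every parameter is recorded under a name.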

Conversational AI – Beyond the chatbot hype

NUS-ISS

This document provides an overview of conversational AI and discusses emerging technologies like general and narrow AI. It begins by discussing the evolution of chatbots and how most current AI chatbots work using data correlation rather than causation. It then distinguishes between narrow AI, which can perform repetitive tasks with limited abilities, and general AI, which does not currently exist but would have unlimited abilities and the ability to self-program. The document outlines some examples of how companies are using machine learning and AI to improve business operations. It emphasizes the importance of transparency, explainability, security and predictability for practical narrow AI applications. Finally, it discusses the importance of digital identity and outlines statistics on the potential impacts of good digital identity systems.

Large Language Models Bootcamp

Data Science Dojo

This document provides a 50-hour roadmap for building large language model (LLM) applications. It introduces key concepts like text-based and image-based generative AI models, encoder-decoder models, attention mechanisms, and transformers. It then covers topics like intro to image generation, generative AI applications, embeddings, attention mechanisms, transformers, vector databases, semantic search, prompt engineering, fine-tuning foundation models, orchestration frameworks, autonomous agents, bias and fairness, and recommended LLM application projects. The document recommends several hands-on exercises and lists upcoming bootcamp dates and locations for learning to build LLM applications.

Use Case Patterns for LLM Applications (1).pdf

M Waleed Kadous

What are the "use case patterns" for deploying LLMs into production? Understanding these will allow you to spot "LLM-shaped" problems in your own industry.

A comprehensive guide to prompt engineering.pdf

StephenAmell4

Prompt engineering is the practice of designing and refining specific text prompts to guide transformer-based language models, such as Large Language Models (LLMs), in generating desired outputs. It involves crafting clear and specific instructions and allowing the model sufficient time to process information.
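The two practices named here can be made concrete with a small prompt builder: clear, specific instructions, and giving the model room to reason. This is a minimal sketch. `build_prompt` and its parameters are hypothetical and not tied to any particular model API.

```python
def build_prompt(task: str, context: str, output_format: str,
                 step_by_step: bool = True) -> str:
    """Assemble a prompt with an explicit task, delimited context, and output format."""
    parts = [
        f"Task: {task}",
        # Delimiters make it unambiguous where the input text starts and ends.
        'Context (between triple quotes):\n"""\n' + context + '\n"""',
        f"Output format: {output_format}",
    ]
    if step_by_step:
        # Asking for intermediate reasoning gives the model "time to think".
        parts.append("Work through the problem step by step before giving the final answer.")
    return "\n\n".join(parts)


print(build_prompt(
    task="Summarize the text in one sentence.",
    context="LangChain is a framework for building LLM-powered applications.",
    output_format="A single sentence of plain text.",
))
```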

Google Cloud GenAI Overview_071223.pptx

VishPothapu

This document provides an overview of Google's generative AI offerings. It discusses large language models (LLMs) and what is possible with generative AI on Google Cloud, including Google's offerings like Vertex AI, Generative AI App Builder, and Foundation Models. It also discusses how enterprises can access, customize and deploy large models through Google Cloud to build innovative applications.

Episode 2: The LLM / GPT / AI Prompt / Data Engineer Roadmap

Anant Corporation

In this episode we'll discuss the different flavors of prompt engineering in the LLM/GPT space. Depending on your skill level, you should be able to pick up at any of the following levels:

Leveling up with GPT

1: Use ChatGPT / GPT Powered Apps

2: Become a Prompt Engineer on ChatGPT/GPT

3: Use GPT API with NoCode Automation, App Builders

4: Create Workflows to Automate Tasks with NoCode

5: Use GPT API with Code, make your own APIs

6: Create Workflows to Automate Tasks with Code

7: Use GPT API with your Data / a Framework

8: Use GPT API with your Data / a Framework to Make your own APIs

9: Create Workflows to Automate Tasks with your Data /a Framework

10: Use Another LLM API other than GPT (Cohere, HuggingFace)

11: Use open source LLM models on your computer

12: Finetune / Build your own models

Series: Using AI / ChatGPT at Work - GPT Automation

Are you a small business owner or web developer interested in leveraging the power of GPT (Generative Pretrained Transformer) technology to enhance your business processes?

If so, join us for a series of events focused on using GPT in business. Whether you're a small business owner or a web developer, you'll learn how to leverage GPT to improve your workflow and provide better services to your customers.

Prompting is an art / Sztuka promptowania

Michal Jaskolski

The document discusses advances in large language models from GPT-1 to the potential capabilities of GPT-4, including its ability to simulate human behavior, demonstrate sparks of artificial general intelligence, and generate virtual identities. It also provides tips on how to effectively prompt ChatGPT through techniques like prompt engineering, giving context and examples, and different response formats.
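Giving the model context and examples is often done with a few-shot prompt. This is a minimal sketch that assumes a simple `Input:`/`Output:` layout. That layout is a hypothetical format, not one prescribed by the deck.

```python
from typing import List, Tuple


def few_shot_prompt(instruction: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Build a few-shot prompt: instruction, worked examples, then the new input."""
    lines = [instruction, ""]
    for inp, out in examples:
        lines += [f"Input: {inp}", f"Output: {out}", ""]
    # End with an open "Output:" so the model continues in the demonstrated format.
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


prompt = few_shot_prompt(
    "Classify the sentiment as positive or negative.",
    [("I loved this talk.", "positive"), ("The slides were unreadable.", "negative")],
    "Great examples throughout.",
)
print(prompt)
```

The examples double as a specification of the response format, which is often more reliable than describing the format in words alone.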

A brief primer on OpenAI's GPT-3

Ishan Jain

The GPT-3 model architecture is a transformer-based neural network that has been fed 45TB of text data. It is non-deterministic, in the sense that multiple runs on the same input can return different responses. It was trained on massive datasets drawn from across the web, containing about 500B tokens, and it has a humongous 175 billion parameters. That is more than 100x the 1.5 billion parameters of GPT-2, which was previously considered state of the art.
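Non-determinism of this kind usually comes from sampling the next token rather than always taking the most likely one. This is a toy sketch with made-up logits, not GPT-3's actual decoder. At temperature 0 the choice is greedy and repeatable; at a positive temperature the token is sampled from a softmax distribution. `sample_next_token` and `toy_logits` are hypothetical.

```python
import math
import random
from typing import Dict, Optional


def sample_next_token(logits: Dict[str, float], temperature: float,
                      rng: Optional[random.Random] = None) -> str:
    """Pick a next token from logits; temperature 0 means greedy (deterministic)."""
    if temperature == 0:
        return max(logits, key=logits.get)  # always the highest-scoring token
    if rng is None:
        rng = random.Random()
    # Softmax with temperature; subtracting the max logit keeps exp() stable.
    scaled = {t: v / temperature for t, v in logits.items()}
    top = max(scaled.values())
    weights = {t: math.exp(v - top) for t, v in scaled.items()}
    return rng.choices(list(weights), weights=list(weights.values()), k=1)[0]


toy_logits = {"cat": 2.0, "dog": 1.5, "fish": 0.5}  # made-up scores
print([sample_next_token(toy_logits, 0) for _ in range(3)])  # ['cat', 'cat', 'cat']
rng = random.Random(0)
print([sample_next_token(toy_logits, 1.0, rng) for _ in range(5)])  # may mix tokens
```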

What Is GPT-3 And Why Is It Revolutionizing Artificial Intelligence?

Bernard Marr

GPT-3 is an AI tool created by OpenAI that can generate text in human-like ways. It has been trained on vast amounts of text from the internet. GPT-3 can answer questions, summarize text, translate languages, and generate computer code. However, it has limitations: its output can become gibberish for complex tasks, and it operates as a black box system. While impressive, GPT-3 is just an early glimpse of what advanced AI may be able to accomplish.

Unlocking the Power of ChatGPT and AI in Testing - NextSteps, presented by Ap...

Applitools

The document discusses AI tools for software testing such as ChatGPT, GitHub Copilot, and Applitools Visual AI. It provides an overview of each tool and how they can help with testing tasks like test automation, debugging, and handling dynamic content. The document also covers potential challenges with AI, like data privacy issues and tools having superficial knowledge. It emphasizes that AI should be used to assist humans rather than replace them, and that finding the right balance and application of tools is important.

Amazon Bedrock in Action - presentation of the Bedrock's capabilities

KrzysztofKkol1

This presentation delves into the capabilities of Amazon Bedrock, an AWS service designed to empower the use of Generative AI. Multiple use cases and patterns, like summarization, information extraction, or question answering, will be presented during the session, along with the implementation methods and some demos.

CrowdCast Monthly: Operationalizing Intelligence

CrowdStrike

In today’s threat environment, adversaries are constantly profiling and attacking your corporate infrastructure to access and collect your intellectual property, proprietary data, and trade secrets. Threat Intelligence is now more important than ever for organizations that want to proactively defend against advanced threat actors.

While many organizations today are collecting massive amounts of threat intelligence, are they able to translate that information into an effective defense strategy?

View the slides now to learn about threat intelligence for operational purposes, including real-world demonstrations of how to consume intelligence and integrate it with existing security infrastructure.

Learn how to prioritize response by differentiating between commodity and targeted attacks, and how to develop a defense that responds to the specific methods used by advanced attackers.

Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks.pdf

Po-Chuan Chen

The document describes the RAG (Retrieval-Augmented Generation) model for knowledge-intensive NLP tasks. RAG combines a pre-trained language generator (BART) with a dense passage retriever (DPR) to retrieve and incorporate relevant knowledge from Wikipedia. RAG achieves state-of-the-art results on open-domain question answering, abstractive question answering, and fact verification by leveraging both parametric knowledge from the generator and non-parametric knowledge retrieved from Wikipedia. The retrieved knowledge can also be updated without retraining the model.
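The retrieve-then-generate flow can be sketched in a few lines. This is a simplification, not the RAG model itself, and it swaps in two simpler techniques. First, the retriever uses bag-of-words cosine similarity instead of DPR's dense embeddings. Second, instead of conditioning BART on each retrieved passage, it concatenates the top passages into a prompt that any generator could consume. All names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> Counter:
    # Lower-cased word counts (a crude stand-in for a learned embedding).
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, passages: List[str], k: int = 2) -> List[str]:
    """Return the k passages most similar to the query."""
    q = tokenize(query)
    return sorted(passages, key=lambda p: cosine(q, tokenize(p)), reverse=True)[:k]


def build_rag_prompt(query: str, passages: List[str], k: int = 2) -> str:
    """Put the retrieved passages in front of the question for a generator model."""
    context = "\n".join(f"- {p}" for p in retrieve(query, passages, k))
    return f"Answer using the passages below.\n{context}\nQuestion: {query}\nAnswer:"


corpus = [
    "Paris is the capital of France.",
    "BART is a sequence-to-sequence model.",
    "The Eiffel Tower is in Paris.",
]
print(build_rag_prompt("What is the capital of France?", corpus, k=1))
```

Because retrieval happens at query time, editing `corpus` changes what the answer is grounded in without retraining anything. This loosely mirrors the description's last point.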

Building NLP applications with Transformers

Julien SIMON

The document discusses how transformer models and transfer learning (Deep Learning 2.0) have improved natural language processing by allowing researchers to easily apply pre-trained models to new tasks with limited data. It presents examples of how HuggingFace has used transformer models for tasks like translation and part-of-speech tagging. The document also discusses tools from HuggingFace that make it easier to train models on hardware accelerators and deploy them to production.

Cavalry Ventures | Deep Dive: Generative AI

Cavalry Ventures

GPT-4 can pass the US bar exam, but before you start expecting robot lawyers to take over the courtroom, hold your horses, cowboys: we're not quite there yet. That said, AI is becoming increasingly human-like, and as a VC we need to start thinking about how this new wave of technology will affect the way we build and run businesses. What do we need to do differently? How can we make sure our investment strategies reflect these changes? It's a brave new world out there, and we've got to keep the big picture in mind!

Here we share what we at Cavalry Ventures found during our Generative AI deep dive.

AI and ML Series - Introduction to Generative AI and LLMs - Session 1

DianaGray10

Session 1

👉 This first session will cover an introduction to Generative AI and harnessing the power of large language models. The following topics will be discussed:

Introduction to Generative AI & harnessing the power of large language models.

What generative AI is, and what an LLM is.

How are we using it in our document understanding & communication mining models?

How to develop a trustworthy and unbiased AI model using LLM & GenAI.

Personal Intelligent Assistant

Speakers:

📌 George Roth - AI Evangelist at UiPath

📌 Sharon Palawandram - Senior Machine Learning Consultant @ Ashling Partners & UiPath MVP

📌 Russel Alfeche - Technology Leader RPA @qBotica & UiPath MVP

Prompt Engineering - an Art, a Science, or your next Job Title?

Maxim Salnikov

It's quite ironic that to interact with the most advanced AI in our history, Large Language Models such as ChatGPT, we must use human language, not a programming language. But how do we get the most out of this dialogue, i.e. how do we create robust and efficient prompts so the AI returns exactly what your solution needs on the first try? After my session, you can add the Junior (at least) Prompt Engineer skill to your CV: I will introduce Prompt Engineering as an emerging discipline with its own methodologies, tools, and best practices. Expect lots of examples that will help you write ideal prompts for all occasions.

This session is based on my research and experiments in Prompt Engineering and is 100% relevant for cloud developers who are exploring adding LLM-powered features to their solutions. It's a guide to building proper prompts so that AI produces the desired results quickly and cost-efficiently.

ChatGPT OpenAI Primer for Business

Dion Hinchcliffe

- ChatGPT was launched in November 2022 and gained over 1 million users in its first 5 days, making it one of the fastest-adopted digital products.

- ChatGPT is based on GPT-3.5, a refinement of OpenAI's GPT-3 large language model, developed over many years using trillions of words from the internet to power its conversational abilities.

- ChatGPT can answer questions and write stories, programs, music, and more based on its vast knowledge, but it cannot guarantee fully trustworthy information, is not meant to create harmful content, and cannot replace all human jobs.

Zero Trust Model Presentation

Gowdhaman Jothilingam

This document discusses Zero Trust security and how to implement a Zero Trust network architecture. It begins with an overview of Zero Trust and why it is important given the limitations of traditional perimeter-based networks. It then covers the basic components of a Zero Trust network, including an identity provider, device directory, policy evaluation service, and access proxy. The document provides guidance on designing a Zero Trust architecture by starting with questions about users, applications, conditions for access, and corresponding controls. Specific conditions discussed include user/device attributes as well as device health and identity. Benefits of the Zero Trust model include conditional access, preventing lateral movement, and increased productivity.
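The policy evaluation service described here can be sketched as a function that combines three checks and denies by default: identity (group membership), the device directory, and device health. This is a minimal sketch. The `AccessRequest` fields, the `APP_POLICY` table, and the `evaluate` function are hypothetical and do not correspond to any specific product.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Set


@dataclass(frozen=True)
class AccessRequest:
    user: str
    groups: FrozenSet[str]   # as asserted by the identity provider
    device_id: str
    device_managed: bool     # device health signals
    device_patched: bool
    app: str


# Hypothetical policy table: which groups may reach which application.
APP_POLICY: Dict[str, Set[str]] = {
    "payroll": {"finance"},
    "wiki": {"finance", "engineering"},
}


def evaluate(req: AccessRequest, device_directory: Set[str]) -> bool:
    """Allow access only if the device, its health, and the group policy all pass."""
    if req.device_id not in device_directory:            # unknown device
        return False
    if not (req.device_managed and req.device_patched):  # unhealthy device
        return False
    allowed = APP_POLICY.get(req.app, set())              # unknown app: deny by default
    return bool(allowed & req.groups)


devices = {"laptop-42"}
alice = AccessRequest("alice", frozenset({"engineering"}), "laptop-42", True, True, "wiki")
print(evaluate(alice, devices))  # True
```

In an architecture like the one described, an access proxy would call a function like this on every request, rather than trusting anything because it sits inside the network perimeter.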

ChatGPT vs. GPT-3.pdf

Addepto

If you are considering using either language model, but aren’t quite sure which one’s the best fit for your intended purpose, read on for a ChatGPT vs. GPT-3 head-to-head comparison where we evaluate every aspect of the language models, right from their emergence, how they work, and their suitability in different applications.

LanGCHAIN Framework

Keymate.AI

Langchain Framework is an innovative approach to linguistic data processing, combining the principles of language sciences, blockchain technology, and artificial intelligence. This deck introduces the groundbreaking elements of the framework, detailing how it enhances security, transparency, and decentralization in language data management. It discusses its applications in various fields, including machine learning, translation services, content creation, and more. The deck also highlights its key features, such as immutability, peer-to-peer networks, and linguistic asset ownership, that could revolutionize how we handle linguistic data in the digital age.

Generative AI - Responsible Path Forward.pdf

Saeed Al Dhaheri

Generative AI: Responsible Path Forward

Dr. Saeed Aldhaheri discusses the potential and risks of generative AI and proposes a responsible path forward. He outlines that (1) while generative AI shows great economic potential and can augment human capabilities, it also poses new ethical risks if not developed responsibly; (2) current approaches by the tech industry are not sufficient, and a human-centered perspective is needed; and (3) building responsible generative AI requires moving beyond technical solutions to address sociotechnical issues through principles of ethics by design, governance, risk frameworks, and responsible data practices.

Generative AI at the edge.pdf

Qualcomm Research

Generative AI models, such as ChatGPT and Stable Diffusion, can create new and original content like text, images, video, audio, or other data from simple prompts, as well as handle complex dialogs and reason about problems with or without images. These models are disrupting traditional technologies, from search and content creation to automation and problem solving, and are fundamentally shaping the future user interface to computing devices. Generative AI can apply broadly across industries, providing significant enhancements for utility, productivity, and entertainment. As generative AI adoption grows at record-setting speeds and computing demands increase, on-device and hybrid processing are more important than ever. Just like traditional computing evolved from mainframes to today’s mix of cloud and edge devices, AI processing will be distributed between them for AI to scale and reach its full potential.

In this presentation you’ll learn about:

- Why on-device AI is key

- Full-stack AI optimizations to make on-device AI possible and efficient

- Advanced techniques like quantization, distillation, and speculative decoding

- How generative AI models can be run on device and examples of some running now

- Qualcomm Technologies’ role in scaling on-device generative AI
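Quantization, the first of the advanced techniques listed above, can be illustrated with symmetric per-tensor int8 quantization. This is a minimal sketch in plain Python, not Qualcomm's implementation. Each float is divided by one shared scale and rounded into [-127, 127], so the round-trip error per weight is at most half the scale.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # round() sends halves to the nearest even integer; the clamp guards the range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate floats from the stored integers and the shared scale."""
    return [v * scale for v in q]


w = [0.12, -0.5, 0.33, 0.01]
q, s = quantize_int8(w)
print(q)  # [30, -127, 84, 3]
print(dequantize(q, s))
```

Storing 8-bit integers plus one scale instead of 32-bit floats cuts weight memory roughly fourfold. This is one reason quantization matters for running models on device.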

google gemini.pdf

RoadmapITSolution

Gemini is the outcome of large-scale collaborative efforts by teams across Google and Google DeepMind 🧠, and it was built from the ground up to be multimodal.

Gemini is now available in Gemini Nano and Gemini Pro sizes in Google products: the Google Pixel 8 Pro and Google Bard, respectively.

Unlike other multimodal generative AI models, Google's Gemini appears to be more product-focused: it is either already integrated into the company's ecosystem or planned to be.

[코세나, kosena] Generative AI Projects and Case Studies

![[코세나, kosena] Generative AI Projects and Case Studies](https://cdn.slidesharecdn.com/ss_thumbnails/ai-240212104807-42c291b6-thumbnail.jpg?width=560&fit=bounds)

kosena

Shares approaches for running generative AI projects, along with recent case studies.

ChatGPT Applied to Search Engines

Tae Young Lee

ChatGPT is a natural language processing technology developed by OpenAI. This model is based on the GPT-3 architecture and can be applied to various language tasks by training on large-scale datasets. When applied to a search engine, ChatGPT enables the implementation of an AI-based conversational system that understands user questions or queries and provides relevant information.

ChatGPT takes user questions as input and generates appropriate responses based on them. Since this model considers the context of previous conversations, it can provide more natural dialogue. Moreover, ChatGPT has been trained on diverse information from the internet, allowing it to provide practical and accurate answers to user questions.

When applying ChatGPT to a search engine, the system searches for relevant information based on the user's search query and uses ChatGPT to generate answers to present along with the search results. To do this, the search engine provides an interface that connects with ChatGPT, allowing the user's questions to be passed to the model and the answers generated by the model to be presented alongside the search results.


LanGCHAIN FrameworkKeymate.AI

Őż

Langchain Framework is an innovative approach to linguistic data processing, combining the principles of language sciences, blockchain technology, and artificial intelligence. This deck introduces the groundbreaking elements of the framework, detailing how it enhances security, transparency, and decentralization in language data management. It discusses its applications in various fields, including machine learning, translation services, content creation, and more. The deck also highlights its key features, such as immutability, peer-to-peer networks, and linguistic asset ownership, that could revolutionize how we handle linguistic data in the digital age.Generative AI - Responsible Path Forward.pdf

Generative AI - Responsible Path Forward.pdfSaeed Al Dhaheri

Őż

Generative AI: Responsible Path Forward

Dr. Saeed Aldhaheri discusses the potential and risks of generative AI and proposes a responsible path forward. He outlines that (1) while generative AI shows great economic potential and can augment human capabilities, it also poses new ethical risks if not developed responsibly. (2) Current approaches by the tech industry are not sufficient, and a human-centered perspective is needed. (3) Building responsible generative AI requires moving beyond technical solutions to address sociotechnical issues through principles of ethics by design, governance, risk frameworks, and responsible data practices.Generative AI at the edge.pdf

Generative AI at the edge.pdfQualcomm Research

Őż

Generative AI models, such as ChatGPT and Stable Diffusion, can create new and original content like text, images, video, audio, or other data from simple prompts, as well as handle complex dialogs and reason about problems with or without images. These models are disrupting traditional technologies, from search and content creation to automation and problem solving, and are fundamentally shaping the future user interface to computing devices. Generative AI can apply broadly across industries, providing significant enhancements for utility, productivity, and entertainment. As generative AI adoption grows at record-setting speeds and computing demands increase, on-device and hybrid processing are more important than ever. Just like traditional computing evolved from mainframes to today’s mix of cloud and edge devices, AI processing will be distributed between them for AI to scale and reach its full potential.

In this presentation you’ll learn about:

- Why on-device AI is key

- Full-stack AI optimizations to make on-device AI possible and efficient

- Advanced techniques like quantization, distillation, and speculative decoding

- How generative AI models can be run on device and examples of some running now

- Qualcomm Technologies’ role in scaling on-device generative AIgoogle gemini.pdf

google gemini.pdfRoadmapITSolution

Őż

Gemini is the outcome of large-scale collaborative efforts by teams across Google and Google DeepMind ūü߆, who built from the ground up to be multimodal.

Gemini is now available in Gemini Nano and Gemini Pro sizes on Google products- Google Pixel 8 and Google Bard respectively.

Unlike other generative AI multimodal models, Google's Gemini appears to be more product-focused, which is either integrated into the company's ecosystem or better plans to be.

Similar to Lang Chain (Kitworks Team Study žú§ž†ēŽĻą ŽįúŪĎúžěźŽ£Ć) (20)

[žĹĒžĄłŽāė, kosena] žÉĚžĄĪAI ŪĒĄŽ°úž†ĚŪäłžôÄ žā¨Ž°Ä![[žĹĒžĄłŽāė, kosena] žÉĚžĄĪAI ŪĒĄŽ°úž†ĚŪäłžôÄ žā¨Ž°Ä](https://cdn.slidesharecdn.com/ss_thumbnails/ai-240212104807-42c291b6-thumbnail.jpg?width=560&fit=bounds)

![[žĹĒžĄłŽāė, kosena] žÉĚžĄĪAI ŪĒĄŽ°úž†ĚŪäłžôÄ žā¨Ž°Ä](https://cdn.slidesharecdn.com/ss_thumbnails/ai-240212104807-42c291b6-thumbnail.jpg?width=560&fit=bounds)

![[žĹĒžĄłŽāė, kosena] žÉĚžĄĪAI ŪĒĄŽ°úž†ĚŪäłžôÄ žā¨Ž°Ä](https://cdn.slidesharecdn.com/ss_thumbnails/ai-240212104807-42c291b6-thumbnail.jpg?width=560&fit=bounds)

![[žĹĒžĄłŽāė, kosena] žÉĚžĄĪAI ŪĒĄŽ°úž†ĚŪäłžôÄ žā¨Ž°Ä](https://cdn.slidesharecdn.com/ss_thumbnails/ai-240212104807-42c291b6-thumbnail.jpg?width=560&fit=bounds)

[žĹĒžĄłŽāė, kosena] žÉĚžĄĪAI ŪĒĄŽ°úž†ĚŪäłžôÄ žā¨Ž°Äkosena

Őż

žÉĚžĄĪ AI ŪĒĄŽ°úž†ĚŪäł žßĄŪĖČ Žį©žēąÍ≥ľ žĶúÍ∑ľ žā¨Ž°ÄŽď§žĚĄ Í≥Ķžú†ŪēėÍ≥†žěź Ūē®Í≤ÄžÉČžóĒžßĄžóź ž†Āžö©Žźú ChatGPT

Í≤ÄžÉČžóĒžßĄžóź ž†Āžö©Žźú ChatGPTTae Young Lee

Őż

ChatGPT is a natural language processing technology developed by OpenAI. This model is based on the GPT-3 architecture and can be applied to various language tasks by training on large-scale datasets. When applied to a search engine, ChatGPT enables the implementation of an AI-based conversational system that understands user questions or queries and provides relevant information.

ChatGPT takes user questions as input and generates appropriate responses based on them. Since this model considers the context of previous conversations, it can provide more natural dialogue. Moreover, ChatGPT has been trained on diverse information from the internet, allowing it to provide practical and accurate answers to user questions.

When applying ChatGPT to a search engine, the system searches for relevant information based on the user's search query and uses ChatGPT to generate answers to present along with the search results. To do this, the search engine provides an interface that connects with ChatGPT, allowing the user's questions to be passed to the model and the answers generated by the model to be presented alongside the search results.31Íłį Í≥†žßÄžõÖ "ÍĶ¨ÍłÄžė§ŪĒąžÜĆžä§"

31Íłį Í≥†žßÄžõÖ "ÍĶ¨ÍłÄžė§ŪĒąžÜĆžä§"hyu_jaram

Őż

31Íłį Í≥†žßÄžõÖ ŪēôžöįžĚė 2017ŽÖĄ 2ŪēôÍłį žěźŽěĆ žĄłŽĮłŽāė žěźŽ£ĆA future that integrates LLMs and LAMs (Symposium)

A future that integrates LLMs and LAMs (Symposium)Tae Young Lee

Őż

Presentation material from the IT graduate school joint event

- Korea University Graduate School of Computer Information and Communication

- Sogang University Graduate School of Information and Communication

- Sungkyunkwan University Graduate School of Information and Communication

- Yonsei University Graduate School of Engineering

- Hanyang University Graduate School of Artificial Intelligence ConvergenceFluenty(ÍĻÄÍįēŪēô ŽĆÄŪĎú)_AI Startup D.PARTY_20161020

Fluenty(ÍĻÄÍįēŪēô ŽĆÄŪĎú)_AI Startup D.PARTY_20161020D.CAMP

Őż

ÍĶ¨ÍłÄŽ≥īŽč§ ŽĻ†Ž•ł 'žä§ŽßąŪäł Ž¶¨ŪĒĆŽĚľžĚī'Íłįžą†žĚė Ūôúžö© ŽįŹ žĪóŽīá ŽĻĆŽĒ© ŪĒĆŽěęŪŹľ[žė§ŪĒąžÜƞ䧞Ľ®žĄ§ŪĆÖ] ÍłįžóÖ Žßěž∂§Ūėē On-Premise LLM Solution![[žė§ŪĒąžÜƞ䧞Ľ®žĄ§ŪĆÖ] ÍłįžóÖ Žßěž∂§Ūėē On-Premise LLM Solution](https://cdn.slidesharecdn.com/ss_thumbnails/llm202411-241111013615-47e5c81c-thumbnail.jpg?width=560&fit=bounds)

![[žė§ŪĒąžÜƞ䧞Ľ®žĄ§ŪĆÖ] ÍłįžóÖ Žßěž∂§Ūėē On-Premise LLM Solution](https://cdn.slidesharecdn.com/ss_thumbnails/llm202411-241111013615-47e5c81c-thumbnail.jpg?width=560&fit=bounds)

![[žė§ŪĒąžÜƞ䧞Ľ®žĄ§ŪĆÖ] ÍłįžóÖ Žßěž∂§Ūėē On-Premise LLM Solution](https://cdn.slidesharecdn.com/ss_thumbnails/llm202411-241111013615-47e5c81c-thumbnail.jpg?width=560&fit=bounds)

![[žė§ŪĒąžÜƞ䧞Ľ®žĄ§ŪĆÖ] ÍłįžóÖ Žßěž∂§Ūėē On-Premise LLM Solution](https://cdn.slidesharecdn.com/ss_thumbnails/llm202411-241111013615-47e5c81c-thumbnail.jpg?width=560&fit=bounds)

[žė§ŪĒąžÜƞ䧞Ľ®žĄ§ŪĆÖ] ÍłįžóÖ Žßěž∂§Ūėē On-Premise LLM SolutionOpen Source Consulting

Őż

žė§ŪĒąžÜƞ䧞Ľ®žĄ§ŪĆÖžĚė ÍłįžóÖ Žßěž∂§Ūėē žė®ŪĒĄŽ†ąŽĮłžä§ LLM žÜĒŽ£®žÖėžĚĄ žÜĆÍįúŪē©ŽčąŽč§.[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ]ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę_202410.pdf![[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ]ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę_202410.pdf](https://cdn.slidesharecdn.com/ss_thumbnails/llm202410-241029044603-0c34e45a-thumbnail.jpg?width=560&fit=bounds)

![[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ]ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę_202410.pdf](https://cdn.slidesharecdn.com/ss_thumbnails/llm202410-241029044603-0c34e45a-thumbnail.jpg?width=560&fit=bounds)

![[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ]ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę_202410.pdf](https://cdn.slidesharecdn.com/ss_thumbnails/llm202410-241029044603-0c34e45a-thumbnail.jpg?width=560&fit=bounds)

![[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ]ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę_202410.pdf](https://cdn.slidesharecdn.com/ss_thumbnails/llm202410-241029044603-0c34e45a-thumbnail.jpg?width=560&fit=bounds)

[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ]ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę_202410.pdfYOHAN LEE

Őż

ÍĶ¨ž∂ēŪėē LLM žÜĒŽ£®žÖė[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ]ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę_202410.pdf![[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ]ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę_202410.pdf](https://cdn.slidesharecdn.com/ss_thumbnails/llm202410-241029044926-6f7c6e61-thumbnail.jpg?width=560&fit=bounds)

![[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ]ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę_202410.pdf](https://cdn.slidesharecdn.com/ss_thumbnails/llm202410-241029044926-6f7c6e61-thumbnail.jpg?width=560&fit=bounds)

![[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ]ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę_202410.pdf](https://cdn.slidesharecdn.com/ss_thumbnails/llm202410-241029044926-6f7c6e61-thumbnail.jpg?width=560&fit=bounds)

![[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ]ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę_202410.pdf](https://cdn.slidesharecdn.com/ss_thumbnails/llm202410-241029044926-6f7c6e61-thumbnail.jpg?width=560&fit=bounds)

[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ]ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę_202410.pdfYOHAN LEE

Őż

ÍĶ¨ž∂ēŪėē LLM žÜĒŽ£®žÖėSlipp ŠĄáŠÖ°ŠÜĮŠĄĎŠÖ≠ ŠĄĆŠÖ°ŠĄÖŠÖ≠ 20151212

Slipp ŠĄáŠÖ°ŠÜĮŠĄĎŠÖ≠ ŠĄĆŠÖ°ŠĄÖŠÖ≠ 20151212Jinsoo Jung

Őż

2015ŽÖĄ 12žõĒ 12žĚľ Slipp ConferencežóźžĄú ŽįúŪĎúŪēú žěźŽ£Ćžě֎蹎č§[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ] ÍłįžóÖ Žßěž∂§Ūėē ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę![[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ] ÍłįžóÖ Žßěž∂§Ūėē ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę](https://cdn.slidesharecdn.com/ss_thumbnails/llm202410-241106053231-5bf93cca-thumbnail.jpg?width=560&fit=bounds)

![[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ] ÍłįžóÖ Žßěž∂§Ūėē ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę](https://cdn.slidesharecdn.com/ss_thumbnails/llm202410-241106053231-5bf93cca-thumbnail.jpg?width=560&fit=bounds)

![[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ] ÍłįžóÖ Žßěž∂§Ūėē ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę](https://cdn.slidesharecdn.com/ss_thumbnails/llm202410-241106053231-5bf93cca-thumbnail.jpg?width=560&fit=bounds)

![[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ] ÍłįžóÖ Žßěž∂§Ūėē ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę](https://cdn.slidesharecdn.com/ss_thumbnails/llm202410-241106053231-5bf93cca-thumbnail.jpg?width=560&fit=bounds)

[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ] ÍłįžóÖ Žßěž∂§Ūėē ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜęOpen Source Consulting

Őż

žė§ŪĒąžÜƞ䧞Ľ®žĄ§ŪĆÖŽßĆžĚė ÍłįžóÖ Žßěž∂§Ūėē žė®ŪĒĄŽ†ąŽĮłžä§ LLM žÜĒŽ£®žÖėžĚĄ žÜĆÍįúŪē©ŽčąŽč§.ŽĒĒžßÄŽ°úÍ∑ł Ž©ĒŽ™® žĖīŪĒĆŽ¶¨žľÄžĚīžÖė

ŽĒĒžßÄŽ°úÍ∑ł Ž©ĒŽ™® žĖīŪĒĆŽ¶¨žľÄžĚīžÖėžäĻŪÉú žóľ

Őż

žā¨žö©žěźÍįÄ žßĀž†Ď Í∑łŽ¶¨ŽäĒ ŪĎúžčĚžóź ŽĒĒžßÄŪĄł ž†ēŽ≥īŽ•ľ ŽßĀŪĀ¨žčúŪā§ŽäĒ ÍłįŽä•žĚĄ ÍįĞߥ Ž©ĒŽ™® žĖīŪĒĆŽ¶¨žľÄžĚīžÖė Mobile Application Development Platform "Morpheus"

Mobile Application Development Platform "Morpheus"ŪÉúžĚľŽ≥łŽ∂Äžě•Žčė(Uracle) Í∂Ć

Őż

ŽĆÄŪēúŽĮľÍĶ≠ No.1 Ž™®ŽįĒžĚľ ÍįúŽįú ŪĒĆŽěęŪŹľ Ž™®ŪĒľžĖīžä§žóź ŽĆÄŪēú žÜĆÍįú žěźŽ£Ćžě֎蹎č§.Ž™®ŪĒľžĖīžä§ŽäĒ ÍĶ≠Žāī 200žó¨ žā¨žĚīŪ䳞󟞥ú Í∑ł Ūö®Í≥ľŽ•ľ žěÖž¶ĚŪēú Ž™®ŽįĒžĚľ ŪĒĆŽěęŪŹľžúľŽ°ú GartnerÍįÄ žöĒÍĶ¨ŪēėŽäĒ MADP(Mobile Application Development Platform)žĚė 7ÍįÄžßÄ žöĒÍĪīžĚĄ ŽßĆž°ĪŪēėŽäĒ ÍĶ≠ŽāīžĚė žú†žĚľŪēú ŪĒĆŽěęŪŹľžě֎蹎č§.

(Morpheus is the No.1 MADP in Korea Market.) LLM ŠĄÜŠÖ©ŠĄÉŠÖ¶ŠÜĮ ŠĄÄŠÖĶŠĄáŠÖ°ŠÜę ŠĄČŠÖ•ŠĄáŠÖĶŠĄČŠÖ≥ ŠĄČŠÖĶŠÜĮŠĄĆŠÖ•ŠÜę ŠĄÄŠÖ°ŠĄčŠÖĶŠĄÉŠÖ≥

LLM ŠĄÜŠÖ©ŠĄÉŠÖ¶ŠÜĮ ŠĄÄŠÖĶŠĄáŠÖ°ŠÜę ŠĄČŠÖ•ŠĄáŠÖĶŠĄČŠÖ≥ ŠĄČŠÖĶŠÜĮŠĄĆŠÖ•ŠÜę ŠĄÄŠÖ°ŠĄčŠÖĶŠĄÉŠÖ≥Tae Young Lee

Őż

Real-time seminar presentation material at the MODU-POP event on September 19, 2023 as ChatGPT Prompt Learning Research Lab Director at MODU's Lab[ŽßąžĚłž¶ąŽě©] Ai ŪĒĆŽěęŪŹľ maum.ai žÜĆÍįúžĄú 201707![[ŽßąžĚłž¶ąŽě©] Ai ŪĒĆŽěęŪŹľ maum.ai žÜĆÍįúžĄú 201707](https://cdn.slidesharecdn.com/ss_thumbnails/aimaum-170726050351-thumbnail.jpg?width=560&fit=bounds)

![[ŽßąžĚłž¶ąŽě©] Ai ŪĒĆŽěęŪŹľ maum.ai žÜĆÍįúžĄú 201707](https://cdn.slidesharecdn.com/ss_thumbnails/aimaum-170726050351-thumbnail.jpg?width=560&fit=bounds)

![[ŽßąžĚłž¶ąŽě©] Ai ŪĒĆŽěęŪŹľ maum.ai žÜĆÍįúžĄú 201707](https://cdn.slidesharecdn.com/ss_thumbnails/aimaum-170726050351-thumbnail.jpg?width=560&fit=bounds)

![[ŽßąžĚłž¶ąŽě©] Ai ŪĒĆŽěęŪŹľ maum.ai žÜĆÍįúžĄú 201707](https://cdn.slidesharecdn.com/ss_thumbnails/aimaum-170726050351-thumbnail.jpg?width=560&fit=bounds)

[ŽßąžĚłž¶ąŽě©] Ai ŪĒĆŽěęŪŹľ maum.ai žÜĆÍįúžĄú 201707Taejoon Yoo

Őż

[ŽßąžĚłž¶ąŽě©] Ai ŪĒĆŽěęŪŹľ maum.ai žÜĆÍįúžĄú 201707žě¨žóÖŽ°úŽďúž£ľžÜĆ: /hnki0104/gsshop-103837144

žě¨žóÖŽ°úŽďúž£ľžÜĆ: /hnki0104/gsshop-103837144Darion Kim

Őż

žė§ŪĒĄŽĚľžĚłžóźžĄú Žď§žúľŽ©ī ŽćĒžöĪŽćĒ žě¨ŽĮłžěąŽäĒ ŠĄÄŠÖĘŠĄáŠÖ°ŠÜĮŠĄáŠÖ°ŠÜľŠĄČŠÖĶŠÜ®ŠĄčŠÖī ŠĄáŠÖߊÜꊥíŠÖ™ŠĄÖŠÖ≥ŠÜĮ ŠĄčŠÖĪŠĄíŠÖ°ŠÜę GSShop ŠĄÄŠÖ©ŠĄÄŠÖģŠÜꊥáŠÖģŠÜꊥźŠÖģŠĄÄŠÖĶžě֎蹎č§.

Ž™®ŽįĒžĚľžóźžĄú ŪôĒŽ©īžĚī ÍĻ®ž†łžĄú Žč§žčú žė¨Ž¶ĹŽčąŽč§.

/hnki0104/gsshop-103837144[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ]ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę_202410.pdf![[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ]ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę_202410.pdf](https://cdn.slidesharecdn.com/ss_thumbnails/llm202410-241029044603-0c34e45a-thumbnail.jpg?width=560&fit=bounds)

![[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ]ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę_202410.pdf](https://cdn.slidesharecdn.com/ss_thumbnails/llm202410-241029044603-0c34e45a-thumbnail.jpg?width=560&fit=bounds)

![[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ]ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę_202410.pdf](https://cdn.slidesharecdn.com/ss_thumbnails/llm202410-241029044603-0c34e45a-thumbnail.jpg?width=560&fit=bounds)

![[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ]ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę_202410.pdf](https://cdn.slidesharecdn.com/ss_thumbnails/llm202410-241029044603-0c34e45a-thumbnail.jpg?width=560&fit=bounds)

[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ]ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę_202410.pdfYOHAN LEE

Őż

[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ]ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę_202410.pdf![[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ]ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę_202410.pdf](https://cdn.slidesharecdn.com/ss_thumbnails/llm202410-241029044926-6f7c6e61-thumbnail.jpg?width=560&fit=bounds)

![[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ]ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę_202410.pdf](https://cdn.slidesharecdn.com/ss_thumbnails/llm202410-241029044926-6f7c6e61-thumbnail.jpg?width=560&fit=bounds)

![[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ]ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę_202410.pdf](https://cdn.slidesharecdn.com/ss_thumbnails/llm202410-241029044926-6f7c6e61-thumbnail.jpg?width=560&fit=bounds)

![[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ]ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę_202410.pdf](https://cdn.slidesharecdn.com/ss_thumbnails/llm202410-241029044926-6f7c6e61-thumbnail.jpg?width=560&fit=bounds)

[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ]ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę_202410.pdfYOHAN LEE

Őż

[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ] ÍłįžóÖ Žßěž∂§Ūėē ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę![[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ] ÍłįžóÖ Žßěž∂§Ūėē ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę](https://cdn.slidesharecdn.com/ss_thumbnails/llm202410-241106053231-5bf93cca-thumbnail.jpg?width=560&fit=bounds)

![[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ] ÍłįžóÖ Žßěž∂§Ūėē ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę](https://cdn.slidesharecdn.com/ss_thumbnails/llm202410-241106053231-5bf93cca-thumbnail.jpg?width=560&fit=bounds)

![[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ] ÍłįžóÖ Žßěž∂§Ūėē ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę](https://cdn.slidesharecdn.com/ss_thumbnails/llm202410-241106053231-5bf93cca-thumbnail.jpg?width=560&fit=bounds)

![[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ] ÍłįžóÖ Žßěž∂§Ūėē ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜę](https://cdn.slidesharecdn.com/ss_thumbnails/llm202410-241106053231-5bf93cca-thumbnail.jpg?width=560&fit=bounds)

[ŠĄčŠÖ©ŠĄĎŠÖ≥ŠÜꊥȊ֩ŠĄČŠÖ≥ŠĄŹŠÖ•ŠÜꊥȊ֕ŠÜĮŠĄźŠÖĶŠÜľ] ÍłįžóÖ Žßěž∂§Ūėē ŠĄčŠÖ©ŠÜꊥϊÖ≥ŠĄÖŠÖ¶ŠĄÜŠÖĶŠĄČŠÖ≥ LLM ŠĄČŠÖ©ŠÜĮŠĄÖŠÖģŠĄČŠÖߊÜęOpen Source Consulting

Őż

LLM ŠĄÜŠÖ©ŠĄÉŠÖ¶ŠÜĮ ŠĄÄŠÖĶŠĄáŠÖ°ŠÜę ŠĄČŠÖ•ŠĄáŠÖĶŠĄČŠÖ≥ ŠĄČŠÖĶŠÜĮŠĄĆŠÖ•ŠÜę ŠĄÄŠÖ°ŠĄčŠÖĶŠĄÉŠÖ≥

LLM ŠĄÜŠÖ©ŠĄÉŠÖ¶ŠÜĮ ŠĄÄŠÖĶŠĄáŠÖ°ŠÜę ŠĄČŠÖ•ŠĄáŠÖĶŠĄČŠÖ≥ ŠĄČŠÖĶŠÜĮŠĄĆŠÖ•ŠÜę ŠĄÄŠÖ°ŠĄčŠÖĶŠĄÉŠÖ≥Tae Young Lee

Őż

More from Wonjun Hwang (20)

20250207_žú§ž†ēŽĻą_Next.jsžóźžĄú žĄúŽ≤Ą žĽīŪŹ¨ŽĄĆŪ䳎•ľ Ž†ĆŽćĒŽßĀŪēėŽäĒ Žį©Ž≤ē.pdf

20250207_žú§ž†ēŽĻą_Next.jsžóźžĄú žĄúŽ≤Ą žĽīŪŹ¨ŽĄĆŪ䳎•ľ Ž†ĆŽćĒŽßĀŪēėŽäĒ Žį©Ž≤ē.pdfWonjun Hwang

Őż

Kit-Works Team Study20250207ŽįúŪĎú-žĖώ觞úó(style-dictionaryŽ•ľ Ūôúžö©Ūēú ŽĒĒžěźžĚł žčúžä§ŪÖú žěźŽŹôŪôĒ).pdf

20250207ŽįúŪĎú-žĖώ觞úó(style-dictionaryŽ•ľ Ūôúžö©Ūēú ŽĒĒžěźžĚł žčúžä§ŪÖú žěźŽŹôŪôĒ).pdfWonjun Hwang

Őż

Kit-Works Team Studyį≠ĺĪ≥Ŕ-į¬ī«įýįž≤ű≥Śį’Īū≤Ļ≥ĺ≥Ś≥ß≥Ŕ≥‹ĽŚ≤‚≥Ś20250103≥Ś«Íīžčú≥ŚÍĻ∂ńÍ≤Ĺžąė.ĪŤĽŚīŕ

į≠ĺĪ≥Ŕ-į¬ī«įýįž≤ű≥Śį’Īū≤Ļ≥ĺ≥Ś≥ß≥Ŕ≥‹ĽŚ≤‚≥Ś20250103≥Ś«Íīžčú≥ŚÍĻ∂ńÍ≤Ĺžąė.ĪŤĽŚīŕWonjun Hwang

Őż

Kit-Works Team StudyKit-Work_Team_Study_20241227_ŪĆÄžä§_ÍĻÄŪēúžÜĒ_Ž™®Žćė žěźŽįĒžä§ŪĀ¨Ž¶ĹŪäłžĚė ŪäĻžßē.pdf

Kit-Work_Team_Study_20241227_ŪĆÄžä§_ÍĻÄŪēúžÜĒ_Ž™®Žćė žěźŽįĒžä§ŪĀ¨Ž¶ĹŪäłžĚė ŪäĻžßē.pdfWonjun Hwang

Őż

Kit-Work Team StudyKit-Works Team Study_zustandžóź ŽĆÄŪēī žēĆžēĄŽ≥īžěź žóľÍ≤®Ž†ą_20241206.pptx

Kit-Works Team Study_zustandžóź ŽĆÄŪēī žēĆžēĄŽ≥īžěź žóľÍ≤®Ž†ą_20241206.pptxWonjun Hwang

Őż

Kit-Works Team StudyKit-Works Team Study_žú†ŪēúŽĻą_ŽįúŪĎúžěźŽ£Ć_žĽīŪŹ¨ŽĄĆŪäł žě¨žā¨žö©_20241129.pdf

Kit-Works Team Study_žú†ŪēúŽĻą_ŽįúŪĎúžěźŽ£Ć_žĽīŪŹ¨ŽĄĆŪäł žě¨žā¨žö©_20241129.pdfWonjun Hwang

Őż

Kit-Works Team StudyKit-Works _ŪėĄŽĆĞ쟎Źôžį®_žļźžä§Ūćľ_žĚīžĽ§Ž®łžä§_ŪöĆÍ≥†Ž°Ě_20241115.pdf

Kit-Works _ŪėĄŽĆĞ쟎Źôžį®_žļźžä§Ūćľ_žĚīžĽ§Ž®łžä§_ŪöĆÍ≥†Ž°Ě_20241115.pdfWonjun Hwang

Őż

Kit-Works Team Study20250207ŽįúŪĎú-žĖώ觞úó(style-dictionaryŽ•ľ Ūôúžö©Ūēú ŽĒĒžěźžĚł žčúžä§ŪÖú žěźŽŹôŪôĒ).pdf

20250207ŽįúŪĎú-žĖώ觞úó(style-dictionaryŽ•ľ Ūôúžö©Ūēú ŽĒĒžěźžĚł žčúžä§ŪÖú žěźŽŹôŪôĒ).pdfWonjun Hwang

Őż

Lang Chain (Kitworks Team Study, presentation slides by 윤정빈)

- 2. What is LangChain? A framework for building applications based on a variety of language models. We believe the most powerful and differentiated applications will not only call a language model through an API but will also be built with LangChain's help. It provides tools that help you make better use of language models. Data-aware: connect a language model to other data sources. Agentic: let a language model interact with its environment.

- 3. Why use LangChain: limitations of ChatGPT (as of the presentation). 01 Limited access to information: ChatGPT (GPT-3.5) is an LLM (large language model) trained on data up to 2021, so it cannot answer questions about information from 2022 onward, or gives false answers. 02 Token limit: GPT-3.5 and GPT-4, the models offered in ChatGPT, have input limits of 4,096 and 8,192 tokens respectively. 03 Hallucination: when asked a factual question, it often gives an irrelevant answer or makes something up.

- 4. Why use LangChain: ways to build a custom LLM by improving an existing model. Fine-tuning: adjust the weights of an existing deep-learning model to update it for the desired purpose. N-shot learning: show the model 0 to n example outputs so that it produces output suited to the purpose. In-context learning: supply context and have the model generate its output based on that context.

- 5. Why use LangChain: how LangChain addresses each limitation of ChatGPT. 01 Limited access to information → vector-store-based information retrieval, or combining search results through an Agent. 02 Token limit → splitting documents with a TextSplitter. 03 Hallucination → a prompt instructing the model to answer only from the given documents.

- 6. Why use LangChain: with LangChain, the LLM problems above (limited access to information, the token limit, hallucination) are addressed and the model becomes more useful!

- 7. Structure of LangChain: Models. LLM: a large language model, the core component that acts as the engine of a generative model. Examples: GPT-3.5, PaLM 2, LLaMA, StableVicuna, WizardLM...

- 8. Structure of LangChain: Prompts. Prompts: the instructions given to a large language model. Prompt Templates, Chat Prompt Templates, Example Selectors, Output Parsers.

- 9. Structure of LangChain: Indexes. Index: a module that structures documents so the LLM can search them easily. Document Loaders, Text Splitters, Vectorstores, Retrievers...

- 10. Structure of LangChain: Memory. Memory: a module that remembers the chat history so the conversation can continue based on it. ConversationBufferMemory, Entity Memory, Conversation Knowledge Graph Memory...

- 11. Structure of LangChain: Chains. Chains: the core component that links LLM calls into a chain so they can be made one after another. LLM Chain, Question Answering, Summarization, Retrieval Question/Answering...

- 12. Structure of LangChain: Agents. Agents: a module that lets the LLM carry out tasks it could not perform with an ordinary prompt template alone. Custom Agent, Custom MultiAction Agent, Conversation Agent...

- 16. Example 1

- 17. Example 2: chunks

- 18. Example 2: results
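Slide 4 contrasts fine-tuning with N-shot and in-context learning. The latter two change only the prompt, not the model weights. The following is a minimal plain-Python sketch of assembling an N-shot prompt. The `Input:`/`Output:` layout and the name `build_few_shot_prompt` are assumptions for illustration and are not LangChain's API. Related LangChain components appear on slide 8 (Prompt Templates, Example Selectors).

```python
# Hypothetical sketch of an N-shot prompt builder; not LangChain's API.
from typing import Sequence, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: Sequence[Tuple[str, str]],
    query: str,
) -> str:
    """Instruction, then N worked (input, output) examples, then the new input.

    An empty `examples` sequence gives a zero-shot prompt.
    """
    parts = [instruction.strip(), ""]
    for example_input, example_output in examples:
        parts.append(f"Input: {example_input}")
        parts.append(f"Output: {example_output}")
        parts.append("")  # blank line between examples
    parts.append(f"Input: {query}")
    parts.append("Output:")  # the model is expected to continue from here
    return "\n".join(parts)
```

For example, `build_few_shot_prompt("Classify the sentiment.", [("happy", "positive"), ("terrible", "negative")], "wonderful")` yields a two-shot prompt ending in `Input: wonderful\nOutput:`, which the model is expected to complete.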
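Slides 5 and 9 mention splitting documents with a TextSplitter to stay within the token limit. Below is a toy character-based splitter with overlap. It assumes that counting characters is an acceptable stand-in for counting tokens. LangChain's splitters also take a chunk size and an overlap, but they can split on separators or tokens. Treat `split_text` here as a hypothetical sketch of the idea rather than the library's behavior.

```python
# Hypothetical sketch: a character-based splitter, not LangChain's TextSplitter.
from typing import List


def split_text(text: str, chunk_size: int, chunk_overlap: int) -> List[str]:
    """Split `text` into windows of at most `chunk_size` characters.

    The last `chunk_overlap` characters of each chunk are repeated at the
    start of the next one, so text near a cut keeps some surrounding context.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must satisfy 0 <= overlap < chunk_size")
    step = chunk_size - chunk_overlap  # how far each new window moves forward
    chunks: List[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks
```

For example, `split_text("abcdefghij", 4, 1)` gives `["abcd", "defg", "ghij"]`. Each chunk can then be sent to the model separately, or embedded for retrieval.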
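Slide 9 lists Vectorstores and Retrievers, and slide 5 proposes vector-store-based retrieval for information the model was not trained on. This sketch fakes the embedding step with a bag-of-words count vector and ranks stored chunks by cosine similarity. A real setup would use an embedding model. `InMemoryVectorStore`, `add_texts`, and `similarity_search` are hypothetical names here. The last two echo method names used by LangChain's vector stores, but this class is not that interface.

```python
# Hypothetical sketch: a toy in-memory "vector store" with bag-of-words vectors.
# A real pipeline would call an embedding model instead of `embed` below.
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy embedding: term counts of the lowercased words in `text`."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity of two sparse count vectors (0.0 if either is empty)."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Keeps (chunk, vector) pairs and returns the chunks closest to a query."""

    def __init__(self) -> None:
        self._items: List[Tuple[str, Counter]] = []

    def add_texts(self, texts: List[str]) -> None:
        for text in texts:
            self._items.append((text, embed(text)))

    def similarity_search(self, query: str, k: int = 2) -> List[str]:
        query_vec = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(query_vec, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]
```

The retrieved chunks would then be placed in the prompt as context, so the model answers from them rather than from its training data alone.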
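Slide 8's Prompt Templates and Output Parsers wrap the text going into and coming out of the model. Slide 5's fix for hallucination is a prompt that restricts the model to the given documents. Below is a minimal stdlib sketch of both using `string.Template`. The class names and the wording of `GROUNDED_QA` are assumptions. LangChain's own `PromptTemplate` uses `{name}`-style placeholders by default.

```python
# Hypothetical sketch: a stdlib prompt template and output parser,
# not LangChain's PromptTemplate / output parser classes.
from string import Template
from typing import List


class SimplePromptTemplate:
    """Fills `$name` placeholders; raises KeyError if a value is missing."""

    def __init__(self, template: str) -> None:
        self._template = Template(template)

    def format(self, **values: str) -> str:
        return self._template.substitute(**values)


class CommaListParser:
    """Turns a model reply such as 'a, b, c' into ['a', 'b', 'c']."""

    def parse(self, text: str) -> List[str]:
        return [item.strip() for item in text.split(",") if item.strip()]


# Grounded QA prompt: tells the model to answer only from the supplied context
# (the hallucination countermeasure from slide 5; the wording is an assumption).
GROUNDED_QA = SimplePromptTemplate(
    "Answer using only the context below. "
    'If the answer is not in the context, say "I don\'t know".\n\n'
    "Context:\n$context\n\n"
    "Question: $question\n"
    "Answer:"
)
```

Keeping the template and the parser as separate objects means the same parser can be reused with any prompt that asks for a comma-separated answer.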
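Slides 7 and 11 describe the model as the engine and a chain as a sequence of LLM calls. This sketch treats any callable from prompt string to completion string as a model, so models can be swapped. It then pipes each step's output into the next step's prompt. `fake_llm`, `Step`, and `run_chain` are hypothetical. `fake_llm` just upper-cases its prompt so the example runs without an API key.

```python
# Hypothetical sketch of the "model" and "chain" ideas; not LangChain's classes.
from typing import Callable, List

# Any model is just: prompt string in, completion string out.
LLM = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call so the example runs offline: upper-cases the prompt."""
    return prompt.upper()


class Step:
    """One link in the chain: a prompt template with an `{input}` slot plus a model."""

    def __init__(self, template: str, llm: LLM) -> None:
        self.template = template
        self.llm = llm

    def run(self, text: str) -> str:
        return self.llm(self.template.format(input=text))


def run_chain(steps: List[Step], text: str) -> str:
    """Sequential chain: each step's output becomes the next step's input."""
    for step in steps:
        text = step.run(text)
    return text
```

For example, `run_chain([Step("summarize: {input}", fake_llm), Step("translate: {input}", fake_llm)], "hi")` returns `"TRANSLATE: SUMMARIZE: HI"`. Swapping `fake_llm` for a real client function would not change the chain code.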
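Slide 10's ConversationBufferMemory keeps the chat history and replays it so the conversation can build on earlier turns. Below is a minimal sketch of that buffer idea. `BufferMemory` and its `Human:`/`AI:` format are assumptions and are not LangChain's class. The optional `max_messages` window is an added illustration of trading older history for room under the token limit mentioned on slide 3.

```python
# Hypothetical sketch of a conversation buffer; not LangChain's ConversationBufferMemory.
from typing import List, Tuple


class BufferMemory:
    """Stores every (speaker, message) pair and replays it in front of the next input."""

    def __init__(self, max_messages: int = 0) -> None:
        self.messages: List[Tuple[str, str]] = []
        # 0 keeps everything; a positive value keeps only the most recent messages,
        # one way to stay under a model's input-token limit.
        self.max_messages = max_messages

    def save(self, user_msg: str, ai_msg: str) -> None:
        self.messages.append(("Human", user_msg))
        self.messages.append(("AI", ai_msg))
        if self.max_messages > 0:
            self.messages = self.messages[-self.max_messages:]

    def build_prompt(self, new_input: str) -> str:
        history = "".join(f"{speaker}: {text}\n" for speaker, text in self.messages)
        return f"{history}Human: {new_input}\nAI:"
```

Because the whole history is resent on every turn, the model can answer a follow-up such as "What's my name?" from an earlier message, at the cost of a longer prompt each time.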
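Slide 12 says agents let the LLM do work a fixed prompt template cannot, typically by choosing and calling tools. The loop below is a toy sketch loosely modeled on the ReAct pattern. The model replies with `Action: tool[input]` or `Final Answer: ...`, and the loop runs the chosen tool and appends the observation to the prompt. The reply format, `add_tool`, and `scripted_llm` (a deterministic stand-in for a real model) are all assumptions for illustration.

```python
# Hypothetical sketch of an agent loop; the reply format and tools are assumptions.
import re
from typing import Callable, Dict

Tool = Callable[[str], str]
ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*)\]")


def add_tool(expr: str) -> str:
    """Hypothetical tool: adds '+'-separated integers, e.g. '2+3' -> '5'."""
    return str(sum(int(part) for part in expr.split("+")))


def scripted_llm(prompt: str) -> str:
    """Stand-in for a real model: call the tool once, then answer with its result."""
    if "Observation:" not in prompt:
        return "Action: add[2+3]"
    last_observation = prompt.rsplit("Observation:", 1)[1].strip()
    return f"Final Answer: {last_observation}"


def run_agent(question: str, llm: Callable[[str], str], tools: Dict[str, Tool], max_steps: int = 5) -> str:
    """The model picks a tool, the loop runs it and feeds the result back, until a final answer."""
    prompt = f"Tools: {', '.join(tools)}\nQuestion: {question}\n"
    for _ in range(max_steps):
        reply = llm(prompt)
        if reply.startswith("Final Answer:"):
            return reply[len("Final Answer:"):].strip()
        match = ACTION_RE.search(reply)
        if match is None or match.group(1) not in tools:
            raise ValueError(f"Unparseable or unknown action: {reply!r}")
        observation = tools[match.group(1)](match.group(2))
        prompt += f"{reply}\nObservation: {observation}\n"
    raise RuntimeError("Agent did not finish within max_steps")
```

`max_steps` bounds the loop so a model that never produces a final answer cannot run forever.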
