NLP DLforDS


This is the presentation for the Spring 2018 NLP class. It discusses deep learning applications for dialogue systems.


Recommended

Crf based named entity recognition using a korean lexical semantic network, by Danbi Cho

The authors extract features for the named entity recognition task and use UWordMap to learn the characteristics of Korean words.

(28th May, 2021)

A Multi-layer LSTM-based Approach for Robot Command Interaction Modeling, by Martino Mensio

Presentation given at IROS - Workshop on Language and Robotics

http://iros2018.emergent-symbol.systems/accepted-papers

End-to-End Memory Networks with Knowledge Carryover for Multi-Turn Spoken Lan..., by Yun-Nung (Vivian) Chen

The document describes an end-to-end memory network model for multi-turn spoken language understanding. The model encodes context from previous utterances using an attention mechanism over the memory of past utterances. It then performs slot tagging on the current utterance incorporating the contextual knowledge. Experiments on a Cortana dataset show the model outperforms alternatives, achieving 67.1% accuracy by encoding both history and current utterances with the memory network.
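
The attention over past utterances can be illustrated with a small plain-Python sketch. It is not the paper's implementation: it assumes each previous utterance and the current utterance are already encoded as vectors of the same length, scores relevance by inner product, and normalises with a softmax. `attend_over_memory` is a hypothetical helper name.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    # Subtract the maximum score before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend_over_memory(u: Vector, memory: List[Vector]) -> Tuple[Vector, Vector]:
    """Hypothetical helper: attention of the current utterance over past utterances.

    u      -- encoding of the current utterance (assumed precomputed)
    memory -- one encoding per previous utterance (assumed precomputed)
    Returns the attention weights and their weighted sum (the "knowledge" vector).
    """
    # Relevance of each past utterance = inner product with the current one.
    scores = [sum(ui * mi for ui, mi in zip(u, m)) for m in memory]
    weights = softmax(scores)
    # Knowledge vector = attention-weighted sum of the memory slots.
    knowledge = [sum(w * m[d] for w, m in zip(weights, memory)) for d in range(len(u))]
    return weights, knowledge


if __name__ == "__main__":
    memory = [[1.0, 0.0], [0.0, 1.0]]  # two toy past-utterance encodings
    u = [2.0, 0.0]                      # current utterance, closer to slot 0
    weights, knowledge = attend_over_memory(u, memory)
    print(weights, knowledge)
```

In a model like the one summarised, such a knowledge vector would be combined with the current utterance's encoding before slot tagging; how that combination is done is not shown here.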

Deep Generative Models, by Chia-Wen Cheng

Deep generative models can generate synthetic images, speech, text and other data types. There are three popular types: autoregressive models, which generate data step by step; variational autoencoders, which learn the distribution of latent variables to generate data; and generative adversarial networks, which train a generator and a discriminator in an adversarial game to generate high-quality samples. Generative models have applications in image generation, translation between domains, and simulation.
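
For reference, the adversarial game is commonly written as the minimax objective of the original GAN formulation (the summarised talk may use different notation). Here D(x) is the discriminator's estimated probability that x is real, G(z) maps a noise sample z drawn from p_z to a synthetic sample, and p_data is the data distribution:

```latex
\min_G \max_D \; V(D, G)
  = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator is trained to increase V while the generator is trained to decrease it.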

Big Data Intelligence: from Correlation Discovery to Causal Reasoning, by Wanjin Yu

The document discusses using sequence-to-sequence learning models for tasks like machine translation, question answering, and image captioning. It describes how recurrent neural networks like LSTMs can be used in seq2seq models to incorporate memory. Finally, it proposes that seq2seq models can be enhanced by incorporating external memory structures like knowledge bases to enable capabilities like causal reasoning for question answering.

BIng NLP Expert - Dl summer-school-2017.-jianfeng-gao.v2, by Karthik Murugesan

This document provides an outline for a tutorial on deep learning for natural language processing. It begins with an introduction to deep learning and its history, then discusses how neural methods have become prominent in natural language processing. The rest of the outline covers deep semantic models for text, recurrent neural networks for text generation, neural question answering models, and deep reinforcement learning for dialog systems.

Deep Learning: concepts and use cases (October 2018), by Julien SIMON

- An introduction to Deep Learning theory
  - Neurons & Neural Networks
  - The Training Process
  - Backpropagation
  - Optimizers
- Common network architectures and use cases
  - Convolutional Neural Networks
  - Recurrent Neural Networks
  - Long Short Term Memory Networks
  - Generative Adversarial Networks
- Getting started

Deep Learning in practice : Speech recognition and beyond - Meetup, by LINAGORA

Find the presentation from our Meetup of 27 September 2017, given by our colleague Abdelwahab HEBA: Deep Learning in practice: Speech recognition and beyond.

End-to-End Joint Learning of Natural Language Understanding and Dialogue Manager, by Yun-Nung (Vivian) Chen

This document summarizes a research paper on end-to-end joint learning of natural language understanding and dialogue management. The paper proposes an end-to-end deep hierarchical model that leverages multi-task learning using three supervised tasks: user intent classification, slot tagging, and system action prediction. The model outperforms previous pipelined models by accessing contextual dialogue history and allowing the dialogue management signals to refine the natural language understanding through backpropagation. Evaluation on a dialogue state tracking dataset shows the joint model achieves better dialogue management performance compared to baselines and also improves natural language understanding.

Recurrent Neural Networks for Text Analysis, by odsc

Recurrent neural networks (RNNs) are well-suited for analyzing text data because they can model sequential and structural relationships in text. Gated RNN variants such as LSTMs and GRUs mitigate the vanishing-gradient problem when training on long sequences, while gradient clipping guards against exploding gradients. Modern RNNs trained with techniques like gradient clipping, improved initialization, and optimizers such as Adam can learn meaningful representations from text, scaling to millions of training examples. RNNs may outperform conventional bag-of-words models on large datasets but require significant computational resources. The author describes an RNN library called Passage and provides an example of sentiment analysis on movie reviews to demonstrate RNNs for text analysis.
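
Gradient clipping, one of the training techniques mentioned above, is simple enough to show directly. This is a minimal plain-Python sketch that clips by global L2 norm; it assumes gradients arrive as a list of flat lists (one per parameter tensor), and `clip_by_global_norm` is a hypothetical helper, not the API of Passage or any other library.

```python
import math
from typing import List


def clip_by_global_norm(grads: List[List[float]], max_norm: float) -> List[List[float]]:
    """Hypothetical helper: rescale gradients so their combined L2 norm is <= max_norm.

    If the global norm is already within the limit, copies of the gradients are
    returned unchanged; otherwise every component is multiplied by the same
    factor, which preserves the direction of the update.
    """
    global_norm = math.sqrt(sum(g * g for tensor in grads for g in tensor))
    if global_norm <= max_norm or global_norm == 0.0:
        return [list(tensor) for tensor in grads]
    scale = max_norm / global_norm
    return [[g * scale for g in tensor] for tensor in grads]


if __name__ == "__main__":
    # An "exploding" gradient with global norm sqrt(3^2 + 4^2) = 5,
    # rescaled here to unit norm.
    print(clip_by_global_norm([[3.0], [4.0]], max_norm=1.0))
```

Scaling all tensors by one shared factor (rather than clipping each component separately) keeps the update pointing in the same direction and only limits its size.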

Deep Learning for Automatic Speaker Recognition, by Sai Kiran Kadam

Automatic Text-Independent Speaker Recognition and Spoof Detection using Deep Learning for Security and IoT

deepnet-lourentzou.ppt, by yang947066

This document provides an outline for a presentation on machine learning and deep learning. It begins with an introduction to machine learning basics and types of learning. It then discusses what deep learning is and why it is useful. The main components and hyperparameters of deep learning models are explained, including activation functions, optimizers, cost functions, regularization methods, and tuning. Basic deep neural network architectures like convolutional and recurrent networks are described. An example application of relation extraction is provided. The document concludes by listing additional deep learning topics.

Deep learning is a subset of machine learning and AI, by leradiophysicien1

Deep learning is a subset of machine learning and artificial intelligence (AI) that focuses on using neural networks with many layers to model complex patterns in data. Inspired by the structure and function of the human brain, deep learning algorithms are designed to automatically learn representations of data at multiple levels of abstraction. This allows them to excel in tasks such as image and speech recognition, natural language processing, and autonomous driving. The rapid advancements in computational power and the availability of large datasets have significantly contributed to the success of deep learning. By leveraging massive amounts of data and powerful GPUs, deep learning models can achieve remarkable accuracy and efficiency, making them an integral part of modern AI applications.

Ask Me Any Rating: A Content-based Recommender System based on Recurrent Neur..., by Alessandro Suglia

Presentation for "Ask Me Any Rating: A Content-based Recommender System based on Recurrent Neural Networks" at the 7th Italian Information Retrieval Workshop.

See paper: http://ceur-ws.org/Vol-1653/paper_11.pdf

Ask Me Any Rating: A Content-based Recommender System based on Recurrent Neur..., by Claudio Greco

Slides for the presentation of the paper "Ask Me Any Rating: A Content-based Recommender System based on Recurrent Neural Networks" at the 7th Italian Information Retrieval Workshop.

Deep Learning and Watson Studio, by Sasha Lazarevic

Deep learning and Watson Studio can be used for various tasks including planet discoveries, particle physics experiments at CERN, and scientific publications analysis. Convolutional neural networks are commonly used for image-related tasks like cancer diagnosis, object detection, and style transfer, while recurrent neural networks with LSTM or GRU are useful for sequential data like text for machine translation, sentiment analysis, and music generation. Hybrid and complex models combine different neural network architectures for tasks such as named entity recognition, music generation, blockchain security, and lip reading. Deep learning is now implemented using frameworks like TensorFlow and Keras on GPUs and distributed systems. Transfer learning helps accelerate development by reusing pre-trained models. Watson Studio provides a platform for developing, testing, and deploy...

Deep Learning, an interactive introduction for NLP-ers, by Roelof Pieters

Deep Learning intro for NLP Meetup Stockholm

22 January 2015

http://www.meetup.com/Stockholm-Natural-Language-Processing-Meetup/events/219787462/


[Paper Reading] Unsupervised Learning of Sentence Embeddings using Compositi..., by Hiroki Shimanaka

(1) The document presents an unsupervised method called Sent2Vec to learn sentence embeddings using compositional n-gram features. (2) Sent2Vec extends the continuous bag-of-words model to train sentence embeddings by composing word vectors with n-gram embeddings. (3) Experimental results show Sent2Vec outperforms other unsupervised models on most benchmark tasks, highlighting the robustness of the sentence embeddings produced.
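
The composition step can be pictured as averaging the vectors of a sentence's unigrams and n-grams. The sketch below assumes a tiny hand-made embedding table (`EMBEDDINGS`, hypothetical values), uses unigrams and bigrams only, and skips n-grams missing from the vocabulary; it does not show how Sent2Vec trains those vectors.

```python
from typing import Dict, List

# Hypothetical toy embedding table for unigrams and bigrams (2-d for readability).
EMBEDDINGS: Dict[str, List[float]] = {
    "the": [0.1, 0.0],
    "cat": [0.9, 0.3],
    "sat": [0.2, 0.8],
    "the cat": [0.5, 0.1],
    "cat sat": [0.6, 0.7],
}


def ngrams(tokens: List[str], n: int) -> List[str]:
    # Contiguous n-grams joined by a space, e.g. ["the cat", "cat sat"] for n=2.
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def sentence_embedding(sentence: str, max_n: int = 2) -> List[float]:
    """Average the vectors of all in-vocabulary 1..max_n-grams of the sentence."""
    tokens = sentence.lower().split()
    units = [g for n in range(1, max_n + 1) for g in ngrams(tokens, n)]
    vectors = [EMBEDDINGS[u] for u in units if u in EMBEDDINGS]
    if not vectors:
        raise ValueError("no known unigrams or n-grams in sentence")
    dim = len(vectors[0])
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]


if __name__ == "__main__":
    print(sentence_embedding("The cat sat"))
```

Including bigrams such as "cat sat" lets the sentence vector carry some word-order information that a plain bag of unigrams would lose.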

Formal analysis-crypto-proto, by Dr. Jayaraj Poroor

The document discusses formal modeling and analysis of cryptographic protocols. It introduces formal methods, the Dolev-Yao model, pi-calculus as a modeling language, and the ProVerif tool. As an example, a naïve handshake protocol is presented and a man-in-the-middle attack is demonstrated. The talk concludes that formal methods provide rigorous analysis of protocol security properties.

Deep Learning - Speaker Verification, Sound Event Detection, by Sai Kiran Kadam

The document discusses several papers on using deep learning techniques for speaker recognition and identification. It describes using convolutional neural networks with spectrograms as input to identify speakers and cluster them without prior identity knowledge. It also discusses using BLSTM recurrent neural networks for polyphonic sound event detection and spoofing detection. An end-to-end attention model with CNNs and temporal pooling is presented for text-dependent speaker verification. Embeddings from deep neural networks are investigated as an alternative to i-vectors for text-independent speaker verification. Related research applying CNNs, DNNs and BLSTM RNNs to speaker recognition tasks is also cited.

SNLI_presentation_2, by Viral Gupta

This document discusses natural language inference and summarizes the key points as follows:

1. The document describes the problem of natural language inference, which involves classifying the relationship between a premise and hypothesis sentence as entailment, contradiction, or neutral. This is an important problem in natural language processing.

2. The SNLI dataset is introduced as a collection of half a million natural language inference problems used to train and evaluate models.

3. Several approaches for solving the problem are discussed, including using word embeddings, LSTMs, CNNs, and traditional bag-of-words models. Results show LSTMs and CNNs achieve the best performance.

Deep Learning And Business Models (VNITC 2015-09-13), by Ha Phuong

Deep Learning and Business Models

Tran Quoc Hoan discusses deep learning and its applications, as well as potential business models. Deep learning has led to significant improvements in areas like image and speech recognition compared to traditional machine learning. Some business models highlighted include developing deep learning frameworks, building hardware optimized for deep learning, using deep learning for IoT applications, and providing deep learning APIs and services. Deep learning shows promise across many sectors but also faces challenges in fully realizing its potential.

Deep learning Tutorial - Part II, by QuantUniversity

Interest in Deep Learning has been growing in the past few years. With advances in software and hardware technologies, Neural Networks are making a resurgence. With interest in AI based applications growing, and companies like IBM, Google, Microsoft, NVIDIA investing heavily in computing and software applications, it is time to understand Deep Learning better!

In this lecture, we will get an introduction to Autoencoders and Recurrent Neural Networks and understand the state-of-the-art in hardware and software architectures. Functional Demos will be presented in Keras, a popular Python package with a backend in Theano. This will be a preview of the QuantUniversity Deep Learning Workshop that will be offered in 2017.

Parsimony and Self-Consistency-with-Translation.pptx, by xzbill

On the Principles of Parsimony and Self-Consistency

RNN is recurrent neural networks and deep learning, by FeiXiao19

RNN is recurrent neural networks and deep learning

Temporal Hypermap Theory and Application, by Abel Nyamapfene

The document summarizes research on modeling multiple sequence processing using an unsupervised neural network approach based on the Hypermap Model. Key points:

- The researcher extends previous models to handle complex sequences with repeating subsequences and multiple sequences occurring together without interference.

- Modifications include incorporating short-term memory to dynamically encode time-varying sequence context and inhibitory links to enable competitive queuing during recall.

- Experimental evaluation shows the network can correctly recall sequences using partial context and when sequences overlap.

- Future work aims to model the transition from single-word to two-word child speech and incorporate temporal processing of multimodal inputs like gestures.

NFL_intros.pptx, by Liangqun Lu

This document provides an introduction to the rules and structure of American football in the National Football League (NFL). It outlines the basic field setup with offenses and defenses of 11 players each. It explains the concept of downs, where a team has 4 attempts to advance 10 yards, and what happens if they fail to convert or succeed. It also describes common forms of scoring like touchdowns, field goals, and safeties. The document provides some key rules around penalties, interceptions, fumbles, and sacks. It includes the schedule for the 2022-2023 NFL regular season and playoffs culminating in the Super Bowl each February.

BERT: Bidirectional Encoder Representations from Transformers, by Liangqun Lu

BERT was developed by Google AI Language and released in October 2018. It achieved the best reported performance on many NLP tasks at the time, so anyone interested in NLP would do well to study it.


More from Liangqun Lu (13)


Gan summary, by Liangqun Lu

This document provides an outline and overview of generative adversarial networks (GANs). It introduces GAN theory and common issues with GANs like mode collapse. It then summarizes different types of GANs including WGAN, EBGAN, LSGAN, and DCGAN. The document also mentions using GANs for feature extraction and conditional generation.

Data integration lab_meeting, by Liangqun Lu

This document outlines several unsupervised methods for integrating multi-omic data, including matrix factorization methods (iCluster+), Bayesian methods (BCC), network-based methods (SNF), and multiple kernel learning (rMKL-LPP). It provides details on the procedures and assumptions of each method and discusses applications to discovering subtypes in cancers like breast cancer and glioblastoma multiforme.

Lasso, by Liangqun Lu

This document discusses Lasso and Bayesian Lasso regression. It provides an overview of Lasso, which performs variable selection by shrinking coefficients towards zero. Bayesian Lasso regression places Laplace priors on the coefficients, allowing posterior inference using Gibbs sampling. The document demonstrates an example of Bayesian Lasso for diabetes data and prostate cancer data, comparing errors to non-Bayesian Lasso and examining coefficient estimates.
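
The shrinkage that lets the (non-Bayesian) Lasso select variables comes from the soft-thresholding operator. Below is a minimal plain-Python sketch, assuming the objective (1/2n)·||y − Xβ||² + λ·||β||₁ with no intercept, solved by cyclic coordinate descent; the function names are hypothetical, and the Bayesian variant (Laplace priors with Gibbs sampling) is not shown.

```python
from typing import List


def soft_threshold(rho: float, lam: float) -> float:
    # S(rho, lam) = sign(rho) * max(|rho| - lam, 0): shrinks towards zero and
    # sets small values exactly to zero, which is what yields variable selection.
    if rho > lam:
        return rho - lam
    if rho < -lam:
        return rho + lam
    return 0.0


def lasso_coordinate_descent(X: List[List[float]], y: List[float], lam: float,
                             n_iter: int = 100) -> List[float]:
    """Minimise (1/2n)||y - X b||^2 + lam * ||b||_1 by cyclic coordinate descent."""
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    for _ in range(n_iter):
        for j in range(p):
            rho = 0.0  # correlation of feature j with the partial residual
            z = 0.0    # squared norm of feature j
            for i in range(n):
                # Prediction for row i without feature j's contribution.
                pred_without_j = sum(X[i][k] * beta[k] for k in range(p) if k != j)
                rho += X[i][j] * (y[i] - pred_without_j)
                z += X[i][j] ** 2
            rho /= n
            z /= n
            beta[j] = soft_threshold(rho, lam) / z if z > 0 else 0.0
    return beta


if __name__ == "__main__":
    # Toy data with orthogonal columns: only the first feature really matters.
    X = [[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0], [0.0, -1.0]]
    y = [2.0, -2.0, 0.1, -0.1]
    print(lasso_coordinate_descent(X, y, lam=0.2))
```

With these toy numbers the least-squares coefficients would be 2.0 and 0.1; the sketch returns approximately [1.6, 0.0], shrinking the first coefficient and setting the weak second one exactly to zero.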

Irgan, by Liangqun Lu

This document discusses using generative adversarial networks (GANs) for information retrieval (IRGAN). It presents GANs and describes how IRGAN formulates a minimax game that unifies generative and discriminative IR models. Experiments on web search, item recommendation, and question answering using real datasets show IRGAN achieves significant performance gains over other ranking models. The generative model benefits more in pointwise IRGAN, while the discriminative model benefits more in pairwise IRGAN.

Deep Learning Application in BiologyLiangqun Lu

Ã˝

Group meeting to discuss two papers on deep learning applications in healthcare. The first paper examines using a deep neural network to classify skin cancer at a dermatologist level using images. The second reviews opportunities and challenges for using deep learning in healthcare. Deep learning uses neural network models with many layers to extract higher-level features from data. It has achieved breakthroughs in areas like computer vision, speech recognition, and natural language processing. Transfer learning allows applying models trained on large datasets to new related problems.Liangqun ms defense.pptx

Liangqun ms defense.pptxLiangqun Lu

Ã˝

- 13 putative driver genes were identified from TCGA somatic mutation data including TP53, CTNNB1, and AXIN1. Some genes were associated with clinical characteristics and survival outcomes.

- Multi-omic data from TCGA including RNA-seq, miRNA-seq, DNA methylation, and copy number variation were integrated using similarity network fusion and clustering. This identified 5 subtypes of HCC with different survival profiles.

- Predictive models were built for each subtype on individual omics datasets, showing concordance between 56-87%. Analysis of gene expression profiles revealed different active biological processes between the subtypes.Thesis ms llq

Thesis ms llqLiangqun Lu

Ã˝

The document discusses hepatocellular carcinoma (HCC) epidemiology and etiology. It states that HCC is the most common type of liver cancer and the second leading cause of cancer death worldwide. The risk of HCC is highest in East Asia and Africa. Major risk factors include hepatitis B and C infections, cirrhosis, alcohol abuse, and aflatoxin exposure. HCC develops through a multi-step process as a result of chronic liver injury and inflammation leading to cirrhosis, then the accumulation of genetic and genomic alterations in hepatocytes results in malignant transformation and tumor progression. Key molecular alterations involved in hepatocarcinogenesis include telomere shortening, p53 mutations, and genomic instability.Liangqun lu 1st_gss_version2

Liangqun lu 1st_gss_version2Liangqun Lu

Ã˝

This document discusses post-traumatic stress disorder (PTSD) and efforts to identify biomarkers for PTSD. It provides background information on PTSD prevalence, diagnosis, and current treatment options. The document then describes a study to identify genetic and molecular biomarkers for PTSD by analyzing differences in genes, miRNAs, and metabolites between healthy control samples and PTSD patient samples using machine learning techniques. The study aims to identify candidate biomarkers that could help diagnose and monitor PTSD.Presentation orientation

Presentation orientationLiangqun Lu

Ã˝

This document outlines a study that used machine learning methods to predict post-traumatic stress disorder (PTSD) based on clinical characteristics from patient data. The study used datasets containing information from 957 patients exposed to traumatic events and assessed for PTSD at three time points. Several machine learning algorithms were tested and achieved AUC scores from 0.76 to 0.82 in predicting PTSD. Feature selection found that focusing on the top consistent predictive features improved accuracy. The study demonstrated the feasibility of machine learning for PTSD prediction and suggests incorporating additional data like genomics could further improve results.Journal club.pptx

Journal club.pptxLiangqun Lu

Ã˝

This document discusses using gene expression profiling to classify breast cancers, predict patient survival, and determine appropriate treatment. It describes developing a gene signature from a training set of 115 breast cancer samples and validating it on an independent test set of 171 samples. The signature was able to predict survival and response to chemotherapy versus endocrine therapy in estrogen receptor-positive breast cancer patients.Final.project

Final.projectLiangqun Lu

Ã˝

This document summarizes a study that used machine learning methods to analyze gene expression data from mouse samples with and without PTSD-like symptoms. The study found that there were more differently expressed genes in the brain samples compared to other tissues. Logistic regression was able to accurately classify 92.2% of the samples as PTSD or control. While 94 genes were identified as potentially contributing to PTSD, further validation of the model is still needed due to noise in the grouped data.Recently uploaded (20)

SOCIAL CHANGE(a change in the institutional and normative structure of societ...

SOCIAL CHANGE(a change in the institutional and normative structure of societ...DrNidhiAgarwal

Ã˝

This PPT is showing the effect of social changes in human life and it is very understandable to the students with easy language.in this contents are Itroduction, definition,Factors affecting social changes ,Main technological factors, Social change and stress , what is eustress and how social changes give impact of the human's life.POWERPOINT-PRESENTATION_DM-NO.017-S.2025.pptx

POWERPOINT-PRESENTATION_DM-NO.017-S.2025.pptxMarilenQuintoSimbula

Ã˝

Rubric level Summary for Teacher 1 to 3, Proficient Teacher. Guide in assessing MOV presented.Computer Application in Business (commerce)

Computer Application in Business (commerce)Sudar Sudar

Ã˝

The main objectives

1. To introduce the concept of computer and its various parts. 2. To explain the concept of data base management system and Management information system.

3. To provide insight about networking and basics of internet

Recall various terms of computer and its part

Understand the meaning of software, operating system, programming language and its features

Comparing Data Vs Information and its management system Understanding about various concepts of management information system

Explain about networking and elements based on internet

1. Recall the various concepts relating to computer and its various parts

2 Understand the meaning of software’s, operating system etc

3 Understanding the meaning and utility of database management system

4 Evaluate the various aspects of management information system

5 Generating more ideas regarding the use of internet for business purpose How to Configure Flexible Working Schedule in Odoo 18 Employee

How to Configure Flexible Working Schedule in Odoo 18 EmployeeCeline George

Ã˝

In this slide, we’ll discuss on how to configure flexible working schedule in Odoo 18 Employee module. In Odoo 18, the Employee module offers powerful tools to configure and manage flexible working schedules tailored to your organization's needs.Lesson Plan M1 2024 Lesson Plan M1 2024 Lesson Plan M1 2024 Lesson Plan M1...

Lesson Plan M1 2024 Lesson Plan M1 2024 Lesson Plan M1 2024 Lesson Plan M1...pinkdvil200

Ã˝

Lesson Plan M1 2024 Lesson Plan M1 2024 Lesson Plan M1 2024 How to attach file using upload button Odoo 18

How to attach file using upload button Odoo 18Celine George

Ã˝

In this slide, we’ll discuss on how to attach file using upload button Odoo 18. Odoo features a dedicated model, 'ir.attachments,' designed for storing attachments submitted by end users. We can see the process of utilizing the 'ir.attachments' model to enable file uploads through web forms in this slide.Research & Research Methods: Basic Concepts and Types.pptx

Research & Research Methods: Basic Concepts and Types.pptxDr. Sarita Anand

Ã˝

This ppt has been made for the students pursuing PG in social science and humanities like M.Ed., M.A. (Education), Ph.D. Scholars. It will be also beneficial for the teachers and other faculty members interested in research and teaching research concepts.A PPT Presentation on The Princess and the God: A tale of ancient India by A...

A PPT Presentation on The Princess and the God: A tale of ancient India by A...Beena E S

Ã˝

A PPT Presentation on The Princess and the God: A tale of ancient India by Aaron ShepardAdventure Activities Final By H R Gohil Sir

Adventure Activities Final By H R Gohil SirGUJARATCOMMERCECOLLE

Ã˝

Adventure Activities Final By H R Gohil SirN.C. DPI's 2023 Language Diversity Briefing

N.C. DPI's 2023 Language Diversity BriefingMebane Rash

Ã˝

The number of languages spoken in NC public schools.FESTIVAL: SINULOG & THINGYAN-LESSON 4.pptx

FESTIVAL: SINULOG & THINGYAN-LESSON 4.pptxDanmarieMuli1

Ã˝

Sinulog Festival of Cebu City, and Thingyan Festival of Myanmar.The Constitution, Government and Law making bodies .

The Constitution, Government and Law making bodies .saanidhyapatel09

Ã˝

This PowerPoint presentation provides an insightful overview of the Constitution, covering its key principles, features, and significance. It explains the fundamental rights, duties, structure of government, and the importance of constitutional law in governance. Ideal for students, educators, and anyone interested in understanding the foundation of a nation’s legal framework.

Year 10 The Senior Phase Session 3 Term 1.pptx

Year 10 The Senior Phase Session 3 Term 1.pptxmansk2

Ã˝

Year 10 The Senior Phase Session 3 Term 1.pptxPrinciple and Practices of Animal Breeding || Boby Basnet

Principle and Practices of Animal Breeding || Boby BasnetBoby Basnet

Ã˝

Principle and Practices of Animal Breeding Full Note

|| Assistant Professor Boby Basnet ||IAAS || AFU || PU || FUDatabase population in Odoo 18 - Odoo slides

Database population in Odoo 18 - Odoo slidesCeline George

Ã˝

In this slide, we’ll discuss the database population in Odoo 18. In Odoo, performance analysis of the source code is more important. Database population is one of the methods used to analyze the performance of our code. NLP DLforDS

- 1. Deep Learning for Dialogue Systems. Liangqun Lu, PhD program in Biology/Bioinformatics; MS program in Computer Science

- 2. JARVIS (Just Another Rather Very Intelligent System) "J.A.R.V.I.S., are you up?" "For you sir, always." "J.A.R.V.I.S.? You ever hear the tale of Jonah?" "I wouldn't consider him a role model." "J.A.R.V.I.S., where's my flight power?!" "Working on it, sir. This is a prototype." https://www.youtube.com/watch?v=ZwOxM0-byvc

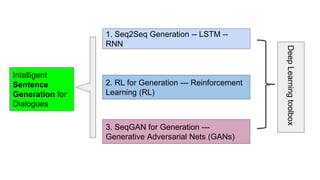

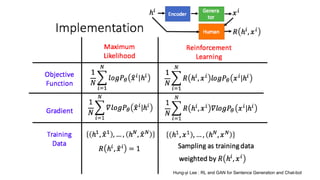

- 4. Intelligent Sentence Generation for Dialogues: the Deep Learning toolbox. 1. Seq2Seq Generation: RNN, LSTM 2. RL for Generation: Reinforcement Learning (RL) 3. SeqGAN for Generation: Generative Adversarial Nets (GANs)

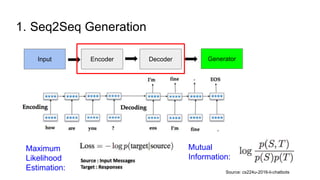

- 5. 1. Seq2Seq Generation (source: cs224u-2016-li-chatbots). Figure: Input → Encoder → Decoder (Generator). Objectives: Maximum Likelihood Estimation (MLE) and Mutual Information (MI).
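The MLE and MI objectives on this slide were images and did not survive extraction. Assuming the slide follows Jiwei Li et al.'s 2016 diversity-promoting objective (the cited cs224u lecture is his), they can be written as below, with S the input message, T a candidate response, and λ a tunable weight. With λ = 1 the second objective is the pointwise mutual information between S and T, because log p(T|S) - log p(T) = log [p(S,T) / (p(S) p(T))].

```latex
% MLE: choose the response that is most probable given the input
\hat{T} = \arg\max_{T} \; \log p(T \mid S)

% MMI (anti-LM form): penalize responses that are probable regardless of S
\hat{T} = \arg\max_{T} \; \bigl\{ \log p(T \mid S) - \lambda \log p(T) \bigr\}
```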

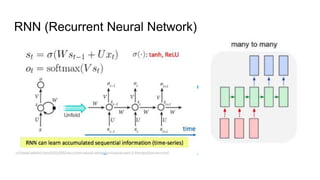

- 6. RNN (Recurrent Neural Network)

- 7. Long short-term memory (LSTM)

- 9. Seq2Seq encoder-decoder example in Keras: Encoder Model, Decoder Model
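The Keras code on this slide was an image. Below is a framework-free sketch of the same idea, assuming a toy vocabulary and random (untrained) weights: the encoder folds the input tokens into a final hidden state, and the decoder starts from that state (the role played by `initial_state` in a Keras decoder LSTM) and emits tokens greedily until an end token. It uses a plain tanh RNN cell rather than an LSTM to stay short; `encode`, `greedy_decode`, `BOS`, and `EOS` are hypothetical names.

```python
import math
import random

VOCAB = 6        # toy vocabulary: token ids 0..5 (assumption)
HIDDEN = 4       # hidden size (the Keras example in the notes used 256)
BOS, EOS = 0, 1  # hypothetical start/end token ids

rng = random.Random(0)

def matrix(rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

EMB = matrix(VOCAB, HIDDEN)                              # token embeddings
ENC_W, ENC_U = matrix(HIDDEN, HIDDEN), matrix(HIDDEN, HIDDEN)
DEC_W, DEC_U = matrix(HIDDEN, HIDDEN), matrix(HIDDEN, HIDDEN)
OUT = matrix(VOCAB, HIDDEN)                              # state -> vocabulary scores

def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def step(W, U, x, h):
    """tanh RNN cell: h' = tanh(W x + U h)."""
    return [math.tanh(a + b) for a, b in zip(matvec(W, x), matvec(U, h))]

def encode(tokens):
    h = [0.0] * HIDDEN
    for t in tokens:
        h = step(ENC_W, ENC_U, EMB[t], h)
    return h  # the final state summarizes the input sentence

def greedy_decode(h, max_len=10):
    out, prev = [], BOS
    for _ in range(max_len):
        h = step(DEC_W, DEC_U, EMB[prev], h)
        scores = matvec(OUT, h)
        prev = max(range(VOCAB), key=lambda i: scores[i])  # argmax token
        if prev == EOS:
            break
        out.append(prev)
    return out

response = greedy_decode(encode([2, 3, 4]))
print(response)  # arbitrary ids: the weights are random, not trained
```

In a trained model the decoder would be fit with teacher forcing on (input, response) pairs; only the data flow is shown here.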

- 10. Summaries ● The Seq2Seq model can generate output sentences from input sentences. ● The maximum likelihood estimation (MLE) objective does not guarantee responses that are good for human beings in the real world. ● It tends to generate dull, generic responses such as "I don't know" regardless of the input, which kills a conversation. ● Mutual Information (MI) can avoid ~30% of dull responses. ● Seq2Seq dialogue can also get stuck in an infinite loop of repetitive responses.
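MI is often applied as a reranking step: generate an N-best list with the MLE-trained model, then rescore each candidate T by log p(T|S) - λ log p(T). The sketch below assumes hypothetical log-probabilities for three candidates (invented for illustration, not produced by any model) and λ = 0.5, and shows how the generic reply wins under MLE but loses under MI.

```python
# Hypothetical scores: (response, log p(T|S), log p(T)). Illustrative numbers only.
candidates = [
    ("i don't know", -2.0, -1.5),                 # generic: likely under any input
    ("i'm fine, thanks for asking", -2.6, -6.0),
    ("blue", -5.0, -7.0),
]

def mle_score(c):
    _, log_p_t_given_s, _ = c
    return log_p_t_given_s

def mmi_score(c, lam=0.5):
    """Anti-LM MMI: penalize responses the language model finds likely on their own."""
    _, log_p_t_given_s, log_p_t = c
    return log_p_t_given_s - lam * log_p_t

best_mle = max(candidates, key=mle_score)[0]
best_mmi = max(candidates, key=mmi_score)[0]
print(best_mle)  # i don't know
print(best_mmi)  # i'm fine, thanks for asking
```

The MMI scores here are -1.25, 0.4, and -1.5, so the specific reply overtakes the generic one once its low unconditional probability is credited.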

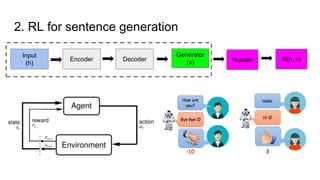

- 11. 2. RL for sentence generation. Figure: Input (h) → Encoder → Decoder (Generator) → response (x); a Human scores the pair with reward R(h, x).
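A reward R(h, x) like this is typically optimized with a policy-gradient (REINFORCE) update: sample a response x for input h, receive the reward, and raise the log-probability of x in proportion to the reward minus a baseline. The sketch assumes a toy policy choosing among three fixed responses for one input and a hypothetical `reward` function standing in for the human; it is an illustration of the technique, not the training code of any cited paper.

```python
import math
import random

responses = ["i don't know", "sure, see you at noon", "what?"]
logits = [0.0, 0.0, 0.0]          # policy parameters for one fixed input h

def reward(h, x):
    """Hypothetical stand-in for the human judge R(h, x)."""
    return 1.0 if x == "sure, see you at noon" else -0.5

def probs(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

rng = random.Random(0)
h = "want to grab lunch?"
lr, baseline = 0.1, 0.0
for _ in range(500):
    p = probs(logits)
    i = rng.choices(range(len(responses)), weights=p)[0]   # sample x ~ pi(. | h)
    r = reward(h, responses[i])
    advantage = r - baseline
    # d log softmax_i / d logit_j = 1[j == i] - p[j]
    for j in range(len(logits)):
        logits[j] += lr * advantage * ((1.0 if j == i else 0.0) - p[j])
    baseline = 0.9 * baseline + 0.1 * r                     # running-average baseline

final = probs(logits)
print(responses[final.index(max(final))])  # sure, see you at noon
```

The baseline does not change the expected gradient; it only reduces variance, which matters much more when x is a full token sequence rather than one of three choices.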

- 12. Hung-yi Lee : RL and GAN for Sentence Generation and Chat-bot

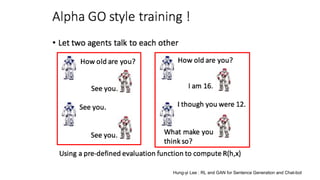

- 13. Hung-yi Lee : RL and GAN for Sentence Generation and Chat-bot

- 14. Hung-yi Lee : RL and GAN for Sentence Generation and Chat-bot

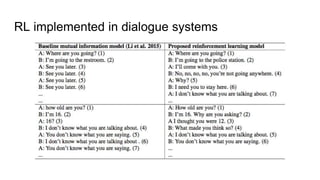

- 15. RL implemented in dialogue systems

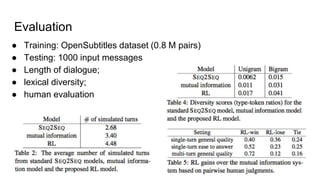

- 16. Evaluation ● Training: OpenSubtitles dataset (0.8M pairs) ● Testing: 1,000 input messages ● Metrics: length of dialogue, lexical diversity, human evaluation
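Lexical diversity in this line of work is commonly reported as distinct-n: the number of distinct n-grams (unigrams, bigrams) across generated responses, divided by the total number of generated tokens so that longer outputs are not automatically favored. A minimal sketch, assuming simple whitespace tokenization:

```python
def distinct_n(responses, n):
    """Distinct n-grams across all responses, scaled by total generated tokens."""
    ngrams = set()
    total_tokens = 0
    for r in responses:
        tokens = r.split()            # assumption: whitespace tokenization
        total_tokens += len(tokens)
        ngrams.update(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return len(ngrams) / total_tokens if total_tokens else 0.0

dull = ["i don't know", "i don't know", "i don't know"]
varied = ["i don't know", "see you at noon", "the train was late"]
print(distinct_n(dull, 1), distinct_n(varied, 1))  # roughly 0.333 and 1.0
```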

- 17. Summaries ● Reinforcement learning applied to dialogue generation rewards conversations that have three properties: informativity, coherence, and ease of answering ● The model has advantages in diversity and dialogue length, is preferred by human judges, and gives more interactive responses ● This approach makes it possible to generate long-term dialogues

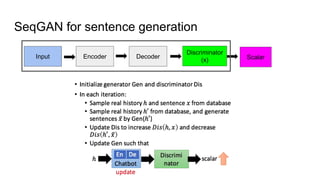

- 18. 3. SeqGAN for sentence generation

- 20. SeqGAN for sentence generation. Figure: Input → Encoder → Decoder; the Discriminator takes a sentence (x) and outputs a scalar.

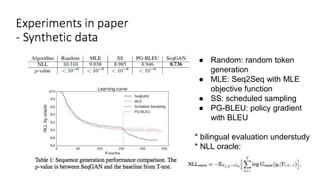

- 23. ● Random: random token generation ● MLE: Seq2Seq trained with the MLE objective function ● SS: scheduled sampling ● PG-BLEU: policy gradient with BLEU (bilingual evaluation understudy) as the reward ● Evaluation metric: NLL-oracle
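The NLL-oracle formula was an image on the slide. In the SeqGAN paper's synthetic experiment, a randomly initialized LSTM acts as an oracle G_oracle for the true data distribution, and sequences sampled from the trained generator G_θ are scored by their negative log-likelihood under that oracle (lower is better):

```latex
\mathrm{NLL}_{\text{oracle}} = -\,\mathbb{E}_{Y_{1:T} \sim G_\theta}\Bigl[\sum_{t=1}^{T} \log G_{\text{oracle}}\bigl(y_t \mid Y_{1:t-1}\bigr)\Bigr]
```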

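- 24. ● The stability of SeqGAN depends on the training strategy: the number of generator steps (g-steps), the number of discriminator steps (d-steps), and the number of epochs k the discriminator is trained in each d-step ● g-steps=1, d-steps=5, k=3 gave the best performance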
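The g-steps / d-steps / k schedule can be sketched as the outer loop below, following our reading of Algorithm 1 in the SeqGAN paper: each round runs g-steps policy-gradient updates of the generator, then d-steps rounds of sampling fresh negatives and training the discriminator for k epochs. The three update functions are hypothetical placeholders that only count calls.

```python
def train_seqgan(rounds, g_steps=1, d_steps=5, k=3):
    """Outer SeqGAN loop; the update functions are hypothetical stubs that count calls."""
    log = {"generator_updates": 0, "negative_samplings": 0, "discriminator_epochs": 0}

    def update_generator():            # placeholder: sample Y ~ G, compute rewards, policy-gradient step
        log["generator_updates"] += 1

    def sample_negatives():            # placeholder: generate sequences with the current G
        log["negative_samplings"] += 1

    def train_discriminator_epoch():   # placeholder: one epoch on real (positive) vs generated (negative)
        log["discriminator_epochs"] += 1

    for _ in range(rounds):
        for _ in range(g_steps):
            update_generator()
        for _ in range(d_steps):
            sample_negatives()
            for _ in range(k):
                train_discriminator_epoch()
    return log

print(train_seqgan(rounds=10))
# {'generator_updates': 10, 'negative_samplings': 50, 'discriminator_epochs': 150}
```

With the best setting on the slide (g-steps=1, d-steps=5, k=3), the discriminator receives 15 epochs of training for every generator update.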

- 25. ● Table 2: 16,394 Chinese quatrains ● Table 3: 11,092 paragraphs ● Table 4: 695 pieces of music

- 26. Summaries ● A Generative Adversarial Net (GAN), which uses a discriminative model to guide the training of the generative model, has enjoyed considerable success in generating real-valued data. ● SeqGAN, which uses policy gradient to pass the discriminative model's signal back to the generative model, shows significant improvements on synthetic and real-world data.
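Because the discriminator only scores complete sequences, SeqGAN obtains a reward for each intermediate token by Monte Carlo search: from the prefix Y_{1:t}, roll out the rest of the sequence N times with a rollout policy and average the discriminator's scores; at the last step the reward is D(Y_{1:T}) itself. The sketch assumes a uniform-random rollout policy and a hypothetical `toy_discriminator` in place of trained models.

```python
import random

VOCAB = ["a", "b", "c"]
T = 4                       # fixed sequence length
rng = random.Random(42)

def rollout(prefix):
    """Complete a prefix to length T with a stand-in generator (uniform random tokens)."""
    return prefix + [rng.choice(VOCAB) for _ in range(T - len(prefix))]

def toy_discriminator(seq):
    """Hypothetical D: a score in [0, 1], here the fraction of 'a' tokens."""
    return seq.count("a") / len(seq)

def token_rewards(seq, n_rollouts=16):
    """Q for each position t: mean D over rollouts from seq[:t], or D(seq) at t = T."""
    rewards = []
    for t in range(1, T + 1):
        if t < T:
            q = sum(toy_discriminator(rollout(seq[:t])) for _ in range(n_rollouts)) / n_rollouts
        else:
            q = toy_discriminator(seq)
        rewards.append(q)
    return rewards

print(token_rewards(["a", "a", "b", "c"]))
```

In the full method, each Q value weights the gradient of log G(y_t | Y_{1:t-1}) in the generator's policy-gradient update.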

- 27. References 1. Li, Jiwei, et al. "Deep reinforcement learning for dialogue generation." arXiv preprint arXiv:1606.01541 (2016). 2. Yu, Lantao, et al. "SeqGAN: Sequence Generative Adversarial Nets with Policy Gradient." (2016). 3. Stanford CS224d: Deep Learning for Natural Language Processing. 4. DL/ML Tutorial from Hung-yi Lee.

Editor's Notes

- #3: My interest in this topic actually comes from the Iron Man movies. In them, Tony Stark has an intelligent assistant called JARVIS, and the two have many interesting conversations. It would be a pleasure to have such a smart virtual friend.

- #4: Deep learning techniques have been applied successfully in many areas, including natural language processing. These two papers from two years ago played an important role in dialogue systems, bringing in advanced techniques from RL and GANs.

- #5: In my understanding, the deep learning toolbox provides tools that can be applied to dialogue in at least these three ways, and several intelligent sentence-generation methods for dialogue have already come out of these techniques.

- #6: In seq2seq generation, the simplified architecture looks like this one; here is an example. There are two optimization objectives in this system: MLE and MI.

- #7: The seq2seq model is based on an RNN with LSTM cells. An RNN (recurrent neural network) processes a sequence one step at a time while carrying a hidden state; its folded structure shows just an input and an output. Unfolding shows the details from xt to ot: at each step the inputs are xt and s(t-1), and the outputs are ot and st, where s(t-1) records information from previous steps, which is important in sequence tasks. Compared with other DL models, the advantage of an RNN is that it is suited to processing sequence data.
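The unfolded recurrence in this note can be written as s_t = tanh(U x_t + W s_{t-1}) and o_t = softmax(V s_t). The U/W/V names and the tanh activation are assumptions (the common textbook form), since the slide's figure labels did not survive. A pure-Python sketch with small random weights:

```python
import math
import random

def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def softmax(z):
    mx = max(z)
    e = [math.exp(v - mx) for v in z]
    s = sum(e)
    return [v / s for v in e]

def rnn_forward(xs, U, W, V):
    """Run s_t = tanh(U x_t + W s_{t-1}), o_t = softmax(V s_t) over a sequence."""
    s = [0.0] * len(W)                 # s_0: zero initial state
    outputs = []
    for x in xs:
        s = [math.tanh(a + b) for a, b in zip(matvec(U, x), matvec(W, s))]
        outputs.append(softmax(matvec(V, s)))
    return outputs, s

rng = random.Random(1)

def rand_matrix(rows, cols):
    return [[rng.gauss(0, 0.5) for _ in range(cols)] for _ in range(rows)]

IN, HID, OUT = 3, 4, 2
U, W, V = rand_matrix(HID, IN), rand_matrix(HID, HID), rand_matrix(OUT, HID)
xs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]   # three one-hot inputs
outs, final_state = rnn_forward(xs, U, W, V)
print(len(outs), [round(sum(o), 6) for o in outs])  # 3 [1.0, 1.0, 1.0]
```

The same W is reused at every step, which is what lets the network handle sequences of any length.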

- #8: However, an RNN suffers from exploding or vanishing gradients when the sequence is long, because training has to backpropagate through the memory of every previous step. The LSTM was developed to address this memory problem with three gates (input, forget, and output) in each cell.
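One LSTM step with the three gates named here, plus the candidate cell content, can be sketched as follows. This is the standard formulation without peephole connections; the weight layout (one matrix per gate acting on the concatenation [h_{t-1}; x_t]) is an assumption chosen for readability. The additive update c_t = f * c_{t-1} + i * g is what lets information and gradients survive across many steps.

```python
import math
import random

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def affine(Wm, b, v):
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(Wm, b)]

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM step; params maps gate name -> (W, b), with W acting on [h_prev; x]."""
    z = h_prev + x                                            # concatenation [h_{t-1}; x_t]
    f = [sigmoid(v) for v in affine(*params["forget"], z)]    # what to keep from c_{t-1}
    i = [sigmoid(v) for v in affine(*params["input"], z)]     # how much new content to write
    o = [sigmoid(v) for v in affine(*params["output"], z)]    # how much of the cell to expose
    g = [math.tanh(v) for v in affine(*params["cell"], z)]    # candidate content
    c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c_prev, i, g)]
    h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
    return h, c

rng = random.Random(0)
HID, IN = 3, 2

def gate():
    return ([[rng.gauss(0, 0.5) for _ in range(HID + IN)] for _ in range(HID)], [0.0] * HID)

params = {name: gate() for name in ("forget", "input", "output", "cell")}
h, c = [0.0] * HID, [0.0] * HID
for x in ([1.0, 0.0], [0.0, 1.0], [1.0, 1.0]):
    h, c = lstm_step(x, h, c, params)
print([round(v, 3) for v in h])
```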

- #9: Encoder and decoder: together they form a function used to model this complex input-to-response mapping.

- #10: An encoder-decoder example from Keras shows the parameters of each layer. The encoder and decoder have the same number of LSTM units, 256.

- #20: Evaluating dialogue systems is difficult. Metrics such as BLEU (Papineni et al., 2002) and perplexity have been widely used for dialogue quality evaluation (Li et al., 2016a; Vinyals and Le, 2015; Sordoni et al., 2015), but it is widely debated how well these automatic metrics are correlated with true response quality (Liu et al., 2016; Galley et al., 2015). Since the goal of the proposed system is not to predict the highest probability response, but rather the long-term success of the dialogue, we do not employ BLEU or perplexity for evaluation.

- #21: We propose to measure the ease of answering a generated turn by using the negative log likelihood of responding to that utterance with a dull response.
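Concretely, the paper defines this reward as r1 = -(1/N_S) Σ_{s∈S} (1/N_s) log p_seq2seq(s | a), where S is a hand-picked list of dull responses, N_S is its size, N_s is the number of tokens in s, and a is the generated turn. A sketch with a hypothetical `toy_log_prob` standing in for the seq2seq model's scoring function:

```python
def ease_of_answering(action, dull_responses, log_prob_fn):
    """r1 = -(1/N_S) * sum over dull s of (1/N_s) * log p(s | action).

    log_prob_fn(s, action) must return the total log-probability of response s
    given the turn `action` (hypothetical model interface).
    """
    total = 0.0
    for s in dull_responses:
        n_tokens = len(s.split())
        total += log_prob_fn(s, action) / n_tokens
    return -total / len(dull_responses)

DULL = ["i don't know what you are talking about", "i have no idea"]

def toy_log_prob(s, action):
    # Hypothetical model: a vague turn makes dull replies likely (high log-prob),
    # a specific turn makes them unlikely.
    per_token = -0.5 if action == "what?" else -3.0
    return per_token * len(s.split())

print(ease_of_answering("what?", DULL, toy_log_prob))                                  # 0.5
print(ease_of_answering("shall we meet at noon by the station?", DULL, toy_log_prob))  # 3.0
```

A higher r1 means dull replies are less likely to follow the generated turn, so the agent is pushed toward turns that are easier to answer substantively.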

- #36: Li, Jiwei, et al. "Deep reinforcement learning for dialogue generation." arXiv preprint arXiv:1606.01541 (2016). Yu, Lantao, et al. "SeqGAN: Sequence Generative Adversarial Nets with Policy Gradient" (2016)