NYC Hadoop Meetup: Introduction to HBase

- 1. Introduction to HBase • NYC Hadoop Meetup • Jonathan Gray • February 11, 2010

- 2. About Me • Jonathan Gray – HBase Committer – HBase User since early 2008 – Migrated large PostgreSQL instance to HBase • In production @ streamy.com since June 2008 – Core contributor to performance improvements in HBase 0.20 – Currently consulting around HBase • As well as Hadoop/MR and Lucene/Katta

- 3. Overview • Why HBase? • What is HBase? • How does HBase work? • HBase Today and Tomorrow • HBase vs. RDBMS Example • HBase and “NoSQL”

- 4. Why HBase? • Same reasons we need Hadoop – Datasets growing into terabytes and petabytes – Scaling out is cheaper than scaling up • Continue to grow just by adding commodity nodes • But sometimes Hadoop is not enough – Need to support random reads and random writes • Traditional databases are expensive to scale and difficult to distribute

- 5. What is HBase? • Distributed • Column-Oriented • Multi-Dimensional • High-Availability • High-Performance • Storage System • Project Goal: Billions of Rows * Millions of Columns * Thousands of Versions; petabytes across thousands of commodity servers

- 6. HBase is not… • A Traditional SQL Database – No joins, no query engine, no types, no SQL – Transactions and secondary indexing possible but these are add-ons, not part of core HBase • A drop-in replacement for your RDBMS • You must be OK with RDBMS anti-schema – Denormalized data – Wide and sparsely populated tables • Just say “no” to your inner DBA

- 7. How does HBase work? • Two types of HBase nodes: Master and RegionServer • Master (one at a time) – Manages cluster operations • Assignment, load balancing, splitting – Not part of the read/write path – Highly available with ZooKeeper and standbys • RegionServer (one or more) – Hosts tables; performs reads, buffers writes – Clients talk directly to them for reads/writes

- 8. HBase Tables • An HBase cluster is made up of any number of user-defined tables • Table schema only defines its column families – Each family consists of any number of columns – Each column consists of any number of versions – Columns only exist when inserted, NULLs are free – Everything except table/family names is byte[] – Rows in a table are sorted and stored sequentially – Columns in a family are sorted and stored sequentially • (Table, Row, Family, Column, Timestamp) → Value

- 9. HBase Table as Data Structures • A table maps rows to its families – SortedMap(Row → List(ColumnFamilies)) • A family maps column names to versioned values – SortedMap(Column → SortedMap(VersionedValues)) • A column maps timestamps to values – SortedMap(Timestamp → Value) • An HBase table is a three-dimensional sorted map (row, column, and timestamp)
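
The nested-map view on this slide can be made concrete with a small in-memory model. The sketch below is only an illustration in plain Python, not the HBase API: the class `ToyTable` and its methods are hypothetical names, sorting is done at scan time instead of in a real sorted map, and the newest-first ordering of versions is an assumption of the model.

```python
class ToyTable:
    """Toy model: row -> family -> column -> {timestamp: value}."""

    def __init__(self, families):
        # The schema declares only column families; columns appear on insert.
        self.families = set(families)
        self.rows = {}

    def put(self, row, family, column, timestamp, value):
        if family not in self.families:
            raise KeyError(f"unknown column family: {family!r}")
        versions = (
            self.rows.setdefault(row, {})
            .setdefault(family, {})
            .setdefault(column, {})
        )
        versions[timestamp] = value

    def get(self, row, family, column):
        """Return the newest value of a cell, or None if the cell is absent."""
        versions = self.rows.get(row, {}).get(family, {}).get(column)
        if not versions:
            return None
        return versions[max(versions)]

    def scan(self):
        """Yield (row, family, column, timestamp, value) with rows and
        columns ascending and timestamps newest first (model assumption)."""
        for row in sorted(self.rows):
            for family in sorted(self.rows[row]):
                for column in sorted(self.rows[row][family]):
                    versions = self.rows[row][family][column]
                    for ts in sorted(versions, reverse=True):
                        yield (row, family, column, ts, versions[ts])
```

Because absent columns are simply missing keys, a sparse row costs nothing for the columns it lacks, which is the sense in which "NULLs are free".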

- 10. HBase Regions • Table is made up of any number of regions • Region is specified by its startKey and endKey – Empty table: (Table, NULL, NULL) – Two-region table: (Table, NULL, “MidKey”) and (Table, “MidKey”, NULL) • A region only lives on one RegionServer at a time • Each region may live on a different node and is made up of several HDFS files and blocks, each of which is replicated by Hadoop
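
To illustrate how a row key maps to a region, here is a minimal sketch in plain Python. It assumes that the start key is inclusive and the end key exclusive, and it uses the empty byte string `b""` in place of NULL. `Region`, `find_region`, `split`, and the server names are hypothetical, chosen only for this example.

```python
import bisect


class Region:
    def __init__(self, start_key, end_key, server):
        self.start_key = start_key  # inclusive; b"" stands in for NULL (open start)
        self.end_key = end_key      # exclusive; b"" stands in for NULL (open end)
        self.server = server        # hypothetical RegionServer name


def find_region(regions, row):
    """Return the region holding `row`.

    `regions` must be sorted by start_key and cover the whole key space,
    so the first start_key is always b"".
    """
    starts = [r.start_key for r in regions]
    # Rightmost region whose start_key <= row.
    idx = bisect.bisect_right(starts, row) - 1
    return regions[idx]


def split(region, mid_key, new_server):
    """Split one region into two adjacent regions at mid_key."""
    if mid_key <= region.start_key:
        raise ValueError("mid_key must be greater than the region start key")
    if region.end_key != b"" and mid_key >= region.end_key:
        raise ValueError("mid_key must be less than the region end key")
    left = Region(region.start_key, mid_key, region.server)
    right = Region(mid_key, region.end_key, new_server)
    return left, right
```

Splitting the single region of an empty table at `b"MidKey"` yields the two-region layout from the slide: `(NULL, "MidKey")` and `("MidKey", NULL)`.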

- 11. More HBase Architecture • Region information and locations stored in special tables called catalog tables – -ROOT- table contains location of .META. table – .META. table contains schema/locations of user regions • Location of -ROOT- is stored in ZooKeeper – This is the “bootstrap” location • ZooKeeper is used for coordination / monitoring – Leader election to decide who is master – Ephemeral nodes to detect RegionServer node failures
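
The bootstrap chain on this slide (ZooKeeper, then -ROOT-, then .META., then the user region) can be sketched with plain dictionaries. Everything below is a toy stand-in: the key `"root-region-server"`, the server addresses, and the table `crawl` are hypothetical values used only to show the order of the lookups.

```python
import bisect

# Toy stand-in for ZooKeeper: holds only the location of the -ROOT- region.
zookeeper = {"root-region-server": "rs1:60020"}

# Toy stand-in for what each server hosts (addresses are hypothetical).
servers = {
    # -ROOT- records where the .META. table lives.
    "rs1:60020": {"-ROOT-": {".META.": "rs2:60020"}},
    # .META. records, per user table, (start_key, server) pairs sorted by start_key.
    "rs2:60020": {
        ".META.": {"crawl": [(b"", "rs3:60020"), (b"MidKey", "rs4:60020")]}
    },
}


def locate(table, row):
    """Return the address of the server hosting `row` of `table`."""
    root_server = zookeeper["root-region-server"]           # 1. bootstrap from ZooKeeper
    meta_server = servers[root_server]["-ROOT-"][".META."]  # 2. -ROOT- points at .META.
    regions = servers[meta_server][".META."][table]         # 3. .META. lists user regions
    starts = [start for start, _ in regions]
    idx = bisect.bisect_right(starts, row) - 1              # rightmost start_key <= row
    return regions[idx][1]
```

After the final step the client talks to that server directly, which matches the earlier point that the Master is not on the read/write path.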

- 14. HBase Key Features • Automatic partitioning of data – As data grows, it is automatically split up • Transparent distribution of data – Load is automatically balanced across nodes • Tables are ordered by row, rows by column – Designed for efficient scanning (not just gets) – Composite keys allow ORDER BY / GROUP BY • Server-side filters • No SPOF because of ZooKeeper integration
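
As one illustration of "composite keys allow ORDER BY / GROUP BY", the sketch below builds row keys from a user name plus an inverted timestamp, so that a prefix scan over sorted rows returns one user's events newest first. This is plain Python over a sorted list, not the HBase client. The key layout, `make_key`, and `prefix_scan` are assumptions made for the example, and the layout assumes user names contain no zero byte.

```python
import bisect
import struct

MAX_TS = 2**63 - 1


def make_key(user, timestamp):
    """Composite row key: user, a zero-byte separator, then an inverted
    big-endian timestamp so that newer events sort first."""
    return user.encode("utf-8") + b"\x00" + struct.pack(">Q", MAX_TS - timestamp)


def prefix_scan(sorted_rows, prefix):
    """Seek to the first key >= prefix, then read until the prefix stops matching.

    `sorted_rows` is a list of (key, value) pairs sorted by key.
    """
    keys = [k for k, _ in sorted_rows]
    i = bisect.bisect_left(keys, prefix)
    result = []
    while i < len(sorted_rows) and sorted_rows[i][0].startswith(prefix):
        result.append(sorted_rows[i])
        i += 1
    return result


rows = sorted([
    (make_key("bob", 100), "b1"),
    (make_key("alice", 100), "a-old"),
    (make_key("alice", 200), "a-new"),
])
alice_events = [value for _, value in prefix_scan(rows, b"alice\x00")]
```

The grouping (all of one user's rows are adjacent) and the ordering (newest first) both come from the sort order of the key itself, so no separate index or sort step is needed.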

- 15. HBase Key Features (cont) • Fast adding/removing of nodes while online – Moving locations of data doesn't move data • Supports creating/modifying tables online – Both table-level and family-level configuration parameters • Close ties with Hadoop MapReduce – TableInputFormat/TableOutputFormat – HFileOutputFormat

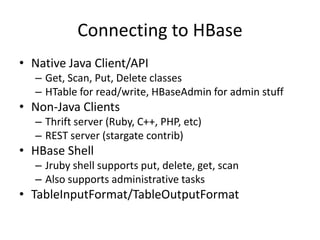

- 16. Connecting to HBase • Native Java Client/API – Get, Scan, Put, Delete classes – HTable for read/write, HBaseAdmin for admin stuff • Non-Java Clients – Thrift server (Ruby, C++, PHP, etc.) – REST server (stargate contrib) • HBase Shell – JRuby shell supports put, delete, get, scan – Also supports administrative tasks • TableInputFormat/TableOutputFormat

- 17. HBase Add-ons • MapReduce / Cascading / Hive / Pig – Support for HBase as a data source or sink • Transactional HBase – Distributed transactions using OCC • Indexed HBase – Utilizes Transactional HBase for secondary indexing • IHBase – New contrib for in-memory secondary indexes • HBql – SQL syntax on top of HBase

- 18. HBase Today • Latest stable release is HBase 0.20.3 – Major improvement over HBase 0.19 – Focus on performance improvement – Add ZooKeeper, remove SPOF – Expansion of in-memory and caching capabilities – Compatible with Hadoop 0.20.x – Recommend upgrading from earlier 0.20.x HBase releases as 0.20.3 includes some important fixes • Improves logging, shell, faster cluster ops, stability

- 19. HBase in Production • Streamy • StumbleUpon • Adobe • Meetup • Ning • Openplaces • Powerset • SocialMedia.com • TrendMicro

- 20. The Future of HBase • Next release is HBase 0.21.0 – Release date will be ~1 month after Hadoop 0.21 • Data durability is fixed in this release – HDFS append/sync finally works in Hadoop 0.21 – This is implemented and working on TRUNK – Have added group commit and knobs to adjust • Other cool features – Inter-cluster replication – Master Rewrite – Parallel Puts – Co-processors

- 21. HBase Web Crawl Example • Store web crawl data – Table crawl with family content – Row is URL with columns • content:data stores raw crawled data • content:language stores HTTP language header • content:type stores HTTP content-type header – If processing raw data for hyperlinks and images, add families links and images • links:<url> column for each hyperlink • images:<url> column for each image

- 22. Web Crawl Example in RDBMS • How would this look in a traditional DB? – Table crawl with columns url, data, language, and type – Table links with columns url and link – Table images with columns url and image • How will this scale? – 10M documents w/ avg. 10 links and 10 images – 210M total rows versus 10M total rows – Index bloat with links/images tables
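
The 210M versus 10M figures follow directly from the numbers on the slide. A short sketch of the arithmetic in Python (the function name `row_counts` is only for this example):

```python
def row_counts(documents, avg_links, avg_images):
    """Return (rdbms_rows, hbase_rows) for the crawl example.

    RDBMS: one row per document in crawl, plus one row per link in links
    and one row per image in images.
    HBase: one row per URL; links and images become columns of that row.
    """
    rdbms_rows = documents + documents * avg_links + documents * avg_images
    hbase_rows = documents
    return rdbms_rows, hbase_rows


rdbms_rows, hbase_rows = row_counts(10_000_000, avg_links=10, avg_images=10)
# rdbms_rows == 210_000_000 (10M + 100M + 100M), hbase_rows == 10_000_000
```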

- 23. What is “NoSQL”? • Has little to do with not being SQL – SQL is just a query language standard – HBql is an attempt to add SQL syntax to HBase – Millions are trained in SQL; resistance is futile! • Popularity of Hive and Pig over raw MapReduce • Has more to do with anti-RDBMS architecture – Dropping the relational aspects – Loosening ACID and transactional elements

- 24. NoSQL Types and Projects • Column-oriented – HBase, Cassandra, Hypertable • Key/Value – BerkeleyDB, Tokyo, Memcache, Redis, SimpleDB • Document – CouchDB, MongoDB • Other differentiators as well… – Strong vs. Eventual consistency – Database replication vs. Filesystem replication

![Slide 8: HBase Tables](https://image.slidesharecdn.com/nychadoopmeetup-introductiontohbase-100928233306-phpapp02/85/Nyc-hadoop-meetup-introduction-to-h-base-8-320.jpg)