Pipelining cache

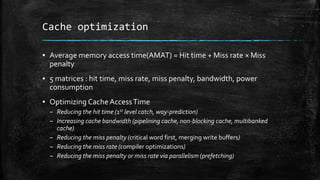

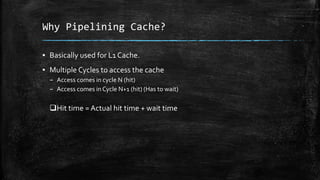

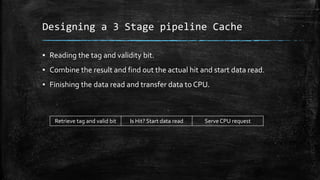

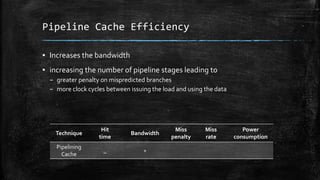

Pipelining the cache can improve performance by reducing the effective cache hit time. Part of a request's hit time is spent waiting for earlier accesses to release the cache, and pipelining shortens that wait. It does this by breaking each cache access into multiple stages, so a new request can enter the pipeline while earlier requests are still in progress. For example, a three-stage pipeline could work as follows: 1) read the tag and valid bit, 2) determine whether there is a hit and start reading the data, and 3) finish the data read and return the value to the CPU. Pipelining increases cache bandwidth, but it also adds pipeline stages. That raises the branch-misprediction penalty and lengthens the latency between issuing a load and using its data. Overall, the goal is to lower the average memory access time (AMAT).
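The trade-off in the paragraph above can be made concrete with a small cycle-level model. The sketch below is illustrative only and does not come from the slides. It assumes every access hits, each of the three stages takes exactly one cycle, and requests are served in arrival order. The helpers `simulate` and `amat` are hypothetical names. `avg_hit_time` counts both the cycles a request spends waiting for the cache and the cycles it spends inside it.

```python
from dataclasses import dataclass


@dataclass
class Result:
    finish_cycles: list[int]  # cycle at which each request's data is returned
    avg_hit_time: float       # mean cycles from arrival to data return, waiting included


def simulate(arrivals: list[int], stages: int = 3, pipelined: bool = True) -> Result:
    """Toy model of back-to-back cache hits (hypothetical, all accesses hit).

    Stage 1: read tag + valid bit; stage 2: check hit, start data read;
    stage 3: finish data read, return value to the CPU. One cycle per stage.
    """
    finish = []
    next_free = 0  # earliest cycle the cache can accept a new request
    for arrival in arrivals:
        start = max(arrival, next_free)  # wait if the cache is still busy
        done = start + stages
        finish.append(done)
        # A pipelined cache frees stage 1 after one cycle; an unpipelined
        # cache stays busy until the whole access has completed.
        next_free = start + 1 if pipelined else done
    avg = sum(f - a for f, a in zip(finish, arrivals)) / len(arrivals)
    return Result(finish, avg)


def amat(hit_time: float, miss_rate: float, miss_penalty: float) -> float:
    """Average memory access time = hit time + miss rate * miss penalty."""
    return hit_time + miss_rate * miss_penalty


if __name__ == "__main__":
    arrivals = [0, 1, 2, 3]  # one request per cycle (illustrative)
    for pipelined in (False, True):
        r = simulate(arrivals, pipelined=pipelined)
        label = "pipelined" if pipelined else "unpipelined"
        print(label, r.finish_cycles, r.avg_hit_time)
        # unpipelined [3, 6, 9, 12] 6.0
        # pipelined [3, 4, 5, 6] 3.0
        # Hypothetical miss rate (5%) and miss penalty (100 cycles):
        print("  AMAT:", amat(r.avg_hit_time, 0.05, 100))
```

With one request arriving per cycle, each request in the unpipelined cache waits for the previous one to finish, so the average effective hit time grows to 6 cycles for four requests. The pipelined version holds it at the 3-cycle stage count and returns one result per cycle once the pipeline is full. The model leaves out pipeline-register overhead and the extra load-use latency mentioned above, so it overstates the benefit somewhat.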

Similar to Pipelining cache (20)

Pipelining slides

Pipelining slides PrasantaKumarDash2

?

The document discusses the basics of RISC instruction set architectures and pipelining in CPUs. It begins by describing properties of RISC ISAs, including that operations apply to full registers, only load/store instructions affect memory, and instructions are typically one size. It then describes different types of RISC instructions like ALU, load/store, and branches. The document goes on to explain the implementation of a RISC pipeline in 5 stages and the concept of pipelining to improve CPU performance by overlapping instruction execution. It also discusses potential hazards that can degrade pipeline performance like structural, data, and control hazards.Coa.ppt2

Coa.ppt2PrasantaKumarDash2

?

The document discusses RISC instruction set basics and pipelining concepts. It begins by describing properties of RISC architectures, including that operations apply to full registers and only load/store instructions affect memory. It then describes different types of RISC instructions like ALU, load/store, and branches. The document goes on to explain the implementation of instructions in a MIPS64 pipeline with 5 stages: instruction fetch, decode/register fetch, execute, memory access, and write-back. It concludes by defining pipelining and describing how it can increase throughput by overlapping instruction execution.pipelining.pptx

pipelining.pptxMUNAZARAZZAQELEA

?

Pipelining is a technique used in processors to improve throughput by overlapping the execution of multiple instructions. It works by splitting instruction processing into discrete stages - such as fetch, decode, execute, and writeback - so that multiple instructions can be in different stages at the same time. This allows new instructions to begin processing every clock cycle rather than waiting for the full execution of the previous instruction. Pipelining provides the most performance improvement when all stages take the same amount of time. Hazards like long execution times or cache misses can cause the pipeline to stall and reduce its effectiveness.Computer-Architechture-Suggesion-book.pdf

Computer-Architechture-Suggesion-book.pdfticuhulo

?

The document explains the concept of pipeline in computing, detailing how tasks are divided into stages for simultaneous execution to enhance performance. It contrasts single and double pipeline architectures, emphasizing the advantages and disadvantages of each, and outlines the role of cache memory in speeding up data access. Overall, it provides insights into task processing techniques in computer architecture and their implications for performance and efficiency.Pipelining , structural hazards

Pipelining , structural hazardsMunaam Munawar

?

Pipelining is a technique used in advanced microprocessors allowing concurrent execution of multiple instructions across different stages, improving processing efficiency. It consists of stages such as instruction fetch, decode, execution, memory, and write back, enhancing throughput and performance. However, structural hazards may occur due to resource conflicts, which can be mitigated by stalling the pipeline or adding more hardware.CPU Pipelining and Hazards - An Introduction

CPU Pipelining and Hazards - An IntroductionDilum Bandara

?

Pipelining is a technique used in computer architecture to overlap the execution of instructions to increase throughput. It works by breaking down instruction execution into a series of steps and allowing subsequent instructions to begin execution before previous ones complete. This allows multiple instructions to be in various stages of completion simultaneously. Pipelining improves performance but introduces hazards such as structural, data, and control hazards that can reduce the ideal speedup if not addressed properly. Control hazards due to branches are particularly challenging to handle efficiently.Concept of Pipelining

Concept of PipeliningSHAKOOR AB

?

This document discusses instruction pipelining as a technique to improve computer performance. It explains that pipelining allows multiple instructions to be processed simultaneously by splitting instruction execution into stages like fetch, decode, execute, and write. While pipelining does not reduce the time to complete individual instructions, it improves throughput by allowing new instructions to begin processing before previous instructions have finished. The document outlines some challenges to achieving peak performance from pipelining, such as pipeline stalls from hazards like data dependencies between instructions. It provides examples of how data hazards can occur if the results of one instruction are needed by a subsequent instruction before they are available.Pipelining 16 computers Artitacher pdf

Pipelining 16 computers Artitacher pdfMadhuGupta99385

?

Pipelining is a technique where a microprocessor can begin executing the next instruction before finishing the previous one. It works by dividing instruction processing into discrete stages - fetch, decode, execute, memory, and write back. When an instruction enters one stage, the next instruction can enter the following stage so that multiple instructions are in different stages at the same time, improving efficiency. The pipeline allows for faster overall processing but hazards can occur if instructions depend on previous ones, disrupting the smooth flow.Instruction pipelining

Instruction pipeliningShoaib Commando

?

The document discusses instruction pipelining in computers. It begins with an analogy of pipelining laundry tasks to increase throughput. It then explains the concept of dividing instruction execution into stages (fetch, decode, execute, write) and executing instructions in parallel by having different stages work on different instructions. This allows higher instruction throughput. However, hazards like data dependencies between instructions, branches, cache misses can cause the pipeline to stall, reducing performance. Various techniques are discussed to handle hazards and maximize the benefits of pipelining.Introduction_pipeline24.ppt which include

Introduction_pipeline24.ppt which includeGauravDaware2

?

The document discusses various methods to increase computer speed, including parallelism, pipelining, and superscalar architecture. It explains concepts such as CPU time, performance metrics, pipeline hazards, and solutions to optimize instruction execution. Additionally, it covers advanced models like very long instruction word (VLIW) processors and instruction-level parallelism to enhance processing efficiency.pipelining

pipeliningSiddique Ibrahim

?

Pipelining is an essential technique in modern processors that enhances throughput by allowing multiple operations to be processed simultaneously, improving overall system performance. It involves breaking down sequential processes into stages that execute in dedicated clock cycles, similar to an assembly line, while pipeline performance is affected by hazards such as data, instruction, and structural dependencies. Effective pipelining requires sophisticated compilation techniques and the management of these hazards to optimize execution without increasing the time taken for individual tasks.Pipelining in computer architecture

Pipelining in computer architectureRamakrishna Reddy Bijjam

?

Pipelining is a technique used in modern processors to improve performance. It allows multiple instructions to be processed simultaneously using different processor components. This increases throughput compared to sequential processing. However, pipeline stalls can occur due to data hazards when instructions depend on each other, instruction hazards from branches or cache misses, or structural hazards when resources are needed simultaneously. Various techniques like forwarding, reordering, and branch prediction aim to reduce the impact of hazards on pipeline performance.Pipelining

PipeliningShubham Bammi

?

Pipelining is a technique used in modern processors to enhance performance by increasing throughput through simultaneous execution of multiple operations. It breaks instruction execution into stages, but requires careful management to avoid hazards like data and instruction stalls that can degrade performance. Pipelining's effectiveness relies on balancing pipeline stages and minimizing stalls to maintain high instruction throughput.Modern processors

Modern processorsgowrivageesan87

?

The document provides a comprehensive overview of modern cache-based microprocessor architecture, including concepts like pipelining, superscalarity, SIMD, and multithreaded processors. It discusses the performance limitations of CPUs, the importance of memory hierarchy, cache management, and strategies for efficient execution of instructions. Additionally, it introduces vector processors and their capability for executing multiple data operations simultaneously, along with the challenges in utilizing multicore CPUs effectively.Pipeline Computing by S. M. Risalat Hasan Chowdhury

Pipeline Computing by S. M. Risalat Hasan ChowdhuryS. M. Risalat Hasan Chowdhury

?

The document discusses pipeline computing and its various types and applications. It defines pipeline computing as a technique to decompose a sequential process into parallel sub-processes that can execute concurrently. There are two main types - linear and non-linear pipelines. Linear pipelines use a single reservation table while non-linear pipelines use multiple tables. Common applications of pipeline computing include instruction pipelines in CPUs, graphics pipelines in GPUs, software pipelines using pipes, and HTTP pipelining. The document also discusses implementations of pipeline computing and its advantages like reduced cycle time and increased instruction throughput.Computer architecture pipelining

Computer architecture pipeliningMazin Alwaaly

?

The document discusses pipelining in computer architecture, defining it as a technique for decomposing sequential processes into concurrent suboperations, akin to an assembly line. It elaborates on the advantages and disadvantages of pipelining, the types of pipelines (hardware and software), performance impacts due to hazards, and addressing modes, with a specific focus on RISC architecture. Additionally, it highlights the role of cache memory in enhancing pipelined execution efficiency and details issues like data and instruction hazards that can disrupt pipeline performance.week_2Lec02_CS422.pptx

week_2Lec02_CS422.pptxmivomi1

?




Pipelining cache

- 1. Pipelining Cache, by Riman Mandal

- 2. Contents
  - What is pipelining?
  - Cache optimization
  - Why pipeline the cache?
  - Cache hit and cache access
  - How to implement pipelining in a cache
  - Effects of cache pipelining
  - References

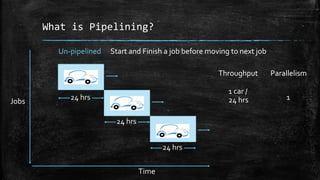

- 3. What is pipelining?
  - Un-pipelined: start and finish a job before moving to the next job.
  - Each car takes 24 hrs from start to finish, so throughput = 1 car / 24 hrs and parallelism = 1.

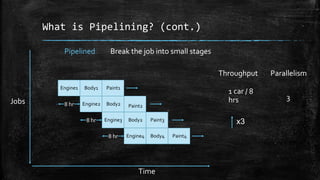

- 4. What is pipelining? (cont.)
  - Pipelined: break the job into small stages (Engine, Body, Paint), each taking 8 hrs.
  - Cars overlap in time: car 1 moves to Body while car 2 enters Engine, and so on (Engine1–4, Body1–4, Paint1–4).
  - Throughput = 1 car / 8 hrs (3× the un-pipelined rate) and parallelism = 3.
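The car-assembly timeline can be sketched in Python to show where the 1 car / 8 hrs throughput comes from. This is a minimal illustration that assumes three equal 8-hour stages on a single line. `schedule` and `total_hours` are hypothetical helper names and do not come from the slides.

```python
# Car-assembly analogy: three equal 8-hour stages, one car per stage at a time.
STAGES = ["Engine", "Body", "Paint"]
SLOT_HOURS = 8  # length of each stage, from the slide


def schedule(num_cars: int) -> list[list[str]]:
    """One row per 8-hour slot, naming the car in each stage ('-' = idle).

    Car c (1-based) enters the Engine stage in slot c - 1 and advances one
    stage per slot, so up to len(STAGES) cars are in progress at once.
    """
    num_slots = len(STAGES) + num_cars - 1
    rows = []
    for slot in range(num_slots):
        row = []
        for s, name in enumerate(STAGES):
            car = slot - s + 1  # the car occupying stage s during this slot
            row.append(f"{name}{car}" if 1 <= car <= num_cars else "-")
        rows.append(row)
    return rows


def total_hours(num_cars: int, pipelined: bool) -> int:
    """Time to finish num_cars cars with or without pipelining."""
    if pipelined:
        # fill the line once, then one car finishes every SLOT_HOURS
        return (len(STAGES) + num_cars - 1) * SLOT_HOURS
    # un-pipelined: each car goes through all stages (24 hrs) before the next starts
    return num_cars * len(STAGES) * SLOT_HOURS


for slot, row in enumerate(schedule(4)):
    print(f"hrs {slot * SLOT_HOURS:>2}-{(slot + 1) * SLOT_HOURS:>2}: {row}")
print("un-pipelined:", total_hours(4, pipelined=False), "hrs")
print("pipelined:   ", total_hours(4, pipelined=True), "hrs")
```

For four cars, the un-pipelined line takes 96 hrs and the pipelined one takes 48 hrs. Once the line is full, one car leaves the Paint stage every 8 hrs.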

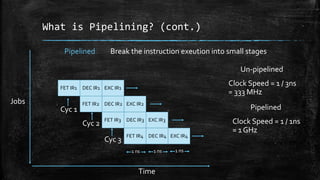

- 5. What is pipelining? (cont.)
  - Un-pipelined: start and finish one instruction's execution before moving to the next instruction.
  - Each instruction goes through fetch (FET), decode (DEC), and execute (EXE) in a single 3 ns cycle, so cycles 1, 2, and 3 each complete one instruction.

- 6. What is pipelining? (cont.)
  - Pipelined: break instruction execution into small stages (FET, DEC, EXE), each taking 1 ns.
  - Instructions IR1–IR4 overlap: while IR1 is being decoded, IR2 is being fetched, and so on.
  - Un-pipelined clock speed = 1 / 3 ns ≈ 333 MHz; pipelined clock speed = 1 / 1 ns = 1 GHz.
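The clock-speed arithmetic on this slide can be checked with a short sketch, which also computes the total time for a run of instructions. It assumes ideal, perfectly balanced 1 ns stages with no pipeline-register overhead and no hazards. The function names are illustrative.

```python
# Slide arithmetic: one 3 ns cycle per instruction (un-pipelined) versus
# three balanced 1 ns stages (pipelined). Assumes no latch overhead, no hazards.

def clock_mhz(cycle_ns: float) -> float:
    """Clock frequency in MHz for a cycle time given in nanoseconds."""
    return 1_000.0 / cycle_ns


def unpipelined_ns(n_instr: int, cycle_ns: float = 3.0) -> float:
    """Each instruction runs FET, DEC, EXE in one long cycle before the next starts."""
    return n_instr * cycle_ns


def pipelined_ns(n_instr: int, stages: int = 3, stage_ns: float = 1.0) -> float:
    """The first instruction needs `stages` cycles; each later one finishes one cycle after it."""
    return (stages + n_instr - 1) * stage_ns


print(f"{clock_mhz(3.0):.0f} MHz vs {clock_mhz(1.0):.0f} MHz")
for n in (4, 1000):
    t_un, t_pipe = unpipelined_ns(n), pipelined_ns(n)
    print(f"{n} instructions: {t_un:.0f} ns vs {t_pipe:.0f} ns, speedup {t_un / t_pipe:.2f}x")
```

With 1,000 instructions, the sketch gives 3,000 ns versus 1,002 ns. The speedup approaches the stage count (3×) as long as the pipeline stays full.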

- 7. Cache optimization
  - Average memory access time: $\text{AMAT} = \text{Hit time} + \text{Miss rate} \times \text{Miss penalty}$
  - Five metrics: hit time, miss rate, miss penalty, bandwidth, and power consumption.
  - Optimizing cache access time:
    - Reducing the hit time (small and simple first-level caches, way prediction)
    - Increasing cache bandwidth (pipelined caches, non-blocking caches, multibanked caches)
    - Reducing the miss penalty (critical word first, merging write buffers)
    - Reducing the miss rate (compiler optimizations)
    - Reducing the miss penalty or miss rate via parallelism (prefetching)
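The AMAT formula fits in a one-line function, which makes it easy to see how each optimization category moves the result. The hit time, miss rate, and miss penalty below are hypothetical values in clock cycles, not measurements.

```python
def amat(hit_time: float, miss_rate: float, miss_penalty: float) -> float:
    """Average memory access time = hit time + miss rate * miss penalty."""
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be a fraction between 0 and 1")
    return hit_time + miss_rate * miss_penalty


# Hypothetical baseline (clock cycles): 1-cycle hit, 2% miss rate, 100-cycle penalty.
print("baseline:            ", amat(1, 0.02, 100))
# Each optimization category targets one term of the formula.
print("halve miss penalty:  ", amat(1, 0.02, 50))
print("halve miss rate:     ", amat(1, 0.01, 100))
print("hit time grows to 2: ", amat(2, 0.02, 100))
```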

- 8. Why pipeline the cache?
  - Mainly used for the L1 cache.
  - When a cache access takes multiple cycles:
    - one access arrives in cycle N (hit);
    - another access arrives in cycle N+1 (hit) but has to wait for the first one to finish.
  - Effective hit time = actual hit time + wait time.
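The waiting effect described on this slide can be modeled cycle by cycle. This sketch assumes that every access hits, that a hit takes `latency` cycles, and that at most one request arrives per cycle. `effective_hit_times` is a hypothetical helper.

```python
def effective_hit_times(arrivals: list[int], latency: int, pipelined: bool) -> list[int]:
    """Cycles from arrival to data return for each hit, in arrival order.

    Non-pipelined: the cache is busy for `latency` cycles per access, so a
    request that arrives while it is busy must wait. Pipelined: a new access
    can start every cycle, so a hit never waits.
    """
    issue_interval = 1 if pipelined else latency
    next_free = 0  # first cycle in which the cache can accept a new access
    times = []
    for t in sorted(arrivals):
        start = max(t, next_free)          # wait if the cache cannot accept yet
        next_free = start + issue_interval
        times.append(start - t + latency)  # wait time + actual hit time
    return times


back_to_back = [0, 1, 2, 3]  # hits arriving in cycles N, N+1, N+2, N+3
print(effective_hit_times(back_to_back, latency=3, pipelined=False))
print(effective_hit_times(back_to_back, latency=3, pipelined=True))
```

With a 3-cycle hit, back-to-back requests to a blocking cache see effective hit times of 3, 5, 7, and 9 cycles. When a new access can start every cycle, each request sees a constant 3 cycles.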

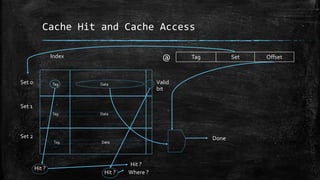

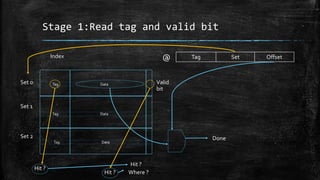

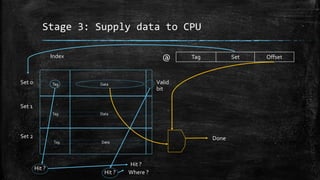

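- 9. Cache hit and cache access
  - The address is split into tag, set (index), and offset fields.
  - The index selects a set (Set 0, Set 1, Set 2, ...), whose entries each hold a valid bit, a tag, and data.
  - Hit? The stored tag is compared with the address tag, qualified by the valid bit.
  - Where? On a hit, the matching entry locates the data, and the access is done.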
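The tag/set/offset split and the "Hit? Where?" check can be sketched in Python for a set-associative cache. The block size, set count, and the names `Line`, `split_address`, and `lookup` are assumptions for illustration. Hardware compares all ways of a set in parallel, whereas the loop here checks them one at a time.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Line:
    """One cache entry: valid bit, tag, and the block's bytes."""
    valid: bool = False
    tag: int = 0
    data: bytes = b""


def split_address(addr: int, block_bytes: int, num_sets: int) -> tuple[int, int, int]:
    """Split an address into (tag, set index, block offset).

    Assumes block_bytes and num_sets are powers of two.
    """
    offset_bits = block_bytes.bit_length() - 1
    index_bits = num_sets.bit_length() - 1
    offset = addr & (block_bytes - 1)
    index = (addr >> offset_bits) & (num_sets - 1)
    tag = addr >> (offset_bits + index_bits)
    return tag, index, offset


def lookup(cache: list[list[Line]], addr: int, block_bytes: int) -> Optional[tuple[int, int]]:
    """Return (way, byte) on a hit, or None on a miss."""
    tag, index, offset = split_address(addr, block_bytes, len(cache))
    for way, line in enumerate(cache[index]):  # hardware checks all ways at once
        if line.valid and line.tag == tag:     # "Hit?": valid bit AND tag match
            return way, line.data[offset]      # "Where?": the matching way holds the data
    return None


# Hypothetical 4-set, 2-way cache with 16-byte blocks.
cache = [[Line(), Line()] for _ in range(4)]
cache[3][1] = Line(valid=True, tag=72, data=bytes(range(16)))
print(split_address(0x1234, 16, 4))  # → (72, 3, 4)
print(lookup(cache, 0x1234, 16))     # → (1, 4): hit in way 1
print(lookup(cache, 0x2234, 16))     # → None: same set, different tag
```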

- 10. Designing a 3-stage pipelined cache
  - Stage 1: read the tag and valid bit.
  - Stage 2: combine the results to determine whether the access actually hits, and start the data read.
  - Stage 3: finish the data read and transfer the data to the CPU.
  - Diagram: Retrieve tag and valid bit → Is hit? Start data read → Serve CPU request
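Here is a cycle-level sketch of the three stages. It assumes a hypothetical direct-mapped cache with one-word blocks, so an address splits into just an index and a tag, and it reports a miss instead of fetching from the next level. The variables `s1` and `s2` act as the pipeline registers between stages. Each cycle, the stages are evaluated back to front so that every request advances exactly one stage.

```python
# Hypothetical direct-mapped cache: NUM_SETS sets, one-word blocks, so an
# address splits into index = addr % NUM_SETS and tag = addr // NUM_SETS.
# Each set is a (valid, tag, data) tuple.
NUM_SETS = 4


def run_pipeline(cache, requests):
    """Issue one request per cycle through a 3-stage cache pipeline.

    Returns (completion_cycle, addr, data_or_'miss') for each request.
    s1 and s2 hold the results of stages 1 and 2 between cycles.
    """
    pending = list(requests)
    s1 = s2 = None
    done = []
    cycle = 0
    while pending or s1 is not None or s2 is not None:
        cycle += 1
        # Stage 3: finish the data read and serve the CPU request.
        if s2 is not None:
            addr, hit, index = s2
            done.append((cycle, addr, cache[index][2] if hit else "miss"))
        # Stage 2: combine the valid bit and tag compare into the actual hit; start the data read.
        if s1 is not None:
            addr, index, tag, valid, stored_tag = s1
            s2 = (addr, valid and stored_tag == tag, index)
        else:
            s2 = None
        # Stage 1: read the tag and valid bit of the indexed set for a new request.
        if pending:
            addr = pending.pop(0)
            index, tag = addr % NUM_SETS, addr // NUM_SETS
            valid, stored_tag, _ = cache[index]
            s1 = (addr, index, tag, valid, stored_tag)
        else:
            s1 = None
    return done


cache = [(False, 0, None), (True, 1, "A"), (True, 1, "B"), (False, 0, None)]
for entry in run_pipeline(cache, [5, 9, 6]):
    print(entry)
```

Address 5 hits set 1 ("A"), address 9 maps to set 1 with a different tag and misses, and address 6 hits set 2 ("B"). The three results complete in cycles 3, 4, and 5. Each access still takes 3 cycles, but once the pipeline fills, one access finishes every cycle.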

- 11. Stage 1: Read the tag and valid bit (diagram: the cache-access figure, highlighting the tag and valid-bit read)

- 12. Stage 2: On a hit, start reading the data (diagram: the cache-access figure, highlighting the hit check)

- 13. Stage 3: Supply the data to the CPU (diagram: the cache-access figure, highlighting the data returned)

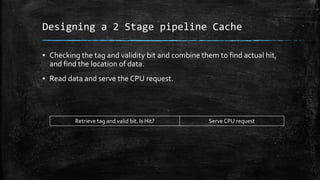

- 14. Designing a 2-stage pipelined cache
  - Stage 1: check the tag and valid bit, combine them to determine whether the access actually hits, and find the location of the data.
  - Stage 2: read the data and serve the CPU request.
  - Diagram: Retrieve tag and valid bit, Is hit? → Serve CPU request
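The trade-off between the 2-stage and 3-stage designs can be sketched by assigning delays to the steps named on the slides. All delays and the latch overhead below are hypothetical. The clock period is set by the slowest stage plus register overhead, and the hit latency is the stage count times that period.

```python
# Hypothetical logic delays (ns) for the steps named on the slides.
TAG_READ, HIT_CHECK, DATA_READ, TRANSFER = 0.5, 0.2, 0.6, 0.1
LATCH_NS = 0.05  # hypothetical pipeline-register overhead added to every stage


def pipeline_timing(stage_delays_ns):
    """Return (clock period, hit latency) in ns for a list of stage delays.

    The clock period is set by the slowest stage plus latch overhead, and a
    hit takes one period per stage.
    """
    period = max(stage_delays_ns) + LATCH_NS
    return period, len(stage_delays_ns) * period


designs = {
    "1 stage": [TAG_READ + HIT_CHECK + DATA_READ + TRANSFER],
    "2 stages": [TAG_READ + HIT_CHECK, DATA_READ + TRANSFER],
    # assumes the data read splits evenly across stages 2 and 3
    "3 stages": [TAG_READ, HIT_CHECK + DATA_READ / 2, DATA_READ / 2 + TRANSFER],
}
for name, stages in designs.items():
    period, latency = pipeline_timing(stages)
    print(f"{name}: period {period:.2f} ns, "
          f"{1 / period:.2f} accesses/ns, hit latency {latency:.2f} ns")
```

With these made-up numbers, the 3-stage design has the shortest cycle and therefore the highest access bandwidth, but also the longest hit latency. This matches the bandwidth-versus-latency trade-off of pipelining the cache.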

- 15. Example: instruction-cache pipeline stages
  - Pentium: 1 stage
  - Pentium Pro through Pentium III: 2 stages
  - Pentium 4: 4 stages

- 16. Pipelined cache efficiency
  - Increases the bandwidth.
  - Increasing the number of pipeline stages leads to:
    - a greater penalty on mispredicted branches;
    - more clock cycles between issuing a load and using its data.
  - Summary (+ means the technique improves that factor, – means it hurts it):

  | Technique | Hit time | Bandwidth | Miss penalty | Miss rate | Power consumption |
  | --- | --- | --- | --- | --- | --- |
  | Pipelining cache | – | + | | | |
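The two costs of deeper cache pipelines can be combined into a simple CPI estimate. This is a rough model built on stated assumptions, not a published formula. It assumes that an instruction depending on a load and issued right after it stalls for (stages - 1) extra cycles, and that each extra instruction-cache stage adds one cycle to the branch-misprediction penalty. All instruction-mix numbers are hypothetical defaults.

```python
def cpi(dcache_stages: int, icache_stages: int, *, base_cpi: float = 1.0,
        load_frac: float = 0.25, dep_use_frac: float = 0.5,
        branch_frac: float = 0.2, mispredict_rate: float = 0.1,
        base_penalty: int = 10) -> float:
    """Rough CPI estimate for given data- and instruction-cache pipeline depths.

    Hypothetical model:
    - a load's data is usable only after the last D-cache stage, so an
      immediately dependent instruction stalls for (dcache_stages - 1) cycles;
    - every extra I-cache stage adds one cycle to the misprediction penalty.
    """
    load_use_stalls = load_frac * dep_use_frac * (dcache_stages - 1)
    mispredict_penalty = base_penalty + (icache_stages - 1)
    branch_stalls = branch_frac * mispredict_rate * mispredict_penalty
    return base_cpi + load_use_stalls + branch_stalls


for depth in (1, 2, 4):  # same depths as the Pentium instruction-cache examples
    print(f"{depth}-stage caches: CPI = {cpi(depth, depth):.3f}")
```

With these defaults, CPI rises from about 1.2 with single-stage caches to about 1.64 with 4-stage caches. Pipelining therefore pays off only if the shorter clock cycle more than offsets the higher CPI.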

- 17. References
  - https://www.udacity.com/course/high-performance-computer-architecture--ud007
  - https://www.youtube.com/watch?v=r9AxfQB_qlc
  - Hennessy & Patterson, "Computer Architecture: A Quantitative Approach", Fifth Edition