Soham Patra_13000120121.pdf


Principal component analysis (PCA) is an unsupervised machine learning algorithm used for dimensionality reduction. It converts correlated variables into uncorrelated principal components. PCA calculates the eigenvalues and eigenvectors of the covariance matrix to project the data onto a new feature space with fewer dimensions while retaining as much information as possible. The first principal component accounts for the largest variation in the data, with each subsequent component accounting for less variation.
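The steps in the description above (center the data, form the covariance matrix, take its eigenvectors, project) can be sketched in a few lines of NumPy. This is a minimal illustration, not the presentation's own code: the function name `pca`, the toy data, and the choice of `k = 2` are all assumptions made for the example.

```python
import numpy as np

def pca(X, k):
    """Project X (n_samples x n_features) onto its top-k principal components."""
    Xc = X - X.mean(axis=0)                 # center each feature
    C = np.cov(Xc, rowvar=False)            # covariance matrix of the features
    eigvals, eigvecs = np.linalg.eigh(C)    # eigh: C is symmetric; eigenvalues ascending
    order = np.argsort(eigvals)[::-1]       # reorder so the largest variance comes first
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    W = eigvecs[:, :k]                      # keep the k leading eigenvectors
    return Xc @ W, eigvals

# Hypothetical toy data: three correlated features driven by one latent factor.
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 1))
X = latent @ np.array([[2.0, 1.0, 1.5]]) + 0.3 * rng.normal(size=(200, 3))

Z, eigvals = pca(X, k=2)
print(Z.shape)                   # reduced data: 200 samples, 2 components
print(eigvals / eigvals.sum())   # share of total variance per component
```

The projected columns of `Z` are uncorrelated, and the eigenvalues give the variance captured by each component, which is why the first component accounts for the largest share.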


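![Finding the Eigenvalues](https://image.slidesharecdn.com/sohampatra13000120121-220729145654-898036e6/85/Soham-Patra_13000120121-pdf-7-320.jpg)

Finding the Eigenvalues

To find the eigenvalues of an n×n matrix C, we need to solve the following equation:

$$\det(C - \lambda I) = 0$$

For the covariance matrix used here:

$$
\det\left(
\begin{bmatrix} 50 & 29 & 39 \\ 29 & 28 & 26 \\ 39 & 26 & 36 \end{bmatrix}
-
\begin{bmatrix} \lambda & 0 & 0 \\ 0 & \lambda & 0 \\ 0 & 0 & \lambda \end{bmatrix}
\right)
=
\begin{vmatrix} 50-\lambda & 29 & 39 \\ 29 & 28-\lambda & 26 \\ 39 & 26 & 36-\lambda \end{vmatrix}
= 0
$$

Expanding the determinant along the first row:

$$
\begin{aligned}
&(50-\lambda)\,[(28-\lambda)(36-\lambda) - 26 \cdot 26] \\
&\quad + 29\,[26 \cdot 39 - 29\,(36-\lambda)] \\
&\quad + 39\,[29 \cdot 26 - (28-\lambda) \cdot 39] \\
&= -\lambda^3 + 114\lambda^2 - 1170\lambda + 2548 = 0
\end{aligned}
$$

Solving the above equation gives the roots:

$$\lambda_1 \approx 102.86, \qquad \lambda_2 \approx 8.06, \qquad \lambda_3 \approx 3.07$$

Since the eigenvalue $\lambda_3$ is very small compared with $\lambda_1$, it can be left out, and the remaining operations can be carried out with $\lambda_1$ and $\lambda_2$.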
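The eigenvalue calculation on this slide can be checked numerically. The sketch below evaluates det(C − λI) by the same first-row cofactor expansion and finds the three roots of the cubic by bisection. It uses only the standard library; the helper names (`det3`, `char_poly`, `bisect`) and the bracketing intervals are assumptions of this example, chosen by looking at where the cubic changes sign.

```python
# Covariance matrix from the slide.
C = [[50.0, 29.0, 39.0],
     [29.0, 28.0, 26.0],
     [39.0, 26.0, 36.0]]

def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def char_poly(lam):
    """Evaluate det(C - lam * I)."""
    shifted = [[C[i][j] - (lam if i == j else 0.0) for j in range(3)]
               for i in range(3)]
    return det3(shifted)

def bisect(f, lo, hi, tol=1e-10):
    """Find a root of f in [lo, hi], assuming f changes sign on the interval."""
    f_lo = f(lo)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = f(mid)
        if f_mid == 0.0:
            return mid
        if (f_lo < 0) == (f_mid < 0):
            lo, f_lo = mid, f_mid   # root lies in the upper half
        else:
            hi = mid                # root lies in the lower half
    return 0.5 * (lo + hi)

# Assumed brackets: the cubic is positive at 0 and 20, negative at 5 and 114.
brackets = [(0.0, 5.0), (5.0, 20.0), (20.0, 114.0)]
eigenvalues = sorted((bisect(char_poly, lo, hi) for lo, hi in brackets),
                     reverse=True)

total = sum(eigenvalues)  # equals the trace of C, i.e. the total variance
for lam in eigenvalues:
    print(f"lambda = {lam:.2f}, share of variance = {lam / total:.3f}")
```

The printed eigenvalues should agree with the slide's roots to within rounding. The share-of-variance column makes the reason for dropping the smallest eigenvalue concrete: it contributes only a small fraction of the total.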

Recommended

ąĘīĮ·Éąð°ųģåīÚąôīĮ·É..ŲØļŲ

ģåŲØŊØąØĐ.ąčąčģŲģæ

ąĘīĮ·Éąð°ųģåīÚąôīĮ·É..ŲØļŲ

ģåŲØŊØąØĐ.ąčąčģŲģæAhmedAbdAldafea

Ėý

Power flow studies are used to analyze power systems under steady state conditions. They compute voltage magnitudes and angles at each bus to determine if loads are met, voltages are within limits, generators are within power limits, and lines are not overloaded. The power flow problem is formulated as a set of nonlinear equations solved iteratively. For a 3-bus system example, the Gauss-Seidel method found bus voltages of 0.98305â -1.8° and 1.0011â -2.68° after two iterations. The slack bus real and reactive powers were calculated as 384 MW and 197.86 MW respectively. Line flows and losses were also determined.Quatum fridge

Quatum fridgeJun Steed Huang

Ėý

In order to provide the design guidance for a multiple stage refrigerator for hosting a quantum computing device targeting for unmanned transportation platform. We provides a modeling analysis based on a preliminary single stage test data, by using Brain Storm Optimization algorithm.Department of MathematicsMTL107 Numerical Methods and Com.docx

Department of MathematicsMTL107 Numerical Methods and Com.docxsalmonpybus

Ėý

Department of Mathematics

MTL107: Numerical Methods and Computations

Exercise Set 8: Approximation-Linear Least Squares Polynomial approximation, Chebyshev

Polynomial approximation.

1. Compute the linear least square polynomial for the data:

i xi yi

1 0 1.0000

2 0.25 1.2840

3 0.50 1.6487

4 0.75 2.1170

5 1.00 2.7183

2. Find the least square polynomials of degrees 1,2 and 3 for the data in the following talbe.

Compute the error E in each case. Graph the data and the polynomials.

:

xi 1.0 1.1 1.3 1.5 1.9 2.1

yi 1.84 1.96 2.21 2.45 2.94 3.18

3. Given the data:

xi 4.0 4.2 4.5 4.7 5.1 5.5 5.9 6.3 6.8 7.1

yi 113.18 113.18 130.11 142.05 167.53 195.14 224.87 256.73 299.50 326.72

a. Construct the least squared polynomial of degree 1, and compute the error.

b. Construct the least squared polynomial of degree 2, and compute the error.

c. Construct the least squared polynomial of degree 3, and compute the error.

d. Construct the least squares approximation of the form beax, and compute the error.

e. Construct the least squares approximation of the form bxa, and compute the error.

4. The following table lists the college grade-point averages of 20 mathematics and computer

science majors, together with the scores that these students received on the mathematics

portion of the ACT (Americal College Testing Program) test while in high school. Plot

these data, and find the equation of the least squares line for this data:

:

ACT Grade-point ACT Grade-point

score average score average

28 3.84 29 3.75

25 3.21 28 3.65

28 3.23 27 3.87

27 3.63 29 3.75

28 3.75 21 1.66

33 3.20 28 3.12

28 3.41 28 2.96

29 3.38 26 2.92

23 3.53 30 3.10

27 2.03 24 2.81

5. Find the linear least squares polynomial approximation to f(x) on the indicated interval

if

a. f(x) = x2 + 3x+ 2, [0, 1]; b. f(x) = x3, [0, 2];

c. f(x) = 1

x

, [1, 3]; d. f(x) = ex, [0, 2];

e. f(x) = 1

2

cosx+ 1

3

sin 2x, [0, 1]; f. f(x) = x lnx, [1, 3];

6. Find the least square polynomial approximation of degrees 2 to the functions and intervals

in Exercise 5.

7. Compute the error E for the approximations in Exercise 6.

8. Use the Gram-Schmidt process to construct Ï0(x), Ï1(x), Ï2(x) and Ï3(x) for the following

intervals.

a. [0,1] b. [0,2] c. [1,3]

9. Obtain the least square approximation polynomial of degree 3 for the functions in Exercise

5 using the results of Exercise 8.

10. Use the Gram-Schmidt procedure to calculate L1, L2, L3 where {L0(x), L1(x), L2(x), L3(x)}

is an orthogonal set of polynomials on (0,â) with respect to the weight functions w(x) =

eâx and L0(x) = 1. The polynomials obtained from this procedure are called the La-

guerre polynomials.

11. Use the zeros of TĖ3, to construct an interpolating polynomial of degree 2 for the following

functions on the interval [-1,1]:

a. f(x) = ex, b. f(x) = sinx, c. f(x) = ln(x+ 2), d. f(x) = x4.

12. Find a bound for the maximum error of the approximation in Exercise 1 on the interval

[-1,1].

13. Use the zer.Response Surface in Tensor Train format for Uncertainty Quantification

Response Surface in Tensor Train format for Uncertainty QuantificationAlexander Litvinenko

Ėý

We apply low-rank Tensor Train format to solve PDEs with uncertain coefficients. First, we approximate uncertain permeability coefficient in TT format, then the operator and then apply iterations to solve stochastic Galerkin system.Principal Component Analysis(PCA) understanding document

Principal Component Analysis(PCA) understanding documentNaveen Kumar

Ėý

PCA is applied to reduce a dataset into fewer dimensions while retaining most of the variation in the data. It works by calculating the covariance matrix of the data and extracting eigenvectors with the highest eigenvalues, which become the principal components. The EJML Java library can be used to perform PCA by adding sample data, computing the basis using eigenvectors, and projecting samples into the reduced eigenvector space. PCA is generally not useful for datasets containing mostly 0s and 1s, as such sparse data is already in a compact format.A New Approach to Design a Reduced Order Observer

A New Approach to Design a Reduced Order ObserverIJERD Editor

Ėý

This document proposes a new method for designing reduced order observers for linear time-invariant systems. The approach is based on inverting matrices of proper dimensions. It reduces the arbitrariness of previous methods by using pole-placement techniques. The method is applied to design a reduced order observer for a 3rd order system. Simulation results show the observer estimates converge to the true system states.E33018021

E33018021IJERA Editor

Ėý

International Journal of Engineering Research and Applications (IJERA) is an open access online peer reviewed international journal that publishes research and review articles in the fields of Computer Science, Neural Networks, Electrical Engineering, Software Engineering, Information Technology, Mechanical Engineering, Chemical Engineering, Plastic Engineering, Food Technology, Textile Engineering, Nano Technology & science, Power Electronics, Electronics & Communication Engineering, Computational mathematics, Image processing, Civil Engineering, Structural Engineering, Environmental Engineering, VLSI Testing & Low Power VLSI Design etc.Solution of matlab chapter 3

Solution of matlab chapter 3AhsanIrshad8

Ėý

This document provides solutions to 21 problems involving vector and matrix operations in MATLAB. Some key problems include:

- Calculating values of functions for given inputs using element-by-element operations

- Finding the length, unit vector, and angle between vectors

- Performing operations like addition, multiplication, exponentiation on vectors and using vectors in expressions

- Computing the center of mass and verifying vector identities

- Solving physics problems involving projectile motion using vector componentsUnit 2

Unit 2ypnrao

Ėý

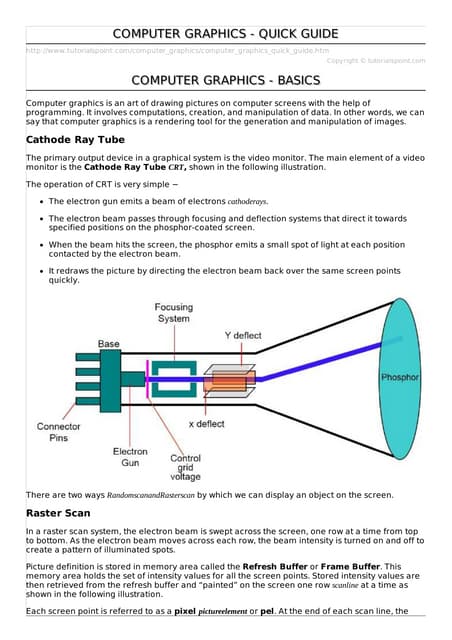

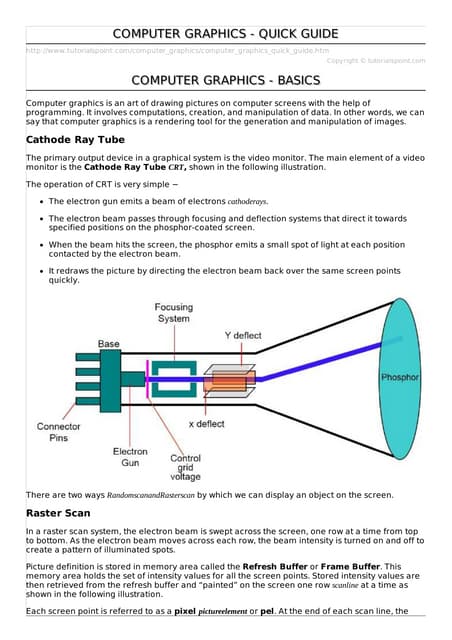

This document provides a brief overview of computer graphics. It discusses how computer graphics works by rendering images through programming computations and data manipulation. It describes the cathode ray tube as the primary output device for early graphical systems. It also summarizes two common scanning techniques - raster scan and random scan/vector scan. Additional topics covered include line generation algorithms like DDA, Bresenham's, and mid-point algorithms. It concludes with some common applications of computer graphics like GUIs, business presentations, mapping, and medical imaging.ADAPTIVE FUZZY KERNEL CLUSTERING ALGORITHM

ADAPTIVE FUZZY KERNEL CLUSTERING ALGORITHMWireilla

Ėý

Fuzzy clustering algorithm can not obtain good clustering effect when the sample characteristic is not obvious and need to determine the number of clusters firstly. For thi0s reason, this paper proposes an adaptive fuzzy kernel clustering algorithm. The algorithm firstly use the adaptive function of clustering number to calculate the optimal clustering number, then the samples of input space is mapped to highdimensional feature space using gaussian kernel and clustering in the feature space. The Matlab simulation results confirmed that the algorithm's performance has greatly improvement than classical clustering algorithm and has faster convergence speed and more accurate clustering results.ADAPTIVE FUZZY KERNEL CLUSTERING ALGORITHM

ADAPTIVE FUZZY KERNEL CLUSTERING ALGORITHMijfls

Ėý

Fuzzy clustering algorithm can not obtain good clustering effect when the sample characteristic is not

obvious and need to determine the number of clusters firstly. For thi0s reason, this paper proposes an

adaptive fuzzy kernel clustering algorithm. The algorithm firstly use the adaptive function of clustering

number to calculate the optimal clustering number, then the samples of input space is mapped to highdimensional

feature space using gaussian kernel and clustering in the feature space. The Matlab simulation

results confirmed that the algorithm's performance has greatly improvement than classical clustering algorithm and has faster convergence speed and more accurate clustering resultsDesign and Implementation of Parallel and Randomized Approximation Algorithms

Design and Implementation of Parallel and Randomized Approximation AlgorithmsAjay Bidyarthy

Ėý

This document summarizes the design and implementation of parallel and randomized approximation algorithms for solving matrix games, linear programs, and semi-definite programs. It presents solvers for these problems that provide approximate solutions in sublinear or near-linear time. It analyzes the performance and precision-time tradeoffs of the solvers compared to other algorithms. It also provides examples of applying the SDP solver to approximate the Lovasz theta function.ML ALL in one (1).pdf

ML ALL in one (1).pdfAADITYADARAKH1

Ėý

MACHINE LEARNING IS GOING TO DEFINE THE NEXT ERA; PEOPLE SAY IT WILL HAVE SIMILAR EFFECTS AS ELECTRICITY OR INTERNET HAD IN their TIMES .ARITIFICIAL INTELLIGENCE IS THE NEXT BEST THING IN THE WORLD OF TECHNOLOGY , BLOCKCHAIN AFTER THAT 5 DimensionalityReduction.pdf

5 DimensionalityReduction.pdfRahul926331

Ėý

This document provides an overview of dimensionality reduction techniques, specifically principal component analysis (PCA). It begins with acknowledging dimensionality reduction aims to choose a lower-dimensional set of features to improve classification accuracy. Feature extraction and feature selection are introduced as two common dimensionality reduction methods. PCA is then explained in detail, including how it seeks a new set of basis vectors that maximizes retained variance from the original data. Key mathematical steps of PCA are outlined, such as computing the covariance matrix and its eigenvectors/eigenvalues to determine the principal components.The rules of indices

The rules of indicesYu Kok Hui

Ėý

The document discusses rules for simplifying expressions involving indices (exponents). It defines indices as powers and explains that the plural of index is indices. It then presents four rules:

1) Multiplication of Indices: an à am = an+m

2) Division of Indices: an ÷ am = an-m

3) For negative indices: a-m = 1/am

4) For Powers of Indices: (am)n = amn

The document applies these rules to simplify various expressions involving integer indices. It also extends the rules to expressions involving fractional indices obtained from roots.assignemts.pdf

assignemts.pdframish32

Ėý

1) The document describes a fractional order nonlinear quarter car suspension model. It establishes integer and fractional order differential equations to model the system.

2) Key parameters of the suspension system are defined including mass, stiffness coefficients, and hysteretic nonlinear damping forces. State space and discrete forms of the fractional order model are presented.

3) Numerical methods for solving the fractional order differential equations are discussed, including the Adams-Bashforth-Moulton algorithm used to analyze the quarter car model. Stability of equilibrium points is analyzed.redes neuronais

redes neuronaisRoland Silvestre

Ėý

This document provides resolutions to exercises related to artificial neural networks. It includes derivatives of activation functions, recursive definitions of B-spline functions, correlation matrices for input patterns, and explanations of linear separability and implementing logic functions like AND, OR, and XOR using perceptrons.Graphics6 bresenham circlesandpolygons

Graphics6 bresenham circlesandpolygonsKetan Jani

Ėý

The document discusses several common algorithms for computer graphics rendering including Bresenham's line drawing algorithm, the midpoint circle algorithm, and scanline polygon filling. Bresenham's algorithm uses only integer calculations to efficiently draw lines. The midpoint circle algorithm incrementally chooses pixel coordinates to draw circles with eightfold symmetry and without floating point operations. Scanline polygon filling determines the edge intersections on each scanline and fills pixels between interior intersections.Graphics6 bresenham circlesandpolygons

Graphics6 bresenham circlesandpolygonsThirunavukarasu Mani

Ėý

The document discusses several common algorithms for computer graphics rendering including Bresenham's line drawing algorithm, the midpoint circle algorithm, and scanline polygon filling. Bresenham's algorithm uses only integer calculations to efficiently draw lines. The midpoint circle algorithm incrementally chooses pixel coordinates to draw circles with eightfold symmetry and without floating point operations. Scanline polygon filling finds the edge intersections on each scanline and fills pixels between interior intersections.The following ppt is about principal component analysis

The following ppt is about principal component analysisSushmit8

Ėý

Principal Components Analysis (PCA) is an exploratory technique used to reduce the dimensionality of data sets while retaining as much information as possible. It transforms a number of correlated variables into a smaller number of uncorrelated variables called principal components. PCA is commonly used for applications like face recognition, image compression, and gene expression analysis by reducing the dimensions of large data sets and finding patterns in the data.pca.ppt

pca.pptShivareddyGangam

Ėý

Principal Components Analysis (PCA) is an exploratory technique used to reduce the dimensionality of data sets while retaining as much information as possible. It transforms a number of correlated variables into a smaller number of uncorrelated variables called principal components. PCA is commonly used for applications like face recognition, image compression, and gene expression analysis by reducing the dimensions of large data sets and finding patterns in the data.Lelt 240 semestre i-2021

Lelt 240 semestre i-2021FrancoMaytaBernal

Ėý

This document provides the mathematical modeling and transfer function of an overhead crane. It defines the variables used such as position, cable length, and angle. It derives the kinetic and potential energies of the crane platform and pendulum and determines the Lagrangian. From this, it obtains the equations of motion for the crane position and cable angle. Small angle approximations yield a transfer function that relates the crane position to the applied force as a function of system parameters like masses and cable length.More Related Content

Similar to Soham Patra_13000120121.pdf (20)

Solution of matlab chapter 3

Solution of matlab chapter 3AhsanIrshad8

Ėý

This document provides solutions to 21 problems involving vector and matrix operations in MATLAB. Some key problems include:

- Calculating values of functions for given inputs using element-by-element operations

- Finding the length, unit vector, and angle between vectors

- Performing operations like addition, multiplication, exponentiation on vectors and using vectors in expressions

- Computing the center of mass and verifying vector identities

- Solving physics problems involving projectile motion using vector componentsUnit 2

Unit 2ypnrao

Ėý

This document provides a brief overview of computer graphics. It discusses how computer graphics works by rendering images through programming computations and data manipulation. It describes the cathode ray tube as the primary output device for early graphical systems. It also summarizes two common scanning techniques - raster scan and random scan/vector scan. Additional topics covered include line generation algorithms like DDA, Bresenham's, and mid-point algorithms. It concludes with some common applications of computer graphics like GUIs, business presentations, mapping, and medical imaging.ADAPTIVE FUZZY KERNEL CLUSTERING ALGORITHM

ADAPTIVE FUZZY KERNEL CLUSTERING ALGORITHMWireilla

Ėý

Fuzzy clustering algorithm can not obtain good clustering effect when the sample characteristic is not obvious and need to determine the number of clusters firstly. For thi0s reason, this paper proposes an adaptive fuzzy kernel clustering algorithm. The algorithm firstly use the adaptive function of clustering number to calculate the optimal clustering number, then the samples of input space is mapped to highdimensional feature space using gaussian kernel and clustering in the feature space. The Matlab simulation results confirmed that the algorithm's performance has greatly improvement than classical clustering algorithm and has faster convergence speed and more accurate clustering results.ADAPTIVE FUZZY KERNEL CLUSTERING ALGORITHM

ADAPTIVE FUZZY KERNEL CLUSTERING ALGORITHMijfls

Ėý

Fuzzy clustering algorithm can not obtain good clustering effect when the sample characteristic is not

obvious and need to determine the number of clusters firstly. For thi0s reason, this paper proposes an

adaptive fuzzy kernel clustering algorithm. The algorithm firstly use the adaptive function of clustering

number to calculate the optimal clustering number, then the samples of input space is mapped to highdimensional

feature space using gaussian kernel and clustering in the feature space. The Matlab simulation

results confirmed that the algorithm's performance has greatly improvement than classical clustering algorithm and has faster convergence speed and more accurate clustering resultsDesign and Implementation of Parallel and Randomized Approximation Algorithms

Design and Implementation of Parallel and Randomized Approximation AlgorithmsAjay Bidyarthy

Ėý

This document summarizes the design and implementation of parallel and randomized approximation algorithms for solving matrix games, linear programs, and semi-definite programs. It presents solvers for these problems that provide approximate solutions in sublinear or near-linear time. It analyzes the performance and precision-time tradeoffs of the solvers compared to other algorithms. It also provides examples of applying the SDP solver to approximate the Lovasz theta function.ML ALL in one (1).pdf

ML ALL in one (1).pdfAADITYADARAKH1

Ėý

MACHINE LEARNING IS GOING TO DEFINE THE NEXT ERA; PEOPLE SAY IT WILL HAVE SIMILAR EFFECTS AS ELECTRICITY OR INTERNET HAD IN their TIMES .ARITIFICIAL INTELLIGENCE IS THE NEXT BEST THING IN THE WORLD OF TECHNOLOGY , BLOCKCHAIN AFTER THAT 5 DimensionalityReduction.pdf

5 DimensionalityReduction.pdfRahul926331

Ėý

This document provides an overview of dimensionality reduction techniques, specifically principal component analysis (PCA). It begins with acknowledging dimensionality reduction aims to choose a lower-dimensional set of features to improve classification accuracy. Feature extraction and feature selection are introduced as two common dimensionality reduction methods. PCA is then explained in detail, including how it seeks a new set of basis vectors that maximizes retained variance from the original data. Key mathematical steps of PCA are outlined, such as computing the covariance matrix and its eigenvectors/eigenvalues to determine the principal components.The rules of indices

The rules of indicesYu Kok Hui

Ėý

The document discusses rules for simplifying expressions involving indices (exponents). It defines indices as powers and explains that the plural of index is indices. It then presents four rules:

1) Multiplication of Indices: an à am = an+m

2) Division of Indices: an ÷ am = an-m

3) For negative indices: a-m = 1/am

4) For Powers of Indices: (am)n = amn

The document applies these rules to simplify various expressions involving integer indices. It also extends the rules to expressions involving fractional indices obtained from roots.assignemts.pdf

assignemts.pdframish32

Ėý

1) The document describes a fractional order nonlinear quarter car suspension model. It establishes integer and fractional order differential equations to model the system.

2) Key parameters of the suspension system are defined including mass, stiffness coefficients, and hysteretic nonlinear damping forces. State space and discrete forms of the fractional order model are presented.

3) Numerical methods for solving the fractional order differential equations are discussed, including the Adams-Bashforth-Moulton algorithm used to analyze the quarter car model. Stability of equilibrium points is analyzed.redes neuronais

redes neuronaisRoland Silvestre

Ėý

This document provides resolutions to exercises related to artificial neural networks. It includes derivatives of activation functions, recursive definitions of B-spline functions, correlation matrices for input patterns, and explanations of linear separability and implementing logic functions like AND, OR, and XOR using perceptrons.Graphics6 bresenham circlesandpolygons

Graphics6 bresenham circlesandpolygonsKetan Jani

Ėý

The document discusses several common algorithms for computer graphics rendering including Bresenham's line drawing algorithm, the midpoint circle algorithm, and scanline polygon filling. Bresenham's algorithm uses only integer calculations to efficiently draw lines. The midpoint circle algorithm incrementally chooses pixel coordinates to draw circles with eightfold symmetry and without floating point operations. Scanline polygon filling determines the edge intersections on each scanline and fills pixels between interior intersections.Graphics6 bresenham circlesandpolygons

Graphics6 bresenham circlesandpolygonsThirunavukarasu Mani

Ėý

The document discusses several common algorithms for computer graphics rendering including Bresenham's line drawing algorithm, the midpoint circle algorithm, and scanline polygon filling. Bresenham's algorithm uses only integer calculations to efficiently draw lines. The midpoint circle algorithm incrementally chooses pixel coordinates to draw circles with eightfold symmetry and without floating point operations. Scanline polygon filling finds the edge intersections on each scanline and fills pixels between interior intersections.The following ppt is about principal component analysis

The following ppt is about principal component analysisSushmit8

Ėý

Principal Components Analysis (PCA) is an exploratory technique used to reduce the dimensionality of data sets while retaining as much information as possible. It transforms a number of correlated variables into a smaller number of uncorrelated variables called principal components. PCA is commonly used for applications like face recognition, image compression, and gene expression analysis by reducing the dimensions of large data sets and finding patterns in the data.pca.ppt

pca.pptShivareddyGangam

Ėý

Principal Components Analysis (PCA) is an exploratory technique used to reduce the dimensionality of data sets while retaining as much information as possible. It transforms a number of correlated variables into a smaller number of uncorrelated variables called principal components. PCA is commonly used for applications like face recognition, image compression, and gene expression analysis by reducing the dimensions of large data sets and finding patterns in the data.Lelt 240 semestre i-2021

Lelt 240 semestre i-2021FrancoMaytaBernal

Ėý

This document provides the mathematical modeling and transfer function of an overhead crane. It defines the variables used such as position, cable length, and angle. It derives the kinetic and potential energies of the crane platform and pendulum and determines the Lagrangian. From this, it obtains the equations of motion for the crane position and cable angle. Small angle approximations yield a transfer function that relates the crane position to the applied force as a function of system parameters like masses and cable length.Recently uploaded (20)

Water Industry Process Automation & Control Monthly - April 2025

Water Industry Process Automation & Control Monthly - April 2025Water Industry Process Automation & Control

Ėý

Welcome to the April 2025 edition of WIPAC Monthly, the magazine brought to you by the LInkedIn Group Water Industry Process Automation & Control.

In this month's issue, along with all of the industries news we have a number of great articles for your edification

The first article is my annual piece looking behind the storm overflow numbers that are published each year to go into a bit more depth and look at what the numbers are actually saying.

The second article is a taster of what people will be seeing at the SWAN Annual Conference next month in Berlin and looks at the use of fibre-optic cable for leak detection and how its a technology we should be using more of

The third article, by Rob Stevens, looks at what the options are for the Continuous Water Quality Monitoring that the English Water Companies will be installing over the next year and the need to ensure that we install the right technology from the start.

Hope you enjoy the current edition,

OliverWhy the Engineering Model is Key to Successful Projects

Why the Engineering Model is Key to Successful ProjectsMaadhu Creatives-Model Making Company

Ėý

In this PDF document, the importance of engineering models in successful project execution is discussed. It explains how these models enhance visualization, planning, and communication. Engineering models help identify potential issues early, reducing risks and costs. Ultimately, they improve collaboration and client satisfaction by providing a clear representation of the project.he Wright brothers, Orville and Wilbur, invented and flew the first successfu...

he Wright brothers, Orville and Wilbur, invented and flew the first successfu...HardeepZinta2

Ėý

The Wright brothers, Orville and Wilbur, invented and flew the first successful airplane in 1903. Their flight took place in Kitty Hawk, North Carolina. Optimize AI Latency & Response Time with LLumo

Optimize AI Latency & Response Time with LLumosgupta86

Ėý

Long response times kill user experience. We provide real-time monitoring and optimizations to ensure fast, seamless interactions.NFPA 70B & 70E Changes and Additions Webinar Presented By Fluke

NFPA 70B & 70E Changes and Additions Webinar Presented By FlukeTranscat

Ėý

Join us for this webinar about NFPA 70B & 70E changes and additions. NFPA 70B and NFPA 70E are both essential standards from the National Fire Protection Association (NFPA) that focus on electrical safety in the workplace. Both standards are critical for protecting workers, reducing the risk of electrical accidents, and ensuring compliance with safety regulations in industrial and commercial environments.

Fluke Sales Applications Manager Curt Geeting is presenting on this engaging topic:

Curt has worked for Fluke for 24 years. He currently is the Senior Sales Engineer in the NYC & Philadelphia Metro Markets. In total, Curt has worked 40 years in the industry consisting of 14 years in Test Equipment Distribution, 4+ years in Mfg. Representation, NAED Accreditation, Level 1 Thermographer, Level 1 Vibration Specialist, and Power Quality SME.Unit-03 Cams and Followers in Mechanisms of Machines.pptx

Unit-03 Cams and Followers in Mechanisms of Machines.pptxKirankumar Jagtap

Ėý

Unit-03 Cams and Followers.pptxAirport Components Part1 ppt.pptx-Site layout,RUNWAY,TAXIWAY,TAXILANE

Airport Components Part1 ppt.pptx-Site layout,RUNWAY,TAXIWAY,TAXILANEPriyanka Dange

Ėý

RUNWAY,TAXIWAY,TAXILANEDesigning of full bridge LLC Resonant converter

Designing of full bridge LLC Resonant converterNITISHKUMAR143199

Ėý

Designing of full bridge LLC Resonant converterKamal 2, new features and practical examples

Kamal 2, new features and practical examplesIgor Aleksandrov

Ėý

šÝšÝßĢs about Kamal 2 features and some practical examples.

Showed live on the Tropical on Rails 2025, SÃĢo Paulo, Brazil, April 4th, 2025.Knowledge-Based Agents in AI: Principles, Components, and Functionality

Knowledge-Based Agents in AI: Principles, Components, and FunctionalityRashmi Bhat

Ėý

This PowerPoint presentation provides an in-depth exploration of Knowledge-Based Agents (KBAs) in Artificial Intelligence (AI). It explains how these agents make decisions using stored knowledge and logical reasoning rather than direct sensor input. The presentation covers key components such as the Knowledge Base (KB), Inference Engine, Perception, and Action Execution.

Key topics include:

â

Definition and Working Mechanism of Knowledge-Based Agents

â

The Process of TELL, ASK, and Execution in AI Agents

â

Representation of Knowledge and Decision-Making Approaches

â

Logical Inference and Rule-Based Reasoning

â

Applications of Knowledge-Based Agents in Real-World AI

This PPT is useful for students, educators, and AI enthusiasts who want to understand how intelligent agents operate using stored knowledge and logic-based inference. The slides are well-structured with explanations, examples, and an easy-to-follow breakdown of AI agent functions.Floating Offshore Wind in the Celtic Sea

Floating Offshore Wind in the Celtic Seapermagoveu

Ėý

Floating offshore wind (FLOW) governance arrangements in the Celtic Sea case are changing and innovating in response to different drivers including domestic political priorities (e.g. net-zero, decarbonization, economic growth) and external shocks that emphasize the need for energy security (e.g. the war in Ukraine).

To date, the rules of the game that guide floating wind in the UK have evolved organically rather than being designed with intent, which has created policy incoherence and fragmented governance arrangements. Despite this fragmentation, the UK has a well-established offshore wind sector and is positioning itself to become a global leader in floating wind.

Marine governance arrangements are in a state of flux as new actors, resources, and rules of the game are being introduced to deliver on this aspiration. However, the absence of a clear roadmap to deliver on ambitious floating wind targets by 2030 creates uncertainty for investors, reduces the likelihood that a new floating wind sector will deliver economic and social value to the UK, and risks further misalignment between climate and nature goals.Project Manager | Integrated Design Expert

Project Manager | Integrated Design ExpertBARBARA BIANCO

Ėý

Barbara Bianco

Project Manager and Project Architect, with extensive experience in managing and developing complex projects from concept to completion. Since September 2023, she has been working as a Project Manager at MAB Arquitectura, overseeing all project phases, from concept design to construction, with a strong focus on artistic direction and interdisciplinary coordination.

Previously, she worked at Progetto CMR for eight years (2015-2023), taking on roles of increasing responsibility: initially as a Project Architect, and later as Head of Research & Development and Competition Area (2020-2023).

She graduated in Architecture from the University of Genoa and obtained a Level II Masterâs in Digital Architecture and Integrated Design from the INArch Institute in Rome, earning the MAD Award. In 2009, she won First Prize at Urban Promo Giovani with the project "From Urbanity to Humanity", a redevelopment plan for the Maddalena district of Genoa focused on the visual and perceptive rediscovery of the city.

Experience & Projects

Barbara has developed projects for major clients across various sectors (banking, insurance, real estate, corporate), overseeing both the technical and aesthetic aspects while coordinating multidisciplinary teams. Notable projects include:

The Sign Business District for Covivio, Milan

New L'OrÃĐal Headquarters in Milan, Romolo area

Redevelopment of Via C. Colombo in Rome for Prelios, now the PWC headquarters

Interior design for Spark One & Spark Two, two office buildings in the Santa Giulia district, Milan (Spark One: 53,000 mÂē) for In.Town-Lendlease

She has also worked on international projects such as:

International Specialized Hospital of Uganda (ISHU) â Kampala

Palazzo Milano, a residential building in Taiwan for Chonghong Construction

Chua Lang Street Building, a hotel in Hanoi

Manjiangwan Masterplan, a resort in China

Key Skills

âïļ Integrated design: managing and developing projects from concept to completion

âïļ Artistic direction: ensuring aesthetic quality and design consistency

âïļ Project management: coordinating clients, designers, and multidisciplinary consultants

âïļ Software proficiency: AutoCAD, Photoshop, InDesign, Office Suite

âïļ Languages: Advanced English, Basic French

âïļ Leadership & problem-solving: ability to lead teams and manage complex processes in dynamic environmentsUHV UNIT-5 IMPLICATIONS OF THE ABOVE HOLISTIC UNDERSTANDING OF HARMONY ON ...

UHV UNIT-5 IMPLICATIONS OF THE ABOVE HOLISTIC UNDERSTANDING OF HARMONY ON ...ariomthermal2031

Ėý

IMPLICATIONS OF THE ABOVE HOLISTIC UNDERSTANDING OF HARMONY ON PROFESSIONAL ETHICS Water Industry Process Automation & Control Monthly - April 2025

Water Industry Process Automation & Control Monthly - April 2025Water Industry Process Automation & Control

Ėý

Soham Patra_13000120121.pdf

- 2. What is Principal Component Analysis?

  PCA is an unsupervised learning algorithm used for dimensionality reduction in machine learning.

  Unsupervised learning: discovering patterns in a data set without human help (for example, clustering).

  Dimensionality reduction comes in two types:

  1. Feature elimination: eliminating some of the features to reduce the feature space.
  2. Feature extraction: creating new features, where each new feature is a combination of the old features.

- 3. What is Principal Component Analysis?

  PCA converts correlated features into a set of uncorrelated features by means of an orthogonal transformation. These new features are called principal components. It is a technique for drawing out strong patterns from a dataset by reducing the number of variables.

  The PCA algorithm is based mainly on two mathematical concepts:

  1. Variance and covariance
  2. Eigenvalues and eigenvectors

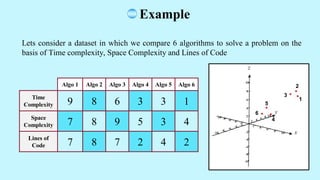

- 4. Example

  Let's consider a dataset in which we compare 6 algorithms for solving a problem on the basis of time complexity, space complexity, and lines of code.

  | | Algo 1 | Algo 2 | Algo 3 | Algo 4 | Algo 5 | Algo 6 |
  |---|---|---|---|---|---|---|
  | Time Complexity | 9 | 8 | 6 | 3 | 3 | 1 |
  | Space Complexity | 7 | 8 | 9 | 5 | 3 | 4 |
  | Lines of Code | 7 | 8 | 7 | 2 | 4 | 2 |

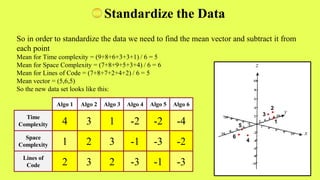

- 5. Standardize the Data

  To standardize the data we find the mean vector and subtract it from each point. (Strictly speaking this is mean-centering, since the values are not also divided by the standard deviation.)

  - Mean for Time Complexity = (9 + 8 + 6 + 3 + 3 + 1) / 6 = 5
  - Mean for Space Complexity = (7 + 8 + 9 + 5 + 3 + 4) / 6 = 6
  - Mean for Lines of Code = (7 + 8 + 7 + 2 + 4 + 2) / 6 = 5

  Mean vector = (5, 6, 5)

  So the new data set looks like this:

  | | Algo 1 | Algo 2 | Algo 3 | Algo 4 | Algo 5 | Algo 6 |
  |---|---|---|---|---|---|---|
  | Time Complexity | 4 | 3 | 1 | -2 | -2 | -4 |
  | Space Complexity | 1 | 2 | 3 | -1 | -3 | -2 |
  | Lines of Code | 2 | 3 | 2 | -3 | -1 | -3 |
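The centering step can be reproduced with a few lines of plain Python. This is only a sketch of the arithmetic on the slide; the dictionary layout and the variable names `data`, `means`, and `centered` are illustrative choices, not part of the original deck.

```python
# Raw data from the example: one entry per feature, one value per algorithm.
data = {
    "Time Complexity": [9, 8, 6, 3, 3, 1],
    "Space Complexity": [7, 8, 9, 5, 3, 4],
    "Lines of Code": [7, 8, 7, 2, 4, 2],
}

# Mean of each feature across the six algorithms.
means = {name: sum(values) / len(values) for name, values in data.items()}

# Subtract each feature's mean from its values (mean-centering).
centered = {
    name: [value - means[name] for value in values]
    for name, values in data.items()
}

for name in data:
    print(name, means[name], centered[name])
```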

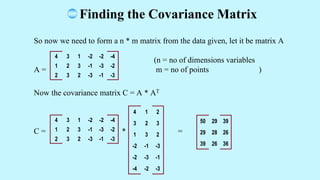

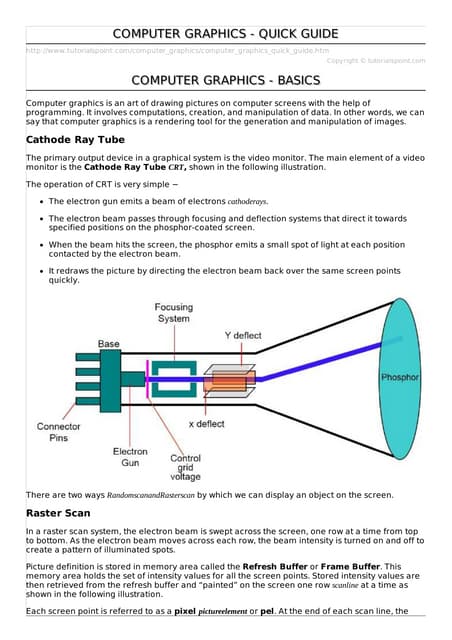

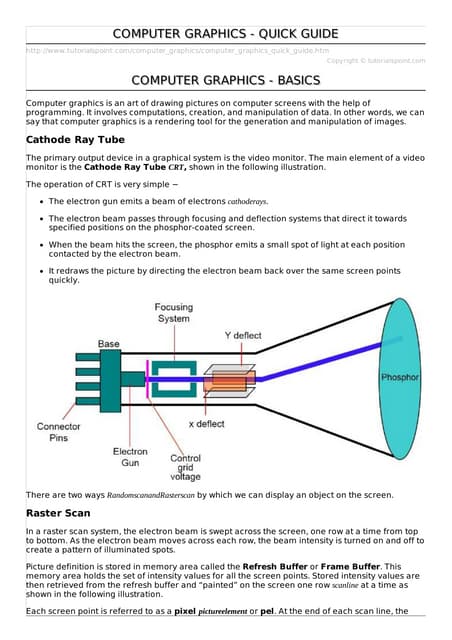

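- 6. Finding the Covariance Matrix

  Now we need to form an n × m matrix from the centered data; let it be matrix A (n = number of dimensions, i.e. variables; m = number of points):

  $$
  A = \begin{bmatrix} 4 & 3 & 1 & -2 & -2 & -4 \\ 1 & 2 & 3 & -1 & -3 & -2 \\ 2 & 3 & 2 & -3 & -1 & -3 \end{bmatrix}
  $$

  Now the covariance matrix is $C = A A^T$:

  $$
  C = \begin{bmatrix} 4 & 3 & 1 & -2 & -2 & -4 \\ 1 & 2 & 3 & -1 & -3 & -2 \\ 2 & 3 & 2 & -3 & -1 & -3 \end{bmatrix}
  \begin{bmatrix} 4 & 1 & 2 \\ 3 & 2 & 3 \\ 1 & 3 & 2 \\ -2 & -1 & -3 \\ -2 & -3 & -1 \\ -4 & -2 & -3 \end{bmatrix}
  = \begin{bmatrix} 50 & 29 & 39 \\ 29 & 28 & 26 \\ 39 & 26 & 36 \end{bmatrix}
  $$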
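As a quick check of the product above, the following sketch multiplies the centered matrix by its own transpose using only built-in Python. The helper name `times_own_transpose` is hypothetical and chosen for this illustration.

```python
# Centered data matrix A: one row per feature, one column per algorithm.
A = [
    [4, 3, 1, -2, -2, -4],
    [1, 2, 3, -1, -3, -2],
    [2, 3, 2, -3, -1, -3],
]


def times_own_transpose(matrix):
    """Return matrix * matrix^T: entry (i, j) is the dot product of rows i and j."""
    return [
        [sum(a * b for a, b in zip(row_i, row_j)) for row_j in matrix]
        for row_i in matrix
    ]


C = times_own_transpose(A)
for row in C:
    print(row)
```

Because entry (i, j) is a dot product of two rows of A, the result is symmetric, which matches the matrix on the slide.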

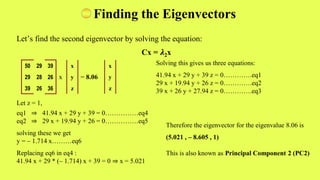

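- 7. Finding the Eigenvalues

  To find the eigenvalues of an n × n matrix we need to solve the following equation:

  $$
  \det(C - \lambda I) = 0
  $$

  $$
  \det\left( \begin{bmatrix} 50 & 29 & 39 \\ 29 & 28 & 26 \\ 39 & 26 & 36 \end{bmatrix} - \begin{bmatrix} \lambda & 0 & 0 \\ 0 & \lambda & 0 \\ 0 & 0 & \lambda \end{bmatrix} \right)
  = \begin{vmatrix} 50 - \lambda & 29 & 39 \\ 29 & 28 - \lambda & 26 \\ 39 & 26 & 36 - \lambda \end{vmatrix} = 0
  $$

  Expanding the determinant:

  $$
  \begin{aligned}
  &(50 - \lambda)\,[(28 - \lambda)(36 - \lambda) - 26 \cdot 26] \\
  &\quad + 29\,[26 \cdot 39 - 29\,(36 - \lambda)] \\
  &\quad + 39\,[29 \cdot 26 - (28 - \lambda) \cdot 39] \\
  &= -\lambda^3 + 114\lambda^2 - 1170\lambda + 2548 = 0
  \end{aligned}
  $$

  Solving the above equation gives us the roots:

  $$
  \lambda_1 = 102.86, \quad \lambda_2 = 8.06, \quad \lambda_3 = 3.07
  $$

  Since the eigenvalue $\lambda_3$ is very small, it can be left out and we can carry out the remaining operations with $\lambda_1$ and $\lambda_2$.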
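The roots can be checked numerically without any external library. The sketch below evaluates det(C − λI) directly and locates sign changes by bisection. Bisection is a choice made for this illustration; the deck does not say how its roots were obtained. The search range [0, trace] relies on C being positive semidefinite, so every eigenvalue lies between 0 and the trace. The names `det3`, `char_poly`, and `bisect` are hypothetical.

```python
C = [[50, 29, 39], [29, 28, 26], [39, 26, 36]]


def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (
        m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
        - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
        + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0])
    )


def char_poly(lam):
    """Evaluate det(C - lam * I)."""
    shifted = [
        [C[i][j] - (lam if i == j else 0) for j in range(3)] for i in range(3)
    ]
    return det3(shifted)


def bisect(f, lo, hi, tol=1e-9):
    """Find a root of f in [lo, hi], assuming f changes sign on the interval."""
    f_lo = f(lo)
    while hi - lo > tol:
        mid = (lo + hi) / 2
        f_mid = f(mid)
        if (f_lo > 0) == (f_mid > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return (lo + hi) / 2


# Scan unit-wide intervals between 0 and the trace for sign changes.
trace = sum(C[i][i] for i in range(3))
eigenvalues = []
for k in range(trace):
    if (char_poly(k) > 0) != (char_poly(k + 1) > 0):
        eigenvalues.append(bisect(char_poly, float(k), float(k + 1)))
eigenvalues.sort(reverse=True)

print([round(value, 2) for value in eigenvalues])
```

The values found this way should agree with the roots quoted above to within rounding in the last digit.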

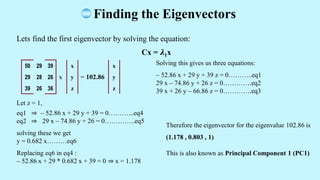

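- 8. Finding the Eigenvectors

  Let's find the first eigenvector by solving the equation $C\mathbf{v} = \lambda_1 \mathbf{v}$ with $\mathbf{v} = (x, y, z)^T$:

  $$
  \begin{bmatrix} 50 & 29 & 39 \\ 29 & 28 & 26 \\ 39 & 26 & 36 \end{bmatrix}
  \begin{bmatrix} x \\ y \\ z \end{bmatrix}
  = 102.86 \begin{bmatrix} x \\ y \\ z \end{bmatrix}
  $$

  Solving this gives us three equations:

  $$
  \begin{aligned}
  -52.86x + 29y + 39z &= 0 \quad \text{(eq1)} \\
  29x - 74.86y + 26z &= 0 \quad \text{(eq2)} \\
  39x + 26y - 66.86z &= 0 \quad \text{(eq3)}
  \end{aligned}
  $$

  Let $z = 1$. Then eq1 and eq2 become:

  $$
  \begin{aligned}
  -52.86x + 29y + 39 &= 0 \quad \text{(eq4)} \\
  29x - 74.86y + 26 &= 0 \quad \text{(eq5)}
  \end{aligned}
  $$

  Solving these we get $y = 0.682x$ (eq6). Replacing eq6 in eq4:

  $$
  -52.86x + 29 \cdot 0.682x + 39 = 0 \;\Rightarrow\; x = 1.178
  $$

  and therefore $y = 0.682 \cdot 1.178 = 0.803$.

  Therefore the eigenvector for the eigenvalue 102.86 is (1.178, 0.803, 1). This is also known as Principal Component 1 (PC1).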

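- 9. Finding the Eigenvectors

  Let's find the second eigenvector by solving the equation $C\mathbf{v} = \lambda_2 \mathbf{v}$ with $\mathbf{v} = (x, y, z)^T$:

  $$
  \begin{bmatrix} 50 & 29 & 39 \\ 29 & 28 & 26 \\ 39 & 26 & 36 \end{bmatrix}
  \begin{bmatrix} x \\ y \\ z \end{bmatrix}
  = 8.06 \begin{bmatrix} x \\ y \\ z \end{bmatrix}
  $$

  Solving this gives us three equations:

  $$
  \begin{aligned}
  41.94x + 29y + 39z &= 0 \quad \text{(eq1)} \\
  29x + 19.94y + 26z &= 0 \quad \text{(eq2)} \\
  39x + 26y + 27.94z &= 0 \quad \text{(eq3)}
  \end{aligned}
  $$

  Let $z = 1$. Then eq1 and eq2 become:

  $$
  \begin{aligned}
  41.94x + 29y + 39 &= 0 \quad \text{(eq4)} \\
  29x + 19.94y + 26 &= 0 \quad \text{(eq5)}
  \end{aligned}
  $$

  Solving these we get $y = -1.714x$ (eq6). Replacing eq6 in eq4:

  $$
  41.94x + 29 \cdot (-1.714)x + 39 = 0 \;\Rightarrow\; x = 5.021
  $$

  and therefore, from eq6, $y \approx -8.605$.

  Therefore the eigenvector for the eigenvalue 8.06 is (5.021, −8.605, 1). This is also known as Principal Component 2 (PC2).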
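The same "set z = 1 and solve the first two equations" procedure can be sketched in Python for both eigenvalues. Cramer's rule is used here for the 2 × 2 system as an illustrative choice (the slides use substitution), and the function name `eigenvector_with_z_one` is hypothetical. The eigenvalues are the rounded values from the slides, so the last digit of the result may differ slightly from the hand calculation, particularly for the second eigenvector.

```python
C = [[50, 29, 39], [29, 28, 26], [39, 26, 36]]


def eigenvector_with_z_one(matrix, lam):
    """Solve the first two rows of (matrix - lam * I) v = 0 with z fixed to 1."""
    # With z = 1 the rows become: a11*x + a12*y = b1 and a21*x + a22*y = b2.
    a11, a12, b1 = matrix[0][0] - lam, matrix[0][1], -matrix[0][2]
    a21, a22, b2 = matrix[1][0], matrix[1][1] - lam, -matrix[1][2]
    det = a11 * a22 - a12 * a21
    x = (b1 * a22 - a12 * b2) / det  # Cramer's rule for x
    y = (a11 * b2 - a21 * b1) / det  # Cramer's rule for y
    return (x, y, 1.0)


pc1 = eigenvector_with_z_one(C, 102.86)
pc2 = eigenvector_with_z_one(C, 8.06)
print("PC1 direction:", pc1)
print("PC2 direction:", pc2)
```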

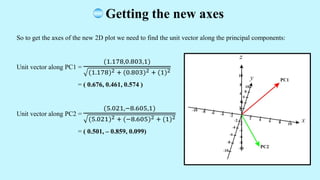

- 10. Getting the new axes

  To get the axes of the new 2D plot we need to find the unit vector along each principal component:

  $$
  \text{Unit vector along PC1} = \frac{(1.178,\ 0.803,\ 1)}{\sqrt{(1.178)^2 + (0.803)^2 + (1)^2}} = (0.676,\ 0.461,\ 0.574)
  $$

  $$
  \text{Unit vector along PC2} = \frac{(5.021,\ -8.605,\ 1)}{\sqrt{(5.021)^2 + (-8.605)^2 + (1)^2}} = (0.501,\ -0.859,\ 0.099)
  $$
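The normalization above is a one-liner per vector. A minimal sketch, with `unit_vector` as a hypothetical helper name:

```python
import math


def unit_vector(v):
    """Divide a vector by its Euclidean length."""
    norm = math.sqrt(sum(component * component for component in v))
    return tuple(component / norm for component in v)


u1 = unit_vector((1.178, 0.803, 1))
u2 = unit_vector((5.021, -8.605, 1))
print([round(c, 3) for c in u1])
print([round(c, 3) for c in u2])
```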

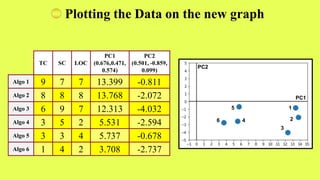

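- 11. Plotting the Data on the new graph

  Each score is the dot product of an algorithm's (TC, SC, LOC) values with the unit vector along the corresponding principal component: PC1 = (0.676, 0.461, 0.574) and PC2 = (0.501, −0.859, 0.099).

  | | TC | SC | LOC | PC1 | PC2 |
  |---|---|---|---|---|---|
  | Algo 1 | 9 | 7 | 7 | 13.329 | -0.811 |
  | Algo 2 | 8 | 8 | 8 | 13.688 | -2.072 |
  | Algo 3 | 6 | 9 | 7 | 12.223 | -4.032 |
  | Algo 4 | 3 | 5 | 2 | 5.481 | -2.594 |
  | Algo 5 | 3 | 3 | 4 | 5.707 | -0.678 |
  | Algo 6 | 1 | 4 | 2 | 3.668 | -2.737 |

  (Scatter plot of the six algorithms, labelled 1 to 6, on the PC1 and PC2 axes.)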
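The projection onto the new axes is a dot product per algorithm and per axis. The sketch below uses the unit vectors from the "Getting the new axes" step and the original (TC, SC, LOC) values, as the table does; the names `dot`, `algorithms`, and `scores` are illustrative.

```python
# Unit vectors along the two retained principal components.
u1 = (0.676, 0.461, 0.574)
u2 = (0.501, -0.859, 0.099)

# (Time Complexity, Space Complexity, Lines of Code) for each algorithm.
algorithms = {
    "Algo 1": (9, 7, 7),
    "Algo 2": (8, 8, 8),
    "Algo 3": (6, 9, 7),
    "Algo 4": (3, 5, 2),
    "Algo 5": (3, 3, 4),
    "Algo 6": (1, 4, 2),
}


def dot(a, b):
    """Dot product of two equal-length sequences."""
    return sum(x * y for x, y in zip(a, b))


# Coordinates of each algorithm on the (PC1, PC2) axes.
scores = {
    name: (dot(values, u1), dot(values, u2))
    for name, values in algorithms.items()
}

for name, (pc1_score, pc2_score) in scores.items():
    print(f"{name}: PC1 = {pc1_score:.3f}, PC2 = {pc2_score:.3f}")
```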

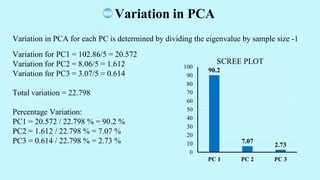

- 12. Variation in PCA

  The variation for each PC is determined by dividing its eigenvalue by (sample size − 1):

  - Variation for PC1 = 102.86 / 5 = 20.572
  - Variation for PC2 = 8.06 / 5 = 1.612
  - Variation for PC3 = 3.07 / 5 = 0.614

  Total variation = 22.798

  Percentage variation:

  - PC1 = 20.572 / 22.798 × 100 % = 90.2 %
  - PC2 = 1.612 / 22.798 × 100 % = 7.07 %
  - PC3 = 0.614 / 22.798 × 100 % = 2.69 %

  (Scree plot: percentage variation for PC 1, PC 2, and PC 3, on a 0 to 100 scale.)
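The variation figures follow directly from the eigenvalues. A short sketch of the calculation, assuming the rounded eigenvalues from the slides and a sample size of 6 (the six algorithms); variable names are illustrative.

```python
eigenvalues = [102.86, 8.06, 3.07]  # rounded values from the eigenvalue step
sample_size = 6                     # six algorithms in the example

# Variation along each principal component.
variations = [lam / (sample_size - 1) for lam in eigenvalues]
total_variation = sum(variations)

# Share of the total variation explained by each component, in percent.
percentages = [100 * v / total_variation for v in variations]

for index, (v, p) in enumerate(zip(variations, percentages), start=1):
    print(f"PC{index}: variation = {v:.3f}, share = {p:.2f} %")
```

Dividing every eigenvalue by the same constant does not change the percentages, so the shares could equally be computed from the eigenvalues themselves.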

- 13. Application

  Principal component analysis is a widely used unsupervised learning method for dimensionality reduction. It is used for:

  1. Finding hidden patterns in high-dimensional data, for example in finance, data mining, and psychology
  2. Image compression
  3. Noise cancellation

- 14. Advantages and Disadvantages

  Advantages:

  1. Less misleading data can improve model accuracy.
  2. Fewer dimensions mean less computation, and less data means that algorithms train faster.
  3. Less data means less storage space is required.
  4. It removes redundant features and noise.
  5. Dimensionality reduction helps us visualize higher-dimensional data in 2D or 3D.

  Disadvantages:

  1. Dimensionality reduction loses some information, which can affect the performance of subsequent training algorithms.
  2. It can be computationally intensive.
  3. The transformed features are often hard to interpret, because each one is a combination of the original independent variables.
