Unit 1 - Introduction to Big Data and hadoop.pptx

- 1. INTRODUCTION TO BIG DATA (UNIT 1) Dr. P. Rambabu, M. Tech., Ph.D., F.I.E. 15-July-2024

- 2. Big Data Analytics and Applications
  - UNIT-I: Introduction to Big Data: Defining Big Data, Big Data Types, Analytics, Examples, Technologies, The Evolution of Big Data Architecture. Basics of Hadoop: Hadoop Architecture, Main Components of the Hadoop Framework, Analyzing Big Data Using Hadoop, Hadoop Clustering.
  - UNIT-II: MapReduce: Analyzing the Data with Unix Tools and Hadoop, Hadoop Streaming, Hadoop Pipes. Hadoop Distributed File System: Design of HDFS, Concepts, Basic File System Operations, Interfaces, Data Flow. Hadoop I/O: Data Integrity, Compression, Serialization, File-Based Data Structures.
  - UNIT-III: Developing a MapReduce Application: Unit Tests with MRUnit, Running Locally on Test Data. How MapReduce Works: Anatomy of a MapReduce Job Run, Classic MapReduce, YARN, Failures in Classic MapReduce and YARN, Job Scheduling, Shuffle and Sort, Task Execution. MapReduce Types and Formats: MapReduce Types, Input Formats, Output Formats.

- 3. Introduction to Big Data
  - UNIT-IV: NoSQL Data Management: Types of NoSQL, Query Model for Big Data, Benefits of NoSQL, MongoDB. HBase: Data Model and Implementations, HBase Clients, HBase Examples, Praxis. Hive: Comparison with Traditional Databases, HiveQL, Tables, Querying Data, User-Defined Functions. Sqoop: Sqoop Connectors, Text and Binary File Formats, Imports, Working with Imported Data. Flume: Apache Flume, Data Sources for Flume, Components of the Flume Architecture.
  - UNIT-V: Pig: Grunt, Comparison with Databases, Pig Latin, User-Defined Functions, Data Processing Operators. Spark: Installation Steps, Distributed Datasets, Shared Variables, Anatomy of a Spark Job Run. Scala: Environment Setup, Basic Syntax, Data Types, Functions, Pattern Matching.

- 5. Defining Big Data
  Big Data refers to datasets so large, fast-moving, or varied that they are difficult to store, process, and analyze with traditional data processing tools. Its primary characteristics are often described as the "3 Vs":
  1. Volume: The amount of data generated is vast and continuously growing.
  2. Velocity: The speed at which new data is generated and must be processed.
  3. Variety: The different forms the data takes (structured, semi-structured, and unstructured).
  Two additional characteristics are sometimes included:
  4. Veracity: The quality and accuracy of the data.
  5. Value: The insights and benefits that can be derived from analyzing the data.

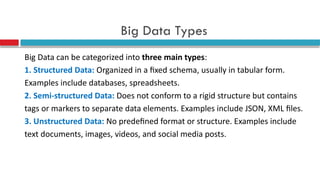

- 6. Big Data Types
  Big Data can be categorized into three main types:
  1. Structured Data: Organized according to a fixed schema, usually in tabular form. Examples include relational database tables and spreadsheets.
  2. Semi-structured Data: Does not conform to a rigid structure but contains tags or markers that separate data elements. Examples include JSON and XML files.
  3. Unstructured Data: Has no predefined format or structure. Examples include text documents, images, videos, and social media posts.
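To make the three types concrete, the short Python sketch below parses one made-up sample of each: a CSV table (structured), a JSON document (semi-structured), and a free-text post (unstructured). The sample records and the function names are hypothetical, chosen only for illustration.

```python
import csv
import io
import json


def parse_structured(text: str) -> list[dict]:
    """Structured: every row follows the same fixed schema (the header columns)."""
    return list(csv.DictReader(io.StringIO(text)))


def parse_semi_structured(text: str) -> list[dict]:
    """Semi-structured: keys (tags) label each value, but records may differ in shape."""
    return json.loads(text)


def parse_unstructured(text: str) -> list[str]:
    """Unstructured: no schema, so we impose one ourselves (here, a list of words)."""
    cleaned = "".join(ch.lower() if ch.isalnum() or ch.isspace() else " " for ch in text)
    return cleaned.split()


# Hypothetical sample inputs, one per data type.
table = "id,name,age\n1,Asha,34\n2,Ravi,29\n"
doc = '[{"id": 1, "name": "Asha", "tags": ["vip"]}, {"id": 2, "name": "Ravi"}]'
post = "Great service, but delivery was slow!"

rows = parse_structured(table)
records = parse_semi_structured(doc)
words = parse_unstructured(post)

print(rows[0])                        # {'id': '1', 'name': 'Asha', 'age': '34'}
print([sorted(r) for r in records])   # [['id', 'name', 'tags'], ['id', 'name']]
print(words)                          # ['great', 'service', 'but', 'delivery', 'was', 'slow']
```

Note that the CSV reader returns every value as a string, and that the two JSON records carry different keys. In a real structured store, the schema would also fix each column's type.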

- 7. Big Data Technologies
  Several technologies and tools are used to manage and analyze Big Data, including:
  1. Hadoop: An open-source framework for the distributed processing of large datasets across clusters of computers.
  2. HDFS (Hadoop Distributed File System): A scalable, fault-tolerant storage system that splits files into blocks and replicates them across the nodes of a cluster.
  3. MapReduce: A programming model for processing large datasets in parallel. A map step turns input records into key-value pairs, and a reduce step aggregates all values that share the same key.
  4. Spark: An open-source unified analytics engine for large-scale data processing, known for its speed (it can keep intermediate data in memory) and ease of use.
  5. NoSQL Databases: Non-relational databases designed to handle large volumes of varied data. Examples include MongoDB, Cassandra, and HBase.
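The MapReduce model is easiest to see in miniature. The following is a single-process Python simulation of the classic word-count job, assuming simple whitespace tokenization. It mimics the map, shuffle-and-sort, and reduce phases, but it is not Hadoop's Java MapReduce API, and the function names are hypothetical.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(line: str) -> Iterator[tuple[str, int]]:
    """Mapper: emit (word, 1) for every word in one input record."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle and sort: group every value emitted for the same key, keys in order."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reducer: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(lines: list[str]) -> dict[str, int]:
    # On a cluster, each input split would be mapped on a different node.
    mapped = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle(mapped)
    # Each key group could likewise be reduced on a different node.
    return dict(reduce_phase(key, values) for key, values in grouped.items())


print(word_count(["big data needs big clusters", "code moves to data"]))
# {'big': 2, 'clusters': 1, 'code': 1, 'data': 2, 'moves': 1, 'needs': 1, 'to': 1}
```

On Hadoop, the input splits would be stored in HDFS and processed by mappers running on many nodes, with the framework moving the intermediate pairs across the network during the shuffle. Here all three phases run in one process.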

- 8. Big Data Technologies
  6. Kafka: A distributed streaming platform used to build real-time data pipelines and streaming applications.
  7. Hive: A data warehousing tool built on top of Hadoop for querying and analyzing large datasets with SQL-like queries (HiveQL).
  8. Pig: A high-level platform for creating MapReduce programs on Hadoop, using a scripting language called Pig Latin.
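Kafka's core idea is a partitioned, append-only log. The sketch below is a toy in-memory model of that idea, not the Kafka client API. The class name ToyTopic is hypothetical, and CRC32 is an assumed stand-in for the partitioner Kafka actually uses.

```python
import zlib


class ToyTopic:
    """In-memory sketch of a partitioned, append-only log (not the Kafka client API)."""

    def __init__(self, num_partitions: int) -> None:
        self.partitions: list[list[tuple[str, str]]] = [[] for _ in range(num_partitions)]

    def produce(self, key: str, value: str) -> tuple[int, int]:
        # Hash the key to pick a partition, so records with the same key stay in order.
        # (Assumption: CRC32 stands in for Kafka's real default partitioner.)
        partition = zlib.crc32(key.encode("utf-8")) % len(self.partitions)
        self.partitions[partition].append((key, value))
        offset = len(self.partitions[partition]) - 1
        return partition, offset

    def consume(self, partition: int, offset: int) -> list[tuple[str, str]]:
        # Reading never deletes records; each consumer tracks its own offset
        # and can re-read (replay) from any earlier position.
        return self.partitions[partition][offset:]


topic = ToyTopic(num_partitions=3)
p1, o1 = topic.produce("sensor-7", "21.5")
p2, o2 = topic.produce("sensor-7", "21.9")
print(p1 == p2, o1, o2)        # True 0 1
print(topic.consume(p1, 1))    # [('sensor-7', '21.9')]
```

Because consumers only advance an offset instead of removing messages, several independent applications can read the same stream, and a consumer can replay older data.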

- 9. Examples of Big Data
  Big Data is used in many industries and applications:
  - Healthcare: Analyzing patient data to improve treatment outcomes, predict epidemics, and reduce costs.
  - Finance: Detecting fraud, managing risk, and personalizing customer services.
  - Retail: Optimizing supply chain management, enhancing the customer experience, and improving inventory management.
  - Telecommunications: Managing network traffic, improving customer service, and preventing churn.
  - Social Media: Analyzing user behavior, performing sentiment analysis, and targeting advertising.

- 10. The Evolution of Big Data Architecture
  The architecture of Big Data systems has evolved to handle the growing complexity and demands of data processing. Key stages include:
  - Batch Processing: Early systems processed large volumes of data in batches using tools such as Hadoop and MapReduce. Data is processed in large chunks at scheduled intervals.
  - Real-time Processing: The need for real-time data analysis led to technologies such as Apache Storm and Apache Spark Streaming, which process data in real time or near real time.
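The difference between the two styles can be shown with a running average over sensor readings. In this hypothetical Python sketch, the batch function waits for the complete list before computing anything, while the streaming generator emits an updated result after every event.

```python
from typing import Iterable, Iterator


def batch_average(readings: list[float]) -> float:
    """Batch: wait until the whole chunk is collected, then process it in one pass."""
    return sum(readings) / len(readings)


def streaming_average(readings: Iterable[float]) -> Iterator[float]:
    """Streaming: update the result incrementally as each event arrives."""
    count, total = 0, 0.0
    for value in readings:
        count += 1
        total += value
        yield total / count  # an up-to-date answer after every event


events = [10.0, 20.0, 30.0, 40.0]  # hypothetical sensor readings
print(batch_average(events))            # 25.0
print(list(streaming_average(events)))  # [10.0, 15.0, 20.0, 25.0]
```

Both approaches reach the same final value; the streaming version trades a single large computation for small constant-time updates, which is what makes low-latency results possible.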

- 11. The Evolution of Big Data Architecture
  Lambda Architecture: A hybrid approach that combines batch and real-time processing to provide comprehensive data analysis. The Lambda architecture consists of:
  - Batch Layer: Stores all historical data and periodically processes it using batch processing.
  - Speed Layer: Processes real-time data streams to provide immediate results.
  - Serving Layer: Merges results from the batch and speed layers to deliver a unified view.
  Kappa Architecture: Simplifies the Lambda architecture by using a single stream processing pipeline for both real-time and historical data; historical results are recomputed by replaying the stored event stream.
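A minimal Python sketch of both architectures, assuming a hypothetical page-view count as the query. In the Lambda version, the batch layer covers historical events, the speed layer covers events that arrived since the last batch run, and the serving layer merges the two views. The Kappa version reaches the same answer by running one streaming function over the full event log.

```python
from collections import Counter

# Hypothetical page-view events: the batch layer has already processed the first
# three; the last two arrived after the most recent batch run.
historical = ["home", "cart", "home"]
recent = ["home", "checkout"]


def batch_layer(all_events: list[str]) -> Counter:
    """Periodically recompute a complete, accurate view from all historical data."""
    return Counter(all_events)


def speed_layer(new_events: list[str]) -> Counter:
    """Count only the events not yet covered by the last batch run."""
    return Counter(new_events)


def serving_layer(batch_view: Counter, realtime_view: Counter) -> Counter:
    """Merge both views so queries see historical and fresh data together."""
    return batch_view + realtime_view


def kappa(event_log: list[str]) -> Counter:
    """Kappa: one streaming pipeline; reprocessing means replaying the same log."""
    view: Counter = Counter()
    for event in event_log:
        view[event] += 1
    return view


lambda_view = serving_layer(batch_layer(historical), speed_layer(recent))
kappa_view = kappa(historical + recent)
print(lambda_view == kappa_view)  # True
print(lambda_view["home"])        # 3
```

The sketch also hints at the trade-off: Lambda keeps the same counting logic in two layers that must stay consistent, while Kappa maintains a single code path and handles reprocessing by replaying the log.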

- 12. Evolution of Big Data Architecture

- 13. Evolution of Big Data and its ecosystem
  Big Data and its ecosystem have undergone significant transformations over the years. Here is a brief overview:
  Early 2000s:
  - Big Data emerges as a term to describe large, complex datasets.
  - MapReduce (2004) and Hadoop (2005) are developed to process large datasets.
  2005-2010:
  - Hadoop becomes the foundation for Big Data processing.
  - NoSQL databases such as Cassandra (2008), MongoDB (2009), and Couchbase (2010) emerge.
  - Data warehousing and business intelligence tools adapt to Big Data.
  2010-2015:
  - The Hadoop ecosystem expands with tools such as Pig (2010), Hive (2010), and HBase (2010).
  - Spark (2010) and Flink (2011) emerge as in-memory processing engines.
  - Data science and machine learning gain prominence.

- 14. 2015-2020:
  - Cloud-based Big Data services such as AWS EMR (2009), Google Cloud Dataproc (2015), and Azure HDInsight (2013) become popular.
  - Containers and orchestration tools such as Docker (2013) and Kubernetes (2014) simplify deployment.
  - Streaming data processing with Kafka (2011), Storm (2011), and Flink gains traction.
  2020-present:
  - AI and machine learning continue to drive Big Data innovation.
  - Cloud-native architectures and serverless computing gain popularity.
  - Data governance, security, and ethics become increasingly important.
  - Emerging trends include edge computing, IoT, and Explainable AI (XAI).

- 15. The Big Data ecosystem has expanded to include:
  1. Data ingestion tools, e.g., Flume (2011), NiFi (2014)
  2. Data processing frameworks, e.g., Hadoop (2005), Spark (2010), Flink (2014)
  3. NoSQL databases, e.g., HBase (2008), Cassandra (2008), MongoDB (2009), Couchbase (2011)
  4. Data warehousing and BI tools, e.g., Hive (2008), Impala (2012), Tableau (2003), Presto (2013), Spark SQL (2014), Power BI (2015)
  5. Streaming data processing, e.g., Flink, Kafka (2011), Storm (2011), Spark Streaming (2013)
  6. Machine learning and AI frameworks, e.g., Scikit-learn (2010), TensorFlow (2015), PyTorch (2016)
  7. Cloud-based Big Data services, e.g., AWS EMR (2009), Azure HDInsight (2013), Google Cloud Dataproc (2016)
  8. Containers and orchestration tools, e.g., Docker (2013), Kubernetes (2014)

- 16. Evolution of Big Data and its ecosystem

- 17. Dr. Rambabu Palaka Professor School of Engineering Malla Reddy University, Hyderabad Mobile: +91-9652665840 Email: drrambabu@mallareddyuniversity.ac.in