Workloads

What a workload is, the various types of workloads, and how to select workloads for different systems according to the applicable workload criteria.

Daniel dauwe ece 561 Trial 3cinedan

Ěý

This document outlines a project that tested the performance and power usage of applications running simultaneously on multicore processors. It discusses benchmarking tools like performance counters, PAPI, and the HPC Toolkit. Tests were run on AMD and Intel processors using C-Ray and Ramspeed applications pinned to specific cores. Control tests showed baseline performance for each application alone on each core. Interference tests examined increases in runtime, cache misses, and power when applications shared the processor. Results showed interference effects. Future work could test more applications and cores simultaneously to better understand multicore interference.Daniel dauwe ece 561 Benchmarking Results

Daniel dauwe ece 561 Benchmarking Resultscinedan

Ěý

This document outlines a project that tested the performance and power usage of applications running simultaneously on multicore processors. It discusses benchmarking tools like performance counters, PAPI, and the HPC Toolkit. Tests were run on AMD and Intel processors using C-Ray and Ramspeed applications pinned to specific cores. Control tests showed baseline performance for each application alone on each core. Interference tests examined increases in runtime, cache misses, and power when applications shared the processor. Results showed interference effects. Future work could test more applications and cores simultaneously to better understand multicore interference.Daniel dauwe ece 561 Benchmarking Results Trial 2

Daniel dauwe ece 561 Benchmarking Results Trial 2cinedan

Ěý

This document outlines a project that tested the performance and power usage of applications running simultaneously on multicore processors. It discusses benchmarking tools like performance counters, PAPI, and the HPC Toolkit. Tests were run on AMD and Intel processors using C-Ray and Ramspeed applications pinned to specific cores. Control tests showed baseline performance for each application alone on each core. Interference tests showed increases in runtime, cache misses, and power when applications shared the processor. Future tests are proposed to analyze interference between more applications and cores.Unit 3 part2

Unit 3 part2Karthik Vivek

Ěý

Program-level performance analysis.

Optimizing for:

Execution time.

Energy/power.

Program size.

Program validation and testingUnit 3 part2

Unit 3 part2Karthik Vivek

Ěý

Program-level performance analysis.

Optimizing for:

Execution time.

Energy/power.

Program size.

Program validation and testingPerformance testing

Performance testing ekatechserv

Ěý

EKA Testing Services is a technology company that provides testing and knowledge management services. It was founded over 15 years ago by an industry pioneer in software testing. EKA has developed test frameworks and tools to help customers improve business performance through more efficient testing. These include a cloud-based test platform and frameworks for functionality and automation testing using open-source tools. EKA also provides performance testing services to analyze how software performs under different loads and identify any issues.Leveraging HP Performance Center

Leveraging HP Performance CenterMartin Spier

Ěý

Martin Spier and Rex Black presented on leveraging HP Performance Center at Expedia. Rex introduced himself as a performance consultant and Martin as a performance engineer at Expedia. They discussed how performance engineering aims to answer questions about an application's performance. Performance Center was highlighted as a tool that allows sharing resources across teams to improve efficiency and enable distributed testing. Expedia leverages Performance Center's centralized management and reusable test artifacts to test applications early and often across their global, agile teams.Technical Testing Introduction

Technical Testing Introductionextentconf Tsoy

Ěý

The document discusses technical testing of trading systems. It outlines the key approaches to performance, stability, and operability testing. The main tasks involved are using test tools to emulate real loads and conditions, preparing test environments and load shapes based on production data, executing automated tests, and thoroughly analyzing results to validate performance under stress.Technical Testing Introduction

Technical Testing IntroductionIosif Itkin

Ěý

The document discusses technical testing of trading systems. It outlines the key approaches to performance, stability, and operability testing. The main tasks involved are using test tools to emulate real loads and conditions, preparing test environments and load shapes based on production data, executing automated tests, and thoroughly analyzing results to validate findings. Technical testing helps validate systems' non-functional requirements around performance, stability, and handling high volumes of data and users.Neotys PAC 2018 - Ramya Ramalinga Moorthy

Neotys PAC 2018 - Ramya Ramalinga MoorthyNeotys_Partner

Ěý

This document discusses best practices for performance testing in Agile and DevOps environments. It recommends implementing early and continuous performance testing as part of CI/CD pipelines using tools like JMeter and WebPageTest. Additionally, it stresses the importance of system-level performance tests during targeted sprints and prior to production deployments. The use of application performance management tools to monitor tests and production is also highlighted to facilitate quick feedback loops and issue resolution.Response time difference analysis of performance testing tools

Response time difference analysis of performance testing toolsSpoorthi Sham

Ěý

Computer Systems Performance Analysis - Response time difference analysis of performance testing toolsJMeter - Performance testing your webapp

JMeter - Performance testing your webappAmit Solanki

Ěý

JMeter is an open-source tool for performance and load testing web applications. It can test applications by simulating heavy loads to determine stability and identify performance bottlenecks. JMeter simulates multiple users accessing web services concurrently to model expected usage during peak periods. It provides instant visual feedback and allows tests to be run interactively or in batch mode for later analysis. Tests are composed of thread groups, samplers, timers, listeners and other elements to control test flow and capture response data. JMeter also supports distributed testing across multiple servers to simulate very large loads.Performance Test şÝşÝߣshow Recent

Performance Test şÝşÝߣshow RecentFuture Simmons

Ěý

Automated performance testing simulates real users to determine an application's speed, scalability, and stability under load before deployment. It helps detect bottlenecks, ensures the system can handle peak load, and provides confidence that the application will work as expected on launch day. The process involves evaluating user expectations and system limits, creating test scripts, executing load, stress, and duration tests while monitoring servers, and analyzing results to identify areas for improvement.Performance Test şÝşÝߣshow R E C E N T

Performance Test şÝşÝߣshow R E C E N TFuture Simmons

Ěý

Automated performance testing simulates real users to determine an application's speed, scalability, and stability under load before deployment. It helps detect bottlenecks, ensures the system can handle peak usage, and provides confidence that the application will work as expected on launch day. The process involves evaluating user needs, drafting test scripts, executing different types of load tests, and monitoring servers and applications to identify performance issues or degradation over time.Fundamentals Performance Testing

Fundamentals Performance TestingBhuvaneswari Subramani

Ěý

Performance testing is one of the kinds of Non-Functional Testing. Building any successful product hinges on its performance. User experience is the deciding unit of fruitful application and Performance testing helps to reach there. You will learn the key concept of performance testing, how the IT industry gets benefitted, what are the different types of Performance Testing, their lifecycle, and much more.Performance testing

Performance testingChalana Kahandawala

Ěý

The document provides an overview of performance testing, including:

- Defining performance testing and comparing it to functional testing

- Explaining why performance testing is critical to evaluate a system's scalability, stability, and ability to meet user expectations

- Describing common types of performance testing like load, stress, scalability, and endurance testing

- Identifying key performance metrics and factors that affect software performance

- Outlining the performance testing process from planning to scripting, testing, and result analysis

- Introducing common performance testing tools and methodologies

- Providing examples of performance test scenarios and best practices for performance testingPerformance testing basics

Performance testing basicsCharu Anand

Ěý

This document discusses performance testing, which determines how a system responds under different workloads. It defines key terms like response time and throughput. The performance testing process is outlined as identifying the test environment and criteria, planning tests, implementing the test design, executing tests, and analyzing results. Common metrics that are monitored include response time, throughput, CPU utilization, memory usage, network usage, and disk usage. Performance testing helps evaluate systems, identify bottlenecks, and ensure performance meets criteria before production.Your score increases as you pick a category, fill out a long description and ...

Your score increases as you pick a category, fill out a long description and ...SENTHILR44

Ěý

tep-07.RelQ.pptRecently uploaded (20)

The Constitution, Government and Law making bodies .

The Constitution, Government and Law making bodies .saanidhyapatel09

Ěý

This PowerPoint presentation provides an insightful overview of the Constitution, covering its key principles, features, and significance. It explains the fundamental rights, duties, structure of government, and the importance of constitutional law in governance. Ideal for students, educators, and anyone interested in understanding the foundation of a nation’s legal framework.

TLE 7 - 3rd Topic - Hand Tools, Power Tools, Instruments, and Equipment Used ...

TLE 7 - 3rd Topic - Hand Tools, Power Tools, Instruments, and Equipment Used ...RizaBedayo

Ěý

Hand Tools, Power Tools, and Equipment in Industrial ArtsAdventure Activities Final By H R Gohil Sir

Adventure Activities Final By H R Gohil SirGUJARATCOMMERCECOLLE

Ěý

Adventure Activities Final By H R Gohil SirHow to use Init Hooks in Odoo 18 - Odoo şÝşÝߣs

How to use Init Hooks in Odoo 18 - Odoo şÝşÝߣsCeline George

Ěý

In this slide, we’ll discuss on how to use Init Hooks in Odoo 18. In Odoo, Init Hooks are essential functions specified as strings in the __init__ file of a module.Computer Application in Business (commerce)

Computer Application in Business (commerce)Sudar Sudar

Ěý

The main objectives

1. To introduce the concept of computer and its various parts. 2. To explain the concept of data base management system and Management information system.

3. To provide insight about networking and basics of internet

Recall various terms of computer and its part

Understand the meaning of software, operating system, programming language and its features

Comparing Data Vs Information and its management system Understanding about various concepts of management information system

Explain about networking and elements based on internet

1. Recall the various concepts relating to computer and its various parts

2 Understand the meaning of software’s, operating system etc

3 Understanding the meaning and utility of database management system

4 Evaluate the various aspects of management information system

5 Generating more ideas regarding the use of internet for business purpose APM People Interest Network Conference - Tim Lyons - The neurological levels ...

APM People Interest Network Conference - Tim Lyons - The neurological levels ...Association for Project Management

Ěý

APM People Interest Network Conference 2025

-Autonomy, Teams and Tension: Projects under stress

-Tim Lyons

-The neurological levels of

team-working: Harmony and tensions

With a background in projects spanning more than 40 years, Tim Lyons specialised in the delivery of large, complex, multi-disciplinary programmes for clients including Crossrail, Network Rail, ExxonMobil, Siemens and in patent development. His first career was in broadcasting, where he designed and built commercial radio station studios in Manchester, Cardiff and Bristol, also working as a presenter and programme producer. Tim now writes and presents extensively on matters relating to the human and neurological aspects of projects, including communication, ethics and coaching. He holds a Master’s degree in NLP, is an NLP Master Practitioner and International Coach. He is the Deputy Lead for APM’s People Interest Network.

Session | The Neurological Levels of Team-working: Harmony and Tensions

Understanding how teams really work at conscious and unconscious levels is critical to a harmonious workplace. This session uncovers what those levels are, how to use them to detect and avoid tensions and how to smooth the management of change by checking you have considered all of them.Rass MELAI : an Internet MELA Quiz Finals - El Dorado 2025

Rass MELAI : an Internet MELA Quiz Finals - El Dorado 2025Conquiztadors- the Quiz Society of Sri Venkateswara College

Ěý

Finals of Rass MELAI : a Music, Entertainment, Literature, Arts and Internet Culture Quiz organized by Conquiztadors, the Quiz society of Sri Venkateswara College under their annual quizzing fest El Dorado 2025. How to attach file using upload button Odoo 18

How to attach file using upload button Odoo 18Celine George

Ěý

In this slide, we’ll discuss on how to attach file using upload button Odoo 18. Odoo features a dedicated model, 'ir.attachments,' designed for storing attachments submitted by end users. We can see the process of utilizing the 'ir.attachments' model to enable file uploads through web forms in this slide.QuickBooks Desktop to QuickBooks Online How to Make the Move

QuickBooks Desktop to QuickBooks Online How to Make the MoveTechSoup

Ěý

If you use QuickBooks Desktop and are stressing about moving to QuickBooks Online, in this webinar, get your questions answered and learn tips and tricks to make the process easier for you.

Key Questions:

* When is the best time to make the shift to QuickBooks Online?

* Will my current version of QuickBooks Desktop stop working?

* I have a really old version of QuickBooks. What should I do?

* I run my payroll in QuickBooks Desktop now. How is that affected?

*Does it bring over all my historical data? Are there things that don't come over?

* What are the main differences between QuickBooks Desktop and QuickBooks Online?

* And moreHow to Configure Flexible Working Schedule in Odoo 18 Employee

How to Configure Flexible Working Schedule in Odoo 18 EmployeeCeline George

Ěý

In this slide, we’ll discuss on how to configure flexible working schedule in Odoo 18 Employee module. In Odoo 18, the Employee module offers powerful tools to configure and manage flexible working schedules tailored to your organization's needs.N.C. DPI's 2023 Language Diversity Briefing

N.C. DPI's 2023 Language Diversity BriefingMebane Rash

Ěý

The number of languages spoken in NC public schools.The Story Behind the Abney Park Restoration Project by Tom Walker

The Story Behind the Abney Park Restoration Project by Tom WalkerHistory of Stoke Newington

Ěý

Presented at the 24th Stoke Newington History Talks event on 27th Feb 2025

https://stokenewingtonhistory.com/stoke-newington-history-talks/Blind Spots in AI and Formulation Science Knowledge Pyramid (Updated Perspect...

Blind Spots in AI and Formulation Science Knowledge Pyramid (Updated Perspect...Ajaz Hussain

Ěý

This presentation delves into the systemic blind spots within pharmaceutical science and regulatory systems, emphasizing the significance of "inactive ingredients" and their influence on therapeutic equivalence. These blind spots, indicative of normalized systemic failures, go beyond mere chance occurrences and are ingrained deeply enough to compromise decision-making processes and erode trust.

Historical instances like the 1938 FD&C Act and the Generic Drug Scandals underscore how crisis-triggered reforms often fail to address the fundamental issues, perpetuating inefficiencies and hazards.

The narrative advocates a shift from reactive crisis management to proactive, adaptable systems prioritizing continuous enhancement. Key hurdles involve challenging outdated assumptions regarding bioavailability, inadequately funded research ventures, and the impact of vague language in regulatory frameworks.

The rise of large language models (LLMs) presents promising solutions, albeit with accompanying risks necessitating thorough validation and seamless integration.

Tackling these blind spots demands a holistic approach, embracing adaptive learning and a steadfast commitment to self-improvement. By nurturing curiosity, refining regulatory terminology, and judiciously harnessing new technologies, the pharmaceutical sector can progress towards better public health service delivery and ensure the safety, efficacy, and real-world impact of drug products.A PPT Presentation on The Princess and the God: A tale of ancient India by A...

A PPT Presentation on The Princess and the God: A tale of ancient India by A...Beena E S

Ěý

A PPT Presentation on The Princess and the God: A tale of ancient India by Aaron ShepardAPM People Interest Network Conference - Tim Lyons - The neurological levels ...

APM People Interest Network Conference - Tim Lyons - The neurological levels ...Association for Project Management

Ěý

Rass MELAI : an Internet MELA Quiz Finals - El Dorado 2025

Rass MELAI : an Internet MELA Quiz Finals - El Dorado 2025Conquiztadors- the Quiz Society of Sri Venkateswara College

Ěý

Workloads

- 1. PRESENTED BY: NOQAIYA ALI M.TECH CSE 1ST YEAR

- 2. A workload is anything a computer is asked to do. Test workload: any workload used to analyze performance. Real workload: any workload observed during normal operations. Synthetic workload: a workload created for controlled testing.
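A synthetic workload is usually produced by a generator whose parameters the analyst controls. The following is a minimal sketch of one such generator. It assumes requests arrive as a Poisson process, with exponential inter-arrival times, and that request types are drawn from a fixed frequency table. The request type names, the rate, and the `synthetic_workload` function are hypothetical illustrations. Fixing the seed makes the workload exactly repeatable, which is one reason synthetic workloads suit controlled testing.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    timestamp: float  # arrival time in seconds since the start of the test
    kind: str         # request type (hypothetical names)


def synthetic_workload(n, rate, mix, seed=0):
    """Generate n requests with exponential inter-arrival times.

    rate: mean arrivals per second (assumes Poisson arrivals).
    mix:  mapping of request type -> relative frequency.
    seed: fixed seed so the same workload can be replayed exactly.
    """
    rng = random.Random(seed)
    kinds = list(mix)
    weights = [mix[k] for k in kinds]
    t = 0.0
    requests = []
    for _ in range(n):
        t += rng.expovariate(rate)              # time until the next arrival
        kind = rng.choices(kinds, weights)[0]   # draw a type according to the mix
        requests.append(Request(t, kind))
    return requests


if __name__ == "__main__":
    mix = {"read": 0.7, "write": 0.2, "delete": 0.1}  # hypothetical request mix
    for r in synthetic_workload(5, rate=10.0, mix=mix, seed=42):
        print(f"{r.timestamp:8.4f}s  {r.kind}")
```

Calling the generator twice with the same seed yields identical request lists. A real workload, by contrast, cannot be replayed this way.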

- 3. TEST WORKLOADS FOR COMPUTER SYSTEMS: addition instruction; instruction mixes; kernels; synthetic programs; application benchmarks.

- 4. ADDITION INSTRUCTION: In early computers, the addition instruction was the sole workload used, and the addition time was the sole performance metric.

- 5. INSTRUCTION MIXES: A specification of various instructions coupled with their usage frequencies.
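An instruction mix is typically used to compute a frequency-weighted average instruction time, and from that an instruction rate. The sketch below assumes each instruction class is described by a (frequency, time in microseconds) pair. The class names and timings are made up for illustration and do not come from any published mix.

```python
def average_instruction_time(mix):
    """mix: mapping of instruction class -> (frequency, time in microseconds).

    Returns the frequency-weighted mean time per instruction. Frequencies
    are normalised, so they need not sum exactly to 1.
    """
    total_freq = sum(freq for freq, _ in mix.values())
    return sum(freq * time for freq, time in mix.values()) / total_freq


def mips(avg_time_us):
    # One instruction every avg_time_us microseconds
    # => 1 / avg_time_us million instructions per second.
    return 1.0 / avg_time_us


if __name__ == "__main__":
    # Hypothetical mix: classes, frequencies and timings are illustrative only.
    mix = {
        "load/store": (0.40, 0.5),
        "add/subtract": (0.35, 0.25),
        "multiply": (0.15, 1.0),
        "branch": (0.10, 0.5),
    }
    t = average_instruction_time(mix)
    print(f"average time = {t:.4f} us, rate = {mips(t):.2f} MIPS")
```

A mix captures only instruction frequencies. It says nothing about effects such as caching or pipelining, so the computed rate is at best an estimate.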

- 6. KERNELS Kernel = nucleus Commonly used kernels: Sieve, Puzzle, Tree Searching, Ackerman’s Function and Sorting

- 7. SYNTHETIC PROGRAMS To measure I/O performance lead analysts ⇒ Exerciser loops Exerciser loops can be quickly developed and given to different vendors

- 8. APPLICATION BENCHMARKS: Application dependent. Example: Debit-Credit Benchmark.
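The Debit-Credit Benchmark models banking transactions, and throughput is reported in transactions per second. The real benchmark runs against a database under a response-time requirement. This in-memory sketch only illustrates the shape of one transaction: update the account, teller, and branch balances, then log a history record. The `Bank` class, table sizes, and amount ranges are all hypothetical.

```python
import random
import time
from dataclasses import dataclass, field


@dataclass
class Bank:
    # Simplified in-memory stand-ins for the account, teller and branch tables.
    accounts: dict = field(default_factory=dict)
    tellers: dict = field(default_factory=dict)
    branches: dict = field(default_factory=dict)
    history: list = field(default_factory=list)

    def debit_credit(self, account, teller, branch, delta):
        """Apply delta to the account, teller and branch balances and
        append a history record. Returns the new account balance."""
        self.accounts[account] = self.accounts.get(account, 0) + delta
        self.tellers[teller] = self.tellers.get(teller, 0) + delta
        self.branches[branch] = self.branches.get(branch, 0) + delta
        self.history.append((account, teller, branch, delta))
        return self.accounts[account]


def measure_tps(bank, n, n_accounts=1000, n_tellers=10, n_branches=1, seed=0):
    """Run n random transactions and return transactions per second."""
    rng = random.Random(seed)
    start = time.perf_counter()
    for _ in range(n):
        bank.debit_credit(rng.randrange(n_accounts), rng.randrange(n_tellers),
                          rng.randrange(n_branches), rng.randint(-100, 100))
    elapsed = time.perf_counter() - start
    return n / elapsed if elapsed > 0 else float("inf")


if __name__ == "__main__":
    print(f"{measure_tps(Bank(), 100_000):.0f} transactions/s")
```

Every transaction updates all three balances by the same amount, so their totals must stay equal. That makes a cheap consistency check after a run.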

- 10. Some well-known Benchmarks Sieve Used to compare, Microprocessors, Personal computers and high level languages Ackerman’s Function Used to assess the efficiency of the procedure calling mechanism in ALGOL-like languages.

- 11. Some well-known Benchmarks: Whetstone; LINPACK; Dhrystone; Lawrence Livermore Loops; Debit-Credit Benchmark.

- 12. SPEC Benchmark Suite: The Systems Performance Evaluation Cooperative (SPEC) is a nonprofit corporation formed by leading computer vendors to develop a standardized set of benchmarks.

- 13. Some well-known Benchmarks: GCC; Espresso; Spice 2g6; Doduc; NASA7; LI; Eqntott; Matrix300; Fpppp; Tomcatv.
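SPEC condenses results across the programs in its suite into a single summary figure. In the first SPEC release, each program's SPECratio was the reference machine's run time divided by the measured run time. The single-number SPECmark was the geometric mean of those ratios. The sketch below computes that summary. The run times in the example are made-up numbers, not published SPEC reference times.

```python
import math


def spec_ratio(reference_time, measured_time):
    """Speed relative to the reference machine (higher is better)."""
    return reference_time / measured_time


def geometric_mean(values):
    """Geometric mean computed via logarithms to avoid overflow."""
    values = list(values)
    if not values or any(v <= 0 for v in values):
        raise ValueError("geometric mean needs one or more positive values")
    return math.exp(sum(math.log(v) for v in values) / len(values))


if __name__ == "__main__":
    # Made-up (reference_time, measured_time) pairs in seconds.
    runs = {"gcc": (1000.0, 100.0), "espresso": (2000.0, 50.0), "tomcatv": (3000.0, 600.0)}
    ratios = {name: spec_ratio(ref, t) for name, (ref, t) in runs.items()}
    for name, r in ratios.items():
        print(f"{name:10s} {r:6.2f}")
    print(f"summary (geometric mean): {geometric_mean(ratios.values()):.2f}")
```

The geometric mean is used because the ratio of two systems' geometric means equals the geometric mean of their per-program ratios. The ranking therefore does not depend on which machine serves as the reference.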

- 15. Major Considerations in Selecting a Workload: services exercised; level of detail; loading level; impact of other components; timeliness.

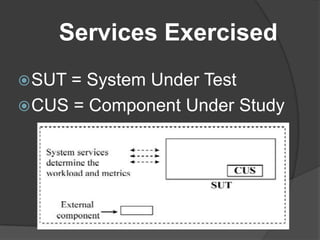

- 16. Services Exercised: SUT = System Under Test; CUS = Component Under Study.

- 17. Level of Detail: most frequent request; frequency of request types; time-stamped sequence of requests; average resource demand; distribution of resource demands.
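The levels of detail range from a single request type to a full trace, and each coarser level can be derived from the time-stamped sequence. This sketch assumes a hypothetical trace format of (timestamp, request type, CPU demand in ms) tuples. The field layout and the histogram bin width are illustrative choices.

```python
import math
from collections import Counter
from statistics import mean


def summarize(trace, bin_ms=5.0):
    """trace: list of (timestamp, request_type, cpu_demand_ms) tuples.

    Returns the workload described at each level of detail listed on the slide.
    """
    if not trace:
        raise ValueError("empty trace")
    counts = Counter(kind for _, kind, _ in trace)
    total = len(trace)
    demands = [d for _, _, d in trace]
    return {
        "most_frequent_request": counts.most_common(1)[0][0],
        "request_type_frequencies": {k: c / total for k, c in counts.items()},
        "time_stamped_sequence": sorted(trace),  # the finest level: the trace itself
        "average_demand_ms": mean(demands),
        # Empirical distribution: bucket i covers [i*bin_ms, (i+1)*bin_ms).
        "demand_histogram": Counter(math.floor(d / bin_ms) for d in demands),
    }


if __name__ == "__main__":
    trace = [(0.0, "read", 2.0), (0.5, "write", 5.0), (1.0, "read", 3.0), (1.5, "read", 11.0)]
    for level, value in summarize(trace).items():
        print(level, value)
```

Moving down the list trades simplicity for representativeness. The single most frequent request is easy to reproduce, while the full trace preserves ordering and burstiness.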

- 19. Timeliness: Difficult to achieve. New systems imply new workloads. Users tend to optimize their demand.