How to Use Google Colaboratory

This deck explains how to use Google Colaboratory, limited to what is needed for use in class.

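- 2. What is Colaboratory?
  - An environment for running Python programs in the browser
  - A Jupyter Notebook environment that Google hosts online
  - Anyone with a PC and an internet connection can write programs
  - Numerical computing and machine learning libraries are preinstalled
  - A fresh virtual environment is provided each time a notebook is opened, so it is safe to use
  - Missing libraries can be installed additionally
  - A Google account is required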
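
To see which libraries are already present in the current environment, a check along the following lines may help. This is a minimal sketch: the helper name `is_installed` and the module names in the list are chosen only for illustration and do not come from the slides.

```python
import importlib.util


def is_installed(module_name: str) -> bool:
    """Return True if the module can be found in the current environment."""
    return importlib.util.find_spec(module_name) is not None


# The module names below are only examples picked for this sketch.
for name in ["numpy", "pandas", "some_missing_library"]:
    if is_installed(name):
        print(f"{name}: available")
    else:
        # In a Colaboratory cell, a missing package can usually be added
        # with a line such as:  !pip install <package-name>
        print(f"{name}: missing")
```

Because a new virtual environment is provided each time a notebook is opened, any additionally installed library would need to be installed again in a new session.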

- 3. Getting started
  - Go to the URL below
    - https://colab.research.google.com/?hl=ja
  - Log in to Google if you are not already logged in

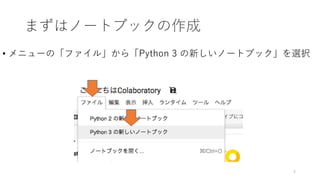

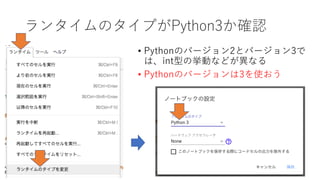

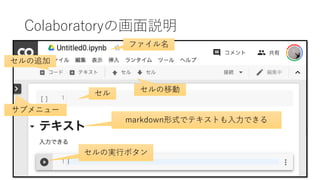

- 4. First, create a notebook
  - From the "File" menu (「ファイル」), select "New Python 3 notebook" (「Python 3 の新しいノートブック」)

- 7. Where notebooks are saved
  - By default, notebooks are saved in a folder named Colab Notebooks under My Drive in Google Drive
  - However, you can move a notebook to, or create one in, any location
  - Right-click → More → Colaboratory

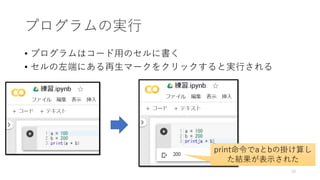

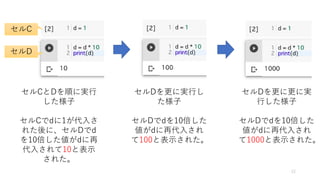

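- 11. How cells are executed
  - Running cell A does not run cell B
  - Execution happens one cell at a time
  - In Python, use the `print()` function to display output
  - In Jupyter Notebook, the return value of the last expression in a cell is displayed
  - When a cell runs, its results, such as variables, are kept in memory
  - Running cell A keeps the variables `a` and `b`
  - Retained variables can be referenced from other cells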
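
The behavior above can be sketched as two cells. The cell contents are hypothetical (the original figure is not available); only the variable names `a` and `b` come from the slide. The `# --- Cell ... ---` comments mark where each notebook cell would begin.

```python
# --- Cell A ---
a = 1
b = 2
print(a + b)  # print() displays the value explicitly

# --- Cell B ---
# a and b are still in memory after Cell A has run,
# so this cell can use them without redefining them.
c = a * 10 + b
c  # in a notebook, the value of the last expression in a cell is displayed
```

When run as a plain script instead of in a notebook, the final bare `c` displays nothing, which is why `print()` is needed outside the notebook.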

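- 13. Notes on using Colaboratory (Jupyter Notebook)
  - You can write a long, complex process in small pieces while checking the results
  - This is very convenient, but:
    - Cells run even if they are not executed in order from the top, whether you meant that or not
    - Results tend to depend on other cells, and reusing a variable name overwrites its contents, so the code may not behave as intended
  - As a rule, keep each notebook to a single topic
  - There are ways to run your program locally, but they are omitted here
    - Ask me if you need this for graduation research or similar work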
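
The overwriting problem can be reproduced in a few lines. This is an illustrative sketch only: the function `summarize` and the variable `scores` are hypothetical, and the three "cells" are simulated in one script.

```python
# --- Cell 1: a helper and some data ---
def summarize(values):
    """Return the mean of a list of numbers."""
    return sum(values) / len(values)


scores = [70, 85, 90]

# --- Cell 2: uses the data from Cell 1 ---
mean_before = summarize(scores)
print(mean_before)

# --- Cell 3: a later cell reuses the same name for different data ---
scores = ["a", "b"]

# Re-running Cell 2 after Cell 3 now fails, because `scores`
# no longer holds numbers.
try:
    summarize(scores)
except TypeError as exc:
    error_message = str(exc)
    print("Re-running Cell 2 failed:", error_message)
```

Using distinct variable names per purpose, and re-running the notebook from the top before trusting a result, are two simple ways to reduce this risk.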

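- 14. Uploading files to Colaboratory, method 1
  - To work with a file in Colaboratory, you must upload it first
  - Write the code below into a cell and run it
    - `# show upload dialog`
    - `from google.colab import files`
    - `uploaded = files.upload()`
  - Running it makes a file upload control available
  - Click the "Choose Files" button that appears and upload the file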

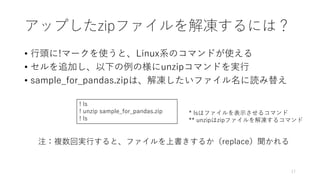
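
After the upload finishes, `files.upload()` returns a dictionary that maps each file name to its contents as bytes. The sketch below shows one way to inspect it. The helper `describe_uploads` and the stand-in data used outside Colaboratory are assumptions added for illustration.

```python
def describe_uploads(uploaded: dict) -> list:
    """Return one 'name (N bytes)' string per uploaded file."""
    return [f"{name} ({len(content)} bytes)" for name, content in uploaded.items()]


try:
    from google.colab import files  # only available inside Colaboratory
except ImportError:
    files = None

if files is not None:
    uploaded = files.upload()  # shows the upload dialog
else:
    # Hypothetical stand-in so the sketch also runs outside Colaboratory.
    uploaded = {"example.csv": b"x,y\n1,2\n"}

for line in describe_uploads(uploaded):
    print(line)
```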

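- 17. How do I extract an uploaded zip file?
  - Starting a line with `!` lets you run Linux commands
  - Add a cell and run the `unzip` command as in the example below
  - Replace `sample_for_pandas.zip` with the name of the file you want to extract
    - `! ls`
    - `! unzip sample_for_pandas.zip`
    - `! ls`
  - Note: if you run it more than once, you are asked whether to overwrite (replace) the files
  - `ls` is a command that lists files
  - `unzip` is a command that extracts zip files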

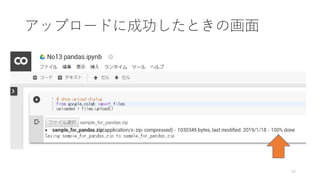
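
As an alternative to the `unzip` shell command, the same extraction can be done with Python's standard `zipfile` module. This is a swapped-in technique, not the method shown on the slide. The helper `extract_zip` and the demo archive contents are hypothetical, and the demo builds its own small archive so that it runs anywhere.

```python
import tempfile
import zipfile
from pathlib import Path


def extract_zip(zip_path, dest_dir):
    """Extract zip_path into dest_dir and return the sorted member names."""
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(dest_dir)
        return sorted(archive.namelist())


# Self-contained demo: create a tiny archive, then extract it.
with tempfile.TemporaryDirectory() as tmp:
    tmp = Path(tmp)
    zip_path = tmp / "sample_for_pandas.zip"
    with zipfile.ZipFile(zip_path, "w") as archive:
        archive.writestr("sample_for_pandas/data.csv", "x,y\n1,2\n")

    extracted = extract_zip(zip_path, tmp)
    print(extracted)
    demo_file_exists = (tmp / "sample_for_pandas" / "data.csv").exists()
```

Unlike `unzip`, `extractall()` does not ask before overwriting existing files, so repeated runs replace them silently.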

- 18. The screen after a successful extraction
  - A directory* named `sample_for_pandas` is created
  - The data is stored inside `sample_for_pandas`
  - `ls ./sample_for_pandas -all` lists the files in the folder
  - *On Linux systems, a folder is called a directory.
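
The folder contents can also be checked from Python rather than with `ls`. The following is a minimal sketch using the standard `pathlib` module. The helper `list_files` and the demo file are hypothetical, and the demo creates its own `sample_for_pandas` folder in a temporary location.

```python
import tempfile
from pathlib import Path


def list_files(directory):
    """Return (name, size in bytes) for each entry, sorted by name."""
    return sorted((p.name, p.stat().st_size) for p in Path(directory).iterdir())


# Self-contained demo with a temporary folder.
with tempfile.TemporaryDirectory() as tmp:
    folder = Path(tmp) / "sample_for_pandas"
    folder.mkdir()
    (folder / "data.csv").write_bytes(b"x,y\n1,2\n")

    listing = list_files(folder)
    print(listing)
```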

- 19. Downloading files from Colaboratory, method 1
  - Files saved in Colaboratory reside on Google's servers
  - To use a saved file on your own PC, you must download it
  - Run the code below to download the files
    - `from google.colab import files`
    - `files.download('save_sample.xlsx')`
    - `files.download('save_sample_utf8.csv')`
  - If you are asked for permission, click "Allow"

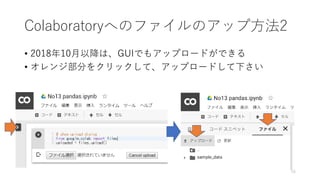

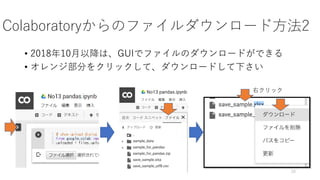
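
A file has to exist on the Colaboratory side before it can be downloaded. The sketch below writes a small UTF-8 CSV and then downloads it. The helper `write_sample_csv`, the sample rows, and the choice of the temporary directory are assumptions for illustration; only the file name `save_sample_utf8.csv` and `files.download()` come from the slide.

```python
import csv
import tempfile
from pathlib import Path


def write_sample_csv(path):
    """Write a two-row UTF-8 CSV file and return its path."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["name", "score"])  # header row (sample data)
        writer.writerow(["alice", 90])
    return Path(path)


try:
    from google.colab import files  # only available inside Colaboratory
except ImportError:
    files = None

saved = write_sample_csv(Path(tempfile.gettempdir()) / "save_sample_utf8.csv")

if files is not None:
    files.download(str(saved))  # the browser may ask for permission
else:
    print(f"Not running in Colaboratory; wrote {saved} locally instead.")
```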

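- 20. Downloading files from Colaboratory, method 2
  - Since October 2018, files can also be downloaded through the GUI
  - Click the area marked in orange and download the file (the figure indicates a right-click)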

- 21. Appendix

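- 22. References
  - Google Colabの使い方まとめ (a summary of how to use Google Colab)
    - https://qiita.com/shoji9x9/items/0ff0f6f603df18d631ab
    - Useful if you are doing machine learning
    - Even if you are not, it gives an easy-to-follow explanation of how to use your own Google Drive as file storage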
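
For reference, using Google Drive as file storage usually means mounting it with `drive.mount()` from `google.colab`. The sketch below is not taken from the slides or the linked article. The helper `mount_google_drive`, the mount point `/content/drive`, and the `MyDrive` folder name are assumptions based on common Colaboratory usage and may differ in your environment.

```python
from pathlib import Path


def mount_google_drive(mount_point="/content/drive"):
    """Mount Google Drive in Colaboratory; return the assumed My Drive path.

    Returns None when not running inside Colaboratory.
    """
    try:
        from google.colab import drive  # only available inside Colaboratory
    except ImportError:
        return None
    drive.mount(mount_point)  # asks for authorization in the browser
    return Path(mount_point) / "MyDrive"  # assumed folder name


my_drive = mount_google_drive()
if my_drive is None:
    print("Not running in Colaboratory; Google Drive was not mounted.")
else:
    # Show a few entries from the top of My Drive.
    print(sorted(p.name for p in my_drive.iterdir())[:5])
```

Files written under the mounted path remain in Google Drive after the session ends, unlike files stored only in the temporary Colaboratory environment.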