

How to Create Features for User Analysis

- 1. How to Create Features for User Analysis ("How to provide feature quantities"), the 37th TokyoWebmining

- 2. Agenda: 1. Self-introduction 2. What I want to discuss

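- 4. Self-introduction. Twitter: obnym. Real name: Kazuya Obanayama (尾花山 和哉; not a sumo ring name). Background: roughly what you find if you google "尾花山和哉" or "kazuya obanayama". Lately I have been doing PPDM (privacy-preserving data mining) and similar work on shared loyalty-point data. A quick plug: I recently developed a technique for performing recommendation and clustering without directly obtaining purchase information or personal information (patent pending). If you are interested in this area, let's exchange information!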

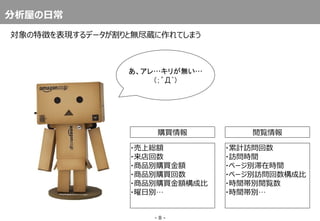

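- 9. An analyst's daily life: you can create a practically endless supply of data describing a target's characteristics. "Oh, and that one too… there's no end to it…" (;´Д`)
  - Purchase information: total sales; number of store visits; purchase amount by product; purchase count by product; share of purchase amount by product; by day of week…
  - Browsing information: cumulative visit count; visit time; time spent per page; share of visits by page; page views by time of day; by time of day…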
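Most of the purchase features listed above are simple aggregations over a transaction log. The following is a minimal sketch assuming a hypothetical log of `(user_id, visit_id, product, amount)` tuples; the record layout, the sample rows, and the function name `purchase_features` are illustrative assumptions, not taken from the talk. It produces absolute features (totals and counts) next to a ratio feature (each product's share of the user's spend).

```python
from collections import defaultdict

# Hypothetical purchase log: (user_id, visit_id, product, amount).
# Layout and values are illustrative assumptions, not data from the talk.
PURCHASES = [
    ("u1", "v1", "coffee", 300),
    ("u1", "v1", "bread", 200),
    ("u1", "v2", "coffee", 300),
    ("u2", "v3", "milk", 150),
]


def purchase_features(purchases):
    """Aggregate per-user purchase features of the kinds listed on slide 9."""
    total = defaultdict(int)            # total sales amount per user
    visits = defaultdict(set)           # distinct visit ids, for the visit count
    amount_by_product = defaultdict(lambda: defaultdict(int))
    count_by_product = defaultdict(lambda: defaultdict(int))

    for user, visit, product, amount in purchases:
        total[user] += amount
        visits[user].add(visit)
        amount_by_product[user][product] += amount
        count_by_product[user][product] += 1

    features = {}
    for user in total:
        spend = total[user]
        features[user] = {
            "total_sales": spend,
            "visit_count": len(visits[user]),
            "amount_by_product": dict(amount_by_product[user]),
            "count_by_product": dict(count_by_product[user]),
            # Ratio feature: share of the user's spend per product
            # (0.0 when the user spent nothing, to avoid dividing by zero).
            "share_by_product": {
                p: (a / spend if spend else 0.0)
                for p, a in amount_by_product[user].items()
            },
        }
    return features


if __name__ == "__main__":
    for user, feats in sorted(purchase_features(PURCHASES).items()):
        print(user, feats)
```

Browsing features such as time on page or page views by time of day follow the same pattern: group the access log by user and by the chosen key, then sum or count. A day-of-week split would additionally need a timestamp field, which this hypothetical log does not include.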

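- 14. Today's unconference topics: let's all discuss these together!
  - How to create features: ratios? Absolute values? Heuristics?
  - How to discard or consolidate features: variable selection methods? Principal components?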
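One simple answer to "how to discard features" is filter-based variable selection: drop near-constant columns, then drop any column that is highly correlated with a column already kept. The sketch below implements that heuristic in plain Python. It is not the speaker's method (the slide leaves the question open for discussion); the thresholds `min_variance` and `max_abs_corr`, the function names, and the sample columns are assumptions. Principal component analysis would be an alternative that combines correlated columns instead of dropping them.

```python
import math

# Hypothetical per-user feature columns (one value per user); illustrative only.
COLUMNS = {
    "total_sales": [800, 150, 400, 1200],
    "total_sales_k": [0.8, 0.15, 0.4, 1.2],   # same information in other units
    "coffee_share": [0.75, 0.0, 0.5, 0.2],    # ratio feature
    "has_card": [1, 1, 1, 1],                 # constant: cannot separate users
}


def variance(xs):
    """Population variance of a column."""
    m = sum(xs) / len(xs)
    return sum((x - m) ** 2 for x in xs) / len(xs)


def pearson(xs, ys):
    """Pearson correlation; returns 0.0 if either column is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / math.sqrt(vx * vy)


def select_features(columns, min_variance=1e-9, max_abs_corr=0.95):
    """Greedy filter in insertion order: skip near-constant columns, then skip
    columns highly correlated with one already kept. Thresholds are assumptions."""
    kept = []
    for name, values in columns.items():
        if variance(values) <= min_variance:
            continue  # carries (almost) no information
        if any(abs(pearson(values, columns[k])) >= max_abs_corr for k in kept):
            continue  # redundant with a feature that was already kept
        kept.append(name)
    return kept


if __name__ == "__main__":
    print(select_features(COLUMNS))
```

Here `total_sales_k` is `total_sales` in different units, so it is dropped as redundant, and the constant `has_card` is dropped because it cannot distinguish users. Because the filter is greedy, column order decides which member of a correlated pair survives.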