AttnGAN

- 1. AttnGAN: Fine-Grained Text to Image Generation with Attentional Generative Adversarial Networks. Tao Xu, Pengchuan Zhang, Qiuyuan Huang, Han Zhang, Zhe Gan, Xiaolei Huang, Xiaodong He. ~Starting from the basics of GANs~ 2018/10/29 @ 1st "NIPS 2018 (world's first!!) Theory and Implementation Discussion" meetup

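- 2. Self-introduction: Takaaki Ishii (@takaaki5564)
  - Work and interests: studied non-equilibrium statistical physics in graduate school. Currently develops computer vision applications; my job is optimizing algorithms such as deep learning and SLAM for ASICs.
  - Udemy: https://www.udemy.com/yolossd-a/learn/v4/overview
  - Hobbies: Amazon movies, manga, futsal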

- 3. Paper introduced: AttnGAN: Fine-Grained Text to Image Generation with Attentional Generative Adversarial Networks. Tao Xu, Pengchuan Zhang, Qiuyuan Huang, Han Zhang, Zhe Gan, Xiaolei Huang, Xiaodong He. CVPR 2018. This talk covers:
  - a review of the basics of GANs
  - an overview of the AttnGAN model

  (The implementation will be covered on another occasion!)

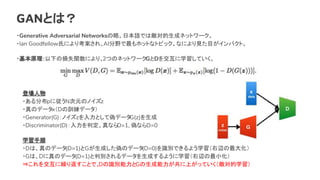

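- 4. What is a GAN?
  - GAN stands for Generative Adversarial Networks (in Japanese: 敵対的生成ネットワーク).
  - Devised by Ian Goodfellow, it is one of the hottest topics in AI. Above all, the results are visually striking.
  - Basic principle: two networks, G and D, are trained alternately against a shared minimax loss function.
  - Cast of characters:
    - An N-dimensional noise vector z drawn from some distribution p
    - Real data x (the training data for D)
    - Generator (G): takes noise z as input and produces fake data G(z)
    - Discriminator (D): judges its input, with D=1 for real and D=0 for fake
  - Training procedure:
    - D learns to distinguish real data (D=1) from fake data produced by G (D=0), i.e. it maximizes the loss function.
    - G learns to produce data that D judges to be real (D=1), i.e. it minimizes the loss function.
    - Repeating these two steps alternately improves both D's discriminative ability and G's generative ability (adversarial training).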
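
The loss function referred to on this slide is the standard GAN minimax objective from the original GAN paper. Written with the symbols used above (x, z, G, D), and with $p_{\text{data}}$ and $p_z$ as assumed names for the data and noise distributions:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
$$

D pushes the right-hand side up by driving D(x) toward 1 and D(G(z)) toward 0; G pushes it down by driving D(G(z)) toward 1.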

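- 5. What is a GAN? A few questions arise here.
  - What exactly is the noise z? → It plays the role of the latent variable at the bottleneck of an autoencoder. The latent space is something like a space of concepts: for example, one can "subtract the armrests from an image of a chair with armrests".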

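- 6. What is a GAN?
  - How does the generator G produce an image from the noise z? → It uses transposed convolution to produce data at a higher resolution than its input.
  - How are z and G(z) related? → The image generated by G can be regarded as a sample from the conditional joint probability distribution P(x|z) of the output x=(x0, x1, x2, ...) given the input z. In other words, varying z continuously makes the generated image change continuously as well.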
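
The upsampling done by transposed convolution can be sketched as follows. This is a minimal single-channel, no-padding illustration in plain Python; the function name `transposed_conv2d` and the all-ones kernel are assumptions made for the example and do not come from the slides.

```python
def transposed_conv2d(x, kernel, stride=2):
    """Naive 2D transposed convolution (single channel, no padding).

    Each input pixel "stamps" a scaled copy of the kernel onto the output,
    with stamps placed `stride` pixels apart; overlapping stamps are summed.
    """
    h_in, w_in = len(x), len(x[0])
    k_h, k_w = len(kernel), len(kernel[0])
    # Output size formula for a transposed convolution without padding.
    h_out = (h_in - 1) * stride + k_h
    w_out = (w_in - 1) * stride + k_w
    out = [[0.0] * w_out for _ in range(h_out)]
    for i in range(h_in):
        for j in range(w_in):
            for a in range(k_h):
                for b in range(k_w):
                    out[i * stride + a][j * stride + b] += x[i][j] * kernel[a][b]
    return out


# A 2x2 input becomes a 4x4 output with a 2x2 kernel and stride 2.
x = [[1.0, 2.0], [3.0, 4.0]]
kernel = [[1.0, 1.0], [1.0, 1.0]]
y = transposed_conv2d(x, kernel, stride=2)
for row in y:
    print(row)
```

In a real generator the kernel weights are learned and there are many channels; the point here is only that the output is spatially larger than the input.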

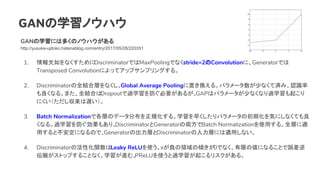

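- 8. GAN training know-how. There are many practical tricks for training GANs: http://yusuke-ujitoko.hatenablog.com/entry/2017/05/28/220351
  1. To avoid losing information, use stride-2 convolution instead of max pooling in the Discriminator, and upsample with transposed convolution in the Generator.
  2. Remove the fully connected layers from the Discriminator and replace them with Global Average Pooling (GAP). This needs fewer parameters and also improves recognition accuracy. Fully connected layers need Dropout to prevent overfitting, whereas GAP has fewer parameters and is less prone to overfitting (although convergence is slower).
  3. Use Batch Normalization to normalize the data distribution at each layer. It speeds up training, makes parameter initialization less of a concern, and also helps prevent overfitting. Use it in both the Discriminator and the Generator. Applying it to every layer makes training unstable, so do not apply it to the Generator's output layer or the Discriminator's input layer.
  4. Use Leaky ReLU as the Discriminator's activation function. Because the slope for negative x is a finite non-zero value rather than 0, backpropagation does not stall and training keeps progressing. Using PReLU carries a risk of overfitting.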
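
Items 2 and 4 above can be illustrated with a small plain-Python sketch. The function names and the negative slope of 0.2 are assumptions chosen for the example; the slides do not specify a slope value.

```python
def relu_grad(x):
    """Gradient of ReLU: exactly zero for negative inputs."""
    return 1.0 if x > 0 else 0.0


def leaky_relu(x, negative_slope=0.2):
    """Leaky ReLU: identity for positive x, small linear slope for negative x."""
    return x if x > 0 else negative_slope * x


def leaky_relu_grad(x, negative_slope=0.2):
    """Gradient of Leaky ReLU: never zero, so the error signal keeps flowing."""
    return 1.0 if x > 0 else negative_slope


def global_average_pool(feature_maps):
    """Reduce each channel (a 2D list) to its mean, giving one value per channel."""
    pooled = []
    for channel in feature_maps:
        values = [v for row in channel for v in row]
        pooled.append(sum(values) / len(values))
    return pooled


# For a negative pre-activation, ReLU blocks the gradient but Leaky ReLU does not.
print(relu_grad(-2.0), leaky_relu_grad(-2.0))

# Two 2x2 channels collapse to two numbers, with no learnable parameters involved.
print(global_average_pool([[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 8.0]]]))
```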

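- 9. GAN training know-how (continued)
  5. Apply Tanh in the last layer of the Generator, and normalize the input images to [-1, 1].
  6. In the original GAN paper the Generator's loss is min log(1 - D(G(z))), but in practice max log D(G(z)) is used. The former suffers from vanishing gradients in the early stage of training.
  7. Sample the noise z from a Gaussian rather than a uniform distribution. When interpolating, interpolate along a great circle rather than along a straight line.
  8. Each training mini-batch should contain either (a) only real training data x or (b) only generated data G(z).
  9. Use the ADAM optimizer. Use SGD for the Discriminator and ADAM for the Generator.

  And so on.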
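
Items 6 and 7 above can be checked numerically. The sketch below assumes the Discriminator output is D = sigmoid(a) for some logit a (an assumption made for illustration) and compares the gradient of both Generator losses with respect to a; it also shows a great-circle (spherical) interpolation. All function names are hypothetical.

```python
import math


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def grad_saturating(a):
    """d/da of log(1 - sigmoid(a)), the loss G minimizes in the original paper."""
    return -sigmoid(a)


def grad_non_saturating(a):
    """d/da of -log(sigmoid(a)), i.e. minimizing this maximizes log D(G(z))."""
    return sigmoid(a) - 1.0


def slerp(t, z0, z1):
    """Interpolate between z0 and z1 along the great circle through them."""
    dot = sum(a * b for a, b in zip(z0, z1))
    norm0 = math.sqrt(sum(a * a for a in z0))
    norm1 = math.sqrt(sum(b * b for b in z1))
    omega = math.acos(max(-1.0, min(1.0, dot / (norm0 * norm1))))
    if omega < 1e-8:
        # Vectors are (almost) parallel: fall back to linear interpolation.
        return [(1.0 - t) * a + t * b for a, b in zip(z0, z1)]
    s = math.sin(omega)
    w0 = math.sin((1.0 - t) * omega) / s
    w1 = math.sin(t * omega) / s
    return [w0 * a + w1 * b for a, b in zip(z0, z1)]


# Early in training D confidently rejects fakes, so the logit is very negative.
early_logit = -6.0
print(abs(grad_saturating(early_logit)), abs(grad_non_saturating(early_logit)))

# The spherical midpoint keeps unit norm; the linear midpoint would shrink.
mid = slerp(0.5, [1.0, 0.0], [0.0, 1.0])
print(math.sqrt(sum(v * v for v in mid)))
```

With a very negative logit the first gradient is close to zero while the second is close to one in magnitude, which is the vanishing-gradient issue the slide refers to.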

- 11. GANs that generate images from text
  - GAN-INT-CLS: Generative Adversarial Text to Image Synthesis, ICML 2016. The first use of GANs for generating images from text. Succeeded in generating 64x64 images.
  - GAWWN: Learning What and Where to Draw, NIPS 2016. Made it possible to specify which object goes where using bounding boxes.
  - StackGAN: Text to Photo-realistic Image Synthesis with Stacked Generative Adversarial Networks, ICCV 2017, H. Zhang, et al. Two-stage training produces an impressively high resolution of 256x256. Blurry images become sharp in the second stage.
  - TAC-GAN: Text Conditioned Auxiliary Classifier Generative Adversarial Network, arXiv 2017. Uses an auxiliary classifier to assist training. Can generate diverse types of images from the same text.
  - StackGAN++: Realistic Image Synthesis with Stacked Generative Adversarial Networks, arXiv 2017, H. Zhang, et al. Also called StackGAN-v2; uses a tree-like network.
  - AttnGAN: Fine-Grained Text to Image Generation with Attentional Generative Adversarial Networks, CVPR 2018, Tao Xu, et al. An attention-driven approach that makes it possible to generate fine details.

  After AttnGAN, papers on end-to-end training have appeared:
  - FusedGAN: Semi-supervised FusedGAN for Conditional Image Generation, arXiv 2018. Fuses two-stage training into a single stage so that it can be trained end-to-end.
  - HDGAN: Photographic Text-to-Image Synthesis with a Hierarchically-nested Adversarial Network, arXiv 2018. Trains high-resolution image generation end-to-end with a hierarchically-nested network structure.

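- 12. Conventional methods for high-resolution image generation
  - LAPGAN (Laplacian Pyramid of Generative Adversarial Networks): Deep Generative Image Models using a Laplacian Pyramid of Adversarial Networks, NIPS (2015). Learns the difference between low-resolution and high-resolution images and generates a high-resolution image from a low-resolution one. A Generator is trained for each resolution, and the image resolution is increased step by step.
  - AlignDRAW (Align Deep Recurrent Attention Writer): Generating Images from Captions with Attention, ICLR (2016). In a VAE (Variational Auto Encoder), the encoder outputs the mean and covariance matrix of a normal distribution, and the model is trained with a stochastic variational inference algorithm that reconstructs the data from the latent variables. AlignDRAW extends the VAE by adding the Deep Recurrent Attention Writer (DRAW), which incorporates an attention mechanism recurrently. AlignDRAW generates an image patch for each word in the text and draws the image iteratively.
  - However, the images generated by these models lack word-level information.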
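
The "difference between low and high resolution" that LAPGAN works with can be sketched as one level of a Laplacian pyramid. This is a simplified plain-Python illustration: it assumes 2x2 average pooling for downsampling and nearest-neighbor upsampling, and it computes the residual directly from a known image, whereas in LAPGAN a Generator at each level would produce that residual. All function names are hypothetical.

```python
def downsample(img):
    """Halve the resolution by averaging each 2x2 block."""
    h, w = len(img), len(img[0])
    return [
        [
            (img[2 * i][2 * j] + img[2 * i][2 * j + 1]
             + img[2 * i + 1][2 * j] + img[2 * i + 1][2 * j + 1]) / 4.0
            for j in range(w // 2)
        ]
        for i in range(h // 2)
    ]


def upsample(img):
    """Double the resolution by repeating each pixel in a 2x2 block."""
    out = []
    for row in img:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def laplacian_residual(img):
    """High-frequency detail: the image minus its blurred (down then up) version."""
    coarse = upsample(downsample(img))
    return [[p - c for p, c in zip(r_img, r_coarse)]
            for r_img, r_coarse in zip(img, coarse)]


def reconstruct(low_res, residual):
    """Upsample the low-resolution image and add the detail back."""
    coarse = upsample(low_res)
    return [[c + d for c, d in zip(r_coarse, r_res)]
            for r_coarse, r_res in zip(coarse, residual)]


image = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
low = downsample(image)               # 2x2 low-resolution image
detail = laplacian_residual(image)    # what a per-level Generator would have to produce
restored = reconstruct(low, detail)   # 4x4 image recovered from low + detail
print(low)
print(restored == image)
```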

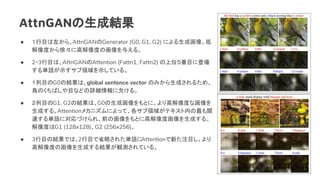

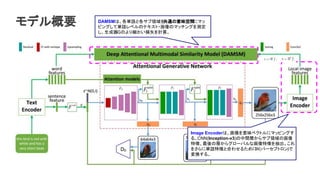

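- 13. AttnGAN generation results
  - Row 1 shows, from left to right, the images generated by the AttnGAN Generators (G0, G1, G2), going from low resolution to progressively higher resolution.
  - Rows 2 and 3 show the sub-regions indicated by the top 5 most attended words in the AttnGAN attention models (Fattn1, Fattn2).
  - Column 1 (G0) is generated from the global sentence vector alone, so it lacks details such as the bird's beak and eyes.
  - Columns 2 and 3 (G1, G2) generate higher-resolution images based on the image from G0. Through the attention mechanism, each sub-region is associated with the most relevant words in the text, and a higher-resolution image is generated from the previous image. The resolutions are 128x128 for G1 and 256x256 for G2.
  - In row 3, attention newly focuses on words that were left out in row 2, and a higher-resolution image is generated as a result.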
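
The association between an image sub-region and its most relevant words can be sketched as dot-product attention. This is a minimal plain-Python illustration, not the paper's implementation: it assumes word features and region features already live in the same space, and the toy vectors and function names are made up for the example.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def word_context(region_feature, word_features):
    """Attend over words for one image sub-region.

    Returns the attention weights (one per word) and the word-context
    vector, i.e. the attention-weighted sum of the word features.
    """
    scores = [sum(r * w for r, w in zip(region_feature, word))
              for word in word_features]
    weights = softmax(scores)
    dim = len(word_features[0])
    context = [sum(weights[i] * word_features[i][d]
                   for i in range(len(word_features)))
               for d in range(dim)]
    return weights, context


# Toy example: three "words" and one sub-region that resembles the first word.
words = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
region = [4.0, 0.0]
weights, context = word_context(region, words)
print(weights)   # the largest weight falls on the first word
print(context)   # the context vector is pulled toward the first word's feature
```

Repeating this for every sub-region gives one context vector per region, which is what lets a later Generator stage refine each region according to the words most relevant to it.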

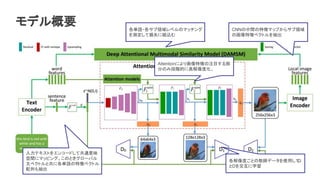

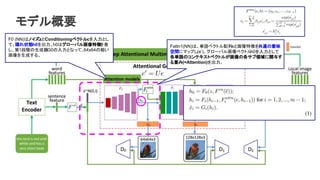

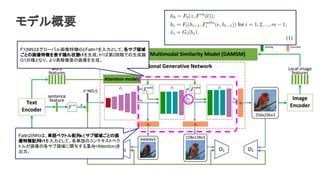

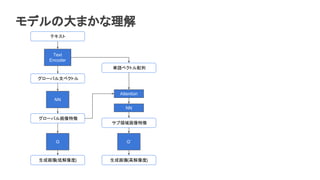

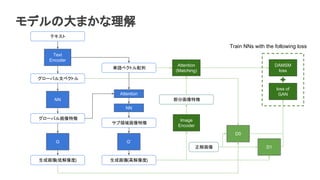

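- 23. Rough understanding of the model. [Diagram; recoverable labels: Text, Text Encoder, global sentence vector, word vector sequence, G' (NN), generated image (low resolution), Attention, G (NN), generated image (high resolution), real image, D0, D1, Image Encoder, sub-region image features, global image features, Attention (Matching), DAMSM loss, loss of GAN.] The networks are trained with the GAN loss together with the DAMSM loss.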
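
For reference, the way the two losses named on this slide are combined can be written as below. This follows the notation of the AttnGAN paper rather than the slide itself: the symbols $\lambda$ (a weighting hyperparameter), $m$ (the number of Generators) and $\mathcal{L}_{G_i}$ (the adversarial loss of the i-th Generator) are assumptions brought in from the paper.

$$
\mathcal{L} = \mathcal{L}_G + \lambda \, \mathcal{L}_{\text{DAMSM}}, \qquad \mathcal{L}_G = \sum_{i=0}^{m-1} \mathcal{L}_{G_i}
$$

Here $\mathcal{L}_G$ is the GAN part summed over the Generators (G0, G1, G2 in the results shown earlier), and $\mathcal{L}_{\text{DAMSM}}$ is the text-image matching term.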

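- 29. Summary of AttnGAN
  1. Introduced an attention mechanism into GANs for synthesizing images from text.
  2. Proposed DAMSM as a fine-grained way to evaluate how well text and image match.
  3. Visualized the attention layers produced by AttnGAN, showing how the GAN generates sub-regions of the image step by step based on the relevant words.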
