Big Data - Hadoop - MapReduce


1) The document analyzes the performance of MapReduce tasks in Hadoop for big data workloads. It measures how the number of bytes read and written by map and reduce tasks changes as the number of input files increases.
2) Hadoop stores data in HDFS and uses MapReduce to process large datasets across a cluster. The experiment runs the word count application on a growing number of input files to observe how the tasks behave.
3) The results show that the number of bytes written does not grow at the same rate as the number of files. The reduce function only aggregates the map output, so it adds little to the output size.
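The word count experiment summarized above can be sketched as a small in-memory simulation. This is a hypothetical illustration, not the authors' code or Hadoop's API. It assumes each input "file" is a Python string and that there is no combiner. The byte figures come from a made-up `record_bytes` helper that sizes `key<TAB>value` lines, not from Hadoop's real job counters. The sketch shows why reduce output can grow much more slowly than map output when the vocabulary stays fixed.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(doc: str) -> Iterator[tuple[str, int]]:
    """Emit (word, 1) for each whitespace-separated token, as a WordCount mapper does."""
    for word in doc.split():
        yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Group intermediate values by key (the framework's shuffle step)."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups: dict[str, list[int]]) -> dict[str, int]:
    """Sum the counts for each word, as a WordCount reducer does."""
    return {word: sum(counts) for word, counts in groups.items()}


def record_bytes(pairs: Iterable[tuple[str, int]]) -> int:
    """Hypothetical byte accounting: UTF-8 size of 'key<TAB>value<NEWLINE>' lines.

    This is only a rough stand-in for text output. It is NOT how Hadoop's
    FILE_BYTES_WRITTEN / HDFS_BYTES_WRITTEN counters are computed.
    """
    return sum(len(f"{key}\t{value}\n".encode("utf-8")) for key, value in pairs)


def run_wordcount(files: list[str]) -> tuple[dict[str, int], dict[str, int]]:
    """Run the toy job over in-memory 'files' and return (word counts, byte stats)."""
    map_output = [pair for doc in files for pair in map_phase(doc)]
    counts = reduce_phase(shuffle(map_output))
    stats = {
        "input_bytes": sum(len(doc.encode("utf-8")) for doc in files),
        "map_output_bytes": record_bytes(map_output),
        "reduce_output_bytes": record_bytes(counts.items()),
    }
    return counts, stats


if __name__ == "__main__":
    sample = "the quick brown fox jumps over the lazy dog"
    # Feed the same sentence as 1, 10 and 100 separate input "files".
    for n_files in (1, 10, 100):
        _, stats = run_wordcount([sample] * n_files)
        print(n_files, stats)
```

With one copy of the sample sentence, the sketch reports 62 map-output bytes and 56 reduce-output bytes. With ten copies, map output grows tenfold to 620 bytes, while reduce output only reaches 64 bytes. The eight distinct words stay the same, and only the count digits get longer. Real input files with a growing vocabulary would make the reduce output grow faster than in this toy case.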


GMR Institute of Technology, Rajam
Department of Mechanical Engineering
Humility, Entrepreneurship, Teamwork, Learning, Social Responsibility, Respect for Individual, Deliver The Promise

REFERENCES

[1] Shankar Ganesh Manikandan, Siddarth Ravi, "Big Data Analysis Using Apache Hadoop", IEEE, 2014.

[2] Ankita Saldhi, Abhinav Goel, "Big Data Analysis Using Hadoop Cluster", IEEE, 2014.

[3] Amrit Pal, Pinki Agrawal, Kunal Jain, et al., "A Performance Analysis of MapReduce Task with Large Number of Files Dataset in Big Data Using Hadoop", 2014 Fourth International Conference on Communication Systems and Network Technologies.

[4] Aditya B. Patel, Manashvi Birla, Ushma Nair, "Big Data Problem Using Hadoop and Map Reduce", Nirma University International Conference on Engineering (NUiCONE), 2012.

November 13, 2016



Ã˝

Raghu Golla has experience as a Software Development Engineer at Shipler Technology developing projects using Django, Python, HTML, CSS, and JQuery. His projects include an automatic billing system, vendor ratings tracking module, and integrating a new website. He has a M.Tech from NIT Karnataka and B.Tech from JNTU Hyderabad. His areas of research include data aggregation in wireless sensor networks, load balancing algorithms, and SQL injection detection. He has published papers on load balancing and has one accepted to IEEE ICACNI 2016.Influence of Hadoop in Big Data Analysis and Its Aspects

Influence of Hadoop in Big Data Analysis and Its Aspects IJMER

Ã˝

This paper is an effort to present the basic understanding of BIG DATA and

HADOOP and its usefulness to an organization from the performance perspective. Along-with the

introduction of BIG DATA, the important parameters and attributes that make this emerging concept

attractive to organizations has been highlighted. The paper also evaluates the difference in the

challenges faced by a small organization as compared to a medium or large scale operation and

therefore the differences in their approach and treatment of BIG DATA. As Hadoop is a Substantial

scale, open source programming system committed to adaptable, disseminated, information

concentrated processing. A number of application examples of implementation of BIG DATA across

industries varying in strategy, product and processes have been presented. This paper also deals

with the technology aspects of BIG DATA for its implementation in organizations. Since HADOOP has

emerged as a popular tool for BIG DATA implementation. Map reduce is a programming structure for

effectively composing requisitions which prepare boundless measures of information (multi-terabyte

information sets) in- parallel on extensive bunches of merchandise fittings in a dependable,

shortcoming tolerant way. A Map reduce skeleton comprises of two parts. They are “mapper" and

"reducer" which have been examined in this paper. The paper deals with the overall architecture of

HADOOP along with the details of its various components in Big Data.big data and hadoop

big data and hadoopShamama Kamal

Ã˝

This document presents information on Big Data and its association with Hadoop. It discusses what Big Data is, defining it as too large and complex for traditional databases. It also covers the 3 V's of Big Data: volume, variety, and velocity. The document then introduces Hadoop as a tool for Big Data analytics, describing what Hadoop is and its key components and features like being scalable, reliable, and economical. MapReduce is discussed as Hadoop's programming model using mappers and reducers. Finally, the document concludes that Hadoop enables distributed, parallel processing of large data across inexpensive servers.Sustainable Software for a Digital Society

Sustainable Software for a Digital SocietyPatricia Lago

Ã˝

Software is being developed since decades without taking sustainability into consideration. This holds for its energy efficiency, that is the amount of energy software consumes while ensuring other system qualities like security, performance, reliability, etc. etc. Software un-sustainability, however, is becoming increasingly evident with the growing digitalization of our society, and its dependence on software. Finally IT specialists are becoming aware that software solutions can, and should, be designed with sustainability concerns in mind. In doing so, they can create solutions that are technically more stable (hence requiring less modifications over time), target societal goals with a higher certainty, or help sustaining the business goals of both developing and consuming organizations. Everything sounds great. The real question is: how? How can we redirect software engineering practices toward sustainable software solutions? And how can we turn sustainability into a business so that companies will finally invest in it? This talk explores results and challenges in engineering software for a more sustainable digital society.PriyankaDighe_Resume_new

PriyankaDighe_Resume_newPriyanka Dighe

Ã˝

Priyanka Dighe received her M.S. in Computer Science and Engineering from UC San Diego in 2017 and B.E. in Computer Science from BITS Pilani in 2013. She has work experience as a Software Engineer at Microsoft and Bloomreach, developing applications for Word and implementing alerting services. She completed internships at HP Labs and Bloomreach focusing on predictive analytics using social media and implementing alerting pipelines. Her skills include programming in Java, C, Python, and technologies like Spark, Hadoop, and Play Framework.Big data with hadoop

Big data with hadoopAnusha sweety

Ã˝

Most common technology which is used to store meta data and large databases.we can find numerous applications in the real world.It is the very useful for creating new database oriented appsPerformance Improvement of Heterogeneous Hadoop Cluster using Ranking Algorithm

Performance Improvement of Heterogeneous Hadoop Cluster using Ranking AlgorithmIRJET Journal

Ã˝

This document proposes using a ranking algorithm and sampling algorithm to improve the performance of a heterogeneous Hadoop cluster. The ranking algorithm prioritizes data distribution based on node frequency, so that higher frequency nodes are processed first. The sampling algorithm randomly selects nodes for data distribution instead of evenly distributing across all nodes. The proposed approach reduces computation time and improves overall cluster performance compared to the existing approach of evenly distributing data across nodes of varying sizes. Results show the proposed approach reduces execution time for various file sizes compared to the existing approach.ongc report

ongc reportPrachi Chauhan

Ã˝

This document provides a summary of a project report on big data Twitter data retrieval and text mining. The project involved creating a Twitter application, installing and loading R packages for Twitter API access and text analysis, authenticating with Twitter via OAuth, extracting text from Twitter timelines, transforming and analyzing the text through techniques like stemming words and finding frequent terms and word associations, and showcasing results with a word cloud. The project was completed as part of a summer training program at the GEOPIC center of ONGC in India under the guidance of a chief manager.LARGE-SCALE DATA PROCESSING USING MAPREDUCE IN CLOUD COMPUTING ENVIRONMENT

LARGE-SCALE DATA PROCESSING USING MAPREDUCE IN CLOUD COMPUTING ENVIRONMENTijwscjournal

Ã˝

The computer industry is being challenged to develop methods and techniques for affordable data processing on large datasets at optimum response times. The technical challenges in dealing with the increasing demand to handle vast quantities of data is daunting and on the rise. One of the recent processing models with a more efficient and intuitive solution to rapidly process large amount of data in parallel is called MapReduce. It is a framework defining a template approach of programming to perform large-scale data computation on clusters of machines in a cloud computing environment. MapReduce provides automatic parallelization and distribution of computation based on several processors. It hides the complexity of writing parallel and distributed programming code. This paper provides a comprehensive systematic review and analysis of large-scale dataset processing and dataset handling challenges and

requirements in a cloud computing environment by using the MapReduce framework and its open-source implementation Hadoop. We defined requirements for MapReduce systems to perform large-scale data processing. We also proposed the MapReduce framework and one implementation of this framework on Amazon Web Services. At the end of the paper, we presented an experimentation of running MapReduce

system in a cloud environment. This paper outlines one of the best techniques to process large datasets is MapReduce; it also can help developers to do parallel and distributed computation in a cloud environment. Optimizing Bigdata Processing by using Hybrid Hierarchically Distributed Data...

Optimizing Bigdata Processing by using Hybrid Hierarchically Distributed Data...IJCSIS Research Publications

Ã˝

Recently uploaded (20)

ARCH 2025: New Mexico Respite Provider Registry

ARCH 2025: New Mexico Respite Provider RegistryAllen Shaw

Ã˝

Demonstration of the New Mexico Respite Provider Registry, presented at the 2025 National Lifespan Respite Conference in Huntsville, AlabamaThe rise of AI Agents - Beyond Automation_ The Rise of AI Agents in Service ...

The rise of AI Agents - Beyond Automation_ The Rise of AI Agents in Service ...Yasen Lilov

Ã˝

Deep dive into how agency service-based business can leverage AI and AI Agents for automation and scale. Case Study example with platforms used outlined in the slides.Hadoop-and-R-Programming-Powering-Big-Data-Analytics.pptx

Hadoop-and-R-Programming-Powering-Big-Data-Analytics.pptxMdTahammulNoor

Ã˝

Hadoop and its uses in data analyticsExploratory data analysis (EDA) is used by data scientists to analyze and inv...

Exploratory data analysis (EDA) is used by data scientists to analyze and inv...jimmy841199

Ã˝

EDA review" can refer to several things, including the European Defence Agency (EDA), Electronic Design Automation (EDA), Exploratory Data Analysis (EDA), or Electron Donor-Acceptor (EDA) photochemistry, and requires context to understand the specific meaning. Presentation_DM_applications for another services

Presentation_DM_applications for another servicesaldowilmeryapita

Ã˝

almacenamiento del petroleo y lecytura de amalsisis Drillingis_optimizedusingartificialneural.pptx

Drillingis_optimizedusingartificialneural.pptxsinghsanjays2107

Ã˝

Drilling optimization using real time dataLITERATURE-MODEL.pptxddddddddddddddddddddddddddddddddd

LITERATURE-MODEL.pptxdddddddddddddddddddddddddddddddddMaimai708843

Ã˝

√πªÂ≥Û¥⁄≥‹¥«≤ı≥Û≤µ¥«≥‹≥Û≤µ¥«≥Û≤ıæ±¥«≤µ≤ıMastering Data Science with Tutort Academy

Mastering Data Science with Tutort Academyyashikanigam1

Ã˝

## **Mastering Data Science with Tutort Academy: Your Ultimate Guide**

### **Introduction**

Data Science is transforming industries by enabling data-driven decision-making. Mastering this field requires a structured learning path, practical exposure, and expert guidance. Tutort Academy provides a comprehensive platform for professionals looking to build expertise in Data Science.

---

## **Why Choose Data Science as a Career?**

- **High Demand:** Companies worldwide are seeking skilled Data Scientists.

- **Lucrative Salaries:** Competitive pay scales make this field highly attractive.

- **Diverse Applications:** Used in finance, healthcare, e-commerce, and more.

- **Innovation-Driven:** Constant advancements make it an exciting domain.

---

## **How Tutort Academy Helps You Master Data Science**

### **1. Comprehensive Curriculum**

Tutort Academy offers a structured syllabus covering:

- **Python & R for Data Science**

- **Machine Learning & Deep Learning**

- **Big Data Technologies**

- **Natural Language Processing (NLP)**

- **Data Visualization & Business Intelligence**

- **Cloud Computing for Data Science**

### **2. Hands-on Learning Approach**

- **Real-World Projects:** Work on datasets from different domains.

- **Live Coding Sessions:** Learn by implementing concepts in real-time.

- **Industry Case Studies:** Understand how top companies use Data Science.

### **3. Mentorship from Experts**

- **Guidance from Industry Leaders**

- **Career Coaching & Resume Building**

- **Mock Interviews & Job Assistance**

### **4. Flexible Learning for Professionals**

- **Best DSA Course Online:** Strengthen your problem-solving skills.

- **System Design Course Online:** Master scalable system architectures.

- **Live Courses for Professionals:** Balance learning with a full-time job.

---

## **Key Topics Covered in Tutort Academy’s Data Science Program**

### **1. Programming for Data Science**

- Python, SQL, and R

- Data Structures & Algorithms (DSA)

- System Design & Optimization

### **2. Data Wrangling & Analysis**

- Handling Missing Data

- Data Cleaning Techniques

- Feature Engineering

### **3. Statistics & Probability**

- Descriptive & Inferential Statistics

- Hypothesis Testing

- Probability Distributions

### **4. Machine Learning & AI**

- Supervised & Unsupervised Learning

- Model Evaluation & Optimization

- Deep Learning with TensorFlow & PyTorch

### **5. Big Data & Cloud Technologies**

- Hadoop, Spark, and AWS for Data Science

- Data Pipelines & ETL Processes

### **6. Data Visualization & Storytelling**

- Tools like Tableau, Power BI, and Matplotlib

- Creating Impactful Business Reports

### **7. Business Intelligence & Decision Making**

- How data drives strategic business choices

- Case Studies from Leading Organizations

---

## **Mastering Data Science: A Step-by-Step Plan**

### **Step 1: Learn the Fundamentals**

Start with **Python for Data Science, Statistics, and Linear Algebra.** Understanding these basics is crucial for advanced tMeasureCamp Belgrade 2025 - Yasen Lilov - Past - Present - Prompt

MeasureCamp Belgrade 2025 - Yasen Lilov - Past - Present - PromptYasen Lilov

Ã˝

My point of view of how digital analytics is evolving in the age of AI with a reflection on the last 10+ years.

Introduction to Microsoft Power BI is a business analytics service

Introduction to Microsoft Power BI is a business analytics serviceKongu Engineering College, Perundurai, Erode

Ã˝

Microsoft Power BI is a business analytics service that allows users to visualize data and share insights across an organization, or embed them in apps or websites, offering a consolidated view of data from both on-premises and cloud sourcesCapital market of Nigeria and its economic values

Capital market of Nigeria and its economic valuesezehnelson104

Ã˝

Shows detailed Explanation of the Nigerian capital market and how it affects the country's vast economyConstruction Management full notes (15CV61).pdf

Construction Management full notes (15CV61).pdfAjaharuddin1

Ã˝

Manage of construction which reflects the cost management High-Paying Data Analytics Opportunities in Jaipur and Boost Your Career.pdf

High-Paying Data Analytics Opportunities in Jaipur and Boost Your Career.pdfvinay salarite

Ã˝

Jaipur offers high-paying data analytics opportunities with a booming tech industry and a growing need for skilled professionals. With competitive salaries and career growth potential, the city is ideal for aspiring data analysts. Platforms like Salarite make it easy to discover and apply for these lucrative roles, helping you boost your career.Introduction to Microsoft Power BI is a business analytics service

Introduction to Microsoft Power BI is a business analytics serviceKongu Engineering College, Perundurai, Erode

Ã˝

Big data- hadoop -MapReduce

- 1. Department of Mechanical Engineering. Humility, Entrepreneurship, Teamwork, Learning, Social Responsibility, Respect for Individual, Deliver The Promise. GMR Institute of Technology, Rajam. Term Paper Final Review. GMR Institute of Technology, an autonomous institute affiliated to JNTUK, Kakinada. Department of Computer Science Engineering.

- 2. Title: Performance Analysis of MapReduce Task in Big Data Using Hadoop, by M. S. V. S. K. Avadhani (14341A05A4), under the guidance and supervision of Mrs. K. Jayasri, Assistant Professor, Department of Computer Science Engineering. November 13, 2016.

- 3. ABSTRACT • Big Data is an amount of data so large that traditional data management systems cannot manage it. • Data can take three forms: structured, unstructured, and semi-structured. Most big data is unstructured, and unstructured data is difficult to handle. • Hadoop is a technological answer to Big Data: the Apache Hadoop project provides tools and techniques for handling such huge amounts of data, namely the Hadoop Distributed File System (HDFS) for storage and MapReduce for processing. • This paper discusses work done on Hadoop by giving a number of files as input to the system and then analysing Hadoop's performance.

- 4. ABSTRACT (contd.) It also discusses how the map and reduce methods behave as the number of input files increases, and how many bytes these tasks write and read. Keywords: Big data, Hadoop, HDFS, MapReduce.

- 5.

- 6. Hadoop • Hadoop is an open-source framework for storing and processing big data in a distributed environment across clusters of commodity hardware. • Storage: HDFS (Hadoop Distributed File System) • Processing: MapReduce

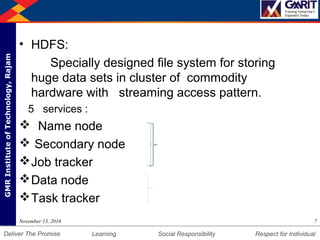

- 7. • HDFS: a file system specially designed for storing huge data sets on clusters of commodity hardware, with a streaming access pattern. • A Hadoop 1.x cluster runs five services (daemons): NameNode, Secondary NameNode, and DataNode belong to HDFS; JobTracker and TaskTracker belong to MapReduce.

- 8.

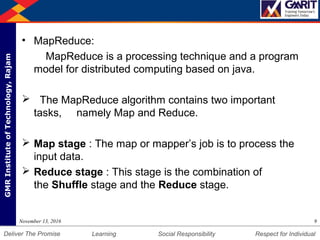
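The HDFS slides describe storage only in prose. The sketch below is a toy, in-memory model of what the NameNode keeps track of when a file is written: the file is cut into fixed-size blocks and each block is assigned to several DataNodes. It assumes the Hadoop 2.x defaults of a 128 MB block size (`dfs.blocksize`) and a replication factor of 3 (`dfs.replication`), and it uses simple round-robin placement rather than HDFS's real rack-aware placement policy. `ToyNameNode` and `add_file` are hypothetical names, not Hadoop APIs.

```python
BLOCK_SIZE = 128 * 1024 * 1024  # bytes; assumed Hadoop 2.x default for dfs.blocksize
REPLICATION = 3                 # assumed Hadoop default for dfs.replication


class ToyNameNode:
    """Hypothetical stand-in for the NameNode: it stores metadata only
    (which DataNodes hold which block), never the file contents."""

    def __init__(self, datanodes):
        self.datanodes = list(datanodes)
        self.block_map = {}  # path -> [(block_id, length, [datanode, ...])]
        self._cursor = 0     # round-robin position over the DataNodes

    def add_file(self, path, size):
        """Split `size` bytes into fixed-size blocks and choose replica nodes."""
        replicas = min(REPLICATION, len(self.datanodes))
        blocks = []
        for block_id, offset in enumerate(range(0, size, BLOCK_SIZE)):
            length = min(BLOCK_SIZE, size - offset)  # the last block may be short
            # Round-robin placement (simplification, not HDFS's rack-aware policy).
            nodes = [self.datanodes[(self._cursor + i) % len(self.datanodes)]
                     for i in range(replicas)]
            self._cursor = (self._cursor + 1) % len(self.datanodes)
            blocks.append((block_id, length, nodes))
        self.block_map[path] = blocks
        return blocks
```

For example, `ToyNameNode(["dn1", "dn2", "dn3", "dn4"]).add_file("/data/input.txt", 300 * 1024 * 1024)` yields three blocks (128 MB, 128 MB and 44 MB), each stored on three different DataNodes, so losing any single DataNode leaves every block readable.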

- 9. • MapReduce: MapReduce is a processing technique and programming model for distributed computing; Hadoop's implementation of it is written in Java. • The MapReduce algorithm contains two important tasks, namely Map and Reduce. • Map stage: the mapper processes the input data and emits intermediate key-value pairs. • Reduce stage: this stage combines the Shuffle stage and the Reduce stage; the reducer processes the mapper's output and produces the final result.

- 10.

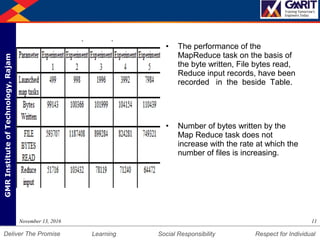
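The Map, Shuffle and Reduce stages can be illustrated with the word count application used in this study. The following is a single-process Python sketch, not Hadoop's Java `Mapper`/`Reducer` API: the function names are hypothetical, words are split on whitespace and lower-cased (an assumption), and the shuffle is simulated by grouping pairs in a dictionary sorted by key.

```python
from collections import defaultdict


def map_phase(line):
    """Mapper: emit (word, 1) for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle_phase(pairs):
    """Shuffle/sort: group all intermediate values by key, keys in sorted order."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))


def reduce_phase(key, values):
    """Reducer: sum the counts collected for one word."""
    return key, sum(values)


def word_count(lines):
    """Run map -> shuffle -> reduce over an iterable of text lines."""
    intermediate = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle_phase(intermediate)
    return dict(reduce_phase(key, values) for key, values in grouped.items())
```

For instance, `word_count(["how are you", "how is hadoop"])` returns `{"are": 1, "hadoop": 1, "how": 2, "is": 1, "you": 1}`. In a real cluster each stage runs on many machines in parallel, and the shuffle moves data over the network between them.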

- 11. • The performance of the MapReduce task, measured by bytes written, file bytes read, and reduce input records, is recorded in the adjacent table. • The number of bytes written by the MapReduce task does not increase at the same rate as the number of files.

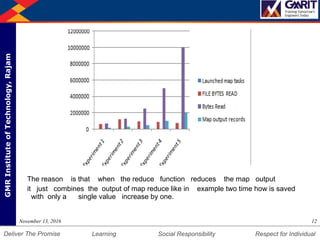
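To see why bytes written can grow much more slowly than the number of input files, the sketch below computes rough analogues of the measures in the table (bytes read, reduce input records, bytes written) for a word count over synthetic input. The input is hypothetical and deliberately extreme: every file repeats the same six-word line, so the set of distinct words never grows. It assumes no combiner and "word<TAB>count" output lines, as produced by Hadoop's default TextOutputFormat; these numbers are not Hadoop's actual counters, and real files that introduce new words would make the output grow faster than shown here.

```python
from collections import Counter


def job_stats(files):
    """Approximate (bytes read, reduce input records, bytes written) for a
    word count over `files`, a list of strings (one string per file)."""
    counts = Counter()
    reduce_input_records = 0
    for text in files:
        words = text.split()
        reduce_input_records += len(words)  # one (word, 1) pair per word, no combiner
        counts.update(words)
    bytes_read = sum(len(text.encode("utf-8")) for text in files)
    # One "word\tcount\n" output line per distinct word.
    bytes_written = sum(len(f"{w}\t{c}\n".encode("utf-8")) for w, c in counts.items())
    return bytes_read, reduce_input_records, bytes_written


# Hypothetical input: every file contains the same line.
sample = "how are you how is hadoop\n"
for n in (1, 10, 100):
    print(n, job_stats([sample] * n))
# 1 (26, 6, 32)
# 10 (260, 60, 37)
# 100 (2600, 600, 42)
```

Bytes read and reduce input records grow linearly with the number of files, while bytes written grows only by the extra digits in each count, because every distinct word is still written exactly once.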

- 12. The reason is that the reduce function merges the map output by key: in the example, the word "how", which occurs twice, is saved only once, and its count is simply incremented by one.
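The "how" example on slide 12 can be traced in a few lines. This sketch uses a hypothetical input line in which "how" occurs twice, and `map_then_reduce` is a hypothetical helper, not part of Hadoop: the map output holds one record per word occurrence, while the reduce output holds one record per distinct word, with the repeated word's count incremented instead of a second record being written.

```python
def map_then_reduce(line):
    """Return (map output pairs, reduce output dict) for one line of text."""
    map_output = [(word, 1) for word in line.split()]  # one pair per occurrence
    reduce_output = {}
    for word, one in map_output:
        # A word seen before is not stored again; only its count grows.
        reduce_output[word] = reduce_output.get(word, 0) + one
    return map_output, reduce_output


pairs, totals = map_then_reduce("how are you how")
print(len(pairs), pairs)    # 4 [('how', 1), ('are', 1), ('you', 1), ('how', 1)]
print(len(totals), totals)  # 3 {'how': 2, 'are': 1, 'you': 1}
```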

- 13. Conclusion: We analyzed the performance of the MapReduce task as the number of input files increases, using the MapReduce word count application. The results show that the number of bytes written does not increase in proportion to the number of files.

- 14. REFERENCES • [1] Shankar Ganesh Manikandan, Siddarth Ravi, “Big Data Analysis Using Apache Hadoop”, IEEE, 2014. • [2] Ankita Saldhi, Abhinav Goel, “Big Data Analysis Using Hadoop Cluster”, IEEE, 2014. • [3] Amrit Pal, Pinki Agrawal, Kunal Jain, et al., “A Performance Analysis of MapReduce Task with Large Number of Files Dataset in Big Data Using Hadoop”, 2014 Fourth International Conference on Communication Systems and Network Technologies. • [4] Aditya B. Patel, Manashvi Birla, Ushma Nair, “Big Data Problem Using Hadoop and Map Reduce”, Nirma University International Conference on Engineering (NUiCONE), 2012.

- 15. Thank you. (Avadhani M.K.)