Data Modeling: Entity-Relationship (E-R) Models, UML (Unified Modeling Language).pptx


Entity-Relationship (E-R) Models UML (unified modeling language)


![Introduction](https://image.slidesharecdn.com/datamodeling-241227103608-a82bea5e/85/data-modelingEntity-Relationship-E-R-Models-UML-unified-modeling-language-pptx-2-320.jpg)

- Process of creating a data model for an information system by applying formal data modeling techniques.
- Process used to define and analyze data requirements needed to support the business processes.
- Therefore, the process of data modeling involves professional data modelers working closely with business stakeholders, as well as potential users of the information system [1].

![What is a Data Model](https://image.slidesharecdn.com/datamodeling-241227103608-a82bea5e/85/data-modelingEntity-Relationship-E-R-Models-UML-unified-modeling-language-pptx-4-320.jpg)

- A data model provides a way to describe the design of a database at the physical, logical, and view levels.
- Three different types of data models are produced while progressing from requirements to the actual database to be used for the information system [2].
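The difference between the logical level and the view level can be sketched with Python's built-in `sqlite3` module. This is only an illustration under stated assumptions: the `employee` table, its columns, the sample rows, and the `employee_directory` view are all hypothetical and do not come from the slides. The physical level (how SQLite lays rows out on disk) is handled by the engine and is not shown.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with one base table and one view."""
    conn = sqlite3.connect(":memory:")
    # Logical level: the full employee relation, including a sensitive column.
    # (Hypothetical schema, chosen only for illustration.)
    conn.execute(
        "CREATE TABLE employee ("
        " emp_id INTEGER PRIMARY KEY,"
        " name TEXT NOT NULL,"
        " salary REAL NOT NULL)"
    )
    conn.executemany(
        "INSERT INTO employee (emp_id, name, salary) VALUES (?, ?, ?)",
        [(1, "Asha", 5200.0), (2, "Ben", 4100.0)],
    )
    # View level: a directory that exposes only the non-sensitive columns.
    conn.execute(
        "CREATE VIEW employee_directory AS SELECT emp_id, name FROM employee"
    )
    return conn


def column_names(conn: sqlite3.Connection, relation: str) -> list[str]:
    """Return the column names a user sees when querying a table or view."""
    cursor = conn.execute(f"SELECT * FROM {relation}")
    return [description[0] for description in cursor.description]


conn = build_demo_db()
print(column_names(conn, "employee"))
print(column_names(conn, "employee_directory"))
```

A user who is granted access only to the view works with a narrower description of the same stored data, which is the point of describing a database at more than one level.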

![Different Data Models](https://image.slidesharecdn.com/datamodeling-241227103608-a82bea5e/85/data-modelingEntity-Relationship-E-R-Models-UML-unified-modeling-language-pptx-5-320.jpg)

- Conceptual: describes WHAT the system contains
- Logical: describes HOW the system will be implemented, regardless of the DBMS
- Physical: describes HOW the system will be implemented using a specific DBMS [3]
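The three levels above can be sketched as one small Python example. Everything in it is an assumption made for illustration: the `Customer` and `Invoice` entities, their attributes, the `Attribute` helper class, and the choice of SQLite as the "specific DBMS". Foreign-key constraints are left out to keep the sketch short.

```python
import sqlite3
from dataclasses import dataclass

# Conceptual level: WHAT the system contains (entities and relationships only).
CONCEPTUAL = {
    "entities": ["Customer", "Invoice"],
    "relationships": [("Customer", "receives", "Invoice")],
}


# Logical level: attributes and keys, with abstract types and no DBMS details.
@dataclass(frozen=True)
class Attribute:
    name: str
    kind: str          # abstract type such as "integer", "text", "date"
    key: bool = False  # True if the attribute is the primary key


LOGICAL = {
    "Customer": [
        Attribute("customer_id", "integer", key=True),
        Attribute("name", "text"),
    ],
    "Invoice": [
        Attribute("invoice_id", "integer", key=True),
        Attribute("customer_id", "integer"),
        Attribute("issued_on", "date"),
    ],
}

# Physical level: map abstract types onto one specific DBMS (SQLite here).
SQLITE_TYPES = {"integer": "INTEGER", "text": "TEXT", "date": "TEXT"}


def to_sqlite_ddl(entity: str, attributes: list[Attribute]) -> str:
    """Render one logical entity as a SQLite CREATE TABLE statement."""
    columns = []
    for attribute in attributes:
        column = f"{attribute.name} {SQLITE_TYPES[attribute.kind]}"
        if attribute.key:
            column += " PRIMARY KEY"
        columns.append(column)
    return f'CREATE TABLE "{entity}" ({", ".join(columns)})'


conn = sqlite3.connect(":memory:")
for entity, attributes in LOGICAL.items():
    ddl = to_sqlite_ddl(entity, attributes)
    conn.execute(ddl)
    print(ddl)
```

Only the last step names a DBMS. Targeting a different system would mean swapping the type mapping and the DDL renderer while the conceptual and logical descriptions stay unchanged.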

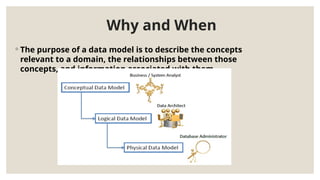

![Why and When](https://image.slidesharecdn.com/datamodeling-241227103608-a82bea5e/85/data-modelingEntity-Relationship-E-R-Models-UML-unified-modeling-language-pptx-13-320.jpg)

- Used to model data in a standard, consistent, predictable manner in order to manage it as a resource.
- To have a clear picture of the base data that your business needs.
- To identify missing and redundant base data [4].

![Why and When](https://image.slidesharecdn.com/datamodeling-241227103608-a82bea5e/85/data-modelingEntity-Relationship-E-R-Models-UML-unified-modeling-language-pptx-14-320.jpg)

- To establish a baseline for communication across functional boundaries within your organization.
- Provides a basis for defining business rules.
- Makes it cheaper, easier, and faster to upgrade your IT solutions [5].

![References](https://image.slidesharecdn.com/datamodeling-241227103608-a82bea5e/85/data-modelingEntity-Relationship-E-R-Models-UML-unified-modeling-language-pptx-15-320.jpg)

- [1] Pedersen, Torben Bach, and Christian S. Jensen. "Multidimensional data modeling for complex data." Proceedings 15th International Conference on Data Engineering (Cat. No. 99CB36337). IEEE, 1999.
- [2] Kitchenham, Barbara A., Robert T. Hughes, and Stephen G. Linkman. "Modeling software measurement data." IEEE Transactions on Software Engineering 27.9 (2001): 788-804.
- [3] Chebotko, Artem, Andrey Kashlev, and Shiyong Lu. "A big data modeling methodology for Apache Cassandra." 2015 IEEE International Congress on Big Data. IEEE, 2015.
- [4] Peckham, Joan, and Fred Maryanski. "Semantic data models." ACM Computing Surveys (CSUR) 20.3 (1988): 153-189.
- [5] Lv, Zhihan, et al. "Next-generation big data analytics: State of the art, challenges, and future research topics." IEEE Transactions on Industrial Informatics 13.4 (2017): 1891-1899.

More Related Content

Similar to data modelingEntity-Relationship (E-R) Models UML (unified modeling language).pptx (20)

1.1 Data Modelling - Part I (Understand Data Model).pdf

1.1 Data Modelling - Part I (Understand Data Model).pdfRakeshKumar145431

╠²

Data modeling is the process of creating a data model for data stored in a database. It ensures consistency in naming conventions, default values, semantics, and security while also ensuring data quality. There are three main types of data models: conceptual, logical, and physical. The conceptual model establishes entities, attributes, and their relationships. The logical model defines data element structure and relationships. The physical model describes database-specific implementation. The primary goal is accurately representing required data objects. Drawbacks include requiring application modifications for even small structure changes and lacking a standard data manipulation language.Exploring Data Modeling Techniques in Modern Data Warehouses

Exploring Data Modeling Techniques in Modern Data Warehousespriyanka rajput

╠²

This article delves deep into data modeling techniques in modern data warehouses, shedding light on their significance and various approaches. If you are aspiring to be a data analyst or data scientist, understanding data modeling is essential, making a Data Analytics Course in Bangalore, Lucknow, Bangalore, Pune, Delhi, Mumbai, Gandhinagar, and other cities across India an attractive proposition.

1-SDLC - Development Models ŌĆō Waterfall, Rapid Application Development, Agile...

1-SDLC - Development Models ŌĆō Waterfall, Rapid Application Development, Agile...JOHNLEAK1

╠²

This document provides information about different types of data models:

1. Conceptual data models define entities, attributes, and relationships at a high level without technical details.

2. Logical data models build on conceptual models by adding more detail like data types but remain independent of specific databases.

3. Physical data models describe how the database will be implemented for a specific database system, including keys, constraints and other features.Data Modeling.docx

Data Modeling.docxMichuki Samuel

╠²

Data modeling is the process of creating a visual representation of data within an information system to illustrate the relationships between different data types and structures. The goal is to model data at conceptual, logical, and physical levels to support business needs and requirements. Conceptual models provide an overview of key entities and relationships, logical models add greater detail, and physical models specify how data will be stored in databases. Data modeling benefits include reduced errors, improved communication and performance, and easier management of data mapping.Trends in Data Modeling

Trends in Data ModelingDATAVERSITY

╠²

Businesses cannot compete without data. Every organization produces and consumes it. Data trends are hitting the mainstream and businesses are adopting buzzwords such as Big Data, data vault, data scientist, etc., to seek solutions for their fundamental data issues. Few realize that the importance of any solution, regardless of platform or technology, relies on the data model supporting it. Data modeling is not an optional task for an organizationŌĆÖs data remediation effort. Instead, it is a vital activity that supports the solution driving your business.

This webinar will address emerging trends around data model application methodology, as well as trends around the practice of data modeling itself. We will discuss abstract models and entity frameworks, as well as the general shift from data modeling being segmented to becoming more integrated with business practices.

Takeaways:

How are anchor modeling, data vault, etc. different and when should I apply them?

Integrating data models to business models and the value this creates

Application development (Data first, code first, object first)DBMS data modeling.pptx

DBMS data modeling.pptxMrwafaAbbas

╠²

The document discusses three types of data models: conceptual, logical, and physical. A conceptual data model defines business concepts and rules and is created by business stakeholders. A logical data model defines how the system should be implemented regardless of the specific database and is created by data architects and analysts. A physical data model describes how the system will be implemented using a specific database management system and is created by database administrators and developers.Dbms notes

Dbms notesProf. Dr. K. Adisesha

╠²

The document provides an introduction to database management systems (DBMS). It can be summarized as follows:

1. A DBMS allows for the storage and retrieval of large amounts of related data in an organized manner. It removes data redundancy and allows for fast retrieval of data.

2. Key components of a DBMS include the database engine, data definition subsystem, data manipulation subsystem, application generation subsystem, and data administration subsystem.

3. A DBMS uses a data model to represent the organization of data in a database. Common data models include the entity-relationship model, object-oriented model, and relational model.Is 581 milestone 7 and 8 case study coastline systems consulting




Data modeling: Entity-Relationship (E-R) Models and UML (Unified Modeling Language)

- 2. Introduction
  - Data modeling is the process of creating a data model for an information system by applying formal data modeling techniques.
  - It is used to define and analyze the data requirements needed to support business processes.
  - It therefore involves professional data modelers working closely with business stakeholders, as well as potential users of the information system [1].

- 3. What is a Data Model
  - A data model is a collection of conceptual tools for describing data, data relationships, data semantics and consistency constraints.
  - A data model is a conceptual representation of the data structures required for a database, and it is a powerful way to express and communicate business requirements.
  - A data model visually represents the nature of the data, the business rules governing the data, and how the data will be organized in the database.

- 4. What is a Data Model
  - A data model provides a way to describe the design of a database at the physical, logical and view levels.
  - Three different types of data models are produced while progressing from requirements to the actual database used for the information system [2].

- 5. Different Data Models
  - Conceptual: describes WHAT the system contains.
  - Logical: describes HOW the system will be implemented, regardless of the DBMS.
  - Physical: describes HOW the system will be implemented using a specific DBMS [3].

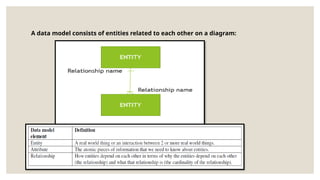
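The three levels can be illustrated with a minimal Python sketch. It is not taken from the slides: the `Customer` entity with a customer number and a name is borrowed from the example later in the deck, and every identifier (`CONCEPTUAL_MODEL`, `PHYSICAL_DDL`, `save_customer`, `load_customer`) as well as the choice of SQLite as the "specific DBMS" is an assumption made for illustration.

```python
import sqlite3
from dataclasses import dataclass

# Conceptual level: WHAT the system contains (entity names only).
CONCEPTUAL_MODEL = {"entities": ["Customer"]}


# Logical level: attributes and keys, independent of any DBMS.
@dataclass(frozen=True)
class Customer:
    customer_number: int  # identifies one customer
    name: str


# Physical level: the same entity expressed for one specific DBMS.
# SQLite is an assumed choice; another DBMS would need different DDL.
PHYSICAL_DDL = """
CREATE TABLE customer (
    customer_number INTEGER PRIMARY KEY,
    name            TEXT NOT NULL
)
"""


def build_physical_model() -> sqlite3.Connection:
    """Create an in-memory SQLite database holding the physical model."""
    conn = sqlite3.connect(":memory:")
    conn.execute(PHYSICAL_DDL)
    return conn


def save_customer(conn: sqlite3.Connection, customer: Customer) -> None:
    """Store a logical Customer as a row of the physical table."""
    conn.execute(
        "INSERT INTO customer (customer_number, name) VALUES (?, ?)",
        (customer.customer_number, customer.name),
    )


def load_customer(conn: sqlite3.Connection, customer_number: int):
    """Read a row back into a logical Customer, or None if it is absent."""
    row = conn.execute(
        "SELECT customer_number, name FROM customer WHERE customer_number = ?",
        (customer_number,),
    ).fetchone()
    return Customer(*row) if row else None
```

Only `PHYSICAL_DDL` and the two helper functions depend on SQLite. The conceptual and logical parts would stay unchanged if a different DBMS were chosen.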

- 6. A data model consists of entities related to each other on a diagram:

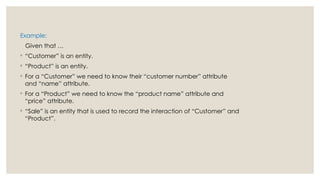

- 7. Example: Given that ...
  - "Customer" is an entity.
  - "Product" is an entity.
  - For a "Customer" we need to know their "customer number" attribute and "name" attribute.
  - For a "Product" we need to know the "product name" attribute and "price" attribute.
  - "Sale" is an entity that is used to record the interaction of "Customer" and "Product".

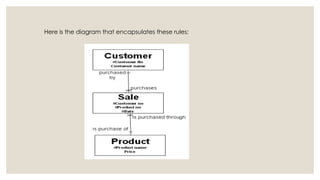

- 8. Here is the diagram that encapsulates these rules:

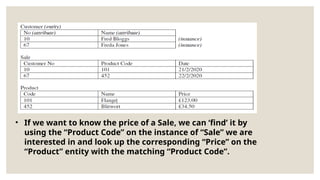

- 9. If we want to know the price of a Sale, we can find it by taking the "Product Code" on the instance of "Sale" we are interested in and looking up the corresponding "Price" on the "Product" entity with the matching "Product Code".
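The lookup described above can be sketched in a few lines of Python. This is an illustrative sketch, not the deck's own implementation: the field names, the sample data and the helper `price_of_sale` are assumptions, and the "Product Code" attribute is taken from the wording of slide 9.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Customer:
    customer_number: int
    name: str


@dataclass(frozen=True)
class Product:
    product_code: str  # key used by Sale to reference a Product
    product_name: str
    price: float


@dataclass(frozen=True)
class Sale:
    # A Sale records the interaction of one Customer and one Product
    # by holding the key of each.
    customer_number: int
    product_code: str


def price_of_sale(sale: Sale, products_by_code: dict) -> float:
    """Find the price of a sale via the matching product code."""
    return products_by_code[sale.product_code].price


# Hypothetical sample data.
products = {
    p.product_code: p
    for p in (Product("P-100", "Notebook", 3.5), Product("P-200", "Pen", 1.25))
}
customer = Customer(1, "Ada")
sale = Sale(customer.customer_number, "P-200")
```

Calling `price_of_sale(sale, products)` follows the product code from the sale to the product and returns that product's price. The price is stored once, on the product, rather than copied into every sale.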

- 10. Types of Data Models
  - Entity-Relationship (E-R) Models
  - UML (Unified Modeling Language)

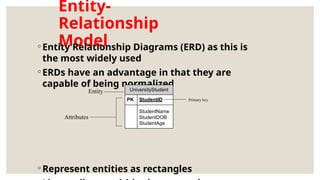

- 11. Entity-Relationship Model
  - Entity-Relationship Diagrams (ERDs) are the most widely used notation.
  - ERDs have the advantage that the models they describe can be normalized.
  - Entities are represented as rectangles. Example: the entity UniversityStudent has the attributes StudentID (primary key, PK), StudentName, StudentDOB and StudentAge.
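As a hedged illustration of what the primary key on the UniversityStudent entity means in practice, the sketch below maps the entity to a SQLite table using Python's standard `sqlite3` module. The table and column names, the column types and the helper functions are assumptions, since the slide gives only the attribute names.

```python
import sqlite3


def create_student_table() -> sqlite3.Connection:
    """Create an in-memory table mirroring the UniversityStudent entity."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        """
        CREATE TABLE university_student (
            student_id   INTEGER PRIMARY KEY,  -- PK: unique per student
            student_name TEXT NOT NULL,
            student_dob  TEXT NOT NULL,        -- ISO date string (assumed format)
            student_age  INTEGER
        )
        """
    )
    return conn


def add_student(conn, student_id, name, dob, age) -> bool:
    """Insert a student; return False if the primary key already exists."""
    try:
        conn.execute(
            "INSERT INTO university_student VALUES (?, ?, ?, ?)",
            (student_id, name, dob, age),
        )
        return True
    except sqlite3.IntegrityError:
        # The DBMS rejects a second row with the same primary key.
        return False
```

StudentAge can be derived from StudentDOB, so a normalized design might compute it on demand instead of storing it. The sketch keeps the column only because the slide lists it as an attribute.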

- 12. Why and When
  - The purpose of a data model is to describe the concepts relevant to a domain, the relationships between those concepts, and the information associated with them.

- 13. Why and When
  - Used to model data in a standard, consistent, predictable manner in order to manage it as a resource.
  - To have a clear picture of the base data that your business needs.
  - To identify missing and redundant base data [4].

- 14. Why and When
  - To establish a baseline for communication across functional boundaries within your organization.
  - To provide a basis for defining business rules.
  - To make it cheaper, easier, and faster to upgrade your IT solutions [5].

- 15. References
  - [1] Pedersen, Torben Bach, and Christian S. Jensen. "Multidimensional data modeling for complex data." Proceedings 15th International Conference on Data Engineering (Cat. No. 99CB36337). IEEE, 1999.
  - [2] Kitchenham, Barbara A., Robert T. Hughes, and Stephen G. Linkman. "Modeling software measurement data." IEEE Transactions on Software Engineering 27.9 (2001): 788-804.
  - [3] Chebotko, Artem, Andrey Kashlev, and Shiyong Lu. "A big data modeling methodology for Apache Cassandra." 2015 IEEE International Congress on Big Data. IEEE, 2015.
  - [4] Peckham, Joan, and Fred Maryanski. "Semantic data models." ACM Computing Surveys (CSUR) 20.3 (1988): 153-189.
  - [5] Lv, Zhihan, et al. "Next-generation big data analytics: State of the art, challenges, and future research topics." IEEE Transactions on Industrial Informatics 13.4 (2017): 1891-1899.