Queue Up, Queue Up: Kafka


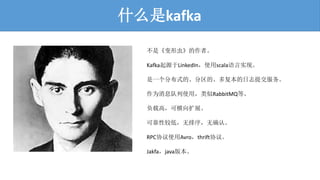

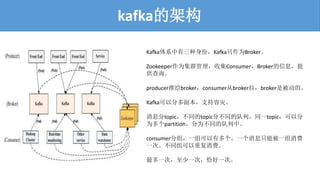

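Kafka is a distributed commit-log service that originated at LinkedIn and is implemented in Scala. Used as a message queue, it sustains high load and scales horizontally. It stores data in partitions with multiple replicas and relies on ZooKeeper for cluster management. Producers push messages to brokers and consumers pull messages from brokers, with support for several consumption modes and for fault tolerance (disaster recovery).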
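To make the partitioning and push/pull ideas above concrete, here is a minimal in-memory sketch in Python. It is a toy model under stated assumptions, not Kafka's client API: the `Broker`, `Partition`, and `Consumer` classes and their methods are hypothetical names, each partition is a plain Python list whose index serves as the offset, and the key-to-partition hash uses `zlib.crc32` as a stand-in (Kafka's default partitioner uses murmur2). Replication and ZooKeeper coordination are left out. Reading "several consumption modes" as queue-style versus publish/subscribe-style consumption through consumer groups is also an assumption about what the deck covers.

```python
import zlib
from dataclasses import dataclass, field


@dataclass
class Partition:
    """An append-only log; a record's index in the list is its offset."""
    records: list = field(default_factory=list)

    def append(self, key, value):
        self.records.append((key, value))
        return len(self.records) - 1  # offset of the record just written


class Broker:
    """Hypothetical single-broker toy holding one topic split into partitions."""

    def __init__(self, num_partitions):
        self.partitions = [Partition() for _ in range(num_partitions)]

    def partition_for(self, key):
        # Same key -> same partition, so records for one key stay in order.
        # crc32 is an illustrative stand-in, not Kafka's murmur2 partitioner.
        return zlib.crc32(key.encode()) % len(self.partitions)

    def push(self, key, value):
        """Producer side: route the record by key and append it to that log."""
        p = self.partition_for(key)
        return p, self.partitions[p].append(key, value)

    def fetch(self, partition, offset, max_records=10):
        """Consumer side: return up to max_records starting at the given offset."""
        return self.partitions[partition].records[offset:offset + max_records]


class Consumer:
    """Pull-based consumer that owns some partitions and tracks its own offsets."""

    def __init__(self, broker, assigned):
        self.broker = broker
        self.offsets = {p: 0 for p in assigned}

    def poll(self):
        out = []
        for p, off in self.offsets.items():
            batch = self.broker.fetch(p, off)
            self.offsets[p] = off + len(batch)  # advance only past what was read
            out.extend(batch)
        return out


if __name__ == "__main__":
    broker = Broker(num_partitions=3)
    for i in range(6):
        broker.push(f"user-{i % 2}", f"event-{i}")
    # Queue-style: two consumers in one group split the partitions, so each
    # record is read once by the group. A consumer assigned every partition on
    # its own would instead see all records (publish/subscribe-style).
    group = [Consumer(broker, [0, 1]), Consumer(broker, [2])]
    for c in group:
        print(c.poll())
```

Because each consumer pulls from an offset it tracks itself, a slow consumer simply falls behind in the log rather than being flooded by the broker, which is one reason a pull model fits a commit-log design like the one described above.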


Recommended

闯补惫补类加载器

闯补惫补类加载器Fu Cheng

?

闯补惫补类加载器负责将Java字节码加载到Java虚拟机中,是Java语言的重要创新。通过树状结构组织不同的类加载器,采用代理模式保护核心库的类型安全。可以编写自定义类加载器以满足特定需求,并在Web和OSGi应用中实现类的隔离和保护。Avro

AvroEric Turcotte

?

Avro is a data serialization system that provides dynamic typing, a schema-based design, and efficient encoding. It supports serialization, RPC, and has implementations in many languages with first-class support for Hadoop. The project aims to make data serialization tools more useful and "sexy" for distributed systems.Microservices in the Enterprise

Microservices in the Enterprise Jesus Rodriguez

?

This document provides an overview of microservices in the enterprise. It discusses factors driving the rise of microservices like SOA fatigue and the need for faster innovation. Examples of microservice architectures from companies like Netflix, Twitter and Gilt are presented. Key capabilities for building enterprise-ready microservices are described, including service discovery, description, deployment isolation using containers, data/verb partitioning, lightweight middleware, API gateways and observability. Open source technologies that support implementing these capabilities are also outlined. The document concludes that microservices are the future of distributed systems and enterprises should implement solutions from first principles using inspiration from internet companies.RPC protocols

RPC protocols?? ?

?

RPC protocols like SOAP, XML-RPC, JSON-RPC, and Thrift allow for remote procedure calls between a client and server. RPC works by having a client call an RPC function, which then calls the service and executes the request. The service returns a reply and the program continues. Interface layers can be high-level existing API calls, middle-level custom API calls generated by a compiler, or low-level direct RPC API calls. Popular data formats used include XML, JSON, HTTP, and TCP/IP. Thrift is an open-source protocol developed by Facebook that supports many programming languages. It generates stubs for easy client-server communication. Avro and Protocol Buffers also generate stubs and are supported by languages likeAvro - More Than Just a Serialization Framework - CHUG - 20120416

Avro - More Than Just a Serialization Framework - CHUG - 20120416Chicago Hadoop Users Group

?

The document discusses Apache Avro, a data serialization framework. It provides an overview of Avro's history and capabilities. Key points include that Avro supports schema evolution, multiple languages, and interoperability with other formats like Protobuf and Thrift. The document also covers implementing Avro, including using the generic, specific and reflect data types, and examples of writing and reading data. Performance is addressed, finding that Avro size is competitive while speed is in the top half.Protobuf & Code Generation + Go-Kit

Protobuf & Code Generation + Go-KitManfred Touron

?

The document discusses using protocol buffers (Protobuf) and code generation to reduce boilerplate code when developing microservices with the Go kit framework. It provides an example of defining a service in Protobuf and generating Go code for the service endpoints, transports, and registration functions using a custom code generation tool called protoc-gen-gotemplate. The tool reads Protobuf service definitions and template files to generate standardized, up-to-date Go code that implements the services while avoiding much of the repetitive code normally required.OpenFest 2016 - Open Microservice Architecture

OpenFest 2016 - Open Microservice ArchitectureNikolay Stoitsev

?

This document discusses open microservice architecture with an emphasis on loosely coupled service-oriented architecture and its components, including users, products, and payments. It highlights various technologies and tools for deployment, communication, load balancing, and monitoring such as Docker, Kubernetes, and Kafka. Key metrics to monitor include success rates, error rates, and resource usage, among others.3 avro hug-2010-07-21

3 avro hug-2010-07-21Hadoop User Group

?

Avro is a data serialization system that provides data interchange and interoperability between systems. It allows for efficient encoding of data and schema evolution. Avro defines data using JSON schemas and provides dynamic typing which allows data to be read without code generation. It includes a file format and supports MapReduce workflows. Avro aims to become the standard data format for Hadoop applications by providing rich data types, interoperability between languages, and compatibility between versions.G rpc lection1

G rpc lection1eleksdev

?

This document provides instructions for creating a gRPC Hello World sample in C# using .NET Core. It describes creating client and server projects with protobuf definition files. The server project implements a Greeter service that returns a greeting message. The client project calls the SayHello method to get a response from the server. Running the projects demonstrates a basic gRPC communication.RPC: Remote procedure call

RPC: Remote procedure callSunita Sahu

?

RPC allows a program to call a subroutine that resides on a remote machine. When a call is made, the calling process is suspended and execution takes place on the remote machine. The results are then returned. This makes the remote call appear local to the programmer. RPC uses message passing to transmit information between machines and allows communication between processes on different machines or the same machine. It provides a simple interface like local procedure calls but involves more overhead due to network communication.HTTP2 and gRPC

HTTP2 and gRPCGuo Jing

?

gRPC is a modern open source RPC framework that enables client and server applications to communicate transparently. It is based on HTTP/2 for its transport mechanism and Protocol Buffers as its interface definition language. Some benefits of gRPC include being fast due to its use of HTTP/2, supporting multiple programming languages, and enabling server push capabilities. However, it also has some downsides such as potential issues with load balancing of persistent connections and requiring external services for service discovery.??? ???? (Apache Thrift)

??? ???? (Apache Thrift) Jin wook

?

Scalable Cross-Language Services? ?? ??? ???? (Apache Thrift) ? ?? ??? ????Building High Performance APIs In Go Using gRPC And Protocol Buffers

Building High Performance APIs In Go Using gRPC And Protocol BuffersShiju Varghese

?

The document discusses building high performance APIs in Go using gRPC and Protocol Buffers. It describes how gRPC is a high performance, open-source RPC framework that uses Protocol Buffers for serialization. It provides an overview of building APIs with gRPC by defining services and messages with Protobuf, generating code, implementing servers and clients. The workflow allows building APIs that are efficient, strongly typed and work across languages.3 apache-avro

3 apache-avrozafargilani

?

Apache Avro is a data serialization system and RPC framework that provides rich data structures and compact, fast binary data formats. It uses JSON for schemas and relies on schemas stored with data to allow serialization without per-value overhead. Avro supports Java, C, C++, C#, Python and Ruby and is commonly used in Hadoop for data persistence and communication between nodes. It compares similarly to Protobuf and Thrift but with some differences in implementation, error handling and extensibility.Enabling Googley microservices with HTTP/2 and gRPC.

Enabling Googley microservices with HTTP/2 and gRPC.Alex Borysov

?

The document discusses gRPC as a high-performance, open-source RPC framework that leverages HTTP/2 for efficient communication, enabling applications to handle tens of billions of requests per second. It outlines the advantages of gRPC over traditional HTTP/1.x, such as reduced latency and CPU resource consumption, highlighting the protocol's features like multiplexing, header compression, and binary framing. Additionally, it provides implementation examples, technical specifications, and comparisons to prior HTTP standards.Introduction to gRPC - Mete Atamel - Codemotion Rome 2017

Introduction to gRPC - Mete Atamel - Codemotion Rome 2017Codemotion

?

This document provides an overview of gRPC, a high-performance, open-source framework for remote procedure calls, highlighting its motivation, design goals, and basic implementation. It discusses the efficiency of gRPC using HTTP/2 and protocol buffers, as well as connection options such as unary, server-side streaming, and bi-directional streaming. Additionally, the document includes practical examples and code snippets demonstrating how to create gRPC services in various programming languages.gRPC: The Story of Microservices at Square

gRPC: The Story of Microservices at SquareApigee | Google Cloud

?

The document discusses the benefits of using gRPC and microservices at Square, highlighting its preference for modern communication methods over traditional monoliths. It outlines the features of gRPC, including support for various programming languages, efficient payload formats using Protocol Buffers, and performance improvements over JSON/HTTP. The presentation also indicates the use of microservices architecture for better scalability and efficiency in distributed systems.惭蝉补読书会#3前半

惭蝉补読书会#3前半健仁 天沼

?

REST and RPC are common approaches for building APIs. REST uses HTTP and focuses on resources, using verbs like GET, PUT and DELETE to manipulate representations of resources. It favors loose coupling between services. RPC relies on requests and returns structured data, often using protocols like Thrift, Protobuf or JSON-RPC. It allows for tight integration but is less flexible. The document also discusses pub/sub, event-driven architectures, reactive programming and other approaches relevant to distributed systems. Robert Kubis - gRPC - boilerplate to high-performance scalable APIs - code.t...

Robert Kubis - gRPC - boilerplate to high-performance scalable APIs - code.t...AboutYouGmbH

?

The document discusses gRPC, an open source framework for building microservices. It was created by Google to be a high performance, open source universal RPC framework. gRPC uses HTTP/2 for transport, Protocol Buffers as the interface definition language, and generated client/server code for many languages to make cross-platform communications simple and efficient. The document provides an overview of gRPC's goals and architecture, how to define a service using .proto files, and examples of common RPC patterns like unary, streaming, and bidirectional calls.Apache kafka

Apache kafkaRahul Jain

?

This document discusses Apache Kafka, an open-source distributed event streaming platform. It provides an introduction to Kafka's design and capabilities including:

1) Kafka is a distributed publish-subscribe messaging system that can handle high throughput workloads with low latency.

2) It is designed for real-time data pipelines and activity streaming and can be used for transporting logs, metrics collection, and building real-time applications.

3) Kafka supports distributed, scalable, fault-tolerant storage and processing of streaming data across multiple producers and consumers.Apache big data 2016 - Speaking the language of Big Data

Apache big data 2016 - Speaking the language of Big Datatechmaddy

?

The document discusses various data interchange protocols in big data, focusing on their characteristics and the need for efficient data serialization. Protocols such as Protocol Buffers, Apache Thrift, and Apache Avro are highlighted for their flexibility, ease of integration, and ability to handle schema evolution. The author emphasizes the importance of language-neutral schemas and efficient data handling in distributed systems.Driving containerd operations with gRPC

Driving containerd operations with gRPCDocker, Inc.

?

containerd uses gRPC and protocol buffers to define its API. gRPC provides benefits like code generation, performance, and common standards. The containerd gRPC services include Execution, Shim, and Content, which define methods like Create, Start, and List. Protocol buffer definitions generate client and server code in various languages. Developers can build clients and extend containerd with new services. The containerd API is under active development and aims to stabilize before the 1.0 release while allowing backwards compatibility through gRPC versioning.GRPC 101 - DevFest Belgium 2016

GRPC 101 - DevFest Belgium 2016Alex Van Boxel

?

This document provides an introduction and overview of GRPC, a remote procedure call framework developed by Google. It discusses the history and limitations of previous RPC technologies like CORBA, DCOM, RMI, SOAP, and REST. GRPC aims to provide a simple, high performance, language-agnostic, and extensible RPC framework. It uses protocol buffers for defining service interfaces and HTTP/2 for transport, allowing for features like bi-directional streaming. The document also covers how GRPC supports versioning of services and error handling.Flume

Flumechernbb

?

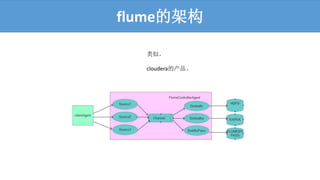

Flume 是一种数据流处理工具,分为早期复杂的OG架构和现代简单的NG架构。它通过使用代理、源、通道和接收器来构建流作业,支持多种数据源和拦截器。数据的持久性依赖于通道,系统设计上能够实现负载均衡和故障转移。贬产补蝉别拾荒者

贬产补蝉别拾荒者chernbb

?

贬叠补蝉别是一个基于贬补诲辞辞辫的分布式列式开源数据库,具有类似于拾荒者的特性。它的架构包括窜辞辞办别别辫别谤、贬惭补蝉迟别谤和搁别驳颈辞苍厂别谤惫别谤,并支持多种操作如辫耻迟、驳别迟和蝉肠补苍。贬叠补蝉别的行键设计至关重要,涉及排序和组合多个字段以优化存储和访问。丑补诲辞辞辫中的懒人贬颈惫别

丑补诲辞辞辫中的懒人贬颈惫别chernbb

?

Hive 是一个基于 Hadoop 的数据仓库工具,提供类似 SQL 的查询功能,适用于数据仓库的统计分析。文档讨论了 Hive 的一些局限性,如文件依赖、缺乏更新功能、分区管理的复杂性和索引构建的不足。尽管有这些懒人表现,Hive 仍支持多种文件格式和丰富的数据结构,能够与 HBase 和其他数据库进行交互。More Related Content

More from chernbb

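Flume

Flume is a data-flow processing tool that comes in two architectures: the early, complex OG architecture and the modern, simpler NG architecture. Flow jobs are built from agents, sources, channels, and sinks, with support for many data sources and interceptors. Data durability depends on the channel, and the system is designed to support load balancing and failover.

HBase Scavenger

HBase is a distributed, column-oriented, open-source database built on Hadoop, which the deck likens to a scavenger. Its architecture comprises ZooKeeper, HMaster, and RegionServer, and it supports operations such as put, get, and scan. Row-key design is critical in HBase: it involves ordering and combining multiple fields to optimize storage and access.

The Lazy Hive in Hadoop

Hive is a Hadoop-based data-warehouse tool that provides SQL-like querying and is suited to statistical analysis in data warehouses. The deck discusses several of Hive's limitations, such as its dependence on files, lack of update support, complex partition management, and weak index building. Despite these "lazy" traits, Hive supports multiple file formats and rich data structures and can interoperate with HBase and other databases.

Big Data Insurance (Copy)

Big data is transforming the insurance industry, improving pricing theory and risk management through information sharing and data analysis. The growth of the internet and related technology has brought consumers and insurers into closer interaction, while also creating new challenges such as consumer education and fraud detection. The insurance industry must adapt to a changing environment to better meet consumer needs.

Riding on Jack Ma's Coattails: A Talk on Management

This deck explores the differences between Eastern and Western management, the goals and functions of corporate management, and the roles and responsibilities of managers. It argues that managers should motivate employees through management by objectives and performance standards, and should focus on employee development and a sense of responsibility. It also covers different forms of management structure and their importance in achieving company goals.

Microservices

Microservices are a loosely coupled service architecture in which small, independent business services are developed and deployed separately. Compared with building a single monolithic unit, microservices offer several advantages, such as parallel development by multiple teams and easier integration and testing, but they can also bring added complexity and management challenges. They suit environments where services change frequently or where legacy systems must be integrated.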


- 1. Queue Up, Queue Up! Kafka