"Learning transferable architectures for scalable image recognition" Paper Review

"Learning transferable architectures for scalable image recognition, 2018 CVPR" Paper Review. NASNet

More from LEE HOSEONG (14)

Unsupervised anomaly detection using style distillation

Unsupervised anomaly detection using style distillationLEE HOSEONG

╠²

The document discusses using convolutional autoencoders for unsupervised anomaly detection. It describes training a convolutional autoencoder model on normal data to learn the distribution of normal examples, then using the model to detect anomalies in new data based on the reconstruction error. The process involves training the autoencoder to minimize the difference between inputs and outputs, then using the trained model to encode new data and flag examples with a high reconstruction error as anomalies.do adversarially robust image net models transfer better

do adversarially robust image net models transfer betterLEE HOSEONG

╠²

The document discusses an experiment comparing the transfer learning performance of standard ImageNet models versus adversarially robust ImageNet models. The experiment finds that robust models consistently match or outperform standard models on a variety of downstream transfer learning tasks, despite having lower accuracy on ImageNet. Further analysis shows robust models improve with increased width and that the optimal level of robustness depends on properties of the downstream task like dataset granularity. Overall, the findings suggest adversarially robust models transfer learned representations better than standard models."The Many Faces of Robustness: A Critical Analysis of Out-of-Distribution Gen...

"The Many Faces of Robustness: A Critical Analysis of Out-of-Distribution Gen...LEE HOSEONG

╠²

"The Many Faces of Robustness: A Critical Analysis of Out-of-Distribution Generalization" Paper ReviewMixed Precision Training Review

Mixed Precision Training ReviewLEE HOSEONG

╠²

This document discusses mixed precision training techniques for deep neural networks. It introduces three techniques to train models with half-precision floating point without losing accuracy: 1) Maintaining a FP32 master copy of weights, 2) Scaling the loss to prevent small gradients, and 3) Performing certain arithmetic like dot products in FP32. Experimental results show these techniques allow a variety of networks to match the accuracy of FP32 training while reducing memory and bandwidth. The document also discusses related work and PyTorch's new Automatic Mixed Precision features.MVTec AD: A Comprehensive Real-World Dataset for Unsupervised Anomaly Detection

MVTec AD: A Comprehensive Real-World Dataset for Unsupervised Anomaly DetectionLEE HOSEONG

╠²

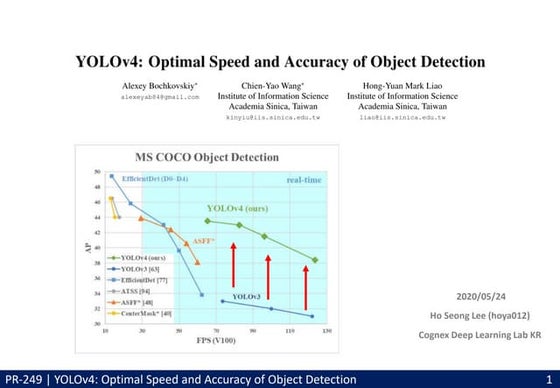

Paper review. "MVTec AD: A Comprehensive Real-World Dataset for Unsupervised Anomaly Detection", 2019 CVPRYOLOv4: optimal speed and accuracy of object detection review

YOLOv4: optimal speed and accuracy of object detection reviewLEE HOSEONG

╠²

YOLOv4 builds upon previous YOLO models and introduces techniques like CSPDarknet53, SPP, PAN, Mosaic data augmentation, and modifications to existing methods to achieve state-of-the-art object detection speed and accuracy while being trainable on a single GPU. Experiments show that combining these techniques through a "bag of freebies" and "bag of specials" approach improves classifier and detector performance over baselines on standard datasets. The paper contributes an efficient object detection model suitable for production use with limited resources.FixMatch:simplifying semi supervised learning with consistency and confidence

FixMatch:simplifying semi supervised learning with consistency and confidenceLEE HOSEONG

╠²

This document summarizes the FixMatch paper, which proposes a simple semi-supervised learning method that achieves state-of-the-art results. FixMatch combines pseudo-labeling and consistency regularization by generating pseudo-labels for unlabeled data using a model's prediction on a weakly augmented version and enforcing consistency on a strongly augmented version. Extensive ablation studies show that FixMatch outperforms previous methods on standard benchmarks even with limited labeled data and identifies consistency regularization and pseudo-labeling as the most important factors for its success."Revisiting self supervised visual representation learning" Paper Review

"Revisiting self supervised visual representation learning" Paper ReviewLEE HOSEONG

╠²

This paper revisits self-supervised visual representation learning techniques. It conducts a large-scale study comparing different CNN architectures (ResNet, RevNet, VGG) and self-supervised techniques (rotation, exemplar, jigsaw, relative patch location). The study finds that using modern CNN architectures like ResNet instead of older AlexNet models significantly improves performance. Increasing the width of networks also boosts performance of self-supervised learning. Evaluation of representations on a new dataset shows the learned features generalize well.Unsupervised visual representation learning overview: Toward Self-Supervision

Unsupervised visual representation learning overview: Toward Self-SupervisionLEE HOSEONG

╠²

Self-supervised learning uses unlabeled data to learn visual representations through pretext tasks like predicting relative patch location, solving jigsaw puzzles, or image rotation. These tasks require semantic understanding to solve but only use unlabeled data. The features learned through pretraining on pretext tasks can then be transferred to downstream tasks like image classification and object detection, often outperforming supervised pretraining. Several papers introduce different pretext tasks and evaluate feature transfer on datasets like ImageNet and PASCAL VOC. Recent work combines multiple pretext tasks and shows improved generalization across tasks and datasets.Human uncertainty makes classification more robust, ICCV 2019 Review

Human uncertainty makes classification more robust, ICCV 2019 ReviewLEE HOSEONG

╠²

1. The document summarizes a research paper that proposes training deep neural networks on soft labels representing human uncertainty in image classification, which improves generalization and robustness compared to training on hard labels.

2. Experiments show that models trained on soft labels constructed from human responses better fit patterns of human uncertainty and improve accuracy, cross-entropy, and a new second-best accuracy measure on various generalization datasets.

3. Alternative soft label methods are also explored, finding that human uncertainty provides a more important contribution than soft labels alone. While robustness to adversarial attacks is improved, defenses are still needed.Single Image Super Resolution Overview

Single Image Super Resolution OverviewLEE HOSEONG

╠²

This document provides an overview of single image super resolution using deep learning. It discusses how super resolution can be used to generate a high resolution image from a low resolution input. Deep learning models like SRCNN were early approaches for super resolution but newer models use deeper networks and perceptual losses. Generative adversarial networks have also been applied to improve perceptual quality. Key applications are in satellite imagery, medical imaging, and video enhancement. Metrics like PSNR and SSIM are commonly used but may not correlate with human perception. Overall, deep learning has advanced super resolution techniques but challenges remain in fully evaluating perceptual quality.2019 ICLR Best Paper Review

2019 ICLR Best Paper ReviewLEE HOSEONG

╠²

This document provides a review of the paper "The Lottery Ticket Hypothesis: Finding Sparse, Trainable Neural Networks" presented at ICLR 2019. The paper proposes that dense neural networks contain sparse subnetworks that are capable of learning in isolation with the same accuracy in fewer iterations if they retain their original initialization weights. Through experiments on MNIST, CIFAR10 and ImageNet datasets, the paper finds evidence that iterative pruning can discover such "winning tickets" and achieve better performance than one-shot pruning or training sparse subnetworks from random initialization. However, further work is needed to test the hypothesis on larger datasets and optimize the resulting architectures.2019 cvpr paper_overview

2019 cvpr paper_overviewLEE HOSEONG

╠²

Statistics and Visualization of acceptance rate, main keyword for the main Computer Vision conference (CVPR)

20 CVPR Paper ReviewPelee: a real time object detection system on mobile devices Paper Review

Pelee: a real time object detection system on mobile devices Paper ReviewLEE HOSEONG

╠²

This document summarizes the Pelee object detection system which uses the PeleeNet efficient feature extraction network for real-time object detection on mobile devices. PeleeNet improves on DenseNet with two-way dense layers, a stem block, dynamic bottleneck layers, and transition layers without compression. Pelee uses SSD with PeleeNet, selecting fewer feature maps and adding residual prediction blocks for faster, more accurate detection compared to SSD and YOLO. The document concludes that PeleeNet and Pelee achieve real-time classification and detection on devices, outperforming existing models in speed, cost and accuracy with simple code."Learning transferable architectures for scalable image recognition" Paper Review

- 1. Learning Transferable Architectures for Scalable Image Recognition. Barret Zoph, Vijay Vasudevan, Jonathon Shlens, Quoc V. Le (Google Brain)

- 2. Contents
  - Introduction
  - Contribution
  - Method
  - Result

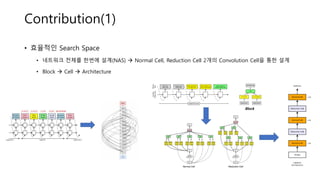

- 3. Introduction
  - Follow-up paper to Neural Architecture Search with Reinforcement Learning (NAS)
  - NAS: architecture design for a CNN (CIFAR-10) and an RNN (Penn Treebank)
  - Even on a small dataset such as CIFAR-10, training took 800 GPUs and 28 days → what about large datasets?
  - Learning Transferable Architectures for Scalable Image Recognition (CVPR 2018)
  - Focuses on CNNs and proposes a transferable, more efficient architecture (NASNet)
  - The convolution cell found on CIFAR-10 is applied to ImageNet → achieves SOTA performance
  - Reduces the time required for training (the search) relative to NAS (500 GPUs, 4 days, x7 speed-up)
  - Note: NAS used Nvidia K40 GPUs; NASNet used Nvidia P100 GPUs
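The cost comparison on this slide can be checked with simple arithmetic. The sketch below uses only the GPU counts and durations quoted above; the variable names are illustrative. The raw GPU-day ratio comes out larger than the quoted x7, which is consistent with the note that the two searches ran on different GPU generations, so a raw count is not a like-for-like comparison.

```python
# Back-of-envelope search cost, using only the numbers quoted on the slide.
nas_gpus, nas_days = 800, 28        # NAS (Nvidia K40)
nasnet_gpus, nasnet_days = 500, 4   # NASNet (Nvidia P100)

nas_gpu_days = nas_gpus * nas_days            # total GPU-days for NAS
nasnet_gpu_days = nasnet_gpus * nasnet_days   # total GPU-days for NASNet

# Raw ratio ignores that the two searches used different hardware.
raw_ratio = nas_gpu_days / nasnet_gpu_days

print(nas_gpu_days, nasnet_gpu_days, raw_ratio)
```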

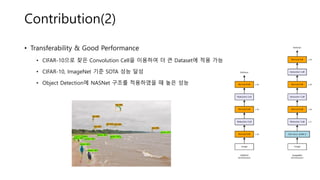
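As a rough sanity check on the search-cost figures quoted in the Introduction slide, the raw GPU-day budgets can be compared directly. This is only a back-of-the-envelope sketch: the variable names are illustrative, and because the two searches ran on different GPU generations (K40 vs. P100, per the slide's footnote), raw GPU-days are not directly comparable. The x7 speed-up is the figure reported on the slide and is not derived here.

```python
# GPU counts and durations as quoted on the Introduction slide.
nas_gpus, nas_days = 800, 28        # NAS (Nvidia K40)
nasnet_gpus, nasnet_days = 500, 4   # NASNet (Nvidia P100)

# Raw budgets in GPU-days (ignores the hardware difference).
nas_gpu_days = nas_gpus * nas_days
nasnet_gpu_days = nasnet_gpus * nasnet_days

# Ratio of raw GPU-days; this is NOT the slide's x7 figure.
raw_ratio = nas_gpu_days / nasnet_gpu_days

print(nas_gpu_days, nasnet_gpu_days, raw_ratio)
```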

- 4. Contribution(1) • Efficient search space • Designing the entire network at once (NAS) → designing it through two Convolution Cells, the Normal Cell and the Reduction Cell • Block → Cell → Architecture

- 5. Contribution(2) • Transferability & Good Performance • Convolution Cells found on CIFAR-10 can be applied to larger datasets • Achieves SOTA performance on CIFAR-10 and ImageNet • High performance when the NASNet architecture is applied to object detection

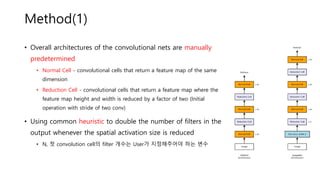

- 6. Method(1) • The overall architectures of the convolutional nets are manually predetermined • Normal Cell: a convolutional cell that returns a feature map of the same dimensions • Reduction Cell: a convolutional cell that returns a feature map whose height and width are reduced by a factor of two (its initial operations use a stride of two) • A common heuristic is used: the number of filters in the output is doubled whenever the spatial activation size is reduced • N (the number of times the Normal Cell is repeated) and the number of filters in the first convolution cell are variables the user must specify

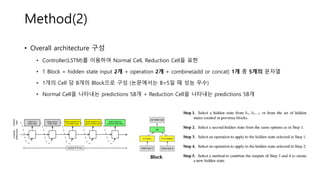
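The stacking rule from the Method(1) slide can be sketched in a few lines of Python. This is a minimal illustration, not the paper's code: the function name `stack_cells`, the choice of two reduction stages, and the example values (N = 2, 32 filters, 32x32 input) are all assumptions made for the example.

```python
from typing import List, Tuple


def stack_cells(n: int, first_filters: int, input_size: int,
                num_reductions: int = 2) -> List[Tuple[str, int, int]]:
    """Return (cell_type, spatial_size, filters) for every cell in the stack."""
    layout = []
    size, filters = input_size, first_filters
    for stage in range(num_reductions + 1):
        # N Normal Cells: spatial size and filter count stay the same.
        for _ in range(n):
            layout.append(("normal", size, filters))
        if stage < num_reductions:
            # One Reduction Cell: halve height/width and double the filters.
            size //= 2
            filters *= 2
            layout.append(("reduction", size, filters))
    return layout


layout = stack_cells(n=2, first_filters=32, input_size=32)
for cell in layout:
    print(cell)
```

Only N and the first filter count are free parameters here; everything else follows from the fixed stacking pattern, which is what makes the searched cells reusable across datasets of different sizes.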

- 7. Method(2) • Composing the overall architecture • A controller (LSTM) is used to describe the Normal Cell and the Reduction Cell • 1 Block = 2 hidden-state inputs + 2 operations + 1 combine method (add or concat), 5 strings in total • Each Cell consists of B Blocks (in the paper, B = 5 performed well) • 5B predictions describing the Normal Cell + 5B predictions describing the Reduction Cell

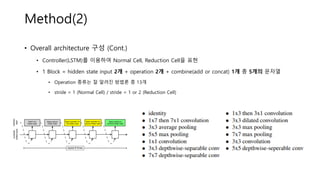

- 8. Method(2) • Composing the overall architecture (cont.) • A controller (LSTM) is used to describe the Normal Cell and the Reduction Cell • 1 Block = 2 hidden-state inputs + 2 operations + 1 combine method (add or concat), 5 strings in total • The operations are 13 choices taken from well-known methods • stride = 1 (Normal Cell) / stride = 1 or 2 (Reduction Cell)

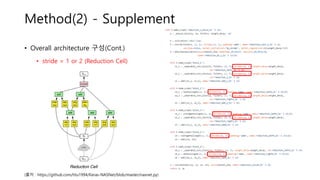

- 9. Method(2) - Supplement • Composing the overall architecture (cont.) • stride = 1 or 2 (Reduction Cell) (Source: https://github.com/titu1994/Keras-NASNet/blob/master/nasnet.py)

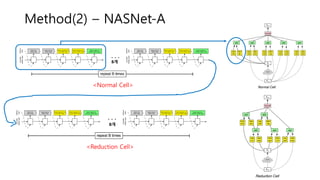

- 10. Method(2) - NASNet-A • Figure: the NASNet-A Normal Cell and Reduction Cell, each made up of B blocks

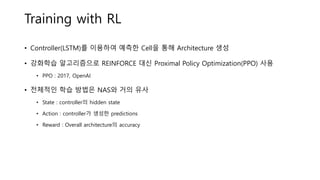
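The cell encoding described in the Method(2) slides (5 predictions per block, B blocks per cell, two cells) can be illustrated by sampling a random point from such a search space. This sketch uses uniform random sampling in place of the LSTM controller, and the operation names are placeholders (`op_0` ... `op_12`) rather than the actual 13 operations. The rule that a block may take earlier blocks of the same cell as input is stated here as an assumption, since the slides do not spell it out.

```python
import random
from typing import Dict, List

NUM_OPS = 13  # the slides state 13 candidate operations; names are placeholders
OPERATIONS = [f"op_{i}" for i in range(NUM_OPS)]
COMBINERS = ["add", "concat"]


def sample_cell(num_blocks: int, rng: random.Random) -> List[Dict[str, object]]:
    """Sample one cell as a list of blocks, each with 5 predictions."""
    cell = []
    for b in range(num_blocks):
        # Assumed candidates: 2 hidden states from outside the cell
        # (indices 0 and 1) plus the outputs of earlier blocks in this cell.
        num_candidates = 2 + b
        cell.append({
            "input_1": rng.randrange(num_candidates),
            "input_2": rng.randrange(num_candidates),
            "op_1": rng.choice(OPERATIONS),
            "op_2": rng.choice(OPERATIONS),
            "combine": rng.choice(COMBINERS),
        })
    return cell


def sample_architecture(num_blocks: int = 5, seed: int = 0) -> Dict[str, list]:
    """Sample a Normal Cell and a Reduction Cell (random stand-in for the controller)."""
    rng = random.Random(seed)
    return {
        "normal": sample_cell(num_blocks, rng),
        "reduction": sample_cell(num_blocks, rng),
    }


arch = sample_architecture()
num_predictions = sum(len(block) for cell in arch.values() for block in cell)
print(num_predictions)  # 2 cells * 5 predictions * B blocks = 50 for B = 5
```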

- 11. Training with RL • An architecture is built from the Cells predicted by the controller (LSTM) • Proximal Policy Optimization (PPO) is used as the reinforcement learning algorithm instead of REINFORCE • PPO: 2017, OpenAI • The overall training procedure is almost the same as in NAS • State: the controller's hidden state • Action: the predictions generated by the controller • Reward: the accuracy of the overall architecture

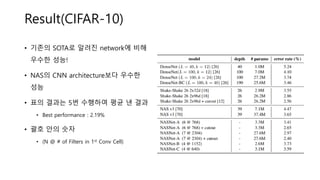
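To make the PPO bullet more concrete, here is a minimal sketch of the standard PPO clipped surrogate objective, applied to one sampled sequence of controller predictions. It is not the paper's training code: the function name, the `clip_eps` value of 0.2, the use of "accuracy minus a baseline" as the advantage, and the example log-probabilities are all assumptions for illustration.

```python
import math
from typing import Sequence


def ppo_clip_objective(new_log_probs: Sequence[float],
                       old_log_probs: Sequence[float],
                       advantage: float,
                       clip_eps: float = 0.2) -> float:
    """Average clipped surrogate over the controller's predictions."""
    terms = []
    for new_lp, old_lp in zip(new_log_probs, old_log_probs):
        # Probability ratio between the updated and the sampling-time policy.
        ratio = math.exp(new_lp - old_lp)
        # Keep the ratio within [1 - eps, 1 + eps] to limit the policy update.
        clipped_ratio = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        terms.append(min(ratio * advantage, clipped_ratio * advantage))
    return sum(terms) / len(terms)


# Hypothetical example: three predictions, child network accuracy 0.9,
# and a baseline (e.g. a running average of past accuracies) of 0.8.
old_lp = [math.log(0.25)] * 3
new_lp = [math.log(0.5), math.log(0.25), math.log(0.125)]
advantage = 0.9 - 0.8
objective = ppo_clip_objective(new_lp, old_lp, advantage)
print(objective)
```

The controller would be updated to increase this objective. The clipping is what distinguishes PPO from plain REINFORCE, which would weight the log-probabilities by the reward without bounding the update.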

- 12. Result(CIFAR-10) • Better performance than networks previously known as SOTA • Better performance than the CNN architecture found by NAS • Results in the table are averaged over 5 runs • Best performance: 2.19% (error rate) • Numbers in parentheses: (N @ # of Filters in 1st Conv Cell)

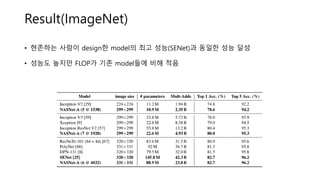

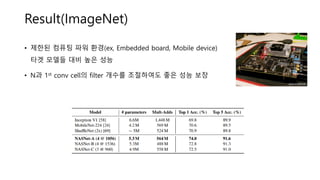

- 13. Result(ImageNet) • Matches the performance of the best existing human-designed model (SENet) • High performance with fewer FLOPs than existing models

- 14. Result(ImageNet) • Higher performance than models targeting environments with limited computing power (e.g., embedded boards, mobile devices) • Good performance is maintained even when N and the number of filters in the 1st conv cell are adjusted

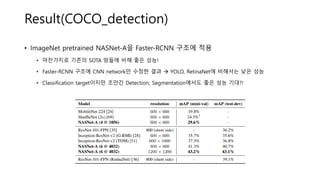

- 15. Result(COCO_detection) • ImageNet-pretrained NASNet-A is applied to the Faster-RCNN framework • Again, better performance than existing SOTA networks • This result changes only the CNN network inside Faster-RCNN → lower performance compared with YOLO and RetinaNet • The target here is classification, but good performance in detection and segmentation can be expected soon

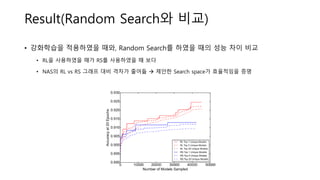

- 16. Result(Comparison with Random Search) • Compares the performance difference between applying reinforcement learning (RL) and using random search (RS) • RL performs better than RS • The gap is smaller than in the RL vs RS graph of NAS → shows that the proposed search space is efficient

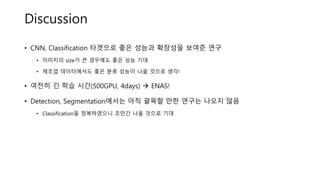

- 17. Discussion • A study that shows good performance and scalability for CNNs on classification • Good performance is also expected for large image sizes • Good classification performance is expected on manufacturing data as well • Training time is still long (500 GPUs, 4 days) → ENAS! • No notable research has appeared yet for detection and segmentation • Now that classification has been conquered, such work is expected to appear soon