Paper Introduction: Object Detection & Instance Segmentation | OHS Study Group #3

- 1. OHS#3 Paper Introduction: Object Detection & Instance Segmentation (半谷)

- 2. Contents
  - Object Detection
    - About the task
    - R-CNN
    - Faster R-CNN
    - How the Region Proposal Network works
    - SSD: Single Shot MultiBox Detector
  - Instance Segmentation
    - About the task
    - End-to-End Instance Segmentation and Counting with Recurrent Attention

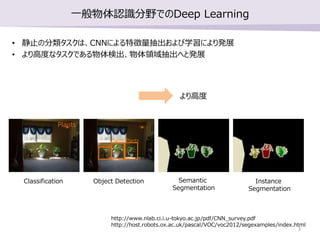

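- 3. Deep Learning in generic object recognition
  - Still-image classification has advanced through CNN-based feature extraction and learning.
  - The field has since moved on to more advanced tasks: object detection and object region extraction.
  - Figure: four example images, each labeled "Plants", ordered from less to more advanced: Classification, Object Detection, Semantic Segmentation, Instance Segmentation.
  - Sources: http://www.nlab.ci.i.u-tokyo.ac.jp/pdf/CNN_survey.pdf and http://host.robots.ox.ac.uk/pascal/VOC/voc2012/segexamples/index.html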

- 4. Object Detection. Paper introduced: SSD: Single Shot MultiBox Detector

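- 5. Object Detection
  - The goal is to detect multiple objects in an image with no misses and no duplicates.
  - The main metric is Average Precision (AP), computed from the precision-recall curve, i.e. the relationship between Precision (how accurate the detections are) and Recall (how completely the objects are detected).
  - With real-world applications in mind, prediction time matters as well as AP, and real-time performance is in demand.
  - Figure: precision-recall curve with both axes running up to 1; the area under the curve is the AP.
  - Source: http://host.robots.ox.ac.uk/pascal/VOC/voc2007/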
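The "area under the precision-recall curve" idea can be sketched as follows. This is a minimal illustration, not the official PASCAL VOC evaluation code: the function name `average_precision` and its arguments are assumptions, and it takes the area under the monotonically smoothed curve. The VOC 2007 protocol referenced on the slide used an 11-point interpolated variant, so values may differ slightly.

```python
def average_precision(scores, is_true_positive, num_ground_truth):
    """Area under the precision-recall curve for a single class.

    scores: confidence of each detection.
    is_true_positive: whether each detection matched a ground-truth object.
    num_ground_truth: number of ground-truth objects of this class.
    """
    # Rank detections from most to least confident.
    ranked = sorted(zip(scores, is_true_positive), key=lambda pair: -pair[0])

    tp = fp = 0
    recalls, precisions = [], []
    for _, hit in ranked:
        if hit:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))

    # Smooth the curve: precision at each point becomes the best
    # precision achievable at that recall or higher.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])

    # Sum the rectangles under the curve wherever recall increases.
    ap, prev_recall = 0.0, 0.0
    for recall, precision in zip(recalls, precisions):
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


# Toy example: four detections, two ground-truth objects.
example_ap = average_precision([0.9, 0.8, 0.7, 0.6], [True, False, True, False], 2)
```

In the toy example the first and third ranked detections are correct, so the false positive in between lowers the precision at full recall and the AP falls below 1.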

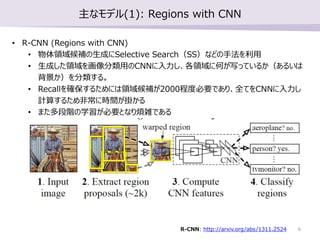

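- 6. Main models (1): Regions with CNN
  - R-CNN (Regions with CNN)
    - Uses a method such as Selective Search (SS) to generate object region candidates.
    - Each generated region is fed to an image-classification CNN, which classifies what the region contains (or whether it is background).
    - Securing enough recall takes around 2000 region candidates, and running every one of them through the CNN makes it very slow.
    - Training also requires multiple stages, which is cumbersome.
  - R-CNN: http://arxiv.org/abs/1311.2524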

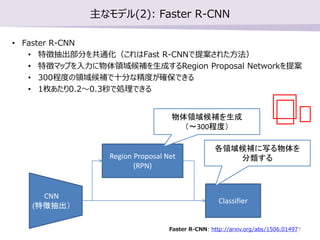

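- 7. Main models (2): Faster R-CNN
  - Faster R-CNN
    - Shares the feature extraction stage (a method proposed in Fast R-CNN).
    - Proposes the Region Proposal Network, which takes the feature map as input and generates object region candidates.
    - Around 300 region candidates are enough for sufficient accuracy.
    - Processes one image in 0.2 to 0.3 seconds.
  - Figure: CNN (feature extraction); Region Proposal Net (RPN), which generates object region candidates (around 300); Classifier, which classifies the object in each region candidate.
  - Faster R-CNN: http://arxiv.org/abs/1506.01497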

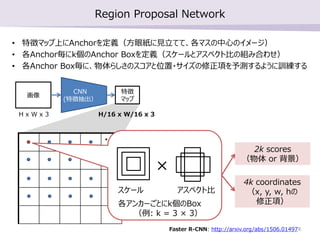

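- 8. Region Proposal Network
  - Anchors are defined on the feature map (think of it as graph paper, with an Anchor at the center of each cell).
  - k Anchor Boxes are defined for each Anchor (combinations of scale and aspect ratio).
  - For each Anchor Box, the network is trained to predict an objectness score and correction terms for position and size.
  - Figure: image (H x W x 3), CNN (feature extraction), feature map (H/16 x W/16 x 3). Each anchor has k boxes from scale × aspect ratio (e.g. k = 3 × 3), giving 2k scores (object or background) and 4k coordinates (correction terms for x, y, w, h).
  - Faster R-CNN: http://arxiv.org/abs/1506.01497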
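The anchor layout described above can be sketched in a few lines. This is an illustrative sketch rather than the authors' implementation: the function name `make_anchor_boxes` is hypothetical, the stride of 16 comes from the H/16 x W/16 feature map on the slide, and the scales (128, 256, 512) and aspect ratios (1:2, 1:1, 2:1) are assumed defaults taken from the Faster R-CNN paper.

```python
import math


def make_anchor_boxes(feat_h, feat_w, stride=16,
                      scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Return a list of (cx, cy, w, h) anchor boxes in image pixels.

    Each feature-map cell contributes k = len(scales) * len(ratios) boxes.
    """
    boxes = []
    for row in range(feat_h):
        for col in range(feat_w):
            # The Anchor is the center of this cell, mapped back to the image.
            cx = (col + 0.5) * stride
            cy = (row + 0.5) * stride
            for scale in scales:
                for ratio in ratios:  # ratio = height / width
                    # Keep the area at scale**2 while changing the shape.
                    w = scale / math.sqrt(ratio)
                    h = scale * math.sqrt(ratio)
                    boxes.append((cx, cy, w, h))
    return boxes


# A 2 x 3 feature map with k = 9 boxes per anchor gives 54 boxes.
anchor_boxes = make_anchor_boxes(2, 3)
k = 9
outputs_per_anchor = (2 * k, 4 * k)  # 2k scores and 4k coordinate corrections
```

With k = 9, the network would output 18 scores and 36 coordinate corrections at every feature-map position, matching the 2k and 4k figures on the slide.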

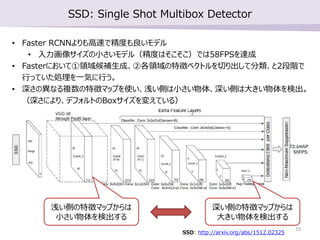

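- 9. SSD: Single Shot MultiBox Detector
  - A model that is both faster and more accurate than Faster R-CNN.
  - The model with a small input image size (with moderate accuracy) achieves 58 FPS.
  - Performs in one pass what Faster R-CNN did in two stages: (1) generating region candidates and (2) cropping each region's feature vector and classifying it.
  - Uses multiple feature maps of different depths: the shallow ones detect small objects, the deep ones detect large objects.
  - Figure, Faster R-CNN: CNN (feature extraction); Region Proposal Net (RPN), (1) generates object region candidates (objectness score); Classifier, (2) classifies into each class.
  - Figure, SSD: CNN (feature extraction); Region Proposal + Classifier, generates object region candidates (per-class scores).
  - SSD: http://arxiv.org/abs/1512.02325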

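- 10. SSD: Single Shot MultiBox Detector
  - A model that is both faster and more accurate than Faster R-CNN.
  - The model with a small input image size (with moderate accuracy) achieves 58 FPS.
  - Performs in one pass what Faster R-CNN did in two stages: (1) generating region candidates and (2) cropping each region's feature vector and classifying it.
  - Uses multiple feature maps of different depths: the shallow ones detect small objects, the deep ones detect large objects. (The default Box size changes with depth.)
  - Figure: small objects are detected from the shallow feature maps; large objects are detected from the deep feature maps.
  - SSD: http://arxiv.org/abs/1512.02325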
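How the default Box size might change with depth can be sketched as a linear schedule over the feature maps. This is a sketch under stated assumptions: the function name `default_box_scales` is hypothetical, the linear rule follows the scale formula given in the SSD paper, and the endpoints 0.2 and 0.9 (as fractions of the input image size) and the count of 6 feature maps should be treated as assumed example values.

```python
def default_box_scales(num_maps, s_min=0.2, s_max=0.9):
    """Scale of the default boxes for each feature map, shallow to deep.

    Scales are fractions of the input image size and grow linearly
    from s_min (shallowest map) to s_max (deepest map).
    """
    if num_maps < 2:
        raise ValueError("need at least two feature maps")
    step = (s_max - s_min) / (num_maps - 1)
    return [s_min + step * k for k in range(num_maps)]


# Assumed example: 6 feature maps on a 300 x 300 input.
scales = default_box_scales(6)
box_sizes_px = [round(s * 300) for s in scales]
```

The shallowest map gets the smallest boxes and the deepest map the largest, which is the division of labor between shallow and deep feature maps described on the slide.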

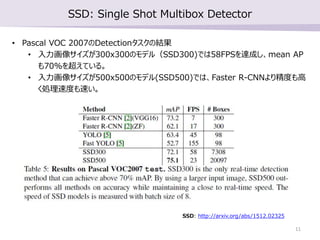

- 11. SSD: Single Shot MultiBox Detector
  - Results on the Pascal VOC 2007 Detection task
    - The model with a 300x300 input image (SSD300) achieves 58 FPS with a mean AP above 70%.
    - The model with a 500x500 input image (SSD500) is both more accurate and faster than Faster R-CNN.
  - SSD: http://arxiv.org/abs/1512.02325

- 12. Instance Segmentation. Paper introduced: End-to-End Instance Segmentation and Counting with Recurrent Attention

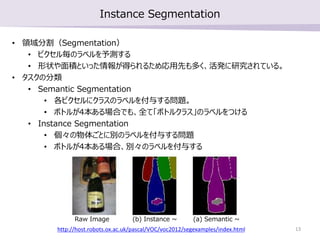

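- 13. Instance Segmentation
  - Segmentation
    - Predicts a label for every pixel.
    - It yields information such as shape and area, so it has many applications and is actively researched.
  - Task types
    - Semantic Segmentation
      - Assigns a class label to each pixel.
      - Even when there are four bottles, all of them get the "bottle class" label.
    - Instance Segmentation
      - Assigns a separate label to each individual object.
      - When there are four bottles, each gets its own label.
  - Figure: Raw Image, (a) Semantic ~, (b) Instance ~
  - Source: http://host.robots.ox.ac.uk/pascal/VOC/voc2012/segexamples/index.html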
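The four-bottle example can be made concrete with a toy label map. The data and the names `instance_map`, `instance_class`, and `to_semantic` are made up for illustration; the point is only that collapsing instance ids into class names loses the count of objects.

```python
# Toy instance label map: 0 = background, 1..4 = four separate bottles.
instance_map = [
    [1, 1, 0, 2, 2],
    [1, 1, 0, 2, 2],
    [0, 0, 0, 0, 0],
    [3, 3, 0, 4, 4],
]

# Hypothetical lookup from instance id to class name.
instance_class = {1: "bottle", 2: "bottle", 3: "bottle", 4: "bottle"}


def to_semantic(instance_map, instance_class, background="background"):
    """Replace every instance id with its class name (semantic labels)."""
    return [[instance_class.get(pixel, background) for pixel in row]
            for row in instance_map]


semantic_map = to_semantic(instance_map, instance_class)

# Instance segmentation keeps the four bottles apart ...
num_instances = len({pixel for row in instance_map for pixel in row if pixel != 0})
# ... while semantic segmentation only knows "bottle" versus "background".
semantic_labels = {label for row in semantic_map for label in row}
```

Going from the instance map to the semantic map is a simple lookup, but the reverse is not possible without extra information, which is why instance segmentation is the harder task.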

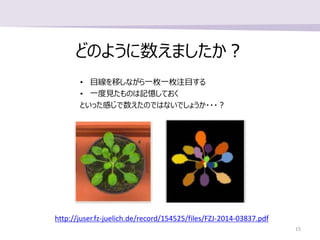

- 16. End-to-End Instance Segmentation and Counting with Recurrent Attention
  - A neural network for Instance Segmentation.
  - At each step it attends to one object and segments it.
  - It remembers the regions it has already seen (modeled on how humans count).
  - End-to-End Instance Segmentation and Counting with Recurrent Attention: https://arxiv.org/abs/1605.09410

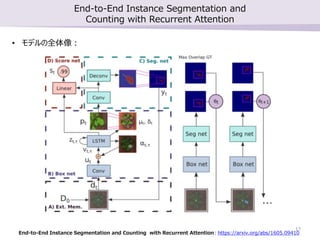

- 17. End-to-End Instance Segmentation and Counting with Recurrent Attention
  - Overview of the model:

- 18. End-to-End Instance Segmentation and Counting with Recurrent Attention
  - The component that remembers the regions already seen.

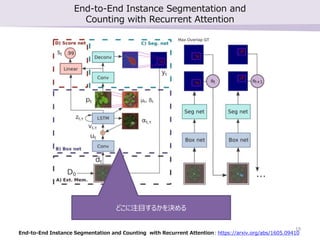

- 19. End-to-End Instance Segmentation and Counting with Recurrent Attention
  - Decides where to attend.

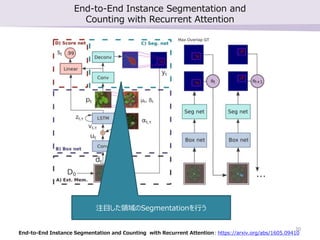

- 20. End-to-End Instance Segmentation and Counting with Recurrent Attention
  - Segments the attended region.

- 21. End-to-End Instance Segmentation and Counting with Recurrent Attention
  - Judges whether an object was found (the loop ends once the score falls to 0.5 or below).

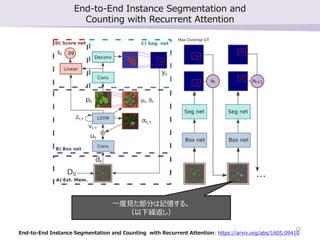

- 22. End-to-End Instance Segmentation and Counting with Recurrent Attention
  - Remembers the part that was just seen. (The steps then repeat.)
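The step-by-step loop walked through on slides 18 to 22 can be sketched as plain control flow. This is a toy illustration, not the paper's model: in the paper each stage is a learned network component, whereas here `make_toy_step` is a hand-written, hypothetical stand-in that returns a mask and a score, and `segment_instances` only shows the attend, segment, score, and remember cycle with the 0.5 stopping rule.

```python
def segment_instances(step_fn, max_steps=20, stop_threshold=0.5):
    """Run the attend / segment / score / remember loop.

    step_fn(canvas) -> (mask, score), where canvas and mask are sets of
    (row, col) pixels and score says how confident the step is that it
    found a new object.
    """
    canvas = set()      # memory of the regions already seen
    instances = []
    for _ in range(max_steps):
        mask, score = step_fn(canvas)   # attend to one object and segment it
        if score <= stop_threshold:     # no further object found: stop
            break
        instances.append(mask)
        canvas |= mask                  # remember the part just seen
    return instances, canvas


def make_toy_step(objects):
    """Hypothetical stand-in for the network: pick the first unseen object."""
    def step(canvas):
        for obj in objects:
            if not obj <= canvas:       # not yet fully covered by the memory
                return obj, 0.9
        return set(), 0.1               # everything has been seen
    return step


# Three toy objects given as pixel sets.
toy_objects = [{(0, 0), (0, 1)}, {(2, 2)}, {(3, 0), (3, 1)}]
found, seen = segment_instances(make_toy_step(toy_objects))
object_count = len(found)
```

Because one instance is emitted per step, the number of steps taken before the score drops doubles as the object count, which is how segmentation and counting come out of the same loop.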

- 23. End-to-End Instance Segmentation and Counting with Recurrent Attention
  - Results (1): leaf segmentation

- 24. End-to-End Instance Segmentation and Counting with Recurrent Attention
  - Results (2): vehicle segmentation

Editor's Notes

- #11: Video: https://drive.google.com/file/d/0BzKzrI_SkD1_R09NcjM1eElLcWc/view?pref=2&pli=1 Code: https://github.com/weiliu89/caffe/tree/ssd
