Introducing Relay, TVM's next-generation graph IR

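- 2. Note: this lightning talk is a follow-up to 『XX for ML 論文読み会 #1』 (XX for ML paper reading group #1), held on 11/3
  - Last time I presented the Relay paper
    - /bonotake/relay-a-new-ir-for-machine-learning-frameworks
  - Today's talk also draws on the source code available on GitHub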

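- 3. About me: Takeo Imai (bonotake), freelance engineer and project researcher at the National Institute of Informatics (upcoming)
  - Research and development of deep learning compilers
  - Books (co-translated)
    - D. Jackson, 『抽象によるソフトウェア設計』 (the Alloy book)
    - B. Pierce, 『型システム入門』 (TAPL)
  - Other activities
    - Founding member and current steering committee member of the Machine Learning Systems Engineering (MLSE) group of the Japan Society for Software Science and Technology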

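- 4. Articles I wrote
  - Interface, January issue (on sale 11/25)
    - 『実験研究 注目AIコンパイラで 広がる組み込み人工知能の世界』 (an experimental study of how notable AI compilers are expanding embedded AI), with Yamada-san of LeapMind
  - 情報処理 (IPSJ Magazine), January 2019 issue
    - 『機械学習応用システムの開発・運用環境』 (development and operation environments for machine-learning-based systems), with Ota-san of BrainPad
  - Note: the images shown are not from the issues in which these articles appear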

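- 5. TVM
  - A deep learning compiler developed mainly by a group at the University of Washington (strictly speaking, DMLC)
  - Author: Tianqi Chen
    - Author of XGBoost, a favorite among Kagglers
    - Early developer of MXNet
  - The most popular of the OSS compilers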

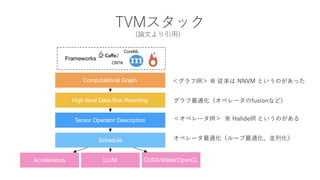

- 6. The TVM stack (figure quoted from the paper; MAPL'18, June 18, 2018, Philadelphia, PA, USA)
  - Figure labels: Frameworks (CNTK, CoreML), Computational Graph, High level Data-flow Rewriting, Tensor Operator Description, Schedule, LLVM, Accelerators, CUDA/Metal/OpenCL
  - <Graph IR> (note: previously there was NNVM)
  - Graph optimization (operator fusion, etc.)
  - Operator optimization (loop optimization, parallelization)
  - <Operator IR> (note: there is HalideIR)

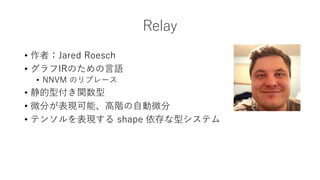

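- 7. Relay
  - Author: Jared Roesch
  - A language for the graph IR
    - Replaces NNVM
  - Statically typed and functional
  - Can express differentiation; higher-order automatic differentiation
  - A shape-dependent type system for representing tensors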

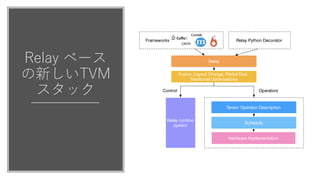

- 8. The new Relay-based TVM stack (Figure 2 of the paper, "The new TVM stack integrated with Relay")
  - Figure labels: Frameworks (CNTK, CoreML), Relay Python Decorator, Relay (Fusion, Layout Change, Partial Eval, Traditional Optimizations), Tensor Operator Description, Schedule, Hardware Implementation, Operators, Control, Relay runtime system

- 9. The new Relay-based TVM stack (Figure 2 of the paper, "The new TVM stack integrated with Relay", with the presenter's comment)
  - Rather than being a part of TVM, Relay looks like a new general-purpose graph IR or a DSL for deep learning, with TVM becoming its backend (the presenter's subjective view)

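- 10. Current state of Relay
  - Still under active development on TVM's GitHub
  - About 9,000 lines of C++ plus about 2,000 lines of Python (as of 11/10)
  - In the past week, a backend directory was added (a compiler and an interpreter independent of TVM)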

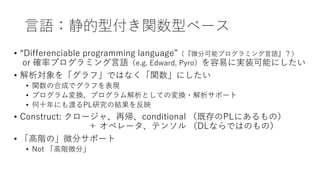

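- 11. Language: statically typed, functional
  - Goal: make it easy to implement a "differentiable programming language" or a probabilistic programming language (e.g. Edward, Pyro)
  - Make "functions", not "graphs", the object of analysis
    - Graphs are expressed as compositions of functions
    - Transformations and analyses are supported as program transformation and program analysis
    - Reflects decades of PL research
  - Constructs: closures, recursion, conditionals (found in existing PLs) + operators, tensors (specific to DL)
  - Support for differentiating "higher-order" programs
    - Not "higher-order derivatives"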
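To make "graphs as compositions of functions" concrete, here is a minimal Python sketch of a tiny functional IR with closures, conditionals and primitive operators. Every name in it (`Const`, `Var`, `Op`, `Func`, `Call`, `If`, `PRIMS`, `evaluate`) is hypothetical and invented for illustration; it is not Relay's actual IR or API, and it uses plain numbers where Relay would use tensors.

```python
from dataclasses import dataclass
from typing import Any, Tuple


@dataclass(frozen=True)
class Const:
    value: Any


@dataclass(frozen=True)
class Var:
    name: str


@dataclass(frozen=True)
class Op:
    name: str  # a primitive operator, looked up in PRIMS


@dataclass(frozen=True)
class Func:
    params: Tuple[str, ...]
    body: Any


@dataclass(frozen=True)
class Call:
    fn: Any
    args: Tuple[Any, ...]


@dataclass(frozen=True)
class If:
    cond: Any
    then: Any
    orelse: Any


# Hypothetical primitive operators (scalars stand in for tensors).
PRIMS = {
    "add": lambda a, b: a + b,
    "mul": lambda a, b: a * b,
    "le": lambda a, b: a <= b,
}


def evaluate(expr, env):
    """Evaluate an expression in an environment mapping names to values."""
    if isinstance(expr, Const):
        return expr.value
    if isinstance(expr, Var):
        return env[expr.name]
    if isinstance(expr, Op):
        return PRIMS[expr.name]
    if isinstance(expr, Func):
        # A closure: the function value captures the defining environment.
        return lambda *args: evaluate(
            expr.body, {**env, **dict(zip(expr.params, args))}
        )
    if isinstance(expr, If):
        branch = expr.then if evaluate(expr.cond, env) else expr.orelse
        return evaluate(branch, env)
    if isinstance(expr, Call):
        fn = evaluate(expr.fn, env)
        return fn(*[evaluate(a, env) for a in expr.args])
    raise TypeError(f"unknown expression: {expr!r}")


# compose(f, g) = fun x -> f(g(x)); a "graph" is just a composed function.
compose = Func(("f", "g"), Func(("x",), Call(Var("f"), (Call(Var("g"), (Var("x"),)),))))
double = Func(("x",), Call(Op("mul"), (Var("x"), Const(2))))
inc = Func(("x",), Call(Op("add"), (Var("x"), Const(1))))
pipeline = Call(compose, (double, inc))

# A conditional inside a function: a scalar ReLU.
relu = Func(("x",), If(Call(Op("le"), (Var("x"), Const(0))), Const(0), Var("x")))
```

Because the whole network is an ordinary expression tree, a pass over it is a plain recursive function over these node types, which is the sense in which graph analyses become program analyses.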

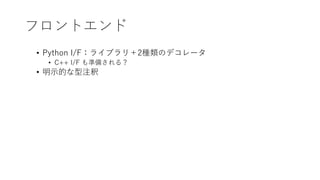

- 12. Frontend
  - Python interface: a library plus two kinds of decorators
    - A C++ interface may also be provided (?)
  - Explicit type annotations

- 13. Relay in Python, example (1): describing the network

  ```python
  @relay_model
  def lenet(x: Tensor[Float, (1, 28, 28)]) -> Tensor[Float, 10]:
      conv1 = relay.conv2d(x, num_filter=20, ksize=[1, 5, 5, 1], no_bias=False)
      tanh1 = relay.tanh(conv1)
      pool1 = relay.max_pool(tanh1, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1])
      conv2 = relay.conv2d(pool1, num_filter=50, ksize=[1, 5, 5, 1], no_bias=False)
      tanh2 = relay.tanh(conv2)
      pool2 = relay.max_pool(tanh2, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1])
      flatten = relay.flatten_layer(pool2)
      fc1 = relay.linear(flatten, num_hidden=500)
      tanh3 = relay.tanh(fc1)
      return relay.linear(tanh3, num_hidden=10)
  ```

- 14. Relay in Python, example (2): the actual training

  ```python
  @relay
  def loss(x: Tensor[Float, (1, 28, 28)], y: Tensor[Float, 10]) -> Float:
      return relay.softmax_cross_entropy(lenet(x), y)

  @relay
  def train_lenet(training_data: Tensor[Float, (60000, 1, 28, 28)]) -> Model:
      model = relay.create_model(lenet)
      for x, y in data:  # `data` as written on the slide; presumably `training_data`
          model_grad = relay.grad(model, loss, (x, y))
          relay.update_model_params(model, model_grad)
      return relay.export_model(model)

  training_data, test_data = relay.datasets.mnist()
  model = train_lenet(training_data)
  print(relay.argmax(model(test_data[0])))
  ```

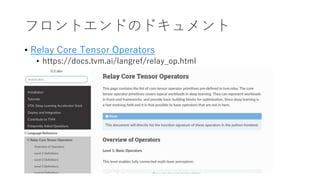

- 15. Frontend documentation
  - Relay Core Tensor Operators
    - https://docs.tvm.ai/langref/relay_op.html

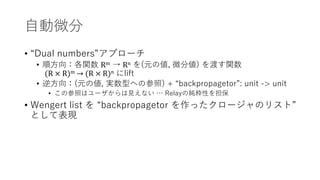

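- 16. Automatic differentiation
  - The "dual numbers" approach
  - Forward mode: lift each function R^m → R^n to a function (R × R)^m → (R × R)^n that passes (original value, derivative) pairs
  - Reverse mode: (original value, reference to a real) + a "backpropagator": unit -> unit
    - The reference is invisible to the user, which preserves Relay's purity
    - The Wengert list is represented as "a list of the closures that make up the backpropagator"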
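The two modes described above can be sketched in a few lines of Python. This is only an illustration under my own assumptions, not Relay's implementation: the names `Dual`, `Ref`, `Node`, `forward_derivative` and `reverse_gradient` are hypothetical, only `+` and `*` are supported, and the tape here is an explicit Python list where the slide describes a reference hidden from the user.

```python
from dataclasses import dataclass


# Forward mode: every real is lifted to a (value, derivative) pair.
@dataclass(frozen=True)
class Dual:
    val: float
    der: float

    def __add__(self, other):
        return Dual(self.val + other.val, self.der + other.der)

    def __mul__(self, other):
        # Product rule on the derivative component.
        return Dual(self.val * other.val,
                    self.der * other.val + self.val * other.der)


def forward_derivative(f, x):
    """Derivative of a one-argument function f at x, via dual numbers."""
    return f(Dual(x, 1.0)).der


# Reverse mode: every real is paired with a mutable reference that
# accumulates its gradient, and each operation records a closure
# (a "backpropagator" of type unit -> unit) on a shared tape.
class Ref:
    def __init__(self):
        self.grad = 0.0


class Node:
    def __init__(self, val, tape):
        self.val = val
        self.ref = Ref()
        self.tape = tape

    def __add__(self, other):
        out = Node(self.val + other.val, self.tape)

        def backprop():
            self.ref.grad += out.ref.grad
            other.ref.grad += out.ref.grad

        self.tape.append(backprop)
        return out

    def __mul__(self, other):
        out = Node(self.val * other.val, self.tape)

        def backprop():
            self.ref.grad += other.val * out.ref.grad
            other.ref.grad += self.val * out.ref.grad

        self.tape.append(backprop)
        return out


def reverse_gradient(f, *xs):
    """Gradient of f at xs: run f, then replay the closures in reverse."""
    tape = []  # plays the role of the Wengert list
    inputs = [Node(x, tape) for x in xs]
    out = f(*inputs)
    out.ref.grad = 1.0
    for backprop in reversed(tape):
        backprop()
    return [v.ref.grad for v in inputs]
```

For example, `forward_derivative(lambda x: x * x + x, 3.0)` differentiates x² + x at 3, and `reverse_gradient(lambda x, y: x * y + x, 2.0, 5.0)` returns the partial derivatives with respect to `x` and `y`. Callers of `reverse_gradient` never see the `Ref` objects, which is the property the slide credits with keeping the user-facing language pure.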

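- 17. And the implementation...?
  - I could not find any such code on GitHub
    - Unpublished?
    - I doubt it is completely unimplemented
  - An automatic differentiation implementation written by someone else has been posted as an issue (https://github.com/dmlc/tvm/issues/1996)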

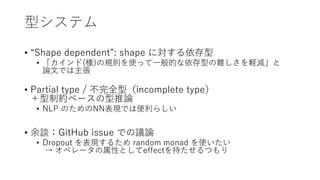

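- 18. Type system
  - "Shape dependent": types that depend on shapes
    - The paper claims that it "uses kind rules to mitigate the difficulty of general dependent types"
  - Partial types / incomplete types + constraint-based type inference
    - Said to be convenient for representing NNs for NLP
  - Aside: a discussion in a GitHub issue
    - They wanted to use a random monad to express Dropout → the plan is to attach effects as operator attributes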

- 19. Looking at the implementation: please see the actual code. For reference:
  - include/tvm/relay/type.h
  - src/tvm/relay/op/nn/convolution.cc
  - src/tvm/relay/pass/type_solver.cc
  - src/tvm/relay/pass/type_infer.cc

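- 20. How shape-dependent types work
  - Each Op is assigned a TypeRelation object (an instance of a subclass), and the checking code inside that object runs during type checking
  - "TypeRelation inference" runs as a graph-level optimization pass
    - The usual shape inference?
  - Impressions
    - I have not checked how far the inference actually goes (perhaps it does not do much?)
    - The implementation is very much designed around incomplete types
    - TypeRelations are supplied per Op, as a large pile of user-defined code
    - Each TypeRelation class can be defined independently of any Op, so Ops with the same TypeRelation can share it
      - But isn't Identity about the only such case?
    - Where is the correctness of the TypeRelation implementation code guaranteed?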
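The mechanism above (per-operator relations plus incomplete types resolved by a solver) can be sketched roughly as follows in Python. This is a simplified illustration, not the code in `type_solver.cc`: the names `identity_rel`, `dense_rel` and `solve` are hypothetical, a type is reduced to a shape tuple, and `None` stands for an incomplete type.

```python
from typing import Callable, Dict, List, Optional, Tuple

Shape = Optional[Tuple[int, ...]]  # None means "incomplete type, not yet known"
Relation = Callable[[List[Shape]], List[Shape]]


def identity_rel(types: List[Shape]) -> List[Shape]:
    """Input and output share one shape; fill in whichever side is missing."""
    inp, out = types
    if inp is not None and out is not None and inp != out:
        raise TypeError(f"shape mismatch: {inp} vs {out}")
    known = inp if inp is not None else out
    return [known, known]


def dense_rel(units: int) -> Relation:
    """Relation for a fully connected layer producing `units` outputs."""
    def rel(types: List[Shape]) -> List[Shape]:
        inp, out = types
        if inp is None:
            return [inp, out]  # not enough information yet; retry later
        inferred = inp[:-1] + (units,)
        if out is not None and out != inferred:
            raise TypeError(f"shape mismatch: {out} vs {inferred}")
        return [inp, inferred]
    return rel


def solve(constraints: List[Tuple[Relation, List[str]]],
          env: Dict[str, Shape]) -> Dict[str, Shape]:
    """Re-run every relation until no incomplete type can be refined further."""
    env = dict(env)
    changed = True
    while changed:
        changed = False
        for rel, names in constraints:
            refined = rel([env[n] for n in names])
            for name, shape in zip(names, refined):
                if env[name] != shape:
                    env[name] = shape
                    changed = True
    return env


# h = tanh(x); y = dense(h, units=10). Only the shape of x is known up front,
# and the dense constraint is listed first, so it must wait for tanh to resolve h.
constraints = [
    (dense_rel(10), ["h", "y"]),
    (identity_rel, ["x", "h"]),  # the same relation could serve relu, etc.
]
result = solve(constraints, {"x": (1, 500), "h": None, "y": None})
```

Here `identity_rel` is written once and could be attached to any elementwise operator, which mirrors the reuse mentioned in the slide. The open question from the slide also applies to this sketch: nothing checks that a relation such as `dense_rel` agrees with what the operator actually computes.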

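- 21. That said
  - It is a good thing that shape checking/inference and dimension checking, which used to be done ad hoc, are being put on a systematic footing
  - So I am looking forward to what comes next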

- 22. The end

Editor's Notes

- #4: Title: Toward new definitions of equivalence in verifying deep learning compilers Author name: Takeo Imai Author affiliation: LeapMind Inc. (Currently, he is a freelance engineer and also at National Institute of Informatics) Abstract (word count: 373/500): A deep learning compiler is a compiler that takes a deep neural network (or DNN) as an input, optimizes it for efficient computation, and outputs code that runs on hardware or a platform. Optimizations applied during the compilation include graph optimizations like operator fusion and tensor optimizations like loop optimizations for matrix multiplication and accumulation. In addition to those classical optimizations, a deep learning compiler often applies optimizations specific to deep learning accelerators. One common example is quantization, which reduces the bit length of parameters and their computations in a DNN. A compiler may quantize 32-bit float values into n-bit integers, where n = 8 is most common and n = 1 or 2 for some specific hardware devices. In this talk, we shed light on the difficulties in defining what equivalence means for deep learning compilers. For compilers of ordinary programming languages, the behavior of a program before/after compilation must be equivalent, regardless of optimization passes applied in the compilation process. It is commonly understood that having the “same” behavior is not to have exactly the same output values from the same input, and output values that include tiny errors, like rounding errors in floating point operations, are generally accepted according to the ordinary equivalence criteria. A deep learning compiler, however, sometimes produces code that does not keep the equivalence in a classical sense; for example, a tiny rounding error caused at a hidden layer may change the value from 0 to 1 after a 1-bit quantization, which may bring a completely different final classification result compared with the original DNN’s behavior. 
This is because the final output of a DNN is discrete-valued. The classical equivalence criteria do not take into consideration such tiny-error, big-difference cases. This is a fundamental issue in the equivalence verification of deep learning compilers. We consider that we need to start from redefining the equivalence of DNN computation, or “relaxing” the equivalence criteria in order that some difference of individual discrete-valued results can be acceptable. And then, we need to propose new testing or verification methods according to the new equivalence criteria. We present issues around the correctness of deep learning compilers described above, and offer a direction for our future work about DNN compilation.

- #20: include/tvm/relay/type.h src/tvm/relay/op/nn/convolution.cc

