Quantum Annealing Explained 1

- 1. Quantum Annealing Explained 1 (2012/10, @_kohta)

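- 2. Outline
  - Introduction to quantum mechanics
    - States, pure states, and mixed states
  - The classical world and the quantum world
  - The quantum world
    - Pure states and mixed states
  - Applications to machine learning
    - Classification problems
  - Optimization by quantum annealing
  - To be continued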

- 3. Introduction to Quantum Mechanics (background image: http://personal.ashland.edu/rmichael/courses/phys403/phys403.html)

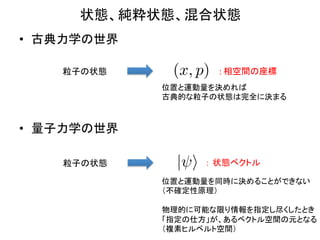

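- 4. States, pure states, and mixed states
  - The world of classical mechanics
    - The state of a particle is $(x, p)$, a point in phase space.
    - Specifying the position and momentum completely determines the state of a classical particle.
  - The world of quantum mechanics
    - The state of a particle is $|\psi\rangle$, a state vector.
    - Position and momentum cannot both be specified at the same time (the uncertainty principle).
    - When as much information as is physically possible has been specified, the "way of specifying it" is an element of a vector space (a complex Hilbert space).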

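- 5. States, pure states, and mixed states
  - Pure states
    - The "state in which as much information as is physically possible has been specified" described above is called a pure state.
    - Pure states are the objects of ordinary quantum mechanics. They obey the (time-independent) Schrödinger equation
      $$H|\psi\rangle = E|\psi\rangle,$$
      where $H$ is the Hamiltonian of the system and $E$ is an energy eigenvalue.
    - The Hamiltonian is a (Hermitian) operator acting on state vectors. In finite dimensions it can be written as a matrix.
      - In short, this is the eigenvalue problem for the matrix $H$.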

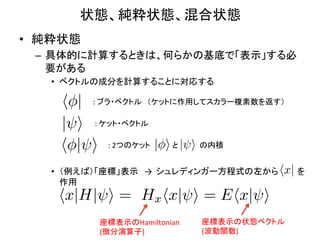
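The slide reduces the Schrödinger equation to a matrix eigenvalue problem, and that reduction can be sketched in a few lines of NumPy. The 2×2 Hermitian matrix below is an arbitrary example chosen for illustration. It is not a physical system from the slides.

```python
import numpy as np

# Arbitrary 2x2 Hermitian matrix standing in for a Hamiltonian H (illustrative values).
H = np.array([[1.0, 0.5 - 0.5j],
              [0.5 + 0.5j, -1.0]])
assert np.allclose(H, H.conj().T)  # Hermitian: H equals its conjugate transpose

# eigh is NumPy's solver for Hermitian matrices: it returns real eigenvalues
# in ascending order and orthonormal eigenvectors as the columns of V.
E, V = np.linalg.eigh(H)

for n in range(len(E)):
    psi = V[:, n]
    # Each column satisfies the eigenvalue equation H|psi> = E|psi>.
    assert np.allclose(H @ psi, E[n] * psi)
    print(f"E_{n} = {E[n]:+.4f}")
```

Because $H$ is Hermitian, its eigenvalues (here $\pm\sqrt{1.5}$) are real, as energies must be.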

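- 6. States, pure states, and mixed states
  - Pure states
    - To compute anything concretely, the state must be "represented" in some basis.
      - This corresponds to computing the components of a vector.
    - $\langle\phi|$ is a bra vector: it acts on a ket and returns a complex scalar.
    - $|\psi\rangle$ is a ket vector.
    - $\langle\phi|\psi\rangle$ is the inner product of the two kets $|\phi\rangle$ and $|\psi\rangle$.
    - For example, take the position representation. Applying $\langle x|$ from the left to the Schrödinger equation gives
      $$\langle x|H|\psi\rangle = H_x\langle x|\psi\rangle = E\langle x|\psi\rangle,$$
      where $H_x$ is the Hamiltonian in the position representation (a differential operator) and $\langle x|\psi\rangle$ is the state vector in the position representation (the wave function).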

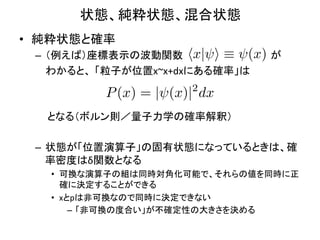
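One way to see that $H_x$ is a differential operator is to discretize it on a grid. The sketch below rests on three assumptions that are not on the slide:

- units $\hbar = m = \omega = 1$;
- a harmonic potential $V(x) = x^2/2$;
- a uniform grid on $[-10, 10]$.

It replaces $d^2/dx^2$ with a central finite difference and recovers the familiar energies $n + 1/2$.

```python
import numpy as np

# Assumptions (not from the slides): hbar = m = omega = 1,
# harmonic potential V(x) = x^2 / 2, uniform grid on [-10, 10].
N = 1000
x = np.linspace(-10.0, 10.0, N)
dx = x[1] - x[0]

# Central difference (psi[i-1] - 2 psi[i] + psi[i+1]) / dx^2, with psi = 0 outside the grid.
main = np.full(N, -2.0)
off = np.ones(N - 1)
laplacian = (np.diag(main) + np.diag(off, 1) + np.diag(off, -1)) / dx**2

# Position-representation Hamiltonian H_x = -(1/2) d^2/dx^2 + V(x) as a matrix.
H = -0.5 * laplacian + np.diag(0.5 * x**2)

E, V = np.linalg.eigh(H)
# Column n of V approximates the wave function <x|psi_n> sampled on the grid.
print(np.round(E[:3], 3))  # the exact values are 0.5, 1.5, 2.5
```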

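- 7. States, pure states, and mixed states
  - Pure states and probability
    - For example, once the position-representation wave function $\langle x|\psi\rangle \equiv \psi(x)$ is known, the probability that the particle lies between $x$ and $x + dx$ is
      $$P(x) = |\psi(x)|^2\,dx.$$
      This is the Born rule, i.e. the probabilistic interpretation of quantum mechanics.
    - When the state is an eigenstate of the position operator, the probability density is a δ function.
      - Commuting operators can be diagonalized simultaneously, so their values can all be determined exactly at the same time.
      - $x$ and $p$ do not commute, so they cannot be determined simultaneously.
    - The "degree of non-commutativity" sets the size of the uncertainty.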

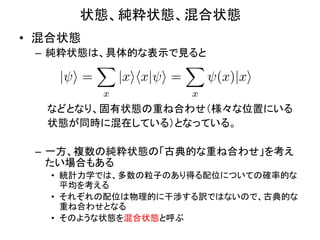
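The Born rule and the role of commutativity can be checked numerically. This is a minimal sketch with two assumptions:

- The wave function is taken to be the normalized Gaussian $\psi(x) = \pi^{-1/4} e^{-x^2/2}$.
- Small diagonal and Pauli matrices stand in for commuting and non-commuting observables. The true $x$ and $p$ need an infinite-dimensional space.

```python
import math
import numpy as np

# Assumed example wave function: the normalized Gaussian
# psi(x) = pi^(-1/4) exp(-x^2 / 2), sampled on a fine grid.
x = np.linspace(-10.0, 10.0, 20001)
dx = x[1] - x[0]
psi = np.pi ** -0.25 * np.exp(-x**2 / 2)

# Born rule: P(x) = |psi(x)|^2 dx.
prob = np.abs(psi) ** 2 * dx
total = prob.sum()                          # should be 1
inside = prob[np.abs(x) <= 1.0].sum()       # P(-1 <= x <= 1), analytically erf(1)
print(total, inside, math.erf(1.0))

# Finite-dimensional stand-ins for (non-)commuting observables.
A = np.diag([1.0, 2.0])
B = np.diag([3.0, -1.0])
X = np.array([[0.0, 1.0], [1.0, 0.0]])   # Pauli X
Z = np.array([[1.0, 0.0], [0.0, -1.0]])  # Pauli Z

def commutes(P, Q):
    """True if the commutator PQ - QP vanishes."""
    return np.allclose(P @ Q - Q @ P, 0.0)

print(commutes(A, B))  # True: both are diagonal in the same basis
print(commutes(X, Z))  # False: they share no common eigenbasis
```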

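- 8. States, pure states, and mixed states
  - Mixed states
    - In a concrete representation, a pure state looks like
      $$|\psi\rangle = \sum_x |x\rangle\langle x|\psi\rangle = \sum_x \psi(x)|x\rangle.$$
      This is a superposition of eigenstates: states at different positions coexist simultaneously.
    - On the other hand, we sometimes want a "classical superposition" of several pure states.
      - In statistical mechanics, one takes a probabilistic average over the possible configurations of many particles.
      - The individual configurations do not physically interfere with one another, so this is a classical superposition.
      - Such a state is called a mixed state.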

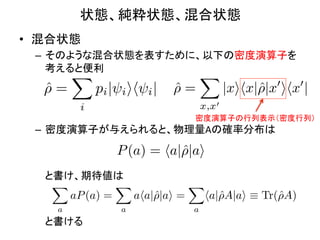

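- 9. States, pure states, and mixed states
  - Mixed states
    - By definition, consider the mixed state obtained by mixing pure states $|\psi_1\rangle, \dots, |\psi_k\rangle$ with probability weights $p_1, \dots, p_k$. The probability distribution of an observable $A$ is then
      $$P(a) = \sum_i p_i |\langle a|\psi_i\rangle|^2,$$
      where $\langle a|$ is the bra vector of the state in which $A$ takes the eigenvalue $a$.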
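The difference between a quantum superposition and a classical mixture shows up once we measure in a basis other than the one we mixed in. This sketch makes two illustrative assumptions:

- The observable $A$ has eigenstates $(|0\rangle \pm |1\rangle)/\sqrt{2}$ with eigenvalues $\pm 1$.
- The pure state $(|0\rangle + |1\rangle)/\sqrt{2}$ is compared with a 50/50 mixture of $|0\rangle$ and $|1\rangle$.

Both cases use the formula for $P(a)$ above.

```python
import numpy as np

ket0 = np.array([1.0, 0.0])
ket1 = np.array([0.0, 1.0])
# Eigenstates |a> of an assumed observable A with eigenvalues +1 and -1.
eigenstates = {+1: (ket0 + ket1) / np.sqrt(2), -1: (ket0 - ket1) / np.sqrt(2)}

def prob(weights, states, a_ket):
    """P(a) = sum_i p_i |<a|psi_i>|^2 (np.vdot conjugates its first argument)."""
    return sum(p * abs(np.vdot(a_ket, psi)) ** 2 for p, psi in zip(weights, states))

ensembles = {
    "pure": ([1.0], [(ket0 + ket1) / np.sqrt(2)]),  # coherent superposition
    "mixed": ([0.5, 0.5], [ket0, ket1]),             # classical 50/50 mixture
}
results = {name: {a: prob(w, s, ket) for a, ket in eigenstates.items()}
           for name, (w, s) in ensembles.items()}
for name, probs in results.items():
    print(name, {a: round(float(p), 3) for a, p in probs.items()})
# pure {1: 1.0, -1: 0.0}    the amplitudes interfere
# mixed {1: 0.5, -1: 0.5}   the mixed components do not interfere
```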

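- 10. States, pure states, and mixed states
  - Mixed states
    - To represent such a mixed state, it is convenient to introduce the density operator
      $$\rho = \sum_i p_i |\psi_i\rangle\langle\psi_i| = \sum_{x,x'} |x\rangle\langle x|\rho|x'\rangle\langle x'|.$$
      The matrix elements $\langle x|\rho|x'\rangle$ are the matrix representation of the density operator (the density matrix).
    - Given the density operator, the probability distribution of an observable $A$ can be written as
      $$P(a) = \langle a|\rho|a\rangle,$$
      and its expectation value as
      $$\sum_a aP(a) = \sum_a a\langle a|\rho|a\rangle = \sum_a \langle a|\rho A|a\rangle \equiv \mathrm{Tr}(\rho A).$$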
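The density-operator formulas can be checked against the mixture formula of the previous slide. This minimal sketch uses random pure states, random weights, and a random Hermitian observable, all chosen only for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def random_state(dim):
    """A random normalized complex state vector (illustrative only)."""
    v = rng.normal(size=dim) + 1j * rng.normal(size=dim)
    return v / np.linalg.norm(v)

dim = 3
weights = np.array([0.5, 0.3, 0.2])
states = [random_state(dim) for _ in weights]

# Density operator rho = sum_i p_i |psi_i><psi_i|.
rho = sum(p * np.outer(psi, psi.conj()) for p, psi in zip(weights, states))

# Hypothetical observable: a random Hermitian A with eigenpairs (a, |a>).
M = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
A = (M + M.conj().T) / 2
eigvals, eigvecs = np.linalg.eigh(A)

# P(a) = <a|rho|a> agrees with P(a) = sum_i p_i |<a|psi_i>|^2.
P_rho = np.array([np.vdot(v, rho @ v).real for v in eigvecs.T])
P_mix = np.array([sum(p * abs(np.vdot(v, psi)) ** 2 for p, psi in zip(weights, states))
                  for v in eigvecs.T])

# Expectation value: sum_a a P(a) = Tr(rho A).
expect_sum = np.sum(eigvals * P_rho)
expect_trace = np.trace(rho @ A).real
print(np.allclose(P_rho, P_mix), np.isclose(expect_sum, expect_trace))  # True True
```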

- 11. Applications to Machine Learning

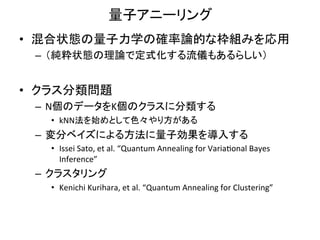

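- 12. Quantum annealing
  - Applies the probabilistic framework of mixed-state quantum mechanics.
    - (There is reportedly also a formulation based on the theory of pure states.)
  - Classification problems
    - Classify N data points into K classes.
      - There are many approaches, starting with kNN.
    - Introducing quantum effects into the variational Bayes approach
      - Issei Sato, et al. "Quantum Annealing for Variational Bayes Inference"
    - Clustering
      - Kenichi Kurihara, et al. "Quantum Annealing for Clustering"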

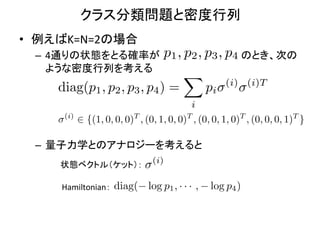

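- 13. Classification problems and the density matrix
  - Classification problem
    - The problem is to classify data $x = x_1, \dots, x_N$ into $K$ classes.
      - Write the class assignment of a single data point $x_k$ as the $K$-dimensional vector
        $$\sigma_k = (0, \dots, 0, 1, 0, \dots, 0)^T.$$
      - A single assignment of all $N$ data points is then
        $$\sigma = \bigotimes_{k=1}^{N} \sigma_k,$$
        where $\otimes$ is the Kronecker product:
        $$A \otimes B = \begin{pmatrix} a_{11}B & \cdots & a_{1l}B \\ \vdots & \ddots & \vdots \\ a_{k1}B & \cdots & a_{kl}B \end{pmatrix}.$$
    - For $K = N = 2$, if $\sigma_1 = (1, 0)^T$ and $\sigma_2 = (0, 1)^T$, then $\sigma_1 \otimes \sigma_2 = (0, 1, 0, 0)^T$.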
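The one-hot encoding and its Kronecker product map directly onto `numpy.kron`. In the minimal sketch below, labels are 0-based class indices. The helper names `one_hot` and `assignment_vector` are hypothetical.

```python
import numpy as np
from functools import reduce

def one_hot(k, K):
    """Class assignment of one data point as a K-dimensional unit vector (k is 0-based)."""
    v = np.zeros(K, dtype=int)
    v[k] = 1
    return v

def assignment_vector(labels, K):
    """Kronecker product sigma_1 ⊗ ... ⊗ sigma_N: a K^N-dimensional one-hot vector."""
    return reduce(np.kron, [one_hot(k, K) for k in labels])

# The slide's example: K = N = 2, sigma_1 = (1, 0)^T, sigma_2 = (0, 1)^T.
print(assignment_vector([0, 1], K=2))  # [0 1 0 0]

# In general the single 1 sits at index sum_i k_i K^(N-1-i) (labels read as base-K digits).
labels, K = [2, 0, 1], 3
v = assignment_vector(labels, K)
print(v.shape, int(np.argmax(v)))  # (27,) 19
```

Each of the $K^N$ possible assignments therefore corresponds to one basis vector of a $K^N$-dimensional space.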

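- 14. Classification problems and the density matrix
  - For example, when $K = N = 2$:
    - If the four possible states occur with probabilities $p_1, p_2, p_3, p_4$, consider the density matrix
      $$\mathrm{diag}(p_1, p_2, p_3, p_4) = \sum_i p_i \sigma^{(i)}\sigma^{(i)T}, \quad \sigma^{(i)} \in \{(1,0,0,0)^T, (0,1,0,0)^T, (0,0,1,0)^T, (0,0,0,1)^T\}.$$
    - By analogy with quantum mechanics:
      - State vector (ket): $\sigma^{(i)}$
      - Hamiltonian: $\mathrm{diag}(-\log p_1, \dots, -\log p_4)$, so that the density matrix equals $e^{-H}$.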

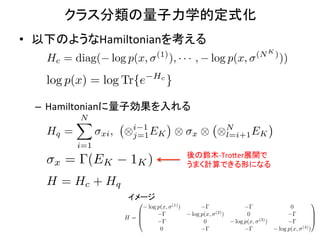
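The analogy can be checked directly. With $H = \mathrm{diag}(-\log p_i)$, the matrix $e^{-H}$ is exactly the diagonal density matrix. The probabilities below are hypothetical values chosen for illustration.

```python
import numpy as np

# Hypothetical probabilities p1..p4 of the four assignments (K = N = 2).
p = np.array([0.1, 0.2, 0.3, 0.4])
basis = np.eye(4)  # sigma^(i) is the i-th unit vector

# rho = sum_i p_i sigma^(i) sigma^(i)^T = diag(p1, ..., p4)
rho = sum(p_i * np.outer(s, s) for p_i, s in zip(p, basis))
assert np.allclose(rho, np.diag(p))

# Hamiltonian H = diag(-log p1, ..., -log p4). Because H is diagonal,
# exp(-H) is the elementwise exponential of its diagonal.
H = np.diag(-np.log(p))
rho_from_H = np.diag(np.exp(-np.diag(H)))
print(np.allclose(rho_from_H, rho), np.isclose(np.trace(rho_from_H), 1.0))  # True True
```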

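- 15. Quantum-mechanical formulation of classification
  - Consider the following Hamiltonian:
    $$H_c = \mathrm{diag}\big(-\log p(x, \sigma^{(1)}), \dots, -\log p(x, \sigma^{(K^N)})\big), \qquad \log p(x) = \log \mathrm{Tr}\{e^{-H_c}\}$$
  - Add a quantum effect to the Hamiltonian:
    $$H_q = \sum_{i=1}^{N} \sigma_x^{i}, \qquad \sigma_x^{i} = \Big(\bigotimes_{j=1}^{i-1} E_K\Big) \otimes \sigma_x \otimes \Big(\bigotimes_{l=i+1}^{N} E_K\Big), \qquad \sigma_x = E_K - 1_K$$
    - Here $E_K$ is the $K \times K$ identity matrix and $1_K$ is the $K \times K$ matrix of all ones.
    - This form makes the later Suzuki-Trotter expansion easy to compute.
  - $H = H_c + H_q$
  - Illustration ($K = N = 2$): $H$ is a $4 \times 4$ matrix.
    - Its diagonal entries are $-\log p(x, \sigma^{(1)}), \dots, -\log p(x, \sigma^{(4)})$.
    - Its off-diagonal entries come from $H_q$ and vanish on the anti-diagonal.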
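The two Hamiltonians can be built explicitly for small $N$ and $K$. This minimal sketch assumes $\sigma_x = E_K - 1_K$ as written above. It uses a hypothetical table of joint probabilities $p(x, \sigma)$ for a fixed $x$, and the helper names are illustrative.

```python
import numpy as np
from functools import reduce
from itertools import product

def classical_hamiltonian(log_joint, N, K):
    """H_c = diag(-log p(x, sigma)) over all K^N assignments.

    itertools.product varies the last label fastest, which matches the
    Kronecker-product ordering sigma_1 ⊗ ... ⊗ sigma_N.
    """
    return np.diag([-log_joint(labels) for labels in product(range(K), repeat=N)])

def quantum_hamiltonian(N, K):
    """H_q = sum_i E_K ⊗ ... ⊗ sigma_x ⊗ ... ⊗ E_K, with sigma_x = E_K - 1_K in slot i."""
    E = np.eye(K)
    sigma_x = E - np.ones((K, K))
    return sum(reduce(np.kron, [sigma_x if j == i else E for j in range(N)])
               for i in range(N))

# Hypothetical joint probabilities p(x, sigma) for K = N = 2 (not from the slides).
table = np.array([[0.02, 0.05],
                  [0.01, 0.12]])
Hc = classical_hamiltonian(lambda labels: np.log(table[labels]), N=2, K=2)
Hq = quantum_hamiltonian(N=2, K=2)
H = Hc + Hq

# log p(x) = log Tr exp(-H_c); for diagonal H_c the exponential is elementwise.
log_px = np.log(np.sum(np.exp(-np.diag(Hc))))
print(np.isclose(log_px, np.log(table.sum())))  # True
# H_q has -1 between assignments differing in one label, 0 on the anti-diagonal.
print(Hq)
```

$H_q$ only connects assignments that differ in a single data point's label. This is the structure that Gibbs-style sampling exploits later.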

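- 16. Density matrices and classical probability
  - If the Hamiltonian is diagonal, the problem coincides exactly with an ordinary classical probabilistic model.
    - Using the density matrix makes it possible to put quantum effects into the off-diagonal terms of the Hamiltonian.
    - Quantum annealing uses these off-diagonal terms to escape from local optima.
  - General recipe
    - Design a Hamiltonian over the data and states whose diagonal encodes the classical probabilities (as $-\log p$).
    - Add an interaction Hamiltonian that carries a suitable quantum effect.
    - Use the Suzuki-Trotter expansion to approximate the result as a product of diagonal (and therefore samplable) probability models.
    - Sample while annealing, i.e. while gradually weakening the quantum effect, to find the optimal solution.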

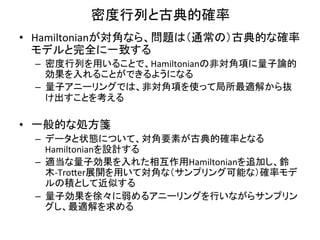
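The effect of the off-diagonal terms can be seen on the diagonal of $e^{-\beta(H_c + \Gamma H_q)}$. This sketch makes three assumptions:

- $\Gamma$ is a coefficient scaling $H_q$, as in the annealing slide.
- The probabilities are hypothetical.
- The matrix exponential is computed by eigendecomposition, which is valid because the matrix is real symmetric.

At $\Gamma = 0$ the sketch reproduces the classical model.

```python
import numpy as np
from functools import reduce

def expm_sym(S):
    """Matrix exponential of a real symmetric matrix via its eigendecomposition."""
    w, V = np.linalg.eigh(S)
    return (V * np.exp(w)) @ V.T

def quantum_hamiltonian(N, K):
    E = np.eye(K)
    sigma_x = E - np.ones((K, K))
    return sum(reduce(np.kron, [sigma_x if j == i else E for j in range(N)])
               for i in range(N))

# Hypothetical p(x, sigma) for the four assignments of K = N = 2.
p = np.array([0.02, 0.05, 0.01, 0.12])
Hc = np.diag(-np.log(p))
Hq = quantum_hamiltonian(N=2, K=2)

def assignment_probs(beta, gamma):
    """Normalized diagonal of exp(-beta (H_c + gamma H_q)): the weight of each assignment."""
    rho = expm_sym(-beta * (Hc + gamma * Hq))
    return np.diag(rho) / np.trace(rho)

# gamma = 0: diagonal Hamiltonian, i.e. exactly the classical posterior p(sigma | x).
print(np.allclose(assignment_probs(1.0, 0.0), p / p.sum()))  # True
# As gamma grows, the weights approach the uniform ground state of H_q.
for gamma in (0.5, 2.0, 50.0):
    print(gamma, np.round(assignment_probs(1.0, gamma), 3))
```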

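- 17. Variational Bayes and classification
  - The variational Bayes framework
    - Consider the probability distribution $P(x, \sigma, \theta)$ of an observed variable $x$, with hidden variables $\sigma$ and parameters $\theta$.
    - We want the posterior distribution $P(\sigma, \theta | x)$ given the observation $x$, but computing it exactly is hard.
  - Classification problem
    - The observed variables $x$ are the data, and the hidden variables $\sigma$ are the class assignments of the data. The task is to find the posterior distribution of $\sigma$ given $x$.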

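- 18. Variational Bayes and classification
  - The variational Bayes framework
    - The log marginal likelihood of $x$ can be written as
      $$\log P(x) = \sum_\sigma \int d\theta\, q(\sigma, \theta) \log \frac{P(x, \sigma, \theta)}{q(\sigma, \theta)} + \mathrm{KL}\big(q \,\|\, P(\sigma, \theta | x)\big) \ge \sum_\sigma \int d\theta\, q(\sigma, \theta) \log \frac{P(x, \sigma, \theta)}{q(\sigma, \theta)} \equiv F[q].$$
      Since the KL divergence is non-negative, equality holds when $q(\sigma, \theta) = P(\sigma, \theta | x)$. The method exploits this.
    - Find the $q$ that maximizes $F[q]$ variationally and treat it as the posterior. $F[q]$ is called the variational free energy.
      - In this form, however, $q$ cannot be computed directly.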
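The decomposition $\log P(x) = F[q] + \mathrm{KL}$ can be verified on a toy discrete model. In this minimal sketch the continuous parameter $\theta$ is dropped, so the integral disappears. The joint probabilities are hypothetical, and $q$ is an arbitrary random distribution.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical joint P(x, sigma) for a fixed observation x and 4 values of sigma
# (theta is dropped so that the integral reduces to a finite sum).
joint = np.array([0.02, 0.05, 0.01, 0.12])
log_px = np.log(joint.sum())          # log P(x)
posterior = joint / joint.sum()       # P(sigma | x)

def free_energy(q):
    """F[q] = sum_sigma q(sigma) log(P(x, sigma) / q(sigma))."""
    return np.sum(q * np.log(joint / q))

def kl(q, r):
    """KL(q || r) for strictly positive discrete distributions."""
    return np.sum(q * np.log(q / r))

q = rng.dirichlet(np.ones(4))         # an arbitrary variational distribution
print(np.isclose(log_px, free_energy(q) + kl(q, posterior)))  # True: the decomposition
print(free_energy(q) <= log_px)                                # True: F[q] is a lower bound
print(np.isclose(free_energy(posterior), log_px))              # True: equality at the posterior
```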

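- 19. Methods other than variational Bayes
  - One can also try to sample from $P(\sigma, \theta | x)$ directly, using MCMC or similar methods.
    - Sampling from the entire class-label space at once is difficult.
    - We would like a form to which Gibbs sampling applies: repeatedly sample one variable while holding all the others fixed.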

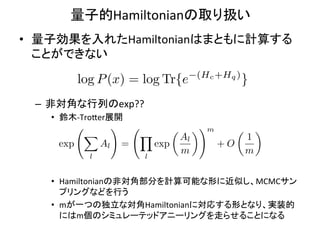
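The Gibbs-sampling idea can be illustrated on class labels alone. The sketch below resamples one label at a time from its exact conditional and compares a marginal against brute-force enumeration. It makes three assumptions:

- $\theta$ is omitted.
- The toy model is hypothetical: per-point scores plus a bonus `J` for equal labels.
- `gibbs_sample` is an illustrative helper. It is not code from the cited papers.

```python
import numpy as np
from itertools import product

def gibbs_sample(log_joint, N, K, n_sweeps, rng):
    """Gibbs sampler over class labels sigma in {0, ..., K-1}^N.

    log_joint(labels) returns log P(x, sigma) up to an additive constant.
    Each step resamples one label from its conditional given all the others.
    """
    labels = rng.integers(K, size=N)
    samples = []
    for _ in range(n_sweeps):
        for i in range(N):
            logits = np.empty(K)
            for k in range(K):
                labels[i] = k
                logits[k] = log_joint(labels)
            probs = np.exp(logits - logits.max())
            labels[i] = rng.choice(K, p=probs / probs.sum())
        samples.append(labels.copy())
    return np.array(samples)

# Hypothetical toy model: per-point scores w[i, k] plus a bonus J for each pair of equal labels.
w = np.array([[0.5, -0.5], [0.0, 0.3], [-0.2, 0.4]])
J = 0.8

def log_joint(labels):
    same = sum(labels[i] == labels[j] for i in range(3) for j in range(i + 1, 3))
    return w[np.arange(3), labels].sum() + J * same

rng = np.random.default_rng(0)
samples = gibbs_sample(log_joint, N=3, K=2, n_sweeps=20000, rng=rng)

# Exact marginal P(sigma_1 = 0 | x) by enumerating all K^N assignments.
configs = np.array(list(product(range(2), repeat=3)))
weights = np.exp([log_joint(c) for c in configs])
exact = weights[configs[:, 0] == 0].sum() / weights.sum()
print(round(float(np.mean(samples[:, 0] == 0)), 3), round(float(exact), 3))
```

Each step only needs $K$ evaluations of the joint, never the full $K^N$ space. That is what makes this form attractive.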

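- 20. Handling the quantum Hamiltonian
  - The Hamiltonian with quantum effects cannot be computed directly:
    $$\log P(x) = \log \mathrm{Tr}\{e^{-(H_c + H_q)}\}$$
    - This requires the exponential of a non-diagonal matrix.
  - Suzuki-Trotter expansion
    $$\exp\Big(\sum_l A_l\Big) = \Big(\prod_l \exp\frac{A_l}{m}\Big)^m + O\Big(\frac{1}{m}\Big)$$
    - Approximate the non-diagonal part of the Hamiltonian in a computable form, then run MCMC sampling or a similar method.
    - Each of the $m$ factors corresponds to its own diagonal Hamiltonian. In implementation terms, one therefore runs $m$ simulated annealings, with neighboring copies coupled through the off-diagonal factors.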

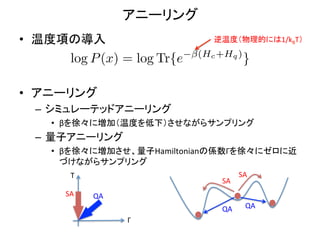
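The $O(1/m)$ behavior of the Suzuki-Trotter product can be observed numerically. In this sketch, random symmetric matrices stand in for the diagonal and non-diagonal parts of the Hamiltonian. They are illustrative assumptions, not the classification Hamiltonians.

```python
import numpy as np

def expm_sym(S):
    """Matrix exponential of a real symmetric matrix via its eigendecomposition."""
    w, V = np.linalg.eigh(S)
    return (V * np.exp(w)) @ V.T

rng = np.random.default_rng(0)
# Hypothetical stand-ins: A1 is diagonal (like -H_c),
# A2 is a non-diagonal symmetric matrix (like -H_q).
A1 = np.diag(rng.normal(size=4))
M = rng.normal(size=(4, 4))
A2 = (M + M.T) / 2
exact = expm_sym(A1 + A2)

def trotter(m):
    """First-order Suzuki-Trotter product [exp(A1/m) exp(A2/m)]^m."""
    step = expm_sym(A1 / m) @ expm_sym(A2 / m)
    return np.linalg.matrix_power(step, m)

errors = {m: np.linalg.norm(trotter(m) - exact) for m in (10, 100, 1000)}
for m, err in errors.items():
    print(m, f"{err:.2e}")  # the error shrinks roughly in proportion to 1/m
```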

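- 21. Annealing
  - Introducing a temperature term
    $$\log P(x) = \log \mathrm{Tr}\{e^{-\beta(H_c + H_q)}\}$$
    where $\beta$ is the inverse temperature (physically $1/k_B T$).
  - Annealing
    - Simulated annealing (SA)
      - Sample while gradually increasing $\beta$ (lowering the temperature).
    - Quantum annealing (QA)
      - Sample while gradually increasing $\beta$ and gradually driving the coefficient $\Gamma$ of the quantum Hamiltonian toward zero.
  - (Figure: SA and QA annealing paths, plotted against temperature $T$ and $\Gamma$.)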
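The SA half of this slide can be sketched as Gibbs sampling from $P(x, \sigma)^\beta$ while $\beta$ rises. This sketch makes three assumptions:

- The toy 1-D problem has two fixed class centers.
- The $\beta$ schedule is linear.
- `simulated_annealing` is a hypothetical helper.

The QA variant would additionally shrink $\Gamma$ and couple $m$ Trotter replicas; that part is not shown.

```python
import numpy as np

def simulated_annealing(log_joint, N, K, betas, rng):
    """Gibbs-sample from P(x, sigma)^beta while beta increases (temperature decreases)."""
    labels = rng.integers(K, size=N)
    for beta in betas:                      # one sweep over all labels per beta value
        for i in range(N):
            logits = np.empty(K)
            for k in range(K):
                labels[i] = k
                logits[k] = beta * log_joint(labels)
            probs = np.exp(logits - logits.max())
            labels[i] = rng.choice(K, p=probs / probs.sum())
    return labels

# Hypothetical toy problem: 1-D data, two classes with fixed centers and unit variance.
x = np.array([-2.5, -1.0, 0.5, 1.8, 3.0, -0.3])
centers = np.array([-2.0, 2.0])

def log_joint(labels):
    # log P(x, sigma) up to a constant: Gaussian log-likelihood of each point under its class.
    return -0.5 * np.sum((x - centers[labels]) ** 2)

rng = np.random.default_rng(0)
betas = np.linspace(0.1, 10.0, 200)  # assumed linear schedule in beta
result = simulated_annealing(log_joint, N=len(x), K=2, betas=betas, rng=rng)
print(result)  # ends at the nearest-center assignment
```

This toy posterior factorizes over data points, so it has no local optima. It only shows the mechanics of the schedule, not the advantage claimed for QA.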

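- 22. Experimental results (from the papers)
  - Variational Bayes approach
    - Measured by log-likelihood, classification performance reportedly improved by about 10% over SA.
    - It is reportedly less prone than SA to getting trapped in local optima.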
