Recommended

lec34.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

1. The document discusses analytic functions of a complex variable through examples. It defines analytic functions as those whose derivatives of all orders exist in the region of analyticity.

2. The Cauchy-Riemann equations are derived and their implications are explored, including that the real and imaginary parts each satisfy the Laplace equation and that their level curves are orthogonal.

3. Several examples are worked through to determine whether functions are analytic by checking whether they satisfy the Cauchy-Riemann equations. The Cauchy-Riemann equations are also derived in polar coordinates.
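The check described in the list above can be sketched numerically. This is a minimal illustration, not the method used in the slides: the helper names `cr_residuals` and `satisfies_cr`, the step size, and the two test functions are assumptions chosen for the example.

```python
def cr_residuals(f, z, h=1e-6):
    """Return the two Cauchy-Riemann residuals of f = u + iv at the point z."""
    # Central differences for the partial derivatives with respect to x and y.
    fx = (f(z + h) - f(z - h)) / (2 * h)
    fy = (f(z + 1j * h) - f(z - 1j * h)) / (2 * h)
    ux, vx = fx.real, fx.imag
    uy, vy = fy.real, fy.imag
    # Both residuals vanish when u_x = v_y and u_y = -v_x.
    return ux - vy, uy + vx


def satisfies_cr(f, z, tol=1e-6):
    """True if both residuals are below tol at z (a pointwise numerical check only)."""
    r1, r2 = cr_residuals(f, z)
    return abs(r1) < tol and abs(r2) < tol


z0 = 0.7 + 0.3j
analytic = satisfies_cr(lambda z: z * z, z0)              # f(z) = z^2
not_analytic = satisfies_cr(lambda z: z.conjugate(), z0)  # f(z) = conjugate of z
print(analytic, not_analytic)
```

A pointwise finite-difference test like this can only suggest analyticity; it does not replace verifying the equations symbolically over a region.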

lec33.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

The Cauchy-Riemann (CR) conditions provide a necessary and, when the partial derivatives are continuous, sufficient condition for a function f(z) = u(x, y) + iv(x, y) to be analytic in a region. The CR conditions require that the partial derivatives of u and v satisfy ∂u/∂x = ∂v/∂y and ∂u/∂y = −∂v/∂x. If a function satisfies these conditions at all points in a region, then it is analytic in that region. The document proves this by taking the limit along the two paths Δy = 0 and Δx = 0 and showing that the resulting derivatives must be equal. Examples are provided to demonstrate checking functions for analytic...

lec31.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

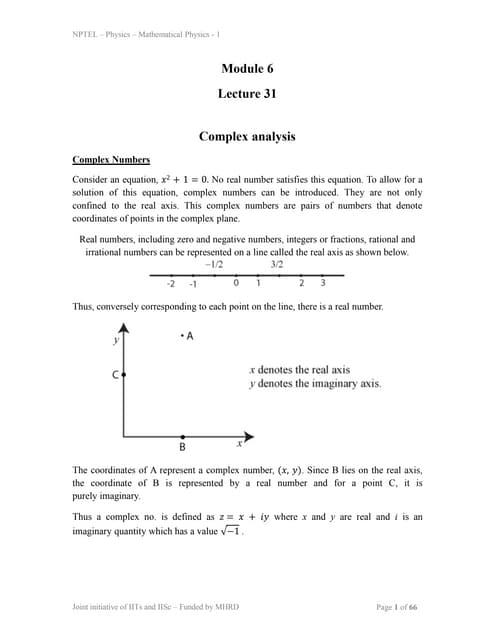

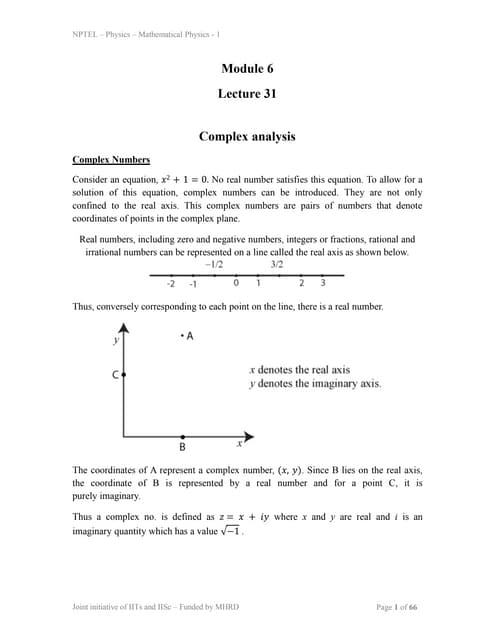

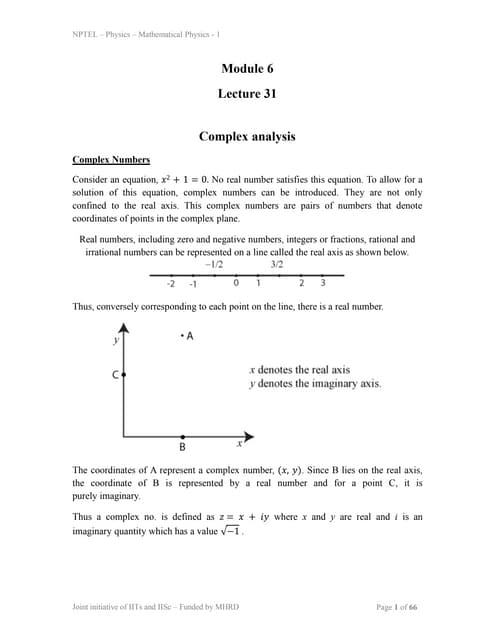

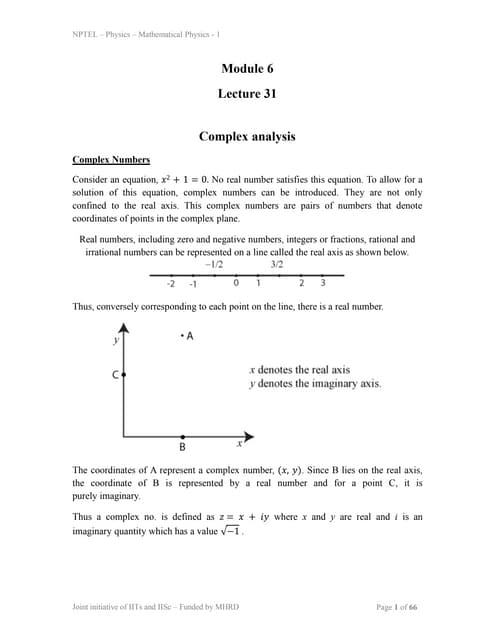

Complex numbers allow solutions to equations like x² + 1 = 0 by extending the real numbers to include imaginary numbers. A complex number z is defined as z = x + iy, where x and y are real numbers and i is the imaginary unit, equal to √−1. Complex numbers can be added and multiplied following specific rules, such as z₁ + z₂ = (x₁ + x₂) + i(y₁ + y₂) for addition and z₁z₂ = (x₁x₂ − y₁y₂) + i(y₁x₂ + x₁y₂) for multiplication. The inverse of a complex number z is calculated as z⁻¹ = (x/(x²+y...
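The multiplication rule quoted above can be tried directly on pairs (x, y). The sketch below is illustrative only; because the inverse formula in the summary is cut off, `inverse` uses the standard result z⁻¹ = (x − iy)/(x² + y²), which is an assumption about what the slides go on to state.

```python
def multiply(z1, z2):
    """Product of two complex numbers given as (x, y) pairs."""
    x1, y1 = z1
    x2, y2 = z2
    return (x1 * x2 - y1 * y2, y1 * x2 + x1 * y2)


def inverse(z):
    """Standard inverse (x - iy) / (x^2 + y^2); assumed, since the source is truncated."""
    x, y = z
    d = x * x + y * y
    if d == 0:
        raise ZeroDivisionError("zero has no multiplicative inverse")
    return (x / d, -y / d)


product = multiply((1.0, 2.0), (3.0, -1.0))      # (1 + 2i)(3 - i)
identity = multiply((3.0, 4.0), inverse((3.0, 4.0)))
print(product, identity)
```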

lec32.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

The document discusses properties of complex numbers including:

- Commutativity and associativity of addition and multiplication

- Additive and multiplicative identities and inverses

- Conjugates, modulus, and triangle inequality

- Polar form representation using modulus and argument

- Exponential form for products, quotients, and powers

- Roots of complex numbers and finding nth roots

- Representing functions of a complex variable using modulus and argument
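As a small illustration of the polar form and nth roots listed above, the sketch below finds all n roots from the modulus and argument. The function name `nth_roots` and the sample value −8 are assumptions made for the example.

```python
import cmath
import math


def nth_roots(z, n):
    """Return the n distinct nth roots of a non-zero complex number z."""
    r, theta = cmath.polar(z)       # modulus and principal argument
    root_r = r ** (1.0 / n)         # positive real nth root of the modulus
    return [cmath.rect(root_r, (theta + 2 * math.pi * k) / n) for k in range(n)]


roots = nth_roots(-8, 3)            # the three cube roots of -8
for w in roots:
    print(w, w ** 3)
```

The roots are equally spaced on a circle of radius r^(1/n), which is why the argument advances by 2π/n from one root to the next.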

lec42.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

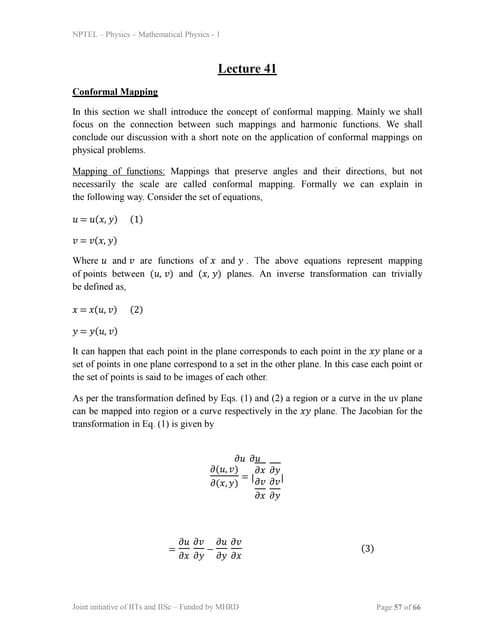

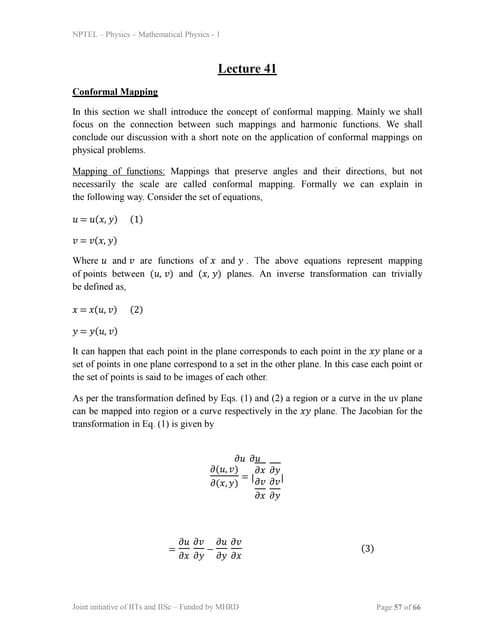

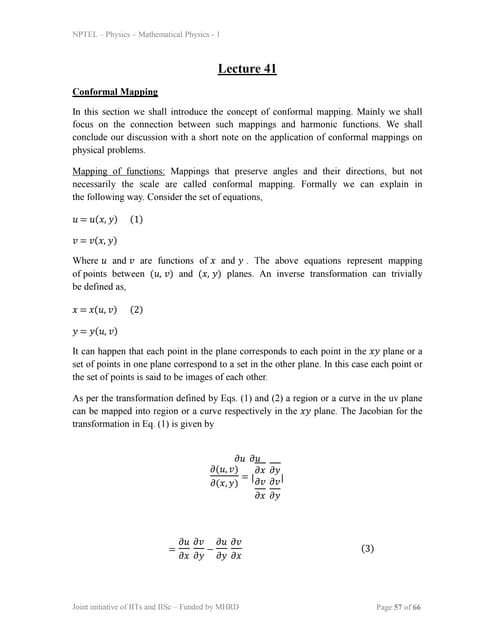

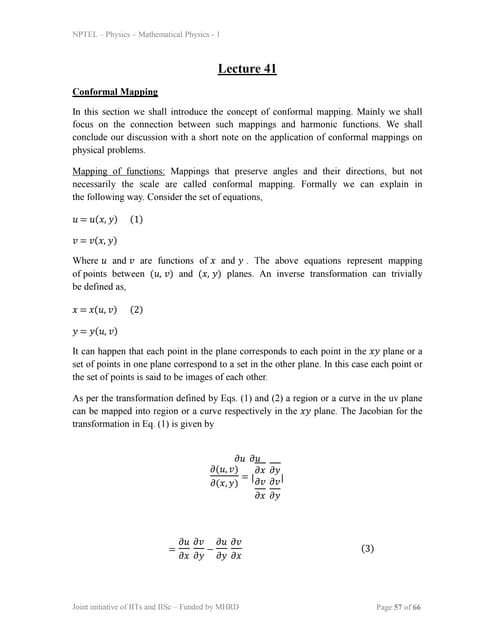

1) The document discusses examples of calculating the Jacobian of transformations. It defines the Jacobian as the determinant of the matrix of partial derivatives of the transformed coordinates.

2) It then discusses Möbius transformations, which are fractional linear transformations of the form (az+b)/(cz+d). The Jacobian of a Möbius transformation depends only on z.

3) Several examples are given of using Möbius transformations to map one geometric region to another, such as mapping a circle to a line.
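A circle-to-line mapping of the kind mentioned above can be checked numerically. The particular transformation T(z) = (z + 1)/(z − 1) is an illustrative choice, not necessarily one of the examples in the slides; it sends the unit circle (minus the pole at z = 1) to the imaginary axis.

```python
import cmath
import math


def mobius(a, b, c, d):
    """Return the map z -> (a z + b) / (c z + d), requiring ad - bc != 0."""
    if a * d - b * c == 0:
        raise ValueError("ad - bc must be non-zero")
    return lambda z: (a * z + b) / (c * z + d)


T = mobius(1, 1, 1, -1)                                 # T(z) = (z + 1) / (z - 1)
angles = [2 * math.pi * k / 12 for k in range(1, 12)]   # skip theta = 0, where z = 1 is the pole
images = [T(cmath.exp(1j * t)) for t in angles]
max_real_part = max(abs(w.real) for w in images)
print(max_real_part)                                    # close to zero: images lie on the imaginary axis
```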

lec41.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

This document discusses conformal mapping, which maps curves and regions in a way that preserves angles in both magnitude and sense. It provides examples of conformal mappings:

1) The mapping w = e^z maps a vertical line in the z-plane to a circle in the w-plane, with the phase angle increasing along the circle.

2) The mapping ω = e^{iθ₀}(z − z₀)/(z − z̄₀) maps an area in the upper half z-plane to the interior of a unit circle in the ω-plane. Points on the x-axis in z are mapped to the boundary of the circle.
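Both mappings above can be probed with a few sample points. This is a rough sketch: the second map is written in its standard form e^{iθ₀}(z − z₀)/(z − z̄₀) with z₀ in the upper half-plane, and the values c = 0.5, z₀ = i and θ₀ = 0 are assumptions picked for the example.

```python
import cmath
import math

# 1) w = e^z: points on the vertical line Re z = c land on the circle |w| = e^c.
c = 0.5
ys = [-3.0 + 0.5 * k for k in range(13)]
radii = [abs(cmath.exp(complex(c, y))) for y in ys]


# 2) Upper half-plane to unit disk (standard form, z0 assumed to have Im z0 > 0).
def half_plane_to_disk(z, z0=1j, theta0=0.0):
    return cmath.exp(1j * theta0) * (z - z0) / (z - z0.conjugate())


boundary = [abs(half_plane_to_disk(complex(x, 0.0))) for x in (-5.0, -1.0, 0.0, 2.0, 10.0)]
interior = [abs(half_plane_to_disk(complex(x, y)))
            for x in (-2.0, 0.0, 3.0) for y in (0.1, 1.0, 4.0)]
print(radii[0], math.exp(c), boundary, max(interior))
```

Real z gives |ω| = 1 because z − z₀ and z − z̄₀ are then complex conjugates of each other, so they have equal modulus.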

lec39.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

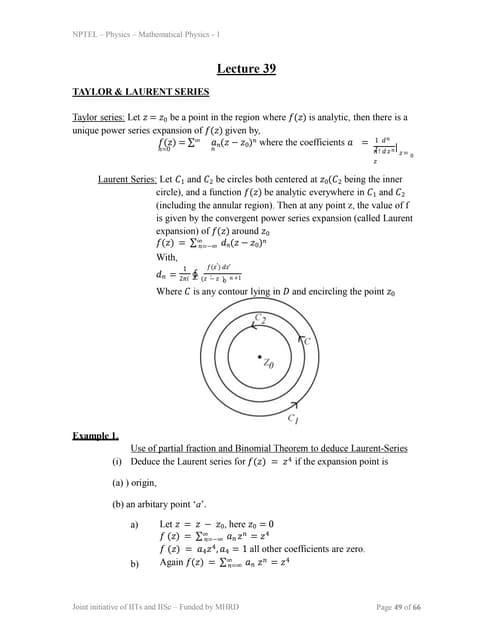

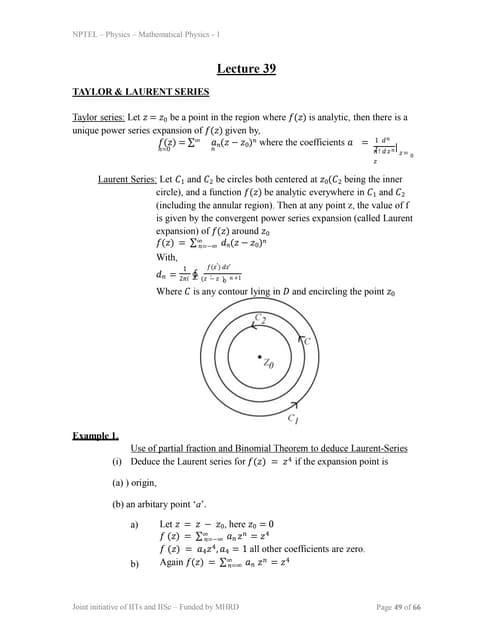

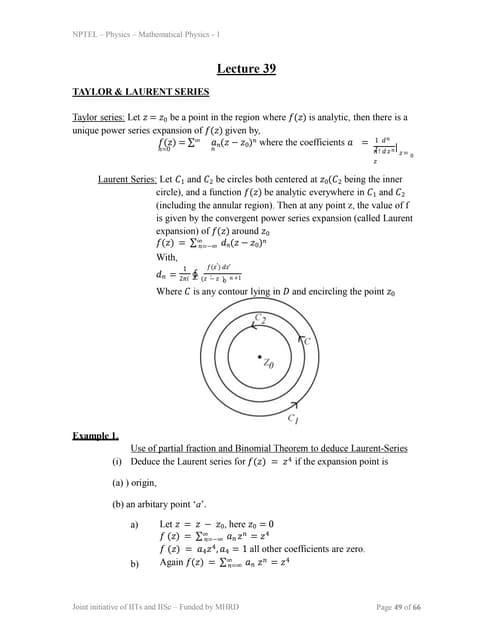

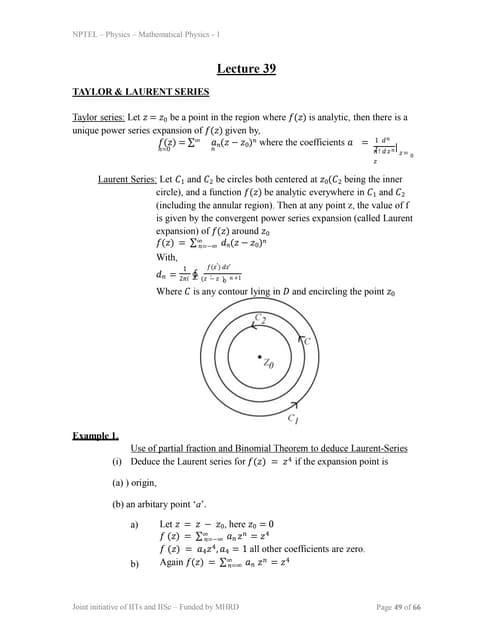

The document discusses Taylor and Laurent series expansions. It provides examples of using these expansions to represent functions around points.

A Taylor series provides a power series representation of an analytic function around a point. A Laurent series represents a function in an annular region, which may surround points where the function is not analytic, using both positive and negative powers of (z − z₀). Examples show deducing Laurent series expansions for simple functions like z⁴ and 1/z⁴ around various points, and evaluating coefficients via contour integrals and the residue theorem. The document also gives an example of using a contour integral to compute a Green's function in many-particle physics.
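The coefficient formula aₙ = (1/2πi) ∮ f(z)/(z − z₀)ⁿ⁺¹ dz can be approximated by sampling f on a circle. The sketch below is an illustration under assumptions: the function e^z/z² is chosen here for convenience (it is not taken from the slides), and `laurent_coeff` is a hypothetical helper name.

```python
import cmath
import math


def laurent_coeff(f, n, z0=0j, radius=1.0, samples=256):
    """Approximate the Laurent coefficient a_n of f about z0 on a circle of given radius."""
    total = 0j
    for k in range(samples):
        u = radius * cmath.exp(2j * math.pi * k / samples)   # u = z - z0 on the circle
        # With dz = i u dtheta, the contour integral reduces to the mean of f(z) * u**(-n).
        total += f(z0 + u) * u ** (-n)
    return total / samples


def f(z):
    return cmath.exp(z) / (z * z)    # Laurent series: z^-2 + z^-1 + 1/2 + z/6 + ...


a_minus2 = laurent_coeff(f, -2)
a_minus1 = laurent_coeff(f, -1)      # this coefficient is the residue at z = 0
a_zero = laurent_coeff(f, 0)
print(a_minus2, a_minus1, a_zero)
```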

lec38.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

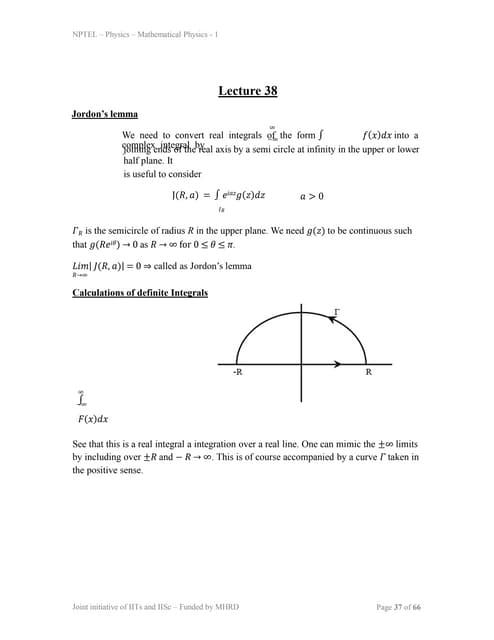

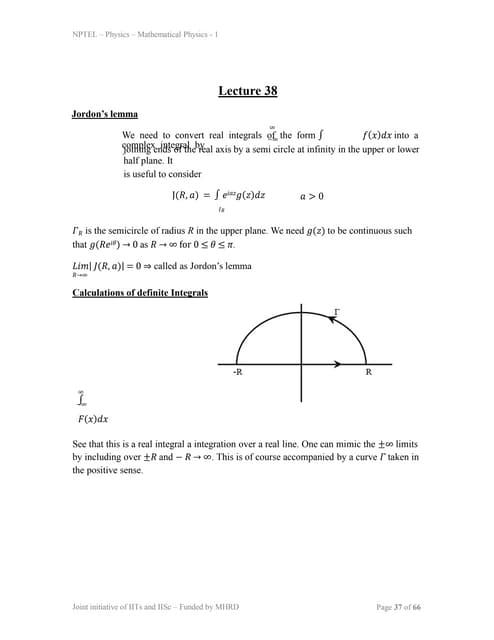

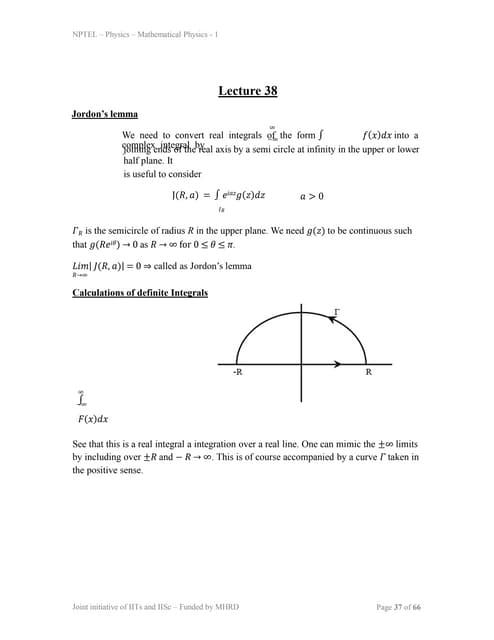

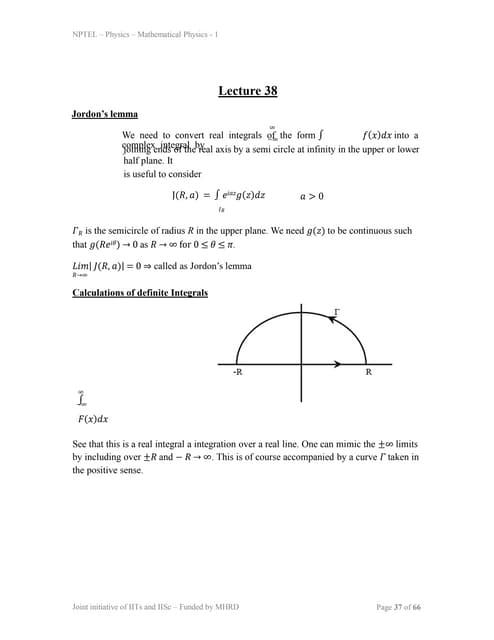

1) Jordan's lemma is used to evaluate real integrals over the whole real axis by closing the path with a large semicircle in the complex plane, which turns them into contour integrals.

2) Several examples are provided of using residues and Jordan's lemma to evaluate definite integrals over the real line or infinite intervals that involve functions with poles, including integrals of x^2, sin(x)/x, 1/(x^2+a^2)^2, and sin(x)/(x(x^2+a^2)).

3) The technique involves closing the contour with a semicircle at infinity where the integral over the semicircle goes to zero by Jordan's lemma, leaving the original integral equal to the residue theorem applied to the...
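For the integrand 1/(x² + a²)² listed above, the residue calculation can be compared with brute-force quadrature. The sketch assumes a > 0 and closes the contour in the upper half-plane, where the only pole is the double pole at z = ia; the helper names and the value a = 2 are illustrative.

```python
import math


def residue_value(a):
    """Integral over the real line of 1/(x^2 + a^2)^2 from the residue theorem (a > 0)."""
    # Residue at the double pole z = ia is 1 / (4 i a^3); 2*pi*i times it gives pi / (2 a^3).
    residue = 1 / (4j * a ** 3)
    return (2j * math.pi * residue).real


def midpoint_integral(a, half_width=100.0, steps=200_000):
    """Midpoint-rule estimate on [-half_width, half_width]; the neglected tails are tiny."""
    h = 2 * half_width / steps
    total = 0.0
    for k in range(steps):
        x = -half_width + (k + 0.5) * h
        total += 1.0 / (x * x + a * a) ** 2
    return total * h


a = 2.0
exact = residue_value(a)
numeric = midpoint_integral(a)
print(exact, numeric)
```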

lec37.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

1) The document discusses evaluating contour integrals using the residue theorem. It provides examples of calculating residues and evaluating integrals where the contour encloses poles.

2) The residue of a function f(z) at a pole z=a is the coefficient of the (z-a)^-1 term in the Laurent series expansion of f(z) about z=a.

3) According to the residue theorem, the value of a contour integral of a function along a closed loop is equal to 2πi times the sum of the residues of the function at the poles enclosed by the contour.
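The statement in item 3 can be verified numerically for a simple case. In this sketch the function 1/(z² + 1) and the circle |z − i| = 1 are assumptions chosen so that exactly one pole (z = i, residue 1/(2i)) lies inside the contour.

```python
import cmath
import math


def contour_integral(f, center, radius, samples=2000):
    """Integrate f counter-clockwise around a circle using equally spaced samples."""
    total = 0j
    dtheta = 2 * math.pi / samples
    for k in range(samples):
        e = cmath.exp(1j * k * dtheta)
        z = center + radius * e
        dz = 1j * radius * e * dtheta
        total += f(z) * dz
    return total


def f(z):
    return 1 / (z * z + 1)           # simple poles at z = i and z = -i


integral = contour_integral(f, center=1j, radius=1.0)
predicted = 2j * math.pi * (1 / 2j)  # 2*pi*i times the residue at z = i
print(integral, predicted)
```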

lec23.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

1) The document discusses representation of the Dirac delta function in cylindrical and spherical coordinate systems. With r and r′ denoting position vectors on the left-hand side, it shows that δ(r − r′) = δ(ρ − ρ′)δ(φ − φ′)δ(z − z′)/ρ in cylindrical coordinates and δ(r − r′) = δ(r − r′)δ(θ − θ′)δ(φ − φ′)/(r² sin θ) in spherical coordinates.

2) It also derives the important relation ∇²(1/r) = −4πδ(r) and shows its application to the Laplace equation for electrostatic potential.

3) The completeness of eigenfunctions of harmonic oscillators and Legend...

lec21.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

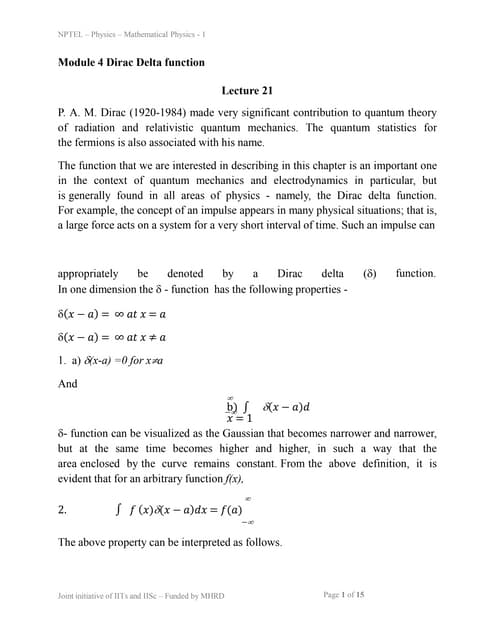

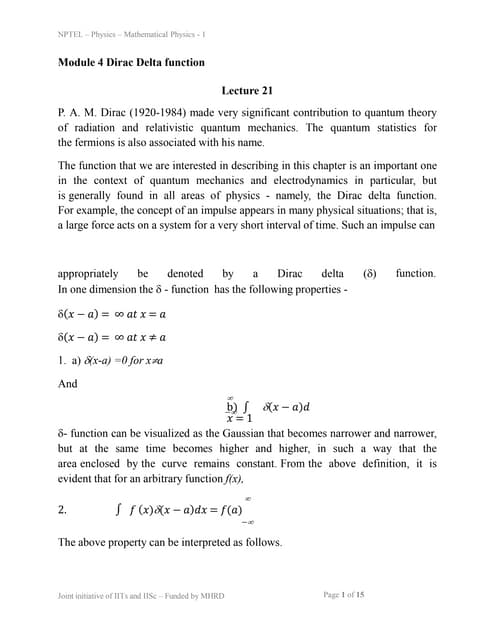

1. The Dirac delta function is an important concept in quantum mechanics and electrodynamics that describes an impulse or large force acting over a very short time interval.

2. The key properties of the Dirac delta function are that it vanishes everywhere except at a single point, where it is formally infinite, and that its integral over its entire range is equal to one.

3. The Dirac delta function can be used to find the value of an arbitrary function f(x) at a specific point a, as the integral of f(x) multiplied by δ(x − a) over all x is equal to f(a).
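The sifting property in item 3 can be illustrated by replacing the delta function with a very narrow normalised Gaussian. This is only an approximation under assumed parameters (width ε = 10⁻³, test function cos x, point a = 0.5); the helper names are hypothetical.

```python
import math


def gaussian_delta(x, eps):
    """Normalised Gaussian of width eps, a smooth stand-in for the delta function."""
    return math.exp(-x * x / (2 * eps * eps)) / (eps * math.sqrt(2 * math.pi))


def sift(f, a, eps=1e-3, steps=20_000):
    """Midpoint-rule estimate of the integral of f(x) * delta_eps(x - a)."""
    lo, hi = a - 10 * eps, a + 10 * eps      # the Gaussian is negligible outside this window
    h = (hi - lo) / steps
    total = 0.0
    for k in range(steps):
        x = lo + (k + 0.5) * h
        total += f(x) * gaussian_delta(x - a, eps)
    return total * h


value = sift(math.cos, 0.5)
print(value, math.cos(0.5))                  # the two numbers agree closely
```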

lec20.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

This document contains a series of tutorial problems related to matrices and linear algebra. Problem 1 asks to invert a 3x3 matrix. Problem 2 asks to write a vector as a linear combination of two other vectors. Problem 3 involves finding the inverse, trace, and determinant of related matrices. Problem 4 proves a property about powers of similar matrices. Problem 5 diagonalizes a 2x2 matrix and finds its eigenvalues and eigenvectors.

lec19.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

This document discusses finding the eigenvalues and eigenvectors of the spin operator of a spin-1/2 particle along an arbitrary direction. It shows that the eigenvalue equation reduces to a set of two linear, homogeneous equations. The eigenvalues are found to be ±1/2, and the corresponding eigenvectors are written in terms of the direction angles θ and Φ. As an example, it shows that for a spin oriented along the z-axis, the eigenvectors reduce to simple forms as expected for a spin-1/2 particle. It also introduces the Gauss elimination method for numerically solving systems of linear equations that arise in eigenvalue problems.
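The eigenvalue problem described above can be checked with a few lines of arithmetic. The sketch assumes units with ħ = 1, the standard Pauli-matrix representation, and one common phase convention for the eigenvectors; the slides may use a different but equivalent convention.

```python
import cmath
import math


def spin_matrix(theta, phi):
    """S.n for spin 1/2 (hbar = 1), with n = (sin t cos p, sin t sin p, cos t)."""
    return [[0.5 * math.cos(theta), 0.5 * math.sin(theta) * cmath.exp(-1j * phi)],
            [0.5 * math.sin(theta) * cmath.exp(1j * phi), -0.5 * math.cos(theta)]]


def spin_up(theta, phi):
    """Eigenvector for eigenvalue +1/2 (assumed phase convention)."""
    return [math.cos(theta / 2), cmath.exp(1j * phi) * math.sin(theta / 2)]


def spin_down(theta, phi):
    """Eigenvector for eigenvalue -1/2 (assumed phase convention)."""
    return [-math.sin(theta / 2), cmath.exp(1j * phi) * math.cos(theta / 2)]


def residual(m, v, lam):
    """Largest component of m v - lam v; zero for an exact eigenpair."""
    return max(abs(m[i][0] * v[0] + m[i][1] * v[1] - lam * v[i]) for i in range(2))


theta, phi = 1.1, 0.4
m = spin_matrix(theta, phi)
err_up = residual(m, spin_up(theta, phi), +0.5)
err_down = residual(m, spin_down(theta, phi), -0.5)
z_axis_up = spin_up(0.0, 0.0)        # reduces to (1, 0) along the z-axis
print(err_up, err_down, z_axis_up)
```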

lec18.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

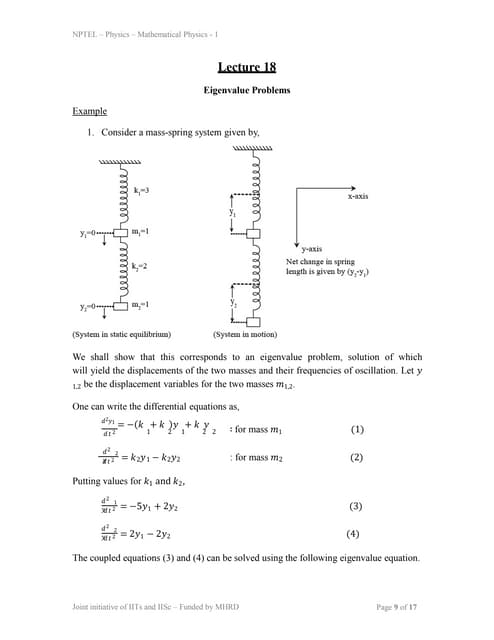

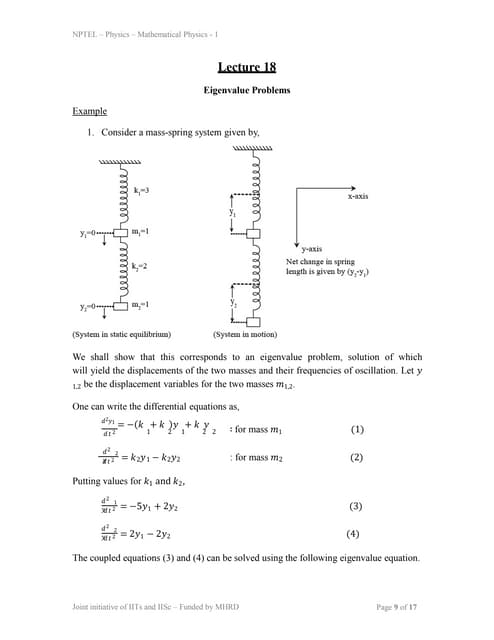

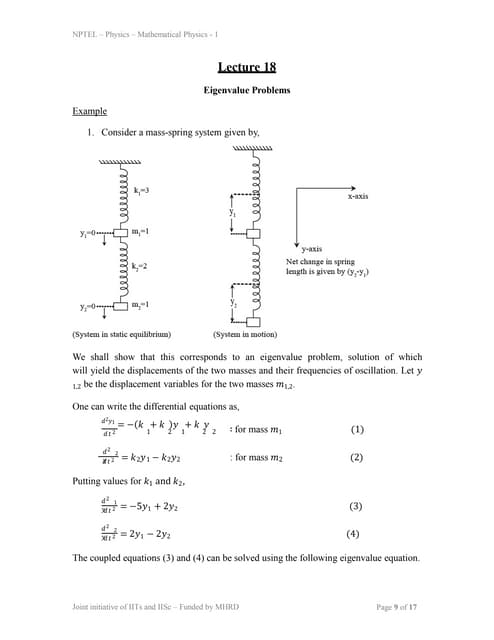

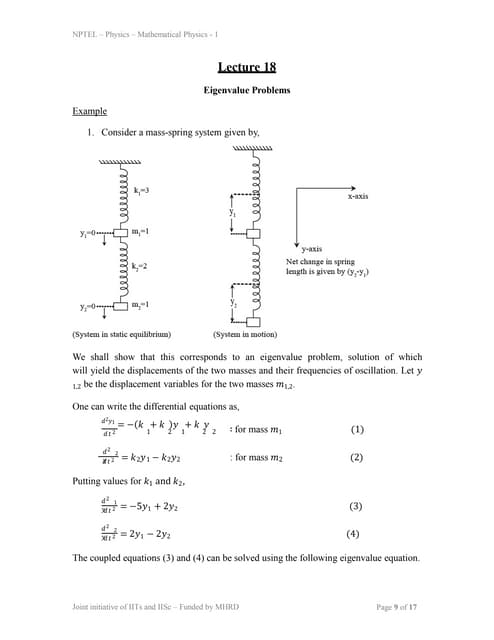

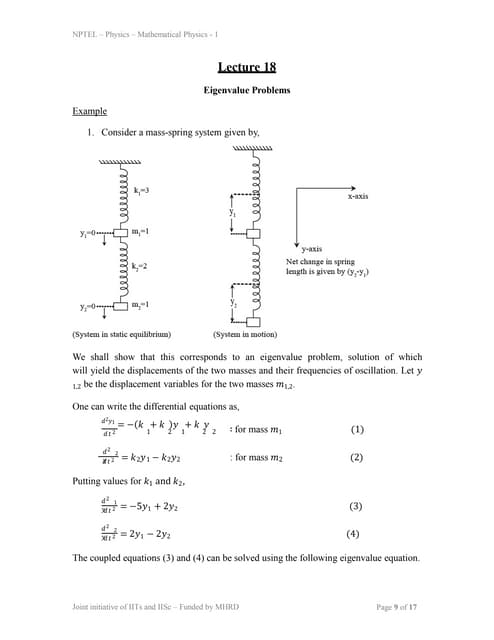

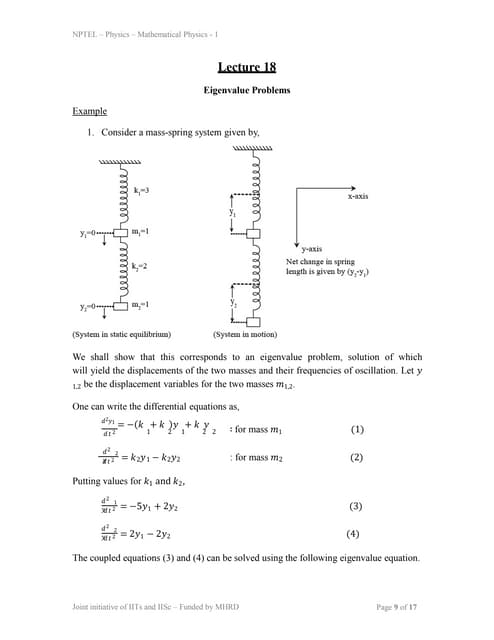

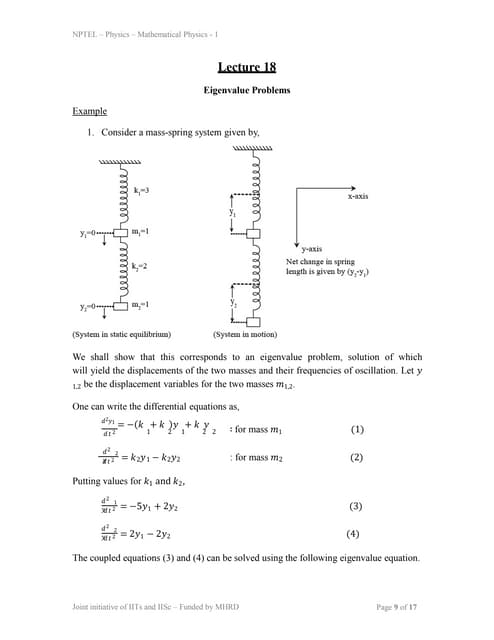

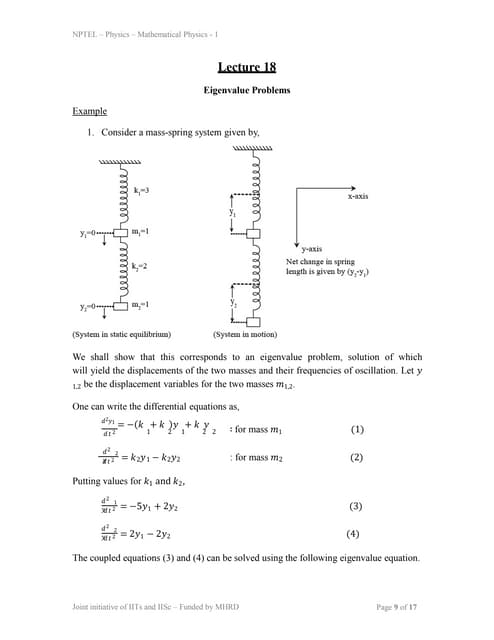

This document discusses solving a mass-spring system as an eigenvalue problem. It:

1) Sets up differential equations to model the displacements of two masses connected by springs.

2) Transforms the coupled differential equations into a matrix eigenvalue equation.

3) Solves the eigenvalue equation to obtain the normal-mode frequencies of oscillation of the two masses.

4) Combines the eigenvectors with complex exponential functions to obtain general solutions for the displacements of each mass over time.
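The four steps above can be sketched for one concrete arrangement. The configuration here is an assumption, since the summary does not specify it: two equal masses m joined to each other and to two fixed walls by three identical springs k, which gives m x₁″ = −2k x₁ + k x₂ and m x₂″ = k x₁ − 2k x₂.

```python
import cmath
import math


def symmetric_2x2_eigen(a, b, d):
    """Eigenvalues (ascending) of the symmetric matrix [[a, b], [b, d]]."""
    mean = (a + d) / 2
    spread = math.sqrt(((a - d) / 2) ** 2 + b * b)
    return mean - spread, mean + spread


k, m = 4.0, 1.0                                    # assumed spring constant and mass
low, high = symmetric_2x2_eigen(2 * k / m, -k / m, 2 * k / m)
omega_in_phase = math.sqrt(low)                    # eigenvector (1, 1): masses move together
omega_out_of_phase = math.sqrt(high)               # eigenvector (1, -1): masses move oppositely


def displacements(t, c1, c2):
    """Real part of c1 (1, 1) e^{i w1 t} + c2 (1, -1) e^{i w2 t}."""
    mode1 = c1 * cmath.exp(1j * omega_in_phase * t)
    mode2 = c2 * cmath.exp(1j * omega_out_of_phase * t)
    return (mode1 + mode2).real, (mode1 - mode2).real


print(omega_in_phase, omega_out_of_phase, displacements(0.0, 1.0, 0.5))
```

For this symmetric setup the squared frequencies are k/m and 3k/m; a different spring arrangement would change the matrix but not the procedure.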

lec17.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

This document discusses linear transformations and matrices. It introduces how linear transformations on physical quantities are usually described by matrices, where a column vector u representing a physical quantity is transformed into another column vector Au by a transformation matrix A. As an example, it discusses orthogonal transformations, where the transformation matrix A is orthogonal. It proves that for an orthogonal transformation, the inner product of two vectors remains invariant. It also discusses properties of other types of matrices like Hermitian, skew-Hermitian and unitary matrices.
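The invariance of the inner product under an orthogonal transformation can be seen in a short numerical check. The rotation about the z-axis and the two sample vectors are assumptions chosen for illustration.

```python
import math


def rotation_z(angle):
    """Orthogonal matrix for a rotation by the given angle about the z-axis."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def apply(a, u):
    """Matrix-vector product A u for a 3x3 matrix."""
    return [sum(a[i][j] * u[j] for j in range(3)) for i in range(3)]


def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


A = rotation_z(0.8)
u, v = [1.0, 2.0, 3.0], [-1.0, 0.5, 2.0]
before = dot(u, v)
after = dot(apply(A, u), apply(A, v))    # (Au).(Av) = u.(A^T A)v = u.v
print(before, after)
```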

lec16.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

This document discusses properties of symmetric, skew-symmetric, and orthogonal matrices. It defines each type of matrix and provides examples. Key points include:

- Symmetric matrices have A_ij = A_ji for all i and j. Skew-symmetric matrices have A_ij = −A_ji. Orthogonal matrices satisfy Aᵀ = A⁻¹.

- The eigenvalues of real symmetric matrices are always real. The eigenvalues of real skew-symmetric matrices are either zero or purely imaginary.

- Any real square matrix can be written as the sum of a symmetric matrix and a skew-symmetric matrix.
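The decomposition in the last bullet is explicit: S = (A + Aᵀ)/2 is symmetric and K = (A − Aᵀ)/2 is skew-symmetric. A minimal sketch, with an arbitrary sample matrix chosen for illustration:

```python
def transpose(a):
    return [list(row) for row in zip(*a)]


def split_symmetric_skew(a):
    """Return (S, K) with S symmetric, K skew-symmetric and S + K = A."""
    t = transpose(a)
    n = len(a)
    sym = [[(a[i][j] + t[i][j]) / 2 for j in range(n)] for i in range(n)]
    skew = [[(a[i][j] - t[i][j]) / 2 for j in range(n)] for i in range(n)]
    return sym, skew


A = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 10.0]]
S, K = split_symmetric_skew(A)
print(S)
print(K)
```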

lec30.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

1) The document discusses calculating the moment of inertia tensor for a cylinder with radius R and height H. It is shown that the only non-zero components of the inertia tensor are I_xx = (3MR² + 4MH²)/12, which corresponds to an origin at the centre of an end face, I_yy = I_xx, and I_zz = MR²/2.

2) Equations for velocity, acceleration, and the Christoffel symbols in an arbitrary coordinate system are presented. Expressions for calculating acceleration in cylindrical coordinates using the metric tensor and Christoffel symbols are given.

lec28.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

Tensors obey algebraic properties including addition, multiplication, contraction, and symmetrization. Addition of tensors of the same type adds their components. Multiplication of tensors combines their indices, so the rank of the product is the sum of the ranks. Contraction sets a covariant index equal to a contravariant index and sums over it, reducing the tensor's rank by two. Symmetric tensors are unchanged under index interchange, while antisymmetric tensors change sign.

lec27.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

1) The document discusses tensors with multiple indices and the cross product of two vectors A and B. The components of the cross product vector C are given by the antisymmetric combinations A_i B_j − A_j B_i.

2) It describes how tensor components transform between coordinate systems using transformations of partial derivatives. The transformation property for cross products is derived.

3) Tensors are classified by their rank, which is the total number of covariant and contravariant indices. Vectors have a rank of 1. Examples calculate tensor components in different coordinate systems.

lec26.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

1) There are two types of vectors: contravariant vectors, whose components transform according to Equation 1, and covariant vectors, whose components transform according to Equation 2.

2) The dot product of two contravariant or two covariant vectors is not, in general, independent of the coordinate system.

3) The dot product of a contravariant and a covariant vector is independent of the coordinate system.
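Points 2 and 3 can be illustrated with a linear, non-orthogonal change of coordinates. The summary does not reproduce Equations 1 and 2, so the sketch assumes the standard laws: contravariant components transform with the Jacobian J = ∂x′/∂x, and covariant components with the inverse transpose of J. The matrix and vectors are arbitrary sample values.

```python
def mat_vec(m, v):
    return [m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1]]


def inverse_transpose(m):
    """Transpose of the inverse of a 2x2 matrix."""
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    inv = [[m[1][1] / det, -m[0][1] / det], [-m[1][0] / det, m[0][0] / det]]
    return [[inv[0][0], inv[1][0]], [inv[0][1], inv[1][1]]]


def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


J = [[2.0, 1.0], [0.0, 3.0]]          # assumed Jacobian of a non-orthogonal transformation
A = [1.0, 2.0]                        # contravariant components
B = [3.0, -1.0]                       # contravariant components
w = [0.5, 4.0]                        # covariant components

A_new, B_new = mat_vec(J, A), mat_vec(J, B)
w_new = mat_vec(inverse_transpose(J), w)

mixed_before, mixed_after = dot(A, w), dot(A_new, w_new)          # invariant
same_type_before, same_type_after = dot(A, B), dot(A_new, B_new)  # changes
print(mixed_before, mixed_after, same_type_before, same_type_after)
```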

lec25.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

The document discusses the transformation properties of vectors between two coordinate systems. It states that if a vector has components (x_1, x_2, x_3) in one coordinate system and (x̄_1, x̄_2, x̄_3) in another, there is a relation such that x̄_i = a_i1 x_1 + a_i2 x_2 + a_i3 x_3, where the a_ij are the components of the transformation matrix. This relation can be written compactly as x̄_i = a_ij x_j, using the Einstein summation convention. The document then presents two theorems establishing that a set of functions can represent a tensor if their inner product with an arbitrary tensor itself transforms as a tensor under coordinate transformations.

lec2.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

1) The document discusses the gradient operator and provides examples of calculating the electric field and gradient of functions.

2) It defines the gradient operator mathematically and provides an example of calculating the gradient of a scalar function.

3) Examples are given of using the gradient operator to calculate the electric field from a potential and the gradient of 1/r.
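The gradient of 1/r mentioned in item 3 has the closed form −r/r³, where r in the numerator is the position vector. The sketch below compares this with a central-difference estimate; the step size and sample point are assumptions for the example.

```python
import math


def grad(f, p, h=1e-5):
    """Central-difference gradient of a scalar function f at the point p."""
    g = []
    for i in range(3):
        plus, minus = list(p), list(p)
        plus[i] += h
        minus[i] -= h
        g.append((f(plus) - f(minus)) / (2 * h))
    return g


def inv_r(p):
    return 1.0 / math.sqrt(sum(c * c for c in p))


p = [1.0, 2.0, 2.0]                      # a point with r = 3
r = math.sqrt(sum(c * c for c in p))
numeric = grad(inv_r, p)
analytic = [-c / r ** 3 for c in p]      # grad(1/r) = -r_vec / r^3
print(numeric, analytic)
```

Since E = −∇V, a potential proportional to 1/r gives a field pointing radially outward with magnitude proportional to 1/r².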

lec1.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

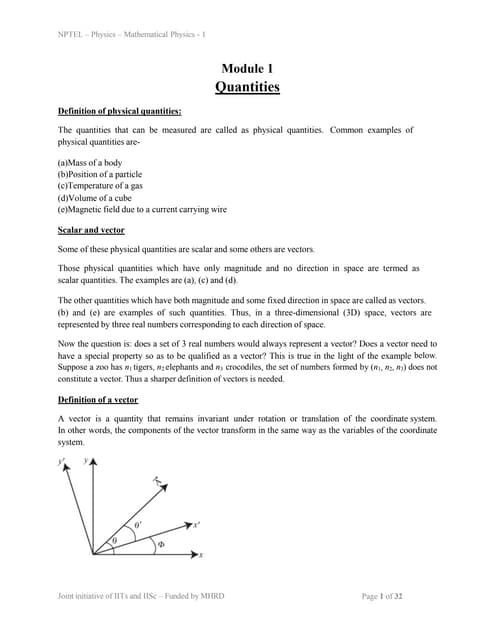

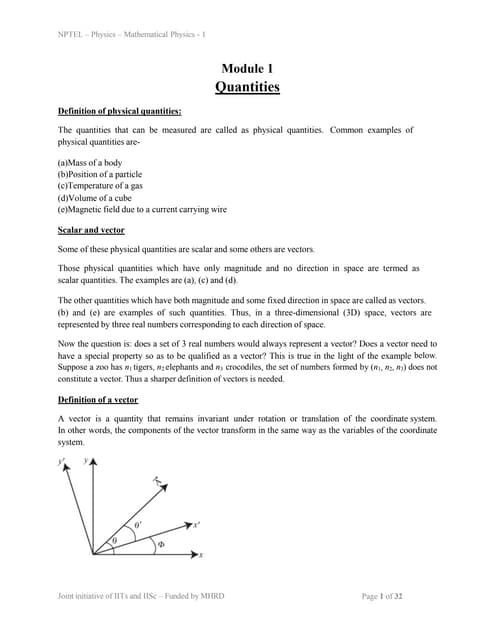

This document defines vectors and scalar quantities, and describes their key properties and relationships. It begins by defining physical quantities that can be measured, and distinguishes between scalar and vector quantities. Scalars have only magnitude, while vectors have both magnitude and direction. The document then provides a more rigorous definition of vectors as quantities that remain invariant under coordinate system rotations or translations. It describes how to represent and transform vectors between different coordinate systems. Vector addition, subtraction, and multiplication operations like the scalar and vector products are defined. Derivatives of vectors are also discussed. Examples of velocity and acceleration vectors in uniform circular motion are provided.

lec15.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

The rank of a matrix is the maximum number of linearly independent rows. A matrix has rank 0 only if it is the zero matrix. The inverse of a square matrix A, denoted A^-1, is defined such that A A^-1 = A^-1 A = I, the identity matrix. To calculate the inverse, one takes the transpose of the cofactor matrix and divides each element by the determinant of the original matrix, which must be non-zero.
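The cofactor recipe above can be sketched for a 3×3 matrix. The function names and the sample matrix are assumptions for illustration; for larger matrices elimination methods are usually preferred.

```python
def minor(a, i, j):
    """2x2 matrix left after deleting row i and column j of a 3x3 matrix."""
    return [[a[r][c] for c in range(3) if c != j] for r in range(3) if r != i]


def det2(m):
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def cofactor_matrix(a):
    return [[(-1) ** (i + j) * det2(minor(a, i, j)) for j in range(3)] for i in range(3)]


def inverse(a):
    """Inverse as the transposed cofactor matrix divided by the determinant."""
    cof = cofactor_matrix(a)
    det = sum(a[0][j] * cof[0][j] for j in range(3))   # expansion along the first row
    if det == 0:
        raise ValueError("matrix is singular")
    return [[cof[j][i] / det for j in range(3)] for i in range(3)]


A = [[2.0, 0.0, 1.0], [1.0, 3.0, 2.0], [1.0, 1.0, 2.0]]
A_inv = inverse(A)
product = [[sum(A[i][k] * A_inv[k][j] for k in range(3)) for j in range(3)] for i in range(3)]
print(product)                                          # close to the identity matrix
```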

lec14.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

The document discusses matrix representations of operators and changes of basis in quantum mechanics. Some key points:

- Matrix elements of an operator are computed using a basis of kets. The expectation value of an operator is computed from its matrix elements and the state vectors.

- If two operators commute, they share a common set of eigenkets.

- A change of basis is a unitary transformation that relates two different sets of basis kets that span the same space. It establishes a link between the two basis representations.

- Linear algebra concepts like linear independence of eigenvectors and Hermitian operators having real eigenvalues are important in quantum mechanics.

lec13.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

A Hilbert space is introduced as an infinite-dimensional vector space consisting of sequences of real numbers that satisfy a convergence condition. It allows vector addition and scalar multiplication. In quantum mechanics, state vectors span a Hilbert space. For identical particles, boson state vectors are symmetric and fermion state vectors are antisymmetric under particle exchange. Linear algebra concepts like operators, eigenvectors, and superposition are used in Dirac's formulation of the postulates of quantum mechanics. Observables are represented by operators, each eigenvector of which belongs to an eigenvalue. Any state can be written as a superposition of eigenvectors.

lec11.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

The document discusses the Gram-Schmidt orthogonalization (GSO) process for constructing an orthonormal basis from a set of linearly independent vectors. It explains that GSO works by taking a vector, normalizing it to unit length to create the first basis vector, then subtracting the component of the next vector along this first vector to make it orthogonal, and repeating this process to iteratively construct an orthonormal basis. An example applies GSO to three vectors in R3, finding the orthonormal basis vectors by removing components along each preceding vector at each step.
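The procedure described above can be sketched as follows. The three input vectors are an assumption (the summary does not list the ones used in the slides), and the loop projects the running remainder at each step, a variant that gives the same result in exact arithmetic and is usually more stable numerically.

```python
import math


def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def gram_schmidt(vectors):
    """Return an orthonormal basis spanning the given linearly independent vectors."""
    basis = []
    for v in vectors:
        w = list(v)
        for e in basis:
            coeff = dot(w, e)                              # component along an earlier basis vector
            w = [wi - coeff * ei for wi, ei in zip(w, e)]  # remove that component
        norm = math.sqrt(dot(w, w))
        if norm < 1e-12:
            raise ValueError("vectors are linearly dependent")
        basis.append([wi / norm for wi in w])              # normalise to unit length
    return basis


basis = gram_schmidt([[1.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 1.0]])
for e in basis:
    print(e)
```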

lec10.ppt

Rai Saheb Bhanwar Singh College Nasrullaganj

The document discusses similarity transformations and the Cauchy-Schwarz inequality. It states that if matrix A is similar to matrix B, then B is similar to A, and if A is similar to B and B is similar to C, then A is similar to C. It also proves that if A is similar to B and both are invertible, then the inverse of A is similar to the inverse of B. Additionally, it defines the inner product and norm of vectors, and proves the Cauchy-Schwarz inequality: the square of the inner product of two vectors is less than or equal to the product of the squares of their norms. It provides an example using the Cauchy-Schwarz inequality to prove an inequality involving positive real numbers.
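The Cauchy-Schwarz inequality can be checked numerically, along with one classic consequence for positive reals. The inequality (a + b + c)(1/a + 1/b + 1/c) ≥ 9 is an illustrative choice and not necessarily the example used in the slides; it follows by applying Cauchy-Schwarz to (√a, √b, √c) and (1/√a, 1/√b, 1/√c).

```python
def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def cauchy_schwarz_gap(u, v):
    """|u|^2 |v|^2 - (u.v)^2, which is non-negative and zero for parallel vectors."""
    return dot(u, u) * dot(v, v) - dot(u, v) ** 2


gap = cauchy_schwarz_gap([1.0, 2.0, 3.0], [4.0, -1.0, 0.5])
parallel_gap = cauchy_schwarz_gap([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])

a, b, c = 0.5, 2.0, 7.0                       # arbitrary positive reals
lhs = (a + b + c) * (1 / a + 1 / b + 1 / c)   # bounded below by (1 + 1 + 1)^2 = 9
print(gap, parallel_gap, lhs)
```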
