Chapter 10: Sequence Modeling: Recurrent and Recursive Nets

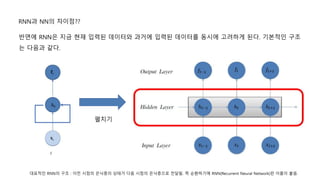

- 2. Today we will learn about RNNs (recurrent neural networks). We will introduce what they can do and look at how they are structured internally.

- 3. Where RNNs are strong: RNNs perform well when the data is presented sequentially, or when the order of the data (what comes before what) matters.

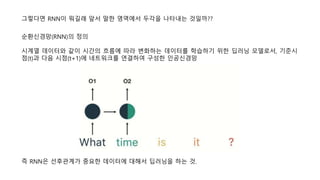

- 4. So what makes RNNs stand out in the domains mentioned above? In short, an RNN is deep learning for data in which order matters. Definition of a recurrent neural network (RNN): a deep learning model for learning data that changes over time, such as time-series data. It is an artificial neural network built by connecting the network at a reference time step $t$ to the network at the next time step $t+1$.

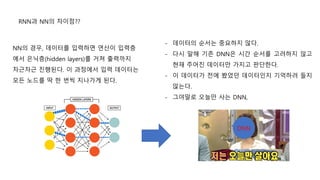

- 5. How does an RNN differ from an ordinary NN? First, the DNN:
  - The order of the data does not matter.
  - In other words, a conventional DNN does not take temporal order into account; it decides based only on the data it is currently given.
  - It makes no attempt to remember whether it has seen this data before.
  - It is, literally, a DNN/NN that lives only for today. When data is fed in, the computation proceeds step by step from the input layer through the hidden layers to the output, and along the way the input passes through each node exactly once.

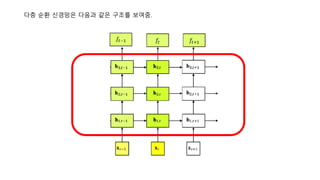

- 6. How does an RNN differ from an ordinary NN? (unrolled view) The typical RNN structure: the state of the hidden layer at the previous time step is passed on to the hidden layer at the next time step. Because the state circulates (recurs) in this way, the model is called a recurrent neural network (RNN). Unlike a DNN, then, an RNN considers both the data input right now and the data input in the past. The basic structure is shown below.

- 7. A multi-layer (deep) recurrent neural network has the structure shown below.

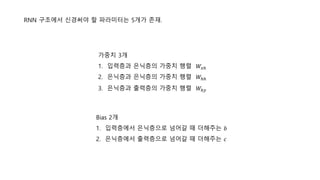

- 8. An RNN has five parameters to keep track of.
  - Three weight matrices:
    1. $W_{xh}$, the weights between the input layer and the hidden layer
    2. $W_{hh}$, the weights between the hidden layer (previous time step) and the hidden layer (current time step)
    3. $W_{hy}$, the weights between the hidden layer and the output layer
  - Two biases:
    1. $b$, added when going from the input layer to the hidden layer
    2. $c$, added when going from the hidden layer to the output layer

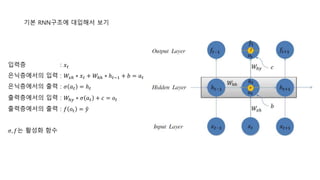

- 9. The basic RNN computation ($\sigma$ and $f$ are activation functions):
  - Input layer: $x_t$
  - Input to the hidden layer: $a_t = W_{xh} x_t + W_{hh} h_{t-1} + b$
  - Output of the hidden layer: $h_t = \sigma(a_t)$
  - Input to the output layer: $o_t = W_{hy} \sigma(a_t) + c = W_{hy} h_t + c$
  - Output of the output layer: $f(o_t)$

- 10. Mapping this onto the basic RNN diagram: the equations are the same as in slide 9, and the output of the output layer is written $y_t = f(o_t)$, where $\sigma$ and $f$ are activation functions.
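The slide-9 equations can be sketched as a single time step in plain Python. This is a minimal illustration, not the author's code. The slides leave $\sigma$ and $f$ unspecified, so choosing tanh for $\sigma$ and softmax for $f$ is an assumption here. Matrices are lists of rows, and `matvec`, `softmax` and `rnn_step` are hypothetical helper names.

```python
import math


def matvec(W, v):
    """Multiply a matrix W (a list of rows) by a vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def softmax(z):
    """Numerically stable softmax (our assumed choice for f)."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def rnn_step(x_t, h_prev, W_xh, W_hh, W_hy, b, c):
    """One time step of the basic RNN from slides 9-10."""
    # a_t = W_xh x_t + W_hh h_{t-1} + b
    a_t = [u + v + bi for u, v, bi in zip(matvec(W_xh, x_t), matvec(W_hh, h_prev), b)]
    # h_t = sigma(a_t), with sigma assumed to be tanh
    h_t = [math.tanh(a) for a in a_t]
    # o_t = W_hy h_t + c
    o_t = [u + ci for u, ci in zip(matvec(W_hy, h_t), c)]
    # y_t = f(o_t), with f assumed to be softmax
    return h_t, softmax(o_t)
```

To process a whole sequence, call `rnn_step` once per time step and feed the returned `h_t` back in as `h_prev` on the next call.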

- 11. Example: predicting the word "hello" by feeding it in one character at a time. First, given the input h [1,0,0,0], training pushes the network to predict the output e [0,1,0,0]. Training then proceeds in the same way for l [0,0,1,0] and o [0,0,0,1].
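The training data for this example can be built as below: each character's one-hot vector is paired with the one-hot vector of the next character. This is a sketch. The vocabulary order `["h", "e", "l", "o"]` matches the vectors on the slide, and the function names are hypothetical.

```python
def one_hot(ch, vocab):
    """Return the one-hot vector for character ch over vocab."""
    vec = [0] * len(vocab)
    vec[vocab.index(ch)] = 1
    return vec


def char_training_pairs(word, vocab):
    """Pair each input character with the next character as its target."""
    return [(one_hot(a, vocab), one_hot(b, vocab)) for a, b in zip(word, word[1:])]


vocab = ["h", "e", "l", "o"]  # 4-character vocabulary from the slide
pairs = char_training_pairs("hello", vocab)
for x, y in pairs:
    print(x, "->", y)
# [1, 0, 0, 0] -> [0, 1, 0, 0]
# [0, 1, 0, 0] -> [0, 0, 1, 0]
# [0, 0, 1, 0] -> [0, 0, 1, 0]
# [0, 0, 1, 0] -> [0, 0, 0, 1]
```

The last two pairs show why the hidden state matters: the same input "l" must produce "l" once and "o" the next time. A network without memory of earlier characters cannot tell the two cases apart.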

- 12. Characteristics of RNNs: an RNN like this has one distinctive property: it shares its parameters across time steps. Whether the input is $x_{t-1}$ or $x_{t+4}$, the same weight matrix $W_{xh}$ is used. Likewise, the same $W_{hh}$ is used going from $h_t$ to $h_{t+1}$ and from $h_{t+17}$ to $h_{t+18}$, and the same holds for the other parameters. As a result, the number of parameters does not grow with the sequence length and training becomes faster. On the other hand, finding the parameters that minimize the loss (optimization) can be somewhat harder.
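Parameter sharing can be seen in two ways: by counting parameters, and by unrolling a one-unit RNN. In both cases the sequence length never enters the parameter set. This is a minimal sketch. The scalar RNN with tanh is a simplification we chose, and the function names are hypothetical.

```python
import math


def count_rnn_params(input_dim, hidden_dim, output_dim):
    """Parameters of the basic RNN: W_xh, W_hh, W_hy, b, c.
    The sequence length does not appear anywhere in this count."""
    return (hidden_dim * input_dim     # W_xh
            + hidden_dim * hidden_dim  # W_hh
            + output_dim * hidden_dim  # W_hy
            + hidden_dim               # b
            + output_dim)              # c


def unroll_scalar_rnn(xs, w_xh, w_hh, b, h0=0.0):
    """Unroll a 1-unit RNN over the whole sequence xs.
    The same w_xh, w_hh and b are reused at every time step."""
    h = h0
    states = []
    for x in xs:
        h = math.tanh(w_xh * x + w_hh * h + b)
        states.append(h)
    return states
```

For example, `count_rnn_params(4, 8, 4)` (a 4-symbol vocabulary as in "hello" with an assumed hidden size of 8) gives 140. That count is the same whether the sequences have 5 steps or 500.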

- 13. Bidirectional RNN: an RNN that uses past information can already perform well on its own, but also using information from later in the sequence can give even better results. Example: in the sentence "In the blue sky, a _____ is floating", the words "blue" and "sky" already suggest "cloud" for the blank. The later information "is floating" lets the model predict "cloud" more accurately.

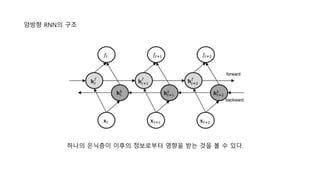

- 14. Structure of a bidirectional RNN: the figure shows that a hidden layer is also influenced by information from later time steps.
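The bidirectional idea can be sketched by running one RNN left to right and a second one right to left. The backward states are then re-aligned to the original time order, and the two states are paired at each step. This is a simplified sketch under stated assumptions. Real bidirectional RNNs use hidden vectors and concatenate them. Here each direction is a one-unit tanh RNN with its own made-up scalar parameters `(w_x, w_h, b)`, and the function names are hypothetical.

```python
import math


def run_direction(xs, w_x, w_h, b):
    """Scalar tanh RNN over xs; returns one hidden state per time step."""
    h, out = 0.0, []
    for x in xs:
        h = math.tanh(w_x * x + w_h * h + b)
        out.append(h)
    return out


def bidirectional(xs, fwd_params, bwd_params):
    """Pair the forward state (past context) with the backward state
    (future context) at every time step."""
    forward = run_direction(xs, *fwd_params)
    # Run over the reversed sequence, then reverse back to original order.
    backward = run_direction(xs[::-1], *bwd_params)[::-1]
    return list(zip(forward, backward))


pairs = bidirectional([0.0, 0.0, 1.0], (1.0, 1.0, 0.0), (1.0, 1.0, 0.0))
```

At time step 0, the forward state is still 0 because nothing non-zero has been seen yet. The backward state is already non-zero because it has read the future input 1.0, like "is floating" after the blank.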

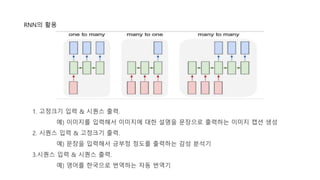

- 15. Applications of RNNs
  1. Fixed-size input & sequence output. Example: image captioning, which takes an image as input and outputs a sentence describing it.
  2. Sequence input & fixed-size output. Example: a sentiment analyzer, which takes a sentence as input and outputs how positive or negative it is.
  3. Sequence input & sequence output. Example: a machine translator that translates English into Korean.

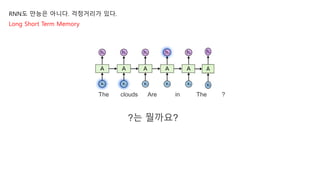

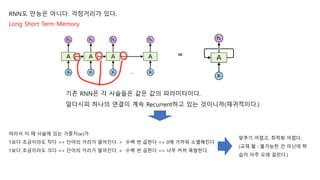

- 16. RNNs are not all-powerful either: there is a concern. (Long Short-Term Memory) "The clouds are in the ?" What goes in "?"?

- 17. To predict the last word given the input "the clouds are in the", we usually need no further context (information): the next word is clearly likely to be "sky". When the gap between the relevant information and the position to be predicted is small, as here, an RNN can easily learn from the past data.

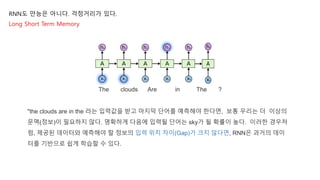

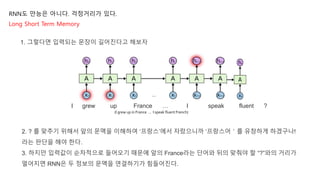

- 18. A longer gap:
  1. Now suppose the input sentence gets longer: "I grew up in France ... I speak fluent ?" (the intended sentence is "I grew up in France ... I speak fluent French").
  2. To fill in "?", the model has to understand the earlier context and conclude: "It's France, so the answer should be French!"
  3. But the inputs arrive one after another. Once the earlier word "France" and the "?" to be filled are far apart, it becomes hard for an RNN to connect the context of the two.

- 19. Why does filling in the blank get harder as the distance grows?

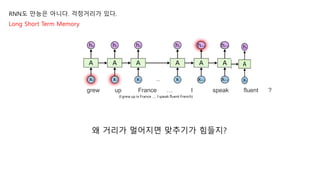

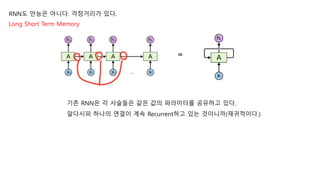

- 20. In a conventional RNN, every step shares parameters with the same values. As you know, the same single connection keeps recurring (it is recurrent).

- 21. So if the weight $w$ on this chain is even slightly smaller than 1, it is multiplied hundreds of times as the distance between words grows, and it shrinks toward 0 (vanishing). If it is even slightly larger than 1, the same repeated multiplication makes it blow up (exploding). Either way the blank is hard to fill and the model is hard to optimize. (Professor's comment: it is not impossible, but training takes a very long time.)
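The arithmetic behind this slide fits in a few lines. The same weight is multiplied in once per time step over 100 steps. This is a deliberately simplified scalar picture. In a real RNN the repeated factor involves $W_{hh}$ and the activation's derivative, not a single number. `repeated_product` is a hypothetical helper.

```python
def repeated_product(w, steps):
    """Multiply by the same weight w once per time step, `steps` times,
    as happens when one shared recurrent weight is applied along a long chain."""
    value = 1.0
    for _ in range(steps):
        value *= w
    return value


for w in (0.9, 1.0, 1.1):
    print(w, repeated_product(w, 100))
```

After 100 steps, 0.9 shrinks to roughly $3 \times 10^{-5}$ while 1.1 grows to roughly $1.4 \times 10^{4}$. Only a weight of exactly 1 stays stable.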

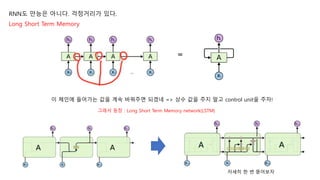

- 22. What if we could keep changing the value that goes into this chain? => Instead of giving it a constant value, let's give it a control unit! Enter the Long Short-Term Memory network (LSTM). Let's take a closer look.

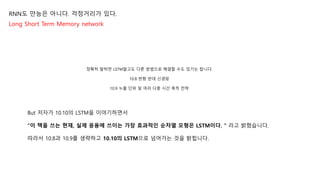

- 23. Strictly speaking, the problem can also be addressed by methods other than LSTM: 10.8 Echo State Networks and 10.9 Leaky Units and Other Strategies for Multiple Time Scales. However, when introducing LSTM in 10.10, the author states that "as of this writing, the most effective sequence models used in practical applications are called gated RNNs", a family that includes the LSTM. So we skip 10.8 and 10.9 and move straight on to LSTM in 10.10.

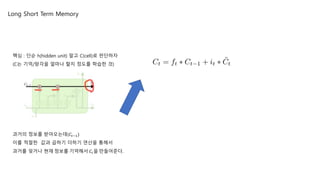

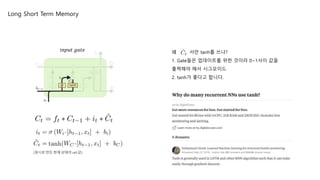

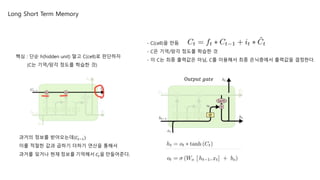

- 24. Long Short-Term Memory. Key idea: think in terms of C (the cell), not just h (the hidden unit). (C is what learns how much to remember and how much to forget.)

- 25. The LSTM receives past information ($C_{t-1}$). Through multiplication and addition with suitable values, it either forgets the past or remembers the current information, producing $C_t$.

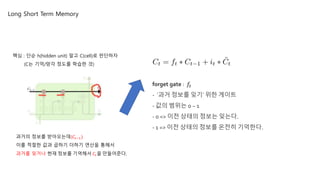

- 26. Forget gate $f_t$:
  - the gate for "forgetting past information"
  - its value ranges from 0 to 1
  - 0 => the information from the previous state is forgotten
  - 1 => the information from the previous state is fully remembered

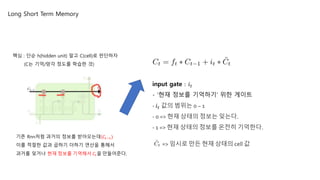

- 27. Input gate $i_t$:
  - the gate for "remembering current information"
  - $i_t$ ranges from 0 to 1
  - 0 => the current information is discarded
  - 1 => the current information is fully remembered
  - it is applied to $\tilde{C}_t$, the temporary cell value built from the current state
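Together, the forget gate and the input gate perform the "multiply and add" update of slide 25: $C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t$. The sketch below applies that update element-wise to plain lists. The gate values are hand-picked to show the two extremes. In a real LSTM they come from sigmoids. `update_cell` is a hypothetical name.

```python
def update_cell(c_prev, f_t, i_t, c_tilde):
    """C_t = f_t * C_{t-1} + i_t * C~_t, applied element-wise.

    f_t: forget gate (0 = drop the old value, 1 = keep it fully)
    i_t: input gate  (0 = ignore the new candidate, 1 = write it fully)
    """
    return [f * c + i * ct for c, f, i, ct in zip(c_prev, f_t, i_t, c_tilde)]


old, candidate = [2.0, -1.0], [0.5, 0.5]
print(update_cell(old, [1.0, 1.0], [0.0, 0.0], candidate))  # keep the past only -> [2.0, -1.0]
print(update_cell(old, [0.0, 0.0], [1.0, 1.0], candidate))  # take the new candidate -> [0.5, 0.5]
```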

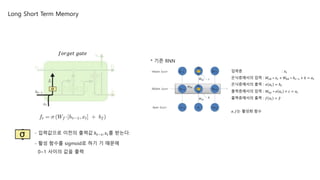

- 28. Forget gate: the activation function is the sigmoid, so the output lies between 0 and 1: $f_t = \sigma(W_f [h_{t-1}, x_t] + b_f)$, where $\sigma$ here denotes the sigmoid.
  - For comparison, the conventional RNN also takes the previous output $h_{t-1}$ and $x_t$ as inputs: $a_t = W_{xh} x_t + W_{hh} h_{t-1} + b$, $h_t = \sigma(a_t)$, $o_t = W_{hy} h_t + c$, $y_t = f(o_t)$, where $\sigma$ and $f$ are activation functions.

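- 29. Input gate and the candidate $\tilde{C}_t$ (the temporary cell value for the current state): $i_t = \sigma(W_i [h_{t-1}, x_t] + b_i)$ and $\tilde{C}_t = \tanh(W_C [h_{t-1}, x_t] + b_C)$. Why is tanh used only here?
  1. The gates control the update, so they must output values between 0 and 1; hence the sigmoid.
  2. For the candidate, tanh is said to work well. Its outputs lie in $(-1, 1)$, so a candidate can push the cell value down as well as up.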

- 30. Output gate $o_t$ (here $o_t$ denotes the output gate, not the output-layer input of slide 9):
  - this is where C (the cell) is produced: $C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t$
  - C learns how much to remember and how much to forget
  - C is not the final output itself; it is used to determine the output of the final hidden layer: $o_t = \sigma(W_o [h_{t-1}, x_t] + b_o)$, $h_t = o_t \odot \tanh(C_t)$
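Putting slides 26 to 30 together gives one step of a standard LSTM cell. The sketch below is plain Python and assumes the common formulation in which every gate sees the concatenation $[h_{t-1}, x_t]$. The `params` layout (gate name mapped to a `(W, b)` pair) and all function names are hypothetical choices for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, b, v):
    """W v + b, where v is already the concatenation [h_{t-1}, x_t]."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]


def lstm_step(x_t, h_prev, c_prev, params):
    """One step of a standard LSTM cell.

    params maps 'f', 'i', 'c', 'o' to (W, b) pairs (hypothetical layout).
    """
    v = h_prev + x_t                                            # [h_{t-1}, x_t]
    f = [sigmoid(z) for z in affine(*params["f"], v)]           # forget gate
    i = [sigmoid(z) for z in affine(*params["i"], v)]           # input gate
    c_tilde = [math.tanh(z) for z in affine(*params["c"], v)]   # candidate C~_t
    o = [sigmoid(z) for z in affine(*params["o"], v)]           # output gate
    c_t = [fg * cp + ig * ct for fg, cp, ig, ct in zip(f, c_prev, i, c_tilde)]
    h_t = [og * math.tanh(ct) for og, ct in zip(o, c_t)]        # h_t = o_t * tanh(C_t)
    return h_t, c_t
```

If the learned forget gate stays near 1 and the input gate near 0, $C_t \approx C_{t-1}$ across many steps. This is how the cell can carry "France" across a long gap without the repeated shrinking seen in slide 21.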

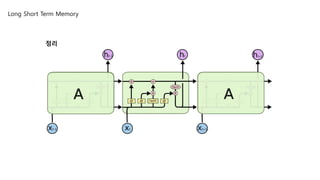

- 31. Summary: the LSTM we have covered so far is a very ordinary one. Not every LSTM has exactly the structure above (though they are all similar).


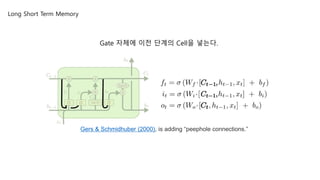

- 33. Peephole connections: the previous cell state is fed into the gates themselves, e.g. $f_t = \sigma(W_f [C_{t-1}, h_{t-1}, x_t] + b_f)$ (Gers & Schmidhuber, 2000).

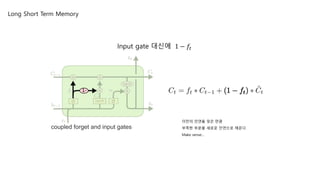

- 34. Coupled forget and input gates: instead of a separate input gate, use $1 - f_t$, so that $C_t = f_t \odot C_{t-1} + (1 - f_t) \odot \tilde{C}_t$. New information fills exactly the share of old information that was forgotten. Makes sense...
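The coupled variant needs only the forget gate: whatever fraction of the old cell value is dropped is refilled from the candidate. This is a minimal element-wise sketch with hand-picked gate values. `coupled_cell_update` is a hypothetical name.

```python
def coupled_cell_update(c_prev, f_t, c_tilde):
    """Coupled gates: C_t = f_t * C_{t-1} + (1 - f_t) * C~_t, element-wise."""
    return [f * c + (1.0 - f) * ct for c, f, ct in zip(c_prev, f_t, c_tilde)]


# Keep 25% of the old value 2.0 and fill the other 75% from the candidate 6.0.
print(coupled_cell_update([2.0], [0.25], [6.0]))  # -> [5.0]
```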

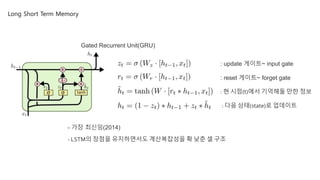

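- 35. Gated Recurrent Unit (GRU)
  - the most recent of these (2014)
  - keeps the strengths of the LSTM while cutting the computational cost (fewer gates and no separate cell state)
  - cell structure:
    - update gate $z_t$: comparable to the input gate (in fact it takes over the roles of both the forget and input gates, deciding how much the state is updated)
    - reset gate $r_t$: comparable to the forget gate (it decides how much of the previous state is used when forming the new candidate)
    - the figure labels mark the information to remember at the current time $t$ and the update to the next state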
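One GRU step can be sketched in the same style as the LSTM step. It assumes the convention $h_t = (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t$, with the candidate computed from $[r_t \odot h_{t-1}, x_t]$. Some references swap the roles of $z_t$ and $1 - z_t$. The `params` layout and all names are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, b, v):
    """W v + b for a list-of-rows matrix W."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]


def gru_step(x_t, h_prev, params):
    """One GRU step; params maps 'z', 'r', 'h' to (W, b) pairs (hypothetical layout)."""
    v = h_prev + x_t                                         # [h_{t-1}, x_t]
    z = [sigmoid(s) for s in affine(*params["z"], v)]        # update gate
    r = [sigmoid(s) for s in affine(*params["r"], v)]        # reset gate
    reset_h = [ri * hp for ri, hp in zip(r, h_prev)]         # r_t * h_{t-1}
    h_tilde = [math.tanh(s) for s in affine(*params["h"], reset_h + x_t)]
    # Interpolate between the old state and the candidate; no separate cell state.
    return [(1.0 - zi) * hp + zi * ht for zi, hp, ht in zip(z, h_prev, h_tilde)]
```

Compared with `lstm_step`, there are three weight sets instead of four and only one state vector. That is where the savings mentioned on the slide come from.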

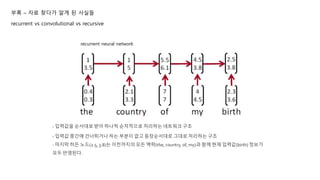

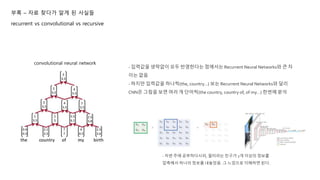

- 36. Appendix: facts learned while looking for material (recurrent vs. convolutional vs. recursive). Recurrent neural network:
  - a network that receives its inputs in order and processes them one at a time
  - it skips nothing and processes the inputs in the order they appear
  - the last hidden node (2.5, 3.8) reflects all the preceding context (the, country, of, my) together with the current input (birth)

- 37. Convolutional neural network:
  - like a recurrent network, it reflects all of the inputs without omission
  - but where a recurrent network looks at the inputs one at a time (the, country, ...), a CNN analyzes several words at once (the country, country of, of my, ...), as the figure shows
  - recall from last week that a friend called the filter compresses two or more pieces of information into one; think of it in that sense
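The groups a width-2 convolution filter sees in the example sentence can be listed directly. A recurrent network, by contrast, reads one token at a time. This sketch only shows the sliding windows, not the filter weights. `sliding_windows` is a hypothetical name.

```python
def sliding_windows(tokens, width=2):
    """Consecutive groups of `width` tokens, as a 1-D filter of that width sees them."""
    return [tuple(tokens[i:i + width]) for i in range(len(tokens) - width + 1)]


sentence = ["the", "country", "of", "my", "birth"]
print(sliding_windows(sentence))
# [('the', 'country'), ('country', 'of'), ('of', 'my'), ('my', 'birth')]
```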

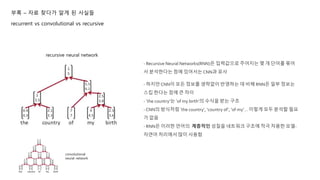

- 38. Recursive neural network:
  - the big difference is that a CNN reflects all of the information without omission, whereas a recursive network skips some of it
  - "the country" is modified by "of my birth"
  - so there is no need to analyze every pair the way a CNN does ("the country", "country of", "of my", ...)
  - a recursive network builds this hierarchical nature of language directly into the network structure, and it is widely used in natural language processing
  - it resembles a CNN in that it groups several input words together and analyzes them

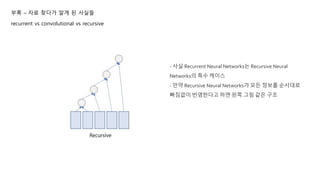

- 39. In fact, recurrent neural networks are a special case of recursive neural networks. If a recursive network reflects all of the information in order, skipping nothing, it takes the structure shown on the left.

- 40. Rotate it by 45 degrees and it is essentially the same as a recurrent network. Whoa, amazing... a round of applause here.
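Slides 38 to 40 can be sketched with one shared "compose" function applied up a parse tree. Encoding a left-branching tree, (((w1 w2) w3) w4)..., turns out to be exactly a recurrent left-to-right scan. Several things here are made up for illustration: the parse tree for "the country of my birth", the 1-D embeddings, and the scalar weights of `compose` (a simplification of $\tanh(W [\text{left}; \text{right}] + b)$). All function names are hypothetical.

```python
import math


def compose(left, right, w_l=0.5, w_r=0.5, b=0.0):
    """Merge two child vectors into a parent vector with weights shared by every node."""
    return [math.tanh(w_l * a + w_r * c + b) for a, c in zip(left, right)]


def encode(tree, embed):
    """Recursively encode a parse tree given as nested 2-tuples of words."""
    if isinstance(tree, str):
        return embed[tree]
    left, right = tree
    return compose(encode(left, embed), encode(right, embed))


def left_chain(words):
    """Build the left-branching tree (((w1 w2) w3) w4)... over words."""
    tree = words[0]
    for w in words[1:]:
        tree = (tree, w)
    return tree


words = ["the", "country", "of", "my", "birth"]
embed = {w: [0.1 * (k + 1)] for k, w in enumerate(words)}  # toy 1-D embeddings
parse = (("the", "country"), ("of", ("my", "birth")))       # hypothetical parse
print(encode(parse, embed))
```

With the left-branching tree, each `compose(previous, next_word)` call plays the role of $h_t = \text{compose}(h_{t-1}, x_t)$. That is the "rotate by 45 degrees" observation expressed in code.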

- 41. Appendix: convolutional + recurrent. A conventional CNN: a photo comes in => what is this? Classification.

- 42. Recognizing sequential data means predicting a continuous sequence of labels rather than assigning a label to a single item. Unlike ordinary data recognition, here we want to recognize sequential image data. So let's combine a CNN with an RNN! => CRNN

- 43. CRNN is reported to perform well at recognizing handwritten words and sentences in context, and at recognizing sheet music.

- 44. The end!

Editor's Notes

- #25: Besides the hidden unit, there is now also a cell. See the multiplication and the addition? They mix the old value $C_{t-1}$ and the current one into $C_t$ in suitable proportions. Mixing the old and the current appropriately => this is what we can call a long-term memory. Who does that? The cell does.

- #27: Besides the hidden unit, there is now also a cell. See the multiplication and the addition? They mix the old value $C_{t-1}$ and the current one into $C_t$ in suitable proportions, which builds a long-term memory: a memory that persists over a long time. Who does that? The cell does.

- #28: The output gate decides how much to expose to the outside; all of these values lie between 0 and 1. Compared with the simple RNN, the LSTM therefore builds a cell in place of the plain hidden unit. The cell holds the long-term memory and combines $t-1$ and $t$ appropriately to produce this output. Whether to remember or not is itself learned from the data, and what gets learned are the gates.

- #29: The input $x(t)$ and the previous memory $h(t-1)$: $W[x,h]+b$ is shorthand for $W_x x + W_h h + b$. $h_t$ => short-term memory; think of it as the result at that particular moment.

- #30: tanh can be seen as producing the new candidate values that may be added to the cell state.

- #38: Last week's example was RGB codes; this time it is the sequence data "the country of my birth"... When looking at the structure, please don't worry too much about the input data.
