Learning how to explain neural networks: PatternNet and PatternAttribution

- 1. Learning how to explain neural networks PatternNet and PatternAttribution PJ Kindermans et al. 2017 ņåÉĻĘ£ļ╣ł Ļ│ĀļĀżļīĆĒĢÖĻĄÉ ņé░ņŚģĻ▓ĮņśüĻ│ĄĒĢÖĻ│╝ Data Science & Business Analytics ņŚ░ĻĄ¼ņŗż

- 2. / 29 ļ¬®ņ░© 1. Ļ│╝Ļ▒░ ļ░®ļ▓ĢļĪĀļōżņØś ņĀäļ░śņĀüņØĖ ļ¼ĖņĀ£ņĀÉ 2. Ļ│╝Ļ▒░ ļ░®ļ▓ĢļĪĀ ļČäņäØ 1) DeConvNet 2) Guided BackProp 3. Linear model ĻĄ¼ņāü 1) Deterministic distractor 2) Additive isotropic Gaussian noise 4. Approaches 5. Quality criterion for signal estimator 6. Learning to estimate signal 1) Existing estimators 2) PatternNet & PatternAttribution 7. Experiments 2

- 3. / 29 0. ņÜöņĢĮ DataļŖö ņżæņÜöĒĢ£ ņØśļ»Ėļź╝ ļŗ┤Ļ│Ā ņ׳ļŖö SignalĻ│╝ ņōĖļ¬©ņŚåļŖö ļČĆļČäņØĖ DistractorļĪ£ ĻĄ¼ņä▒ļÉ£ļŗż. ņŗ£Ļ░üĒÖöļź╝ ĒĢ£ļŗżļ®┤ Signal ļČĆļČäņŚÉ ņżæņĀÉņØä ļæÉņ¢┤ņĢ╝ ĒĢ£ļŗż. ModelņØś weightļŖö DistractorņŚÉ ņśüĒ¢źņØä ļ¦ÄņØ┤ ļ░øĻĖ░ ļĢīļ¼ĖņŚÉŌĆ© ņŗ£Ļ░üĒÖöļź╝ ĒĢĀ ļĢī weightņŚÉļ¦ī ņØśņĪ┤ĒĢśļ®┤ ņóŗņ¦Ć ņĢŖņØĆ Ļ▓░Ļ│╝ļź╝ ļéĖļŗż. output yņÖĆ distractorņØś correlationņ£╝ļĪ£ signalņØś ņ¦łņØä ĒīÉļŗ©, ĒÅēĻ░ĆĒĢĀ ņłś ņ׳ļŗż. ņČ®ļČäĒ׳ ĒĢÖņŖĄļÉ£ ļ¬©ļŹĖņØś { weight, input, output } Ļ░Æņ£╝ļĪ£ linear, non-linearŌĆ© ļæÉ ļ░®ņŗØņ£╝ļĪ£ signalņØä ĻĄ¼ĒĢĀ ņłś ņ׳Ļ│Ā, ņØ┤ ņŗ£ĻĘĖļäÉļĪ£ ņŗ£Ļ░üĒÖöĒĢ£ļŗż. 3

- 4. / 29 1. Ļ│╝Ļ▒░ ļ░®ļ▓ĢļĪĀļōżņØś ļ¼ĖņĀ£ņĀÉ Deep learningņØä ņäżļ¬ģĒĢśĻĖ░ ņ£äĒĢ£ ļŗżņ¢æĒĢ£ ņŗ£ļÅäļōżņØ┤ ņ׳ņ¢┤ņÖöņØī ŌĆóDeConvNet, Guided BackProp, LRP(Layer-wise Relevance Propagation) ņ£ä ļ¬©ļŹĖļōżņØś ņŻ╝ņÜö Ļ░ĆņĀĢņØĆ ņżæņÜöĒĢ£ ņĀĢļ│┤Ļ░Ć ņĢĢņČĢļÉ£ OutputņØä ļÆżļĪ£ Backpropagation ņŗ£Ēéż ļ®┤ inputņŚÉ ņżæņÜöĒĢ£ ņĀĢļ│┤ļōżņØ┤ ņ¢┤ļ¢╗Ļ▓ī ĒĢ©ņČĢļÉśņ¢┤ņ׳ļŖöņ¦Ć ņĢī ņłś ņ׳ļŗżļŖö Ļ▓ā 4

- 5. / 29 1. Ļ│╝Ļ▒░ ļ░®ļ▓ĢļĪĀļōżņØś ļ¼ĖņĀ£ņĀÉ(ContŌĆÖd) ĒĢśņ¦Ćļ¦ī Ļ│╝Ļ▒░ ļ░®ļ▓ĢļĪĀļōżņØ┤ ņČöņČ£ĒĢ£ saliency mapļōżņØ┤ Ļ▓ēņ£╝ļĪĀ ĻĘĖļ¤┤ņŗĖĒĢ┤ļ│┤ņŚ¼ļÅä,ŌĆ© ņØ┤ļĪĀņĀüņ£╝ļĪ£ ņÖäļ▓ĮĒĢśĻ▓ī input dataņØś ņżæņÜöĒĢ£ ļČĆļČäļōżņØä ņČöņČ£ĒĢśņ¦ä ļ¬╗ĒĢ© DataļŖö SignalĻ│╝ Distractor ļæÉ Ļ░Ćņ¦ĆļĪ£ ĻĄ¼ļČäĒĢĀ ņłś ņ׳ļŖöļŹ░ ŌĆóRelevant signal: Dataļź╝ ļéśĒāĆļé┤ļŖö ņżæņÜöĒĢ£ ļČĆļČä, ĒĢĄņŗ¼ ļé┤ņÜ® ŌĆóDistracting component: DataņÖĆ Ļ┤ĆĻ│äņŚåļŖö noise SignalņØ┤ ņĢäļŗłļØ╝ DistractorņŚÉ Ēü¼Ļ▓ī ņóīņ¦ĆņÜ░ņ¦ĆļÉśļŖö Weight vectorļĪ£ ļ¬©ļŹĖņØä ņäżļ¬ģĒĢ© ŌĆóņØ╝ļ░śņĀüņ£╝ļĪ£ Deep learning modelņØ┤ ĒĢśļŖö ņØ╝ņØĆ DataņŚÉņä£ Distractorļź╝ ņĀ£Ļ▒░ĒĢśļŖö Ļ▓ā ŌĆóĻĘĖļלņä£ weight vectorļź╝ filterļØ╝Ļ│Ā ļČĆļźĖļŗż 5 - DistractorņØś directionņŚÉ ļö░ļØ╝ weight vectorņØś directionņØ┤ ļ¦łĻĄ¼ ļ│ĆĒĢśļŖöŌĆ© Ļ▓āņØä ļ│╝ ņłś ņ׳ņØī - Weight vectorņØś ņŚŁĒĢĀņØĆ distractorņØś ņĀ£Ļ▒░ņØ┤ļ»ĆļĪ£ŌĆ© ļŗ╣ņŚ░ĒĢ£ Ēśäņāü

- 6. / 29 2.1 Ļ│╝Ļ▒░ ļ░®ļ▓ĢļĪĀ - DeConvNet by Zeiler & Fergus DeconvNet ņØ┤ņĀäĻ╣īņ¦ĆļŖö ŌĆóinput layerņÖĆ ļ░öļĪ£ ļ¦×ļŗ┐ņĢäņ׳ļŖö ņ▓½ ļ▓łņ¦Ė layerņØś ĒÜ©Ļ│╝ņŚÉ ļīĆĒĢ┤ņä£ļ¦ī ņäżļ¬ģĒĢśĻ▒░ļéś -> ņäżļ¬ģ ļČĆņĪ▒ ŌĆóHessian matrixļź╝ ņØ┤ņÜ®ĒĢ┤ņä£ ļŗżļźĖ layerņØś ĒÜ©Ļ│╝ļÅä ņäżļ¬ģŌĆ© -> layerļź╝ ļäśņ¢┤Ļ░łņłśļĪØ ļ│Ąņ×ĪĒĢ┤ņ¦ĆĻĖ░ ļĢīļ¼ĖņŚÉ quadratic approximationņ£╝ļĪĀ ĒĢ£Ļ│äĻ░Ć ņ׳ņØī ņŻ╝ņÜö ņ╗©ņģē ŌĆóGradient descentļź╝ ĒÖ£ņÜ®ĒĢśņŚ¼ ĒŖ╣ņĀĢ neuronņØä ĒÖ£ņä▒ĒÖöņŗ£ĒéżļŖö input spaceņØś Ēī©Ēä┤ ņ░ŠĻĖ░ ŌĆóĻ░ÖņØĆ ļ¦źļØĮņŚÉņä£ ļ╣äņŖĘĒĢ£ ņŚ░ĻĄ¼ļōż ņĪ┤ņ×¼ ŌĆóSimonyan et al. -> fully connected layerņŚÉņä£ saliency mapņØä ņ░ŠļŖö ņŚ░ĻĄ¼ ŌĆóGirchick et al. -> imageņØś ņ¢┤ļ¢ż patchĻ░Ć filterļź╝ activation ņŗ£ĒéżļŖöņ¦Ć ņ░ŠĻĖ░ 6 (1) Adaptive Deconvolutional Networks for Mid and High Level Feature Learning - Matthew D. Zeiler and Rob Fergus, 2011 (2) Visualizing and Understanding Convolutioinal Networks - Matthew D. Zeiler and Rob Fergus, 2014

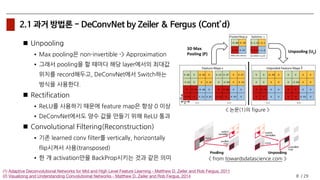

- 7. / 29 2.1 Ļ│╝Ļ▒░ ļ░®ļ▓ĢļĪĀ - DeConvNet by Zeiler & Fergus (ContŌĆÖd) ņé¼ņÜ®ĒĢ£ ļäżĒŖĖņøīĒü¼ ĻĄ¼ņĪ░(AlexNet) 1. Ļ░ü layerļōżņØĆ Convolution layerļĪ£ ĻĄ¼ņä▒ļÉśņ¢┤ ņ׳ņØī 2. Convolution layer ļÆżņŚÉļŖö ĒĢŁņāü ReLU ļČÖņØī 3. (Optionally) Max pooling layer ņé¼ņÜ® DeConvNet ņĀłņ░© 1. imageļź╝ ĻĖ░ņĪ┤ ĒĢÖņŖĄļÉ£ ConvNet model(ņÜ░ņĖĪ)ņŚÉ ĒåĄĻ│╝ 2. ņé┤ĒÄ┤ļ│╝ feature mapņØä ņäĀņĀĢĒĢśĻ│Ā,ŌĆ© ļŗżļźĖ ļ¬©ļōĀ feature mapļōżņØä 0ņ£╝ļĪ£ ļ¦īļōĀ ļŗżņØī,ŌĆ© DeConvNet layer(ņóīņĖĪ)ņŚÉ ĒåĄĻ│╝ (i) Unpool (ii) Rectify (iii)Reconstruction 7 (1) Adaptive Deconvolutional Networks for Mid and High Level Feature Learning - Matthew D. Zeiler and Rob Fergus, 2011 (2) Visualizing and Understanding Convolutioinal Networks - Matthew D. Zeiler and Rob Fergus, 2014

- 8. / 29 2.1 Ļ│╝Ļ▒░ ļ░®ļ▓ĢļĪĀ - DeConvNet by Zeiler & Fergus (ContŌĆÖd) Unpooling ŌĆóMax poolingņØĆ non-invertible -> Approximation ŌĆóĻĘĖļלņä£ poolingņØä ĒĢĀ ļĢīļ¦łļŗż ĒĢ┤ļŗ╣ layerņŚÉņä£ņØś ņĄ£ļīĆĻ░Æ ņ£äņ╣śļź╝ recordĒĢ┤ļæÉĻ│Ā, DeConvNetņŚÉņä£ SwitchĒĢśļŖö ļ░®ņŗØņØä ņé¼ņÜ®ĒĢ£ļŗż. Rectification ŌĆóReLUļź╝ ņé¼ņÜ®ĒĢśĻĖ░ ļĢīļ¼ĖņŚÉ feature mapņØĆ ĒĢŁņāü 0 ņØ┤ņāü ŌĆóDeConvNetņŚÉņä£ļÅä ņ¢æņłś Ļ░ÆņØä ļ¦īļōżĻĖ░ ņ£äĒĢ┤ ReLU ĒåĄĻ│╝ Convolutional Filtering(Reconstruction) ŌĆóĻĖ░ņĪ┤ learned conv filterļź╝ vertically, horizontally flipņŗ£ņ╝£ņä£ ņé¼ņÜ®(transposed) ŌĆóĒĢ£ Ļ░£ activationļ¦īņØä BackPropņŗ£ĒéżļŖö Ļ▓āĻ│╝ Ļ░ÖņØĆ ņØśļ»Ė 8 (1) Adaptive Deconvolutional Networks for Mid and High Level Feature Learning - Matthew D. Zeiler and Rob Fergus, 2011 (2) Visualizing and Understanding Convolutioinal Networks - Matthew D. Zeiler and Rob Fergus, 2014 < ļģ╝ļ¼Ė(1)ņØś figure > < from towardsdatascience.com >

- 9. / 29 2.1 Ļ│╝Ļ▒░ ļ░®ļ▓ĢļĪĀ - DeConvNet by Zeiler & Fergus (ContŌĆÖd) Occlusion Sensitivity test ŌĆóĒŖ╣ņĀĢ ļČĆļČäņØä ĒÜīņāē ņāüņ×ÉļĪ£ Ļ░Ćļ”╝ ĒĢ┤ļŗ╣ ļČĆļČäņØ┤ Ļ░ĆļĀżņĪīņØä ļĢī ŌĆó(b) total activationņØś ļ│ĆĒÖö ŌĆó(d) probability score ļ│ĆĒÖö ŌĆó(e) most probable class ļ│ĆĒÖö (c) projection Ļ▓░Ļ│╝ ŌĆóĻ▓ĆņØĆ ĒģīļæÉļ”¼ļŖö ĒĢ┤ļŗ╣ ņØ┤ļ»Ėņ¦Ć ŌĆóļŗżļźĖ 3Ļ░£ļŖö ļŗżļźĖ ņØ┤ļ»Ėņ¦ĆņŚÉ ļīĆĒĢ£ ņśłņŗ£ ĒĢ┤ņäØ: Convolution filterĻ░ĆŌĆ© ņØ┤ļ»Ėņ¦ĆņØś ĒŖ╣ņĀĢ ļČĆļČä(Ļ░Øņ▓┤)ņØäŌĆ© ņל ņ×ĪņĢäļé┤Ļ│Ā ņ׳ļŗż.ŌĆ© 9

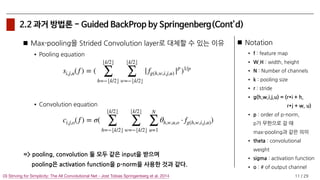

- 10. / 29 2.2 Ļ│╝Ļ▒░ ļ░®ļ▓ĢļĪĀ - Guided BackProp by Springenberg ĒĢ┤ļŗ╣ ļģ╝ļ¼ĖņØś ļ¬®ņĀü ŌĆóņāłļĪ£ņÜ┤ ļ¬©ļŹĖņØ┤ Ļ░£ļ░£ļÉĀ ļĢī ņé¼ņŗżņāü ļ▓ĀņØ┤ņŖżļŖö ļÅÖņØ╝ĒĢ£ļŹ░ ņ×ÉĻŠĖ ļ│Ąņ×ĪĒĢ┤ņĀĖĻ░ĆĻ│Ā ņ׳ļŗż. ŌĆóļäżĒŖĖņøīĒü¼ļź╝ ĻĄ¼ņä▒ĒĢśļŖö ņÜöņåī ņżæ ņĄ£Ļ│ĀņØś ņä▒ļŖźņØä ļé┤ļŖöļŹ░ ņ׳ņ¢┤ Ļ░Ćņן ņżæņÜöĒĢ£ ļČĆļČäņØ┤ ļ¼┤ņŚćņØ╝ņ¦Ć ņ░ŠņĢäļ│┤ņ×É ŌĆóļ¬©ļŹĖ ĒÅēĻ░Ć ļ░®ļ▓ĢņØ┤ ŌĆ£deconvolution approachŌĆØ(Guided BackProp) ļģ╝ļ¼ĖņØś Ļ▓░ļĪĀ ŌĆóConvolution layerļ¦īņ£╝ļĪ£ ļ¬©ļōĀ ļäżĒŖĖņøīĒü¼ļź╝ ĻĄ¼ņä▒ ŌĆómax-pooling layerļź╝ ņō░ņ¦Ć ļ¦ÉĻ│Ā -> Strided convolution layer ņé¼ņÜ®(stride 2, 3x3 filter) 10(3) Striving for Simplicity: The All Convolutional Net - Jost Tobias Springenberg et al. 2014

- 11. / 29 2.2 Ļ│╝Ļ▒░ ļ░®ļ▓ĢļĪĀ - Guided BackProp by Springenberg(ContŌĆÖd) Max-poolingņØä Strided Convolution layerļĪ£ ļīĆņ▓┤ĒĢĀ ņłś ņ׳ļŖö ņØ┤ņ£Ā ŌĆóPooling equation ŌĆóConvolution equation => pooling, convolution ļæś ļ¬©ļæÉ Ļ░ÖņØĆ inputņØä ļ░øņ£╝ļ®░ŌĆ© poolingņØĆ activation functionņØä p-normņØä ņé¼ņÜ®ĒĢ£ Ļ▓āĻ│╝ Ļ░Öļŗż. 11(3) Striving for Simplicity: The All Convolutional Net - Jost Tobias Springenberg et al. 2014 si,j,u(f ) = ( ŌīŖk/2Ōīŗ Ōłæ h=ŌłÆŌīŖk/2Ōīŗ ŌīŖk/2Ōīŗ Ōłæ w=ŌłÆŌīŖk/2Ōīŗ | fg(h,w,i,j,u) |p )1/p Notation ŌĆó f : feature map ŌĆó W,H : width, height ŌĆó N : Number of channels ŌĆó k : pooling size ŌĆó r : stride ŌĆó g(h,w,i,j,u) = (r*i + h,ŌĆ© r*j + w, u) ŌĆó p : order of p-norm,ŌĆ© pĻ░Ć ļ¼┤ĒĢ£ņ£╝ļĪ£ Ļ░ł ļĢīŌĆ© max-poolingĻ│╝ Ļ░ÖņØĆ ņØśļ»Ė ŌĆó theta : convolutional weight ŌĆó sigma : activation function ŌĆó o : # of output channel ci,j,o(f ) = Žā( ŌīŖk/2Ōīŗ Ōłæ h=ŌłÆŌīŖk/2Ōīŗ ŌīŖk/2Ōīŗ Ōłæ w=ŌłÆŌīŖk/2Ōīŗ N Ōłæ u=1 ╬Ėh,w,u,o Ōŗģ fg(h,w,i,j,u))

- 12. / 29 2.2 Ļ│╝Ļ▒░ ļ░®ļ▓ĢļĪĀ - Guided BackProp by Springenberg(ContŌĆÖd) ņŗżĒŚśņŚÉņä£ ļ╣äĻĄÉĒĢ£ ļäżĒŖĖņøīĒü¼ ņóģļźś ŌĆóA, B, C : Convolutional filter sizeĻ░Ć ļŗżļ”ä ŌĆóCņŚÉņä£ 3Ļ░Ćņ¦Ć ĒśĢĒā£ļĪ£ ņóĆ ļŹö ļŗżņ¢æĒÖö: ĒŖ╣Ē׳ ņÜ░ņĖĪ ļ╣©Ļ░ä Ēæ£ņŗ£ļÉ£ ļæÉ ļ¬©ļŹĖ ļ╣äĻĄÉ 12(3) Striving for Simplicity: The All Convolutional Net - Jost Tobias Springenberg et al. 2014 Ļ░Ćņן ņÜ░ņłś

- 13. / 29 2.2 Ļ│╝Ļ▒░ ļ░®ļ▓ĢļĪĀ - Guided BackProp by Springenberg(ContŌĆÖd) All-CNN-C ļ¬©ļŹĖņØä Deconvolution ļ░®ņŗØņ£╝ļĪ£ ĒÅēĻ░Ć ŌĆóņ¦üņĀäņŚÉ ņåīĻ░£ĒĢ£ Zeiler & Fergus(2014)ņØś Deconvolution ļ░®ļ▓ĢņØĆŌĆ© ĒĢ┤ļŗ╣ ļ¬©ļŹĖņŚÉ Max-Pooling layerĻ░Ć ņŚåņ¢┤ņä£ ņä▒ļŖźņØ┤ ņóŗņ¦Ć ņĢŖĻ▓ī ļéśņś┤ Guided BackProp ŌĆóConvolution layerļ¦ī ņé¼ņÜ®ĒĢśĻĖ░ ļĢīļ¼ĖņŚÉ switch ĒĢäņÜöņŚåņØī ŌĆóActivation Ļ░ÆņØ┤ 0 ņØ┤ņāüņØ┤Ļ│Ā, GradientļÅä 0 ņØ┤ņāüņØĖ Ļ▓āļ¦ī ņĀäĒīī a) Zeiler&Fergus(2014) 13

- 14. / 29 2.3 Ļ│╝Ļ▒░ ļ░®ļ▓ĢļĪĀ - Weight vectorņŚÉļ¦ī ņ¦æņżæ DeConvNet, Guided BackProp ļ░®ļ▓Ģ ļ¬©ļæÉŌĆ© Weight(Conv filter) ņŚ░ņé░ Ļ▓░Ļ│╝ņŚÉ Ļ┤Ćņŗ¼ WeightĻ░Ć DataņØś SignalņØä ļö░ļØ╝Ļ░Ćņ¦Ć ņĢŖņ£╝ļ»ĆļĪ£ ņØ┤ļ¤¼ĒĢ£ ļ░®ļ▓ĢļōżņØĆŌĆ© DataņØś ņżæņÜöĒĢ£ ļČĆļČäņØä ņ×ĪņĢäļé┤ņ¦Ć ļ¬╗ĒĢĀ ņłś ņ׳ņØī 14

- 15. / 29 3.1 ļŗ©ņł£ĒĢ£ Linear Model ĻĄ¼ņāü - Deterministic distractor ļŗ©ņł£ĒĢ£ Linear modelņØä ĒåĄĒĢ┤ signalĻ│╝ distractorņØś ņøĆņ¦üņ×ä Ļ┤Ćņ░░ 15 Notation ŌĆó w : filter or weight ŌĆó x : data ŌĆó y : condensed output ŌĆó s : relevant signal ŌĆó d : distracting component. ņøÉĒĢśļŖö outputņŚÉ ļīĆĒĢ┤ ņĢäļ¼┤ļ¤░ ņĀĢļ│┤ļÅä Ļ░Ćņ¦ĆĻ│Ā ņ׳ņ¦Ć ņĢŖņØĆ ļČĆļČä ŌĆó a_s : direction of signal.ŌĆ© ņøÉĒĢśļŖö outputņØś ĒŹ╝ņ¦ä ļ¬©ņ¢æ ŌĆó a_d : direction of distractor s = asyx = s + d d = adŽĄ as = (1,0)T ad = (1,1)T y Ōłł [ŌłÆ1,1] ŽĄ Ōł╝ ØÆ®(╬╝, Žā2 ) ŌĆóData x ļŖö signal sņÖĆ distractor dņØś ĒĢ® ŌĆóņ£ä ņłśņŗØņŚÉņä£ ņØä ļ¦īņĪ▒ĒĢśĻĖ░ ņ£äĒĢ┤ņäĀ ŌĆó ņØ┤Ļ│Ā, ņØ┤ņ¢┤ņĢ╝ ĒĢśĻĖ░ ļĢīļ¼Ė wT x = y w = [1, ŌłÆ 1]T wT asy = y wT adŽĄ = 0

- 16. / 29 3.1 ļŗ©ņł£ĒĢ£ Linear Model ĻĄ¼ņāü - Deterministic distractor 16 , ļæÉ ņŗØ ļ¬©ļæÉļź╝ ņČ®ņĪ▒ņŗ£ņ╝£ņĢ╝ ĒĢ© ŌĆóweightļŖö distractorļź╝ ņĀ£Ļ▒░ĒĢ┤ņĢ╝ĒĢśĻĖ░ ļĢīļ¼ĖņŚÉ distractorņØś directionĻ│╝ orthogonal ĒĢśļĀżĻ│Ā ĒĢ© ŌĆóņ”ē wļŖö signalņØś directionĻ│╝ alignĒĢśņ¦Ć ņĢŖļŖöļŗż. ŌĆóweightļŖö distractorņÖĆ orthogonal ĒĢ©ņØä ņ£Āņ¦ĆĒĢśļ®┤ņä£,ŌĆ© Ēü¼ĻĖ░ ņĪ░ņĀĢņØä ĒåĄĒĢ┤ ņØä ņ£Āņ¦ĆĒĢśļĀż ĒĢ£ļŗż. Weight vectorļŖö distractorņŚÉ ņØśĒĢ┤ Ēü¼Ļ▓ī ņóīņ¦ĆņÜ░ņ¦Ć ļÉ© weight vectorļ¦īņ£╝ļĪ£ ņ¢┤ļ¢ż input patternņØ┤ outputņŚÉ ņśüĒ¢źņØä ļü╝ņ╣śļŖöņ¦Ć ņĢī ņłś ņŚåņØī wT asy = y wT adŽĄ = 0 - signal directionņØĆ ĻĘĖļīĆļĪ£ ņ£Āņ¦Ć - distractor directionņØ┤ ļ░öļĆīļŗłŌĆ© weight direction ņŚŁņŗ£ ļ░öļĆ£ wT as = 1

- 17. / 29 3.2 ļŗ©ņł£ĒĢ£ Linear Model ĻĄ¼ņāü - No distractor, Additive isotropic Gaussian noise 17 Isotropic Gaussian noiseļź╝ ņäĀĒāØĒĢ£ ņØ┤ņ£Ā ŌĆózero mean: noiseņØś meanņØĆ biasļź╝ ĒåĄĒĢ┤ ņ¢╝ļ¦ł ļōĀņ¦Ć ņāüņćäļÉĀ ņłś ņ׳ņ£╝ļ»ĆļĪ£ ļŗ©ņł£Ē׳ 0ņ£╝ļĪ£ ņĀĢĒĢ£ļŗż. ŌĆócorrelationņØ┤ļéś structureĻ░Ć ņŚåĻĖ░ ļĢīļ¼ĖņŚÉ weight vectorļź╝ ņל ĒĢÖņŖĄĒĢ£ļŗżĻ│Ā ĒĢ┤ņä£ŌĆ© noiseĻ░Ć ņĀ£Ļ▒░ļÉśņ¦Ć ņĢŖļŖöļŗż. ŌĆóGaussian noiseļź╝ ņČöĻ░ĆĒĢśļŖö Ļ▓āņØĆŌĆ© L2 regularizationĻ│╝ Ļ░ÖņØĆ ĒÜ©Ļ│╝ļź╝ ļéĖļŗż.ŌĆ© ņ”ē weightļź╝ shirink ņŗ£Ēé©ļŗż. ņ£ä ņĪ░Ļ▒┤ ļĢīļ¼ĖņŚÉ ņØä ļ¦īņĪ▒ĒĢśļŖöŌĆ© Ļ░Ćņן ņ×æņØĆ weight vectorļŖöŌĆ© ņÖĆ Ļ░ÖņØĆ ļ░®Ē¢źņØś vector < Gaussian pattern > yn = ╬▓xn + ŽĄ N ŌłÅ n=1 ØÆ®(yn |╬▓xn, Žā2 ) N ŌłÅ n=1 ØÆ®(yn |╬▓xn, Žā2 )ØÆ®(╬▓|0,╬╗ŌłÆ1 ) N Ōłæ n=1 ŌłÆ 1 Žā2 (yn ŌłÆ ╬▓xn)2 ŌłÆ ╬╗╬▓2 + const < Gaussian noise & L2 regularization > ŌĆöŌĆö> likelihood, Ō¼ć Logarithm wT as = 1 as as wŌĆ▓ wŌĆ▓ŌĆ▓ w 1

- 18. / 29 4. Approaches 18 Functions ŌĆódata xņŚÉņä£ output yļź╝ ļĮæņĢäļé╝ ļĢī ņō░ļŖö ļ░®ļ▓Ģ. ex) gradients, saliency map ŌĆóyļź╝ xļĪ£ ļ»ĖļČäĒĢ┤ņä£ inputņØś ļ│ĆĒÖöĻ░Ć ņ¢┤ļ¢╗Ļ▓ī outputņØä ļ│ĆĒĢśĻ▓īĒĢśļŖöņ¦Ć ņé┤ĒÄ┤ļ│Ėļŗż. ŌĆóĒĢ┤ļŗ╣ modelņØś gradientļź╝ ņō░ļŖö Ļ▓āņØ┤Ļ│Ā Ļ▓░ĻĄŁ ņØ┤ gradientļŖö weightļŗż. Signal ŌĆóSignal: ļ¬©ļŹĖņØś neuronņØä activate ņŗ£ĒéżļŖö ļŹ░ņØ┤Ēä░ņØś ĻĄ¼ņä▒ ņÜöņåī ŌĆóOutputņŚÉņä£ input spaceĻ╣īņ¦Ć gradientļź╝ backpropņŗ£ņ╝£ņä£ ļ│ĆĒÖö Ļ┤Ćņ░░ ŌĆóDeConvNet, Guded BackPropņØĆ ņĀäĒīīļÉ£ Ļ▓āņØ┤ signalņØ┤ļØ╝ ļ│┤ņן ļ¬╗ĒĢ£ļŗż. Attribution ŌĆóĒŖ╣ņĀĢ SignalņØ┤ ņ¢╝ļ¦łļéś outputņŚÉ ĻĖ░ņŚ¼ĒĢśļŖöņ¦Ć ļéśĒāĆļé┤ļŖö ņ¦ĆĒæ£ ŌĆóLinear modelņŚÉņäĀ signalĻ│╝ weight vectorņØś element-wise Ļ│▒ņ£╝ļĪ£ ļéśĒāĆļé┤ņ¢┤ņ¦É ŌĆóDeep taylor decomposition ļģ╝ļ¼ĖņŚÉņäĀ activation Ļ░ÆņØä inputņŚÉ ļīĆĒĢ£ŌĆ© contributionņ£╝ļĪ£ ļČäĒĢ┤ĒĢśĻ│Ā, LRPņŚÉņäĀ relevanceļØ╝ ņ╣ŁĒĢ£ļŗż. y = wT x Ōłéy/Ōłéx = w PatternNet PatternAttribution

- 19. / 29 5. Quality criterion for signal estimator 19 ņØ┤ņĀä ņłśņŗØņŚÉņä£ ņ£ĀļÅä wT x = y wT s + wT d = y (x = s + d)wT (s + d) = y wT s = y (wT d = 0) (wT )ŌłÆ1 wT s = (wT )ŌłÆ1 y ╠és = uuŌłÆ1 (wT )ŌłÆ1 y ╠és = u(wT u)ŌłÆ1 y u = random vector (wT u ŌēĀ 0) Quality measure Žü S(x) = ╠és Žü(S) = 1 ŌłÆ maxvcorr(wT x, vT (x ŌłÆ S(x))) ╠éd = x ŌłÆ S(x) y = wT x, , = 1 ŌłÆ maxv vT cov[y, ╠éd] Žā2 vT ╠éd Žā2 y ŌĆóņóŗņØĆ signal estimatorļŖö correlationņØä 0ņ£╝ļĪ£ -> Ēü░ ŌĆówļŖö ņØ┤ļ»Ė ņל ĒĢÖņŖĄļÉ£ ļ¬©ļŹĖņØś weightļØ╝ Ļ░ĆņĀĢ ŌĆócorrelationņØĆ scaleņŚÉ invariant ĒĢśĻĖ░ ļĢīļ¼ĖņŚÉŌĆ© ņØś ļČäņé░ņØĆ ņØś ļČäņé░Ļ│╝ Ļ░ÖņØä Ļ▓āņØ┤ļ×Ć ņĀ£ņĢĮņĪ░Ļ▒┤ ņČöĻ░Ć ŌĆóS(x)ļź╝ Ļ│ĀņĀĢņŗ£ĒéżĻ│Ā optimal ļź╝ ņ░ŠļŖöļŹ░ŌĆ© ĒĢÖņŖĄ ļ░®ņŗØņØĆ dņÖĆ yņŚÉ ļīĆĒĢ£ Least-squares regression Žü vT ╠éd y v illposed problem. ņØ┤ļīĆļĪĀ ĒÆĆļ”¼ņ¦Ć ņĢŖļŖöļŗż. ļŗżļźĖ ļ░®ņŗØņØ┤ ĒĢäņÜö

- 20. / 29 6.1 ĻĖ░ņĪ┤ Signal estimator ļ░®ņŗØ 20 The identity estimator ŌĆódataņŚÉ distractor ņŚåņØ┤, signalļ¦ī ņĪ┤ņ×¼ĒĢ£ļŗż Ļ░ĆņĀĢ ŌĆódataĻ░Ć ņØ┤ļ»Ėņ¦ĆņØ╝ ļĢī signalņØĆ ņØ┤ļ»Ėņ¦Ć ĻĘĖļīĆļĪ£ņØ┤ļŗż. ŌĆóļŗ©ņł£ĒĢ£ linear modelņØ╝ ļĢī attribution ĻĄ¼ĒĢśļŖö ņłśņŗØ.ŌĆ© (distractorĻ░Ć ņĪ┤ņ×¼ĒĢśļŹöļØ╝ļÅä, attributionņŚÉ ĒżĒĢ©) ŌĆóņŗżņĀ£ ļŹ░ņØ┤Ēä░ņŚÉ distractorĻ░Ć ņŚåņØä ņłś ņŚåĻ│Ā,ŌĆ© forward passņŚÉņäĀ ņĀ£Ļ▒░ļÉśņ¦Ćļ¦īŌĆ© backward passņŚÉņäĀ element wise Ļ│▒ņŚÉ ņØśĒĢ┤ ņ£Āņ¦Ć ŌĆóņŗ£Ļ░üĒÖöņŚÉ noiseĻ░Ć ļ¦ÄņØ┤ ļ│┤ņØĖļŗż(LRP) Sx(x) = x r = w ŌŖÖ x = w ŌŖÖ s + w ŌŖÖ d The filter based estimator ŌĆóĻ┤ĆņĖĪļÉ£ signalņØĆ weightņØś directionņŚÉ ņåŹĒĢ©ņØä Ļ░ĆņĀĢŌĆ© ex) DeConvNet, Guided BackProp ŌĆóweightļŖö normalize ļÉśņ¢┤ņĢ╝ ĒĢ© ŌĆólinear modelņØ╝ ļĢī attribution Ļ│ĄņŗØņØĆ ļŗżņØīĻ│╝ Ļ░ÖĻ│ĀŌĆ© signalņØä ņŹ® ņĀ£ļīĆļĪ£ ņ×¼ĻĄ¼ņä▒ĒĢśņ¦Ć ļ¬╗ĒĢ© Sw(x) = w wTw wT x r = w ŌŖÖ w wTw y

- 21. / 29 6.2 PatternNet & PatternAttribution 21 ĒĢÖņŖĄ ļ░®ņŗØ ļ░Å Ļ░ĆņĀĢ ŌĆó criterionņØä ņĄ£ņĀüĒÖöĒĢśļŖö ĒĢÖņŖĄļ░®ņŗØ ŌĆóļ¬©ļōĀ Ļ░ĆļŖźĒĢ£ ļ▓ĪĒä░ ņŚÉņä£ yņÖĆ dņØś correlationņØ┤ 0ņØ╝ ļĢīŌĆ© signal estimator SĻ░Ć optimalņØ┤ļØ╝ ĒĢĀ ņłś ņ׳ļŗż. ŌĆóLinear modelņØ╝ ļĢī yņÖĆ dņØś covarianceļŖö 0ņØ┤ļŗż cov[y, x] ŌłÆ cov[y, S(x)] = 0 Žü v cov[y, x] = cov[y, S(x)] cov[y, ╠éd] = 0 Žü(S) = 1 ŌłÆ maxvcorr(wT x, vT (x ŌłÆ S(x))) = 1 ŌłÆ maxv vT cov[y, ╠éd] Žā2 vT ╠éd Žā2 y Quality measure

- 22. / 29 6.2 PatternNet & PatternAttribution 22 The linear estimator ŌĆólinear neuronņØĆ data xņŚÉņä£ linearĒĢ£ signalļ¦ī ņČöņČ£ Ļ░ĆļŖź ŌĆóņ£ä ņŗØņŚÉņä£ņ▓śļ¤╝ yņŚÉļŗż linear ņŚ░ņé░ņØä Ē¢łņØä ļĢī signal ļéśņś┤ ŌĆólinear modelņØ╝ ļĢī y, dņØś covarianceļŖö 0ņØ┤ļ»ĆļĪ£ Sa(x) = awT x = ay cov[x, y] = cov[S(x), y] = cov[awT x, y] = a Ōŗģ cov[y, y] ŌćÆ a = cov[x, y] Žā2 y ŌĆóļ¦īņĢĮ dņÖĆ sĻ░Ć orthogonal ĒĢśļŗżļ®┤ DeConvNetĻ│╝ Ļ░ÖņØĆ filter-based ļ░®ņŗØ Ļ│╝ ņØ╝ņ╣śĒĢ£ļŗż. ŌĆóConvolution layerņŚÉņä£ ļ¦żņÜ░ ņל ļÅÖņ×æ ŌĆóFC layerņŚÉ ReLUĻ░Ć ņŚ░Ļ▓░ļÉśņ¢┤ņ׳ļŖö ļČĆļČä ņŚÉņäĀ correlationņØä ņÖäņĀäĒ׳ ņĀ£Ļ▒░ĒĢĀ ņłś ņŚå ņ£╝ļ»ĆļĪ£ ņĢäļל Ēæ£ņ▓śļ¤╝ criterion ņłśņ╣ś ļé«ņØī VGG16ņŚÉņä£ criterion ļ╣äĻĄÉ ļ¦ēļīĆĻĘĖļלĒöä ņł£ņä£ļīĆļĪ£ random, S_w,ŌĆ© S_a, S_a+-

- 23. / 29 6.2 PatternNet & PatternAttribution 23 The two-component(Non-linear) estimator ŌĆóņĢ×ņäĀ linear estimatorņÖĆ Ļ░ÖņØĆ trickņØä ņō░ņ¦Ćļ¦īŌĆ© y Ļ░ÆņØś ļČĆĒśĖņŚÉ ļö░ļØ╝ Ļ░üĻ░ü ļŗżļź┤Ļ▓ī ņ▓śļ”¼ĒĢ£ļŗż. ŌĆóļē┤ļ¤░ņØ┤ ĒÖ£ņä▒ĒÖöļÉśņ¢┤ņ׳ļŖöņ¦ĆņØś ņŚ¼ļČĆņŚÉ ļīĆĒĢ£ ņĀĢļ│┤ļŖöŌĆ© distractorņŚÉļÅä ņĪ┤ņ×¼ yĻ░Ć ņØīņłśņØĖ ļČĆļČäļÅä Ļ│äņé░ ĒĢäņÜö ŌĆóReLU ļĢīļ¼ĖņŚÉ ņśżņ¦ü positive domainļ¦īŌĆ© locally ņŚģļŹ░ņØ┤ĒŖĖ ļÉśĻĖ░ ļĢīļ¼ĖņŚÉ ņØ┤ļź╝ ļ│┤ņĀĢ ŌĆócovariance Ļ│ĄņŗØņØĆ ļŗżņØīĻ│╝ Ļ░ÖĻ│Ā ŌĆóļČĆĒśĖņŚÉ ļö░ļØ╝ ļö░ļĪ£ Ļ│äņé░, Ļ░Ćņżæņ╣śļĪ£ ĒĢ®ĒĢ£ļŗż. Sa+ŌłÆ(x) = { a+wŌŖ║ x if╠²wŌŖ║ x > 0 aŌłÆwŌŖ║ x otherwise x = { s+ + d+ if╠²y > 0 sŌłÆ + dŌłÆ otherwise cov(x, y) = Øö╝[xy] ŌłÆ Øö╝[x]Øö╝[y] cov(x, y) = ŽĆ+(Øö╝+[xy] ŌłÆ Øö╝+[x]Øö╝[y]) +(1 ŌłÆ ŽĆ+)(Øö╝ŌłÆ[xy] ŌłÆ Øö╝ŌłÆ[x]Øö╝[y]) cov(s, y) = ŽĆ+(Øö╝+[sy] ŌłÆ Øö╝+[s]Øö╝[y]) +(1 ŌłÆ ŽĆ+)(Øö╝ŌłÆ[sy] ŌłÆ Øö╝ŌłÆ[s]Øö╝[y]) ŌĆócov(x,y), cov(s,y)Ļ░Ć ņØ╝ņ╣śĒĢĀ ļĢī, ņ¢æņØś ļČĆĒśĖņŚÉ ļīĆĒĢ┤ ĒÆĆļ®┤ a+ = Øö╝+[xy] ŌłÆ Øö╝+[x]Øö╝[y] wŌŖ║ Øö╝+[xy] ŌłÆ wŌŖ║ Øö╝+[x]Øö╝[y]

- 24. / 29 6.2 PatternNet & PatternAttribution 24 PatternNet and PatternAttribution

- 25. / 29 6.2 PatternNet & PatternAttribution 25 PatternNet and PatternAttribution ŌĆóPatternNet, Linear ŌĆócov[x,y], cov[s,y]Ļ░Ć Ļ░ÖņĢäņä£ ŌĆóaļź╝ Ļ│äņé░ĒĢĀ ļĢī x, yļĪ£ļ¦ī Ļ│äņé░ Ļ░ĆļŖź ŌĆóPatternNet, Non-linear ŌĆóReLU activation ĒŖ╣ņä▒ Ļ│ĀļĀż ŌĆóņ¢æ/ņØī ļéśļłĀņä£ a Ļ░Æ Ļ│äņé░ ŌĆóNon-linear ļ¬©ļŹĖņŚÉņä£ ņä▒ļŖź ņóŗņØī ŌĆóPatternAttribution ŌĆóa Ļ░ÆņŚÉ wļź╝ element-wise Ļ│▒ĒĢ£ Ļ▓░Ļ│╝ļĪ£ ŌĆóĒĢ┤ņäØĒĢśĻĖ░ ņē¼ņÜ┤ ņóĆ ļŹö Ļ╣öļüöĒĢ£ŌĆ© heat mapņØä ņāØņä▒ĒĢĀ ņłś ņ׳ļŗż. r = w ŌŖÖ a+ a = cov[x, y] Žā2 y a+ = Øö╝+[xy] ŌłÆ Øö╝+[x]Øö╝[y] wŌŖ║ Øö╝+[xy] ŌłÆ wŌŖ║ Øö╝+[x]Øö╝[y]

- 26. / 29 7. Experiments 26 Ļ░Æ ļ╣äĻĄÉ, VGG16 on ImageNetŽü(S) ŌĆóConvolution layer ŌĆóļ│Ė ļģ╝ļ¼ĖņŚÉņä£ ņĀ£ņŗ£ĒĢ£ linear estimatorĻ░Ć ļŗ©ņł£ĒĢśļ®┤ņä£ļÅä ņóŗņØĆ ņä▒ļŖźņØä ļ│┤ņØ┤Ļ│Ā ņ׳ņØī ŌĆóļ│Ė ļģ╝ļ¼ĖņØś non-linear estimator ņŚŁņŗ£ ļŗżļźĖ filter-based, random ļ│┤ļŗż ņøöļō▒ĒĢ£ ņä▒ļŖź ŌĆóFC layer with ReLU ŌĆóļ│Ė ļģ╝ļ¼ĖņØś linear estimator ņä▒ļŖź ĻĖēĻ▓®Ē׳ ļ¢©ņ¢┤ņ¦É ŌĆónon-linear estimator ņä▒ļŖź ņ£Āņ¦Ć

- 27. / 29 7. Experiments 27 Qualitative evaluation ŌĆóĒŖ╣ņĀĢ ņØ┤ļ»Ėņ¦Ć ĒĢ£ ņןņŚÉ ļīĆĒĢ┤ ļ╣äĻĄÉ ĒĢ┤ņäØ ŌĆóMethods ĻĄ¼ļČä ŌĆóSx : Identity estimator ŌĆóSw : DeConvNet, Guided BackProp ŌĆóSa : Linear ŌĆóSa+- : Non-linear ŌĆóņÜ░ņĖĪņ£╝ļĪ£ Ļ░ł ņłśļĪØ ņóĆ ļŹö structureļź╝ŌĆ© ņ×ÉņäĖĒĢśĻ│Ā ņäĖļ░ĆĒĢśĻ▓ī ņ×ĪņĢäļéĖļŗż.

- 29. / 2929 Ļ░Éņé¼ĒĢ®ļŗłļŗż. PatternNet & PatternAttribution

![/ 29

3.1 ļŗ©ņł£ĒĢ£ Linear Model ĻĄ¼ņāü - Deterministic distractor

ļŗ©ņł£ĒĢ£ Linear modelņØä ĒåĄĒĢ┤ signalĻ│╝ distractorņØś ņøĆņ¦üņ×ä Ļ┤Ćņ░░

15

Notation

ŌĆó w : filter or weight

ŌĆó x : data

ŌĆó y : condensed output

ŌĆó s : relevant signal

ŌĆó d : distracting component.

ņøÉĒĢśļŖö outputņŚÉ ļīĆĒĢ┤ ņĢäļ¼┤ļ¤░

ņĀĢļ│┤ļÅä Ļ░Ćņ¦ĆĻ│Ā ņ׳ņ¦Ć ņĢŖņØĆ ļČĆļČä

ŌĆó a_s : direction of signal.ŌĆ©

ņøÉĒĢśļŖö outputņØś ĒŹ╝ņ¦ä ļ¬©ņ¢æ

ŌĆó a_d : direction of distractor

s = asyx = s + d

d = adŽĄ

as = (1,0)T

ad = (1,1)T

y Ōłł [ŌłÆ1,1]

ŽĄ Ōł╝ ØÆ®(╬╝, Žā2

)

ŌĆóData x ļŖö signal sņÖĆ distractor dņØś ĒĢ®

ŌĆóņ£ä ņłśņŗØņŚÉņä£ ņØä ļ¦īņĪ▒ĒĢśĻĖ░ ņ£äĒĢ┤ņäĀ

ŌĆó ņØ┤Ļ│Ā, ņØ┤ņ¢┤ņĢ╝ ĒĢśĻĖ░ ļĢīļ¼Ė

wT

x = y w = [1, ŌłÆ 1]T

wT

asy = y wT

adŽĄ = 0](https://image.slidesharecdn.com/patternnetgyubinson-190517155324/85/Learning-how-to-explain-neural-networks-PatternNet-and-PatternAttribution-15-320.jpg)

![/ 29

5. Quality criterion for signal estimator

19

ņØ┤ņĀä ņłśņŗØņŚÉņä£ ņ£ĀļÅä

wT

x = y

wT

s + wT

d = y

(x = s + d)wT

(s + d) = y

wT

s = y (wT

d = 0)

(wT

)ŌłÆ1

wT

s = (wT

)ŌłÆ1

y

╠és = uuŌłÆ1

(wT

)ŌłÆ1

y

̂s = u(wT

u)ŌłÆ1

y

u = random vector

(wT

u ŌēĀ 0)

Quality measure Žü

S(x) = ̂s

Žü(S) = 1 ŌłÆ maxvcorr(wT

x, vT

(x ŌłÆ S(x)))

╠éd = x ŌłÆ S(x) y = wT

x, ,

= 1 ŌłÆ maxv

vT

cov[y, ̂d]

Žā2

vT ̂d

Žā2

y

ŌĆóņóŗņØĆ signal estimatorļŖö correlationņØä 0ņ£╝ļĪ£ -> Ēü░

ŌĆówļŖö ņØ┤ļ»Ė ņל ĒĢÖņŖĄļÉ£ ļ¬©ļŹĖņØś weightļØ╝ Ļ░ĆņĀĢ

ŌĆócorrelationņØĆ scaleņŚÉ invariant ĒĢśĻĖ░ ļĢīļ¼ĖņŚÉŌĆ©

ņØś ļČäņé░ņØĆ ņØś ļČäņé░Ļ│╝ Ļ░ÖņØä Ļ▓āņØ┤ļ×Ć ņĀ£ņĢĮņĪ░Ļ▒┤ ņČöĻ░Ć

ŌĆóS(x)ļź╝ Ļ│ĀņĀĢņŗ£ĒéżĻ│Ā optimal ļź╝ ņ░ŠļŖöļŹ░ŌĆ©

ĒĢÖņŖĄ ļ░®ņŗØņØĆ dņÖĆ yņŚÉ ļīĆĒĢ£ Least-squares regression

Žü

vT ̂d y

v

illposed problem.

ņØ┤ļīĆļĪĀ ĒÆĆļ”¼ņ¦Ć ņĢŖļŖöļŗż.

ļŗżļźĖ ļ░®ņŗØņØ┤ ĒĢäņÜö](https://image.slidesharecdn.com/patternnetgyubinson-190517155324/85/Learning-how-to-explain-neural-networks-PatternNet-and-PatternAttribution-19-320.jpg)

![/ 29

6.2 PatternNet & PatternAttribution

21

ĒĢÖņŖĄ ļ░®ņŗØ ļ░Å Ļ░ĆņĀĢ

ŌĆó criterionņØä ņĄ£ņĀüĒÖöĒĢśļŖö ĒĢÖņŖĄļ░®ņŗØ

ŌĆóļ¬©ļōĀ Ļ░ĆļŖźĒĢ£ ļ▓ĪĒä░ ņŚÉņä£ yņÖĆ dņØś correlationņØ┤ 0ņØ╝ ļĢīŌĆ©

signal estimator SĻ░Ć optimalņØ┤ļØ╝ ĒĢĀ ņłś ņ׳ļŗż.

ŌĆóLinear modelņØ╝ ļĢī yņÖĆ dņØś covarianceļŖö 0ņØ┤ļŗż

cov[y, x] ŌłÆ cov[y, S(x)] = 0

Žü

v

cov[y, x] = cov[y, S(x)]

cov[y, ̂d] = 0

Žü(S) = 1 ŌłÆ maxvcorr(wT

x, vT

(x ŌłÆ S(x)))

= 1 ŌłÆ maxv

vT

cov[y, ̂d]

Žā2

vT ̂d

Žā2

y

Quality measure](https://image.slidesharecdn.com/patternnetgyubinson-190517155324/85/Learning-how-to-explain-neural-networks-PatternNet-and-PatternAttribution-21-320.jpg)

![/ 29

6.2 PatternNet & PatternAttribution

22

The linear estimator

ŌĆólinear neuronņØĆ data xņŚÉņä£ linearĒĢ£ signalļ¦ī ņČöņČ£ Ļ░ĆļŖź

ŌĆóņ£ä ņŗØņŚÉņä£ņ▓śļ¤╝ yņŚÉļŗż linear ņŚ░ņé░ņØä Ē¢łņØä ļĢī signal ļéśņś┤

ŌĆólinear modelņØ╝ ļĢī y, dņØś covarianceļŖö 0ņØ┤ļ»ĆļĪ£

Sa(x) = awT

x = ay

cov[x, y]

= cov[S(x), y]

= cov[awT

x, y]

= a Ōŗģ cov[y, y]

ŌćÆ a =

cov[x, y]

Žā2

y

ŌĆóļ¦īņĢĮ dņÖĆ sĻ░Ć orthogonal ĒĢśļŗżļ®┤

DeConvNetĻ│╝ Ļ░ÖņØĆ filter-based ļ░®ņŗØ

Ļ│╝ ņØ╝ņ╣śĒĢ£ļŗż.

ŌĆóConvolution layerņŚÉņä£ ļ¦żņÜ░ ņל ļÅÖņ×æ

ŌĆóFC layerņŚÉ ReLUĻ░Ć ņŚ░Ļ▓░ļÉśņ¢┤ņ׳ļŖö ļČĆļČä

ņŚÉņäĀ correlationņØä ņÖäņĀäĒ׳ ņĀ£Ļ▒░ĒĢĀ ņłś ņŚå

ņ£╝ļ»ĆļĪ£ ņĢäļל Ēæ£ņ▓śļ¤╝ criterion ņłśņ╣ś ļé«ņØī

VGG16ņŚÉņä£

criterion ļ╣äĻĄÉ

ļ¦ēļīĆĻĘĖļלĒöä ņł£ņä£ļīĆļĪ£

random, S_w,ŌĆ©

S_a, S_a+-](https://image.slidesharecdn.com/patternnetgyubinson-190517155324/85/Learning-how-to-explain-neural-networks-PatternNet-and-PatternAttribution-22-320.jpg)

![/ 29

6.2 PatternNet & PatternAttribution

23

The two-component(Non-linear) estimator

ŌĆóņĢ×ņäĀ linear estimatorņÖĆ Ļ░ÖņØĆ trickņØä ņō░ņ¦Ćļ¦īŌĆ©

y Ļ░ÆņØś ļČĆĒśĖņŚÉ ļö░ļØ╝ Ļ░üĻ░ü ļŗżļź┤Ļ▓ī ņ▓śļ”¼ĒĢ£ļŗż.

ŌĆóļē┤ļ¤░ņØ┤ ĒÖ£ņä▒ĒÖöļÉśņ¢┤ņ׳ļŖöņ¦ĆņØś ņŚ¼ļČĆņŚÉ ļīĆĒĢ£ ņĀĢļ│┤ļŖöŌĆ©

distractorņŚÉļÅä ņĪ┤ņ×¼ yĻ░Ć ņØīņłśņØĖ ļČĆļČäļÅä Ļ│äņé░ ĒĢäņÜö

ŌĆóReLU ļĢīļ¼ĖņŚÉ ņśżņ¦ü positive domainļ¦īŌĆ©

locally ņŚģļŹ░ņØ┤ĒŖĖ ļÉśĻĖ░ ļĢīļ¼ĖņŚÉ ņØ┤ļź╝ ļ│┤ņĀĢ

ŌĆócovariance Ļ│ĄņŗØņØĆ ļŗżņØīĻ│╝ Ļ░ÖĻ│Ā

ŌĆóļČĆĒśĖņŚÉ ļö░ļØ╝ ļö░ļĪ£ Ļ│äņé░, Ļ░Ćņżæņ╣śļĪ£ ĒĢ®ĒĢ£ļŗż.

Sa+ŌłÆ(x) =

{

a+wŌŖ║

x if╠²wŌŖ║

x > 0

aŌłÆwŌŖ║

x otherwise

x =

{

s+ + d+ if╠²y > 0

sŌłÆ + dŌłÆ otherwise

cov(x, y) = Øö╝[xy] ŌłÆ Øö╝[x]Øö╝[y]

cov(x, y) = ŽĆ+(Øö╝+[xy] ŌłÆ Øö╝+[x]Øö╝[y])

+(1 ŌłÆ ŽĆ+)(Øö╝ŌłÆ[xy] ŌłÆ Øö╝ŌłÆ[x]Øö╝[y])

cov(s, y) = ŽĆ+(Øö╝+[sy] ŌłÆ Øö╝+[s]Øö╝[y])

+(1 ŌłÆ ŽĆ+)(Øö╝ŌłÆ[sy] ŌłÆ Øö╝ŌłÆ[s]Øö╝[y])

ŌĆócov(x,y), cov(s,y)Ļ░Ć ņØ╝ņ╣śĒĢĀ ļĢī, ņ¢æņØś ļČĆĒśĖņŚÉ ļīĆĒĢ┤ ĒÆĆļ®┤

a+ =

Øö╝+[xy] ŌłÆ Øö╝+[x]Øö╝[y]

wŌŖ║ Øö╝+[xy] ŌłÆ wŌŖ║ Øö╝+[x]Øö╝[y]](https://image.slidesharecdn.com/patternnetgyubinson-190517155324/85/Learning-how-to-explain-neural-networks-PatternNet-and-PatternAttribution-23-320.jpg)

![/ 29

6.2 PatternNet & PatternAttribution

25

PatternNet and PatternAttribution

ŌĆóPatternNet, Linear

ŌĆócov[x,y], cov[s,y]Ļ░Ć Ļ░ÖņĢäņä£

ŌĆóaļź╝ Ļ│äņé░ĒĢĀ ļĢī x, yļĪ£ļ¦ī Ļ│äņé░ Ļ░ĆļŖź

ŌĆóPatternNet, Non-linear

ŌĆóReLU activation ĒŖ╣ņä▒ Ļ│ĀļĀż

ŌĆóņ¢æ/ņØī ļéśļłĀņä£ a Ļ░Æ Ļ│äņé░

ŌĆóNon-linear ļ¬©ļŹĖņŚÉņä£ ņä▒ļŖź ņóŗņØī

ŌĆóPatternAttribution

ŌĆóa Ļ░ÆņŚÉ wļź╝ element-wise Ļ│▒ĒĢ£ Ļ▓░Ļ│╝ļĪ£

ŌĆóĒĢ┤ņäØĒĢśĻĖ░ ņē¼ņÜ┤ ņóĆ ļŹö Ļ╣öļüöĒĢ£ŌĆ©

heat mapņØä ņāØņä▒ĒĢĀ ņłś ņ׳ļŗż. r = w ŌŖÖ a+

a =

cov[x, y]

Žā2

y

a+ =

Øö╝+[xy] ŌłÆ Øö╝+[x]Øö╝[y]

wŌŖ║ Øö╝+[xy] ŌłÆ wŌŖ║ Øö╝+[x]Øö╝[y]](https://image.slidesharecdn.com/patternnetgyubinson-190517155324/85/Learning-how-to-explain-neural-networks-PatternNet-and-PatternAttribution-25-320.jpg)

![[ņ╗┤Ēō©Ēä░ļ╣äņĀäĻ│╝ ņØĖĻ│Ąņ¦ĆļŖź] 7. ĒĢ®ņä▒Ļ│▒ ņŗĀĻ▓Įļ¦Ø 2](https://cdn.slidesharecdn.com/ss_thumbnails/lec7convolutionnetworks2-210213150820-thumbnail.jpg?width=560&fit=bounds)

![[Paper Review] Visualizing and understanding convolutional networks](https://cdn.slidesharecdn.com/ss_thumbnails/visualizingandunderstandingconvolutionalnetworks-171116075511-thumbnail.jpg?width=560&fit=bounds)

![[ņ╗┤Ēō©Ēä░ļ╣äņĀäĻ│╝ ņØĖĻ│Ąņ¦ĆļŖź] 7. ĒĢ®ņä▒Ļ│▒ ņŗĀĻ▓Įļ¦Ø 1](https://cdn.slidesharecdn.com/ss_thumbnails/lec7convolutionnetwork-210207104334-thumbnail.jpg?width=560&fit=bounds)

![[ņ╗┤Ēō©Ēä░ļ╣äņĀäĻ│╝ ņØĖĻ│Ąņ¦ĆļŖź] 5. ņŗĀĻ▓Įļ¦Ø](https://cdn.slidesharecdn.com/ss_thumbnails/lec5neuralnetwork-210125014802-thumbnail.jpg?width=560&fit=bounds)

![[ņ╗┤Ēō©Ēä░ļ╣äņĀäĻ│╝ ņØĖĻ│Ąņ¦ĆļŖź] 6. ņŚŁņĀäĒīī 2](https://cdn.slidesharecdn.com/ss_thumbnails/lec6backpropagation2-210203225156-thumbnail.jpg?width=560&fit=bounds)

![[ņ╗┤Ēō©Ēä░ļ╣äņĀäĻ│╝ ņØĖĻ│Ąņ¦ĆļŖź] 8. ĒĢ®ņä▒Ļ│▒ ņŗĀĻ▓Įļ¦Ø ņĢäĒéżĒģŹņ▓ś 5 - Others](https://cdn.slidesharecdn.com/ss_thumbnails/lec8convolutionnetworksarcitecture5others-210215060452-thumbnail.jpg?width=560&fit=bounds)

![[ņ╗┤Ēō©Ēä░ļ╣äņĀäĻ│╝ ņØĖĻ│Ąņ¦ĆļŖź] 8. ĒĢ®ņä▒Ļ│▒ ņŗĀĻ▓Įļ¦Ø ņĢäĒéżĒģŹņ▓ś 1 - ņĢīļĀēņŖżļäĘ](https://cdn.slidesharecdn.com/ss_thumbnails/lec8convolutionnetworksarcitecture1alexnet-210213163008-thumbnail.jpg?width=560&fit=bounds)

![[paper review] ņåÉĻĘ£ļ╣ł - Eye in the sky & 3D human pose estimation in video with ...](https://cdn.slidesharecdn.com/ss_thumbnails/190321eyeposegyubin-190517100712-thumbnail.jpg?width=560&fit=bounds)

![[ņŗĀĻ▓Įļ¦ØĻĖ░ņ┤ł] ņŗ¼ņĖĄņŗĀĻ▓Įļ¦ØĻ░£ņÜö](https://cdn.slidesharecdn.com/ss_thumbnails/nn10-180318142325-thumbnail.jpg?width=560&fit=bounds)

![[ĒÖŹļīĆ ļ©ĖņŗĀļ¤¼ļŗØ ņŖżĒä░ļöö - ĒĢĖņ”łņś© ļ©ĖņŗĀļ¤¼ļŗØ] 5ņן. ņä£ĒżĒŖĖ ļ▓ĪĒä░ ļ©ĖņŗĀ](https://cdn.slidesharecdn.com/ss_thumbnails/handson-mlch-180905033306-thumbnail.jpg?width=560&fit=bounds)