A Beginner's guide to understanding Autoencoder

- 1. A Beginner's guide to understanding Autoencoder, 2017.03.06, 이승은, https://blog.keras.io/building-autoencoders-in-keras.html

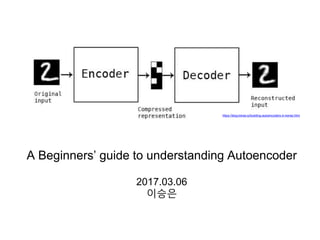

- 2. How would you describe an autoencoder in one sentence? Input' = Decoder(Encoder(Input)), that is, it compresses the input and then reconstructs it. Because it needs no labels, it is well known as an unsupervised learning method among neural networks.
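
A minimal NumPy sketch of Input' = Decoder(Encoder(Input)), assuming a single tanh encoder layer and a linear decoder with random, untrained weights. The sizes `d = 8` and `p = 3` and the names `encoder`/`decoder` are hypothetical choices for illustration; the point is only the shapes: the code is smaller than the input, and the reconstruction has the input's dimension.

```python
import numpy as np

rng = np.random.default_rng(0)

d, p = 8, 3  # input dimension d, code dimension p (p < d: a bottleneck)

# Hypothetical randomly initialised (untrained) parameters
W_enc = rng.normal(scale=0.1, size=(p, d))
b_enc = np.zeros(p)
W_dec = rng.normal(scale=0.1, size=(d, p))
b_dec = np.zeros(d)

def encoder(x):
    """Map an input x of shape (d,) to a code z of shape (p,)."""
    return np.tanh(W_enc @ x + b_enc)

def decoder(z):
    """Map a code z of shape (p,) back to a reconstruction x' of shape (d,)."""
    return W_dec @ z + b_dec

x = rng.normal(size=d)
x_rec = decoder(encoder(x))          # Input' = Decoder(Encoder(Input))
print(encoder(x).shape, x_rec.shape)  # (3,) (8,)
```

No labels appear anywhere: the target for `x_rec` is `x` itself.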

- 3. Let's look at its characteristics in a figure, using the simplest case of a single hidden layer (the hidden layer is called the code). (1) Encode into the code: the dimension of the input X shrinks (a bottleneck code). (2) Decode the code. (3) X -> X' (X' has the same dimension as X). https://en.wikipedia.org/wiki/Autoencoder

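- 4. Writing the figure as equations (https://en.wikipedia.org/wiki/Autoencoder): • Encoder $\phi: \mathcal{X} \to \mathcal{F}$ and decoder $\psi: \mathcal{F} \to \mathcal{X}$ are chosen to minimize the distance between X and X': $\phi, \psi = \arg\min_{\phi, \psi} \lVert X - (\psi \circ \phi) X \rVert^2$ • Code: $z = \sigma(Wx + b)$, where $\sigma$ is an activation function (a function such as ReLU that introduces non-linearity) • Output: $x' = \sigma'(W'z + b')$ • Training minimizes the loss function, i.e. the distance between x and x': $\mathcal{L}(x, x') = \lVert x - x' \rVert^2 = \lVert x - \sigma'(W'(\sigma(Wx + b)) + b') \rVert^2$ • Because the dimension p of z is smaller than the dimension d of x, the encoder can be thought of as producing a compressed representation of x. • The constraint p < d is an essential form of regularization: without it (or some other regularization), the network can simply learn the identity function f(x) = x. • Both the code and the output are deterministic mappings of the input.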
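
The equations above can be turned into a small training loop. This sketch assumes a sigmoid for σ, the identity for σ', the squared-error loss averaged over a batch, and plain full-batch gradient descent with hand-written backpropagation; the data, sizes and learning rate are hypothetical. NumPy rows are examples here, so `X @ W` plays the role of $Wx$ (the weight matrices are stored transposed).

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def train_autoencoder(X, p, lr=0.1, epochs=500, seed=0):
    """Minimise mean ||x - sigma'(W' sigma(W x + b) + b')||^2 over the rows of X.

    Assumptions: sigma = sigmoid (code), sigma' = identity (output)."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W = rng.normal(scale=0.1, size=(d, p))    # encoder weights (W, transposed)
    b = np.zeros(p)
    W2 = rng.normal(scale=0.1, size=(p, d))   # decoder weights (W', transposed)
    b2 = np.zeros(d)
    losses = []
    for _ in range(epochs):
        Z = sigmoid(X @ W + b)                # code z, with p < d
        X_rec = Z @ W2 + b2                   # reconstruction x'
        err = X_rec - X
        losses.append(np.mean(np.sum(err ** 2, axis=1)))
        # Backpropagate the loss through the decoder, then the encoder
        g_out = 2.0 * err / n                 # dL/dx'
        g_z = (g_out @ W2.T) * Z * (1.0 - Z)  # dL/d(pre-activation of z), uses the old W2
        W2 -= lr * (Z.T @ g_out)
        b2 -= lr * g_out.sum(axis=0)
        W -= lr * (X.T @ g_z)
        b -= lr * g_z.sum(axis=0)
    return losses

X = np.random.default_rng(1).normal(size=(200, 10))   # hypothetical data, d = 10
losses = train_autoencoder(X, p=4)
print(losses[0], losses[-1])   # the reconstruction loss should decrease
```

Any other differentiable loss or activation could be swapped in; the structure (encode, decode, compare with x, backpropagate) stays the same.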

- 5. So what is an autoencoder actually good for? Subtitle: what does the code (z) mean?

- 6. The paper that first proposed the autoencoder (Baldi, P. and Hornik, K., 1989) saw its significance in "implementing PCA with backpropagation". (Baldi, P. and Hornik, K. (1989) Neural networks and principal components analysis: Learning from examples without local minima. Neural Networks.) So... what exactly is PCA (Principal Component Analysis)?

- 7. What is PCA (Principal Component Analysis)? (If terms like orthogonal or projection are unfamiliar, please study at least basic linear algebra first!) • PCA applies an orthogonal transformation that turns N correlated variables into M uncorrelated variables, so the dimension drops from N to M (M ≤ N)! These M uncorrelated variables are called the principal components. (This assumes that the data lie near a linear manifold in the high-dimensional space.) • In other words, PCA finds the M orthogonal directions along which the data vary the most and projects the N-dimensional data onto them. Information about where the data lie in the remaining N − M directions is lost, but because those directions have little variation, the loss is kept to a minimum. • Along the N − M directions that are not represented, the data are reconstructed with the mean value over all the data. • As in Figure 2, each red point is represented by a green point, so the distance between them is the error. Figure 1: PCA from 3-D to 2-D. Figure 2: PCA from 2-D to 1-D. http://www.nlpca.org/pca_principal_component_analysis.html https://en.wikipedia.org/wiki/Principal_component_analysis
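
A NumPy sketch of the projection and reconstruction just described, assuming PCA is computed from the SVD of the mean-centred data (one standard way; an eigendecomposition of the covariance matrix would work too). The 3-D toy data lying near a 2-D plane, mirroring Figure 1, are made up for illustration, as is the name `pca_reconstruct`.

```python
import numpy as np

def pca_reconstruct(X, M):
    """Project the rows of X (N-dimensional) onto the M directions of greatest
    variance, then map back to N dimensions. Along the N - M dropped directions
    every point is reconstructed with the mean of the data."""
    mean = X.mean(axis=0)
    Xc = X - mean
    # Rows of Vt are orthonormal principal directions, sorted by decreasing variance
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    V = Vt[:M].T                  # (N, M)
    Z = Xc @ V                    # M uncorrelated principal components per point
    X_rec = Z @ V.T + mean        # reconstruction in the original N-dimensional space
    return Z, X_rec

rng = np.random.default_rng(0)
# Hypothetical 3-D data lying close to a 2-D plane (cf. Figure 1: PCA from 3-D to 2-D)
latent = rng.normal(size=(500, 2))
basis = np.array([[1.0, 0.5, 0.0],
                  [0.0, 1.0, 2.0]])
X = latent @ basis + 0.05 * rng.normal(size=(500, 3))

Z, X_rec = pca_reconstruct(X, M=2)
# Reconstruction error: mean squared distance between each point and its projection
err = np.mean(np.sum((X - X_rec) ** 2, axis=1))
print(Z.shape, err)
```

Because the dropped direction carries only the small noise, `err` stays small; keeping all three directions (`M=3`) reconstructs the data exactly.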

- 8. An autoencoder for implementing PCA (Lecture 15.1, From PCA to autoencoders [Neural Networks for Machine Learning] by Geoffrey Hinton) • Build a network that reconstructs its input so that the output x' matches the input x, and apply gradient descent learning to minimize the reconstruction error. • The code z, with M hidden units, becomes a compressed representation of the N-dimensional input. • If the hidden and output layers are linear, the autoencoder reaches the same reconstruction error as PCA, but its hidden units need not coincide with the principal components (they span the same space, but their axes can differ from, or be skewed relative to, the PCA axes!). • Such a network is less efficient than PCA (although with very large amounts of training data it might become more efficient). • Moreover, adding non-linear layers before and after the code gives a generalized version of PCA that can efficiently represent data lying on or near a curved (non-linear) manifold. • The encoder converts coordinates in the input space into coordinates on the manifold. • The decoder does the reverse, converting coordinates on the manifold into coordinates in the output space. • However, the initial weights must be close to a good solution to avoid getting stuck in poor local minima.
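
The claim that a linear autoencoder reaches PCA's reconstruction error can be checked numerically. This sketch assumes centred data (so no biases), linear code and output layers, and plain gradient descent; the data, sizes, learning rate and iteration count are hypothetical. It compares the two errors and checks that the hidden units span the same subspace as the top principal components, even though the weight columns themselves need not be the principal directions.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d, M = 1000, 5, 2
# Hypothetical centred data with variances 4, 2, 0.5, 0.2, 0.1 along the axes
X = rng.normal(size=(n, d)) * np.sqrt([4.0, 2.0, 0.5, 0.2, 0.1])
X -= X.mean(axis=0)

def recon_error(X, X_rec):
    return np.mean(np.sum((X - X_rec) ** 2, axis=1))

# PCA: project onto the top-M principal directions and back
_, _, Vt = np.linalg.svd(X, full_matrices=False)
V = Vt[:M].T
pca_err = recon_error(X, X @ V @ V.T)

# Linear autoencoder: z = x W (M hidden units), x' = z W2, no non-linearity, no biases
W = rng.normal(scale=0.1, size=(d, M))
W2 = rng.normal(scale=0.1, size=(M, d))
lr = 0.02
for _ in range(3000):
    Z = X @ W
    g_out = 2.0 * (Z @ W2 - X) / n     # dL/dx'
    g_W = X.T @ (g_out @ W2.T)         # computed before W2 changes
    W2 -= lr * (Z.T @ g_out)
    W -= lr * g_W

ae_err = recon_error(X, X @ W @ W2)
print(pca_err, ae_err)   # the two errors should be (almost) equal

# Orthogonal projector onto the space spanned by the hidden units' weight vectors
P_W = W @ np.linalg.pinv(W)
```

`P_W` should match `V @ V.T`, the projector onto the top-M principal subspace, while `W` itself can be any invertible mix of those directions.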

- 9. So... what is an autoencoder good for, then? It can do non-linear dimension reduction, but the evidence for this was unclear, and in the linear case PCA performed better. Still not convinced?!?! (The first reaction to autoencoders was lukewarm.) So, at the time, people mostly used PCA (it is very useful for visualization and classification)!

- 10. 1989: Baldi, P. and Hornik, K., Neural networks and principal components analysis: Learning from examples without local minima. Neural Networks. 2006: Hinton & Salakhutdinov, Reducing the Dimensionality of Data with Neural Networks, Science. Let's apply pretraining to deep autoencoders!

- 11. Reducing the Dimensionality of Data with Neural Networks, Hinton & Salakhutdinov, Science, 2006; [Lecture 15.2] Deep autoencoders by Geoffrey Hinton. The first successful deep autoencoder, built by Hinton and Salakhutdinov • Besides non-linear dimension reduction, deep autoencoders have several advantages: • they provide flexible mappings in both directions • learning time is linear in the number of training cases (or better) • the final encoding model is fairly fast • However! Their weights were hard to optimize with backpropagation, so they were not used before 2006: • with large initial weights, training converges to poor local minima • with small initial weights, backpropagation suffers from the vanishing gradient problem • Applying a new method to autoencoders produced the first successful deep autoencoder: • layer-by-layer pre-training • (alternatively, careful weight initialization as in Echo-State Nets) • Reconstruction itself (previously the focus had been only on dimension reduction) began to attract attention.

- 12. To understand the structure of a deep autoencoder, there are a few things we need to know first... Let's go through the following and then come back! RBM and DBN, and greedy layer-wise training

- 13. RBM, Restricted Boltzmann machine • A variant of the Boltzmann machine* and a generative stochastic artificial neural network that can learn a probability distribution over its inputs. It consists of visible units and hidden units, each of which is a binary unit. • Unlike a general Boltzmann machine, it has a single layer of hidden units, and there are no connections among the hidden units (nor among the visible units). Therefore, given the state of the visible units, the hidden unit activations are conditionally independent. • Because the graph is bipartite, the converse also holds: given the hidden units, the visible unit activations are conditionally independent too. • Consequently, when the visible units are clamped, the hidden units reach thermal equilibrium in a single step. • As a result, training and inference take far less time than in a general Boltzmann machine. • Depending on the goal (dimensionality reduction, classification, collaborative filtering, feature learning, topic modeling, and so on), it can be used for both supervised and unsupervised tasks. RBMs can be applied to supervised problems as well; in the unsupervised case, the target y is replaced by x'. *Boltzmann machine: a kind of EBM (Energy-Based Model) with added hidden units; it is also a Markov network. Probabilities are defined through an energy function, and the log-likelihood gradient is estimated stochastically with MCMC sampling, which makes computation slow. For details, reading Reference 1 (in Korean) and Reference 2 (in Korean) carefully is recommended.
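
A NumPy sketch of the RBM's two conditionals and one block Gibbs step, assuming binary units and the standard energy $E(v,h) = -b^\top v - c^\top h - v^\top W h$ (the slide does not spell the energy out). Because there are no hidden-hidden or visible-visible weights, all hidden units can be sampled in parallel given v, and vice versa. Sizes and weights are hypothetical.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

rng = np.random.default_rng(0)
n_visible, n_hidden = 6, 3
W = rng.normal(scale=0.1, size=(n_visible, n_hidden))  # visible-hidden weights only
b = np.zeros(n_visible)                                 # visible biases
c = np.zeros(n_hidden)                                  # hidden biases

def energy(v, h):
    """Assumed standard binary-RBM energy: E(v, h) = -b.v - c.h - v^T W h."""
    return -(b @ v) - (c @ h) - v @ W @ h

def p_h_given_v(v):
    # No hidden-hidden weights: given v, each hidden unit is an independent Bernoulli
    return sigmoid(c + v @ W)

def p_v_given_h(h):
    # Bipartite graph: the converse holds too
    return sigmoid(b + W @ h)

v = rng.integers(0, 2, size=n_visible).astype(float)
ph = p_h_given_v(v)
h = (rng.random(n_hidden) < ph).astype(float)          # sample all hidden units at once
v_new = (rng.random(n_visible) < p_v_given_h(h)).astype(float)
print(ph, energy(v, h), v_new)
```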

- 14. Training an RBM (http://www.cs.toronto.edu/~rsalakhu/deeplearning/yoshua_icml2009.pdf, http://dsba.korea.ac.kr/wp/wp-content/seminar/Deep%20learning/RBM-2%20by%20H.S.Kim.pdf) • Training minimizes the negative log-likelihood (NLL). Applying stochastic gradient descent splits its gradient into a positive phase and a negative phase: $-\frac{\partial \log p(v)}{\partial \theta} = \mathbb{E}_{p(h \mid v)}\left[\frac{\partial E(v,h)}{\partial \theta}\right] - \mathbb{E}_{p(v,h)}\left[\frac{\partial E(v,h)}{\partial \theta}\right]$ • The positive phase (first term) can be computed from the input vector and the conditional probability p(h|v). The negative phase (second term) is an expectation under the model distribution p(v,h) defined by the energy E(v,h), which is hard to compute, so it is approximated by MCMC estimation with a Gibbs sampler. • Unlike an autoencoder, which is trained with backpropagation (BP), an RBM would in principle need infinitely many Gibbs sampling steps to reach the equilibrium distribution; CD-k (Contrastive Divergence, Hinton 2002) instead runs only k Gibbs steps starting from the data, which makes the maximum-likelihood (MLE) problem tractable.
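
A minimal CD-k sketch for a binary RBM, following the positive/negative-phase split above: the positive statistics use p(h|v) with v clamped to the data, and the negative statistics come from only k steps of block Gibbs sampling instead of a chain run to equilibrium. Using probabilities rather than samples in some of the statistics is a common practical choice, not something the slide specifies; the toy data, learning rate and names are hypothetical.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def cd_k_update(V, W, b, c, k=1, lr=0.1, rng=None):
    """One CD-k parameter update for a binary RBM on a batch V (rows = examples).

    W, b, c are updated in place. Returns the mean squared reconstruction error,
    which is only a rough progress monitor, not the quantity CD optimises."""
    if rng is None:
        rng = np.random.default_rng()
    # Positive phase: hidden probabilities with v clamped to the data
    ph0 = sigmoid(c + V @ W)
    h = (rng.random(ph0.shape) < ph0).astype(float)
    # Negative phase: only k steps of block Gibbs sampling (k >= 1)
    for _ in range(k):
        pv = sigmoid(b + h @ W.T)
        v = (rng.random(pv.shape) < pv).astype(float)
        ph = sigmoid(c + v @ W)
        h = (rng.random(ph.shape) < ph).astype(float)
    n = V.shape[0]
    W += lr * (V.T @ ph0 - v.T @ ph) / n
    b += lr * (V - v).mean(axis=0)
    c += lr * (ph0 - ph).mean(axis=0)
    return np.mean((V - pv) ** 2)

rng = np.random.default_rng(0)
# Hypothetical toy data: each row is one of two binary patterns
patterns = np.array([[1, 1, 1, 0, 0, 0],
                     [0, 0, 0, 1, 1, 1]], dtype=float)
V = patterns[rng.integers(0, 2, size=100)]
W = rng.normal(scale=0.01, size=(6, 2))
b = np.zeros(6)
c = np.zeros(2)
errors = [cd_k_update(V, W, b, c, k=1, lr=0.1, rng=rng) for _ in range(1000)]
print(errors[0], np.mean(errors[-50:]))
```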

- 15. DBN, Deep Belief Network (A fast learning algorithm for deep belief nets, Hinton et al., Neural Computation 2006; http://www.cs.toronto.edu/~rsalakhu/deeplearning/yoshua_icml2009.pdf) • A probabilistic generative model built by stacking RBMs. • The top two hidden layers form an undirected associative memory, and the remaining hidden layers form a directed graph. • Because it is built this way, it is also called a stacked RBM. • Its biggest advantage is layer-by-layer learning: each layer learns higher-level features from the layer below and passes them up. This is called greedy layer-wise training. • Each layer is trained once as an unsupervised RBM (Gibbs sampling + KL divergence minimization, i.e. contrastive divergence). The transpose of the learned weights is then used as the inference (recognition) weights. • Because the output of a lower layer is used as the input data of the next layer up, each layer's solution may not be optimal once all the layers above are taken into account, but training is far more efficient. • The whole network is initialized with the weights from layer-wise pretraining and then fine-tuned with backpropagation. Fine-tuning is done in a supervised way. (In the figure, all visible and hidden layers have the same dimension.)
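
Greedy layer-wise training can be sketched with a small CD-1 RBM trainer: each RBM is trained on the hidden probabilities produced by the already-trained (and from then on frozen) RBM below it. This is an illustrative sketch that assumes binary RBMs trained with CD-1, visible reconstructions kept as probabilities, and hidden probabilities (not binary samples) passed upward. It covers only the unsupervised pretraining stage, not the DBN's top-level associative memory or the supervised fine-tuning. Layer sizes and data are hypothetical.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def train_rbm(data, n_hidden, epochs=200, lr=0.1, seed=0):
    """Train one binary RBM with CD-1 (unsupervised) and return (W, b, c)."""
    rng = np.random.default_rng(seed)
    n, n_visible = data.shape
    W = rng.normal(scale=0.01, size=(n_visible, n_hidden))
    b = np.zeros(n_visible)
    c = np.zeros(n_hidden)
    for _ in range(epochs):
        ph0 = sigmoid(c + data @ W)                   # positive phase
        h0 = (rng.random(ph0.shape) < ph0).astype(float)
        pv1 = sigmoid(b + h0 @ W.T)                   # one Gibbs step back to v
        ph1 = sigmoid(c + pv1 @ W)                    # negative phase
        W += lr * (data.T @ ph0 - pv1.T @ ph1) / n
        b += lr * (data - pv1).mean(axis=0)
        c += lr * (ph0 - ph1).mean(axis=0)
    return W, b, c

def greedy_layerwise_pretrain(data, layer_sizes):
    """Train RBMs bottom-up; each layer's hidden probabilities feed the next RBM."""
    params, x = [], data
    for i, n_hidden in enumerate(layer_sizes):
        W, b, c = train_rbm(x, n_hidden, seed=i)
        params.append((W, b, c))
        x = sigmoid(c + x @ W)   # this layer is not updated again during pretraining
    return params, x

rng = np.random.default_rng(0)
data = (rng.random((100, 8)) < 0.5).astype(float)    # hypothetical binary data
params, top = greedy_layerwise_pretrain(data, [6, 4, 2])
print([W.shape for W, _, _ in params], top.shape)    # [(8, 6), (6, 4), (4, 2)] (100, 2)
```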

- 16. Back to the deep autoencoder: Reducing the Dimensionality of Data with Neural Networks, Hinton & Salakhutdinov, Science, 2006. A deep autoencoder with the DBN structure and greedy layer-wise pre-training • Pretraining: learning proceeds with a stack of four RBMs, each acting as an encoder/decoder pair (hence it is also called a stacked AE). • Each RBM in the stack acts as one layer of feature detectors; higher layers detect more abstract features. • Instead of minimizing the overall loss, the loss is minimized layer by layer (see the appendix slide for the loss function). • Unrolling: the transposes of the encoder weights are used as the decoder weights. • Encoder: the weights are initialized from the pretraining results instead of randomly. • Decoder: uses the transposed encoder weight matrices as its weights. • Fine-tuning: starting from the weights obtained above, backpropagation optimizes the whole network.
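
Unrolling can be sketched as follows: the encoder applies the pretrained RBM weights bottom-up, and the decoder starts from the same matrices transposed, applied in reverse order with each RBM's visible biases. We assume the 784-1000-500-250-30 sizes of the paper's MNIST network; the weights are random placeholders standing in for pretrained RBMs; and treating the 30-unit code layer as linear while all other layers are logistic is also an assumption. Fine-tuning would then update these weights with backpropagation, after which encoder and decoder weights need not stay tied.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

rng = np.random.default_rng(0)
sizes = [784, 1000, 500, 250, 30]   # assumed layer sizes

# Random placeholders for RBM-pretrained parameters: (W, visible bias b, hidden bias c)
rbms = [(rng.normal(scale=0.01, size=(m, k)), np.zeros(m), np.zeros(k))
        for m, k in zip(sizes[:-1], sizes[1:])]

def encode(x):
    for i, (W, _, c) in enumerate(rbms):
        a = x @ W + c
        x = a if i == len(rbms) - 1 else sigmoid(a)   # linear code layer (assumption)
    return x

def decode(z):
    # Unrolling: reuse each encoder matrix transposed, from the top RBM down
    for W, b, _ in reversed(rbms):
        z = sigmoid(z @ W.T + b)
    return z

x = rng.random((5, 784))   # hypothetical batch of 5 "images"
z = encode(x)
x_rec = decode(z)
print(z.shape, x_rec.shape)   # (5, 30) (5, 784)
```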

- 17. Reconstruction results compared: original vs. deep autoencoder vs. PCA (rows: real data, deep autoencoder, PCA) • Note that the code vector has dimension 30, as shown in the figure on the previous slide. • Looking at the 8, the reconstruction is even better than the real data (in which the 8 is slightly broken). • PCA clearly struggles to represent the data with 30 linear units. • This was the turning point: attention moved beyond dimension reduction as mere "PCA imitation" to the reconstruction step itself!

- 18. The deep autoencoder: a mix of generative and discriminative (http://sanghyukchun.github.io/61/) • A conventional autoencoder is a discriminative model that represents its input, whereas an RBM is a probabilistic model that learns a statistical distribution, so it has the character of a generative model. • The deep autoencoder, which mixes the two, therefore has generative character to some extent. • However, it learns the deterministic mapping $h = \sigma(Wx + b)$ rather than the probability $p(h = 1 \mid x) = \sigma(Wx + b)$, so it should be seen as still having a deterministic side. To summarize: conventional autoencoder (discriminative), RBM (generative), deep autoencoder (stacked autoencoder) with both characters.
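
The difference between the two views can be made concrete. In this sketch (hypothetical random weights, binary hidden units), the RBM view samples a binary h with probability $\sigma(Wx + b)$, so repeated draws differ, while the autoencoder view uses $h = \sigma(Wx + b)$ directly, which is the same every time and equals the average of many stochastic draws.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

rng = np.random.default_rng(0)
W = rng.normal(size=(3, 5))   # hypothetical weights
b = np.zeros(3)
x = rng.random(5)

p_on = sigmoid(W @ x + b)                            # RBM view: p(h = 1 | x)
h_stochastic = (rng.random(3) < p_on).astype(int)    # one random binary draw
h_deterministic = sigmoid(W @ x + b)                 # autoencoder view: h = sigma(Wx + b)

# Averaging many stochastic draws approaches the deterministic code
draws = (rng.random((10000, 3)) < p_on).astype(int)
print(h_stochastic, h_deterministic, draws.mean(axis=0))
```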

- 19. Autoencoder variants • Denoising autoencoder (2008): corrupts the input and learns to restore it. Extracting and Composing Robust Features with Denoising Autoencoders (P. Vincent, H. Larochelle, Y. Bengio, and P.-A. Manzagol, ICML '08, pp. 1096–1103, ACM, 2008) • Sparse autoencoder (2008): only a small fraction of the code units may be active for each input. Fast Inference in Sparse Coding Algorithms with Applications to Object Recognition (K. Kavukcuoglu, M. Ranzato, and Y. LeCun, CBLL-TR-2008-12-01, NYU, 2008); Sparse deep belief net model for visual area V2 (H. Lee, C. Ekanadham, and A. Y. Ng, NIPS 20, 2008) • Stacked denoising autoencoder (2010): Stacked Denoising Autoencoders: Learning Useful Representations in a Deep Network with a Local Denoising Criterion (P. Vincent, H. Larochelle, I. Lajoie, Y. Bengio, and P.-A. Manzagol, J. Mach. Learn. Res. 11, pp. 3371–3408, 2010) • Variational autoencoder (2013, 2014): completes a generative model in the true sense. Auto-Encoding Variational Bayes (D. P. Kingma and M. Welling, arXiv preprint arXiv:1312.6114, 2013); Stochastic Backpropagation and Approximate Inference in Deep Generative Models (D. J. Rezende, S. Mohamed, and D. Wierstra, arXiv preprint arXiv:1401.4082, 2014) • Besides these, there are many other variants, such as the contractive autoencoder and the multimodal autoencoder. I ran short of preparation time (T_T), so only the denoising autoencoder is briefly summarized here...

- 20. Denoising autoencoder (/zukun/icml2012-tutorial-representationlearning) • The model is trained on partially corrupted inputs and outputs the restored original input. • The goal is to prevent learning the identity function and to build a model that is robust to noise. • Corruption process: stochastic (random) corruption is added to the input. • For example, with probability v, randomly chosen input components are set to 0 (e.g. v = 0.5). • Other kinds of corruption can be used instead. • Undoing the corruption: X' is reconstructed (restored) from the corrupted input $\tilde{X}$. • To do this, the model has to capture the input distribution well. • The loss function compares x' with the original, noise-free input x. • In unsupervised pretraining, it performs as well as or better than RBMs.
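
The corruption step and the loss can be sketched in NumPy, assuming masking noise (each component zeroed with probability v) and a squared-error loss; the function names and data are hypothetical. Plugging in the identity function as the "reconstruction" shows why the trick helps: since the loss compares against the clean x, simply copying the corrupted input no longer gives zero loss.

```python
import numpy as np

def mask_corrupt(x, v, rng):
    """Stochastic corruption: set each component to 0 with probability v."""
    keep = rng.random(x.shape) >= v
    return x * keep

def denoising_loss(x, reconstruct, v, rng):
    """Reconstruct from the corrupted input x~, but measure the error against the clean x."""
    x_tilde = mask_corrupt(x, v, rng)
    x_rec = reconstruct(x_tilde)
    return np.mean(np.sum((x - x_rec) ** 2, axis=-1))

def identity(z):
    return z

rng = np.random.default_rng(0)
x = rng.random((1000, 20)) + 0.1      # hypothetical strictly positive inputs
x_tilde = mask_corrupt(x, v=0.5, rng=rng)
print(np.mean(x_tilde == 0))          # fraction of zeroed components, roughly v

print(denoising_loss(x, identity, v=0.5, rng=rng))   # > 0: copying x~ is not enough
```

In a real denoising autoencoder, `reconstruct` would be the decoder applied to the encoder's code, trained to drive this loss down.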

- 21. So how are autoencoders mainly used these days? Dimension reduction? Reconstruction? Pretraining? (Usually all three go together, but…) Their role in pretraining for supervised problems is getting the most attention.

- 22. Effect of unsupervised pretraining (1) (Greedy Layer-Wise Training of Deep Networks, Bengio et al., NIPS 2006) • Bengio et al. extended RBMs and DBNs to accept continuous-valued inputs, applied DBNs to supervised learning tasks, and ran the experiments below. • The results showed that greedy unsupervised layer-wise training helps deep networks with both optimization and generalization. In particular, it proved useful not only for DBNs but also for other deep and shallow networks. • Later, similar studies used autoencoders to improve performance on supervised learning tasks. *Autoencoders are sometimes called AutoAssociators (AA).
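
A compact sketch of the two-stage recipe in a single-hidden-layer setting: first train an autoencoder on unlabeled data and keep its encoder, then put a logistic output unit on top and fine-tune all weights on labeled data by backpropagation. This is a simplification for illustration, not Bengio et al.'s experimental setup; the autoencoder form (sigmoid code, linear output, squared error), the data, sizes and learning rates are all hypothetical.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def pretrain_encoder(X, n_hidden, lr=0.1, epochs=300, seed=0):
    """Unsupervised stage: fit a one-hidden-layer autoencoder to X, return the encoder."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W = rng.normal(scale=0.1, size=(d, n_hidden)); b = np.zeros(n_hidden)
    W2 = rng.normal(scale=0.1, size=(n_hidden, d)); b2 = np.zeros(d)
    for _ in range(epochs):
        H = sigmoid(X @ W + b)
        g = 2.0 * (H @ W2 + b2 - X) / n          # d(squared error)/d(output)
        gH = (g @ W2.T) * H * (1.0 - H)          # back through the sigmoid code
        W2 -= lr * (H.T @ g); b2 -= lr * g.sum(axis=0)
        W -= lr * (X.T @ gH); b -= lr * gH.sum(axis=0)
    return W, b                                   # the decoder is discarded

def fine_tune(X, y, W, b, lr=0.5, epochs=300, seed=0):
    """Supervised stage: logistic output on the pretrained encoder, backprop through all weights."""
    rng = np.random.default_rng(seed)
    W, b = W.copy(), b.copy()                     # initialised from pretraining, not randomly
    v = rng.normal(scale=0.1, size=W.shape[1]); c = 0.0
    n = len(y)
    losses = []
    for _ in range(epochs):
        H = sigmoid(X @ W + b)
        p = sigmoid(H @ v + c)
        losses.append(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))
        g = (p - y) / n                           # d(mean cross-entropy)/d(logit)
        gH = np.outer(g, v) * H * (1.0 - H)       # uses v before it is updated
        v -= lr * (H.T @ g); c -= lr * g.sum()
        W -= lr * (X.T @ gH); b -= lr * gH.sum(axis=0)
    return (W, b, v, c), losses

rng = np.random.default_rng(0)
X_unlabeled = rng.normal(size=(500, 10))          # hypothetical unlabeled data
X = rng.normal(size=(100, 10))                    # hypothetical small labeled set
y = (X[:, 0] > 0).astype(float)
W, b = pretrain_encoder(X_unlabeled, n_hidden=8)
params, losses = fine_tune(X, y, W, b)
print(losses[0], losses[-1])                      # training cross-entropy should go down
```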

- 23. Effect of unsupervised pretraining (2) (Why does unsupervised pre-training help deep learning? Dumitru Erhan, Yoshua Bengio, Aaron Courville, Pierre-Antoine Manzagol, Pascal Vincent, and Samy Bengio. JMLR, 11:625–660, February 2010; /zukun/icml2012-tutorial-representationlearning) • The effect of starting from initial weights near a good solution is huge! • The results below come from pretraining with an SDAE (Stacked Denoising Autoencoder) and then applying it to a supervised DBN.

- 24. The End

- 25. Euclidean Space and Manifold (https://ko.wikipedia.org/wiki/유클리드_기하학, http://astronaut94.tistory.com/6) Euclidean space: the Euclidean plane is two-dimensional; extending it to three (or more) dimensions gives Euclidean space, whose geometry is Euclidean geometry. Five postulates hold in Euclidean space: • Exactly one straight line joins any point to any other point. • Any line segment can be extended indefinitely at both ends. • A circle can be drawn with any point as its center and any length as its radius. • All right angles are equal to one another. • Parallel postulate: if a straight line crossing two straight lines makes the interior angles on the same side sum to less than two right angles (180°), then the two lines, if extended, must meet on the side where those angles sum to less than two right angles. Manifold: a topological space that is locally Euclidean, i.e. a topological space that is Euclidean within small regions; the concept arose along with non-Euclidean spaces. • Euclid's fifth postulate, the parallel postulate, does not hold on the Earth viewed as a whole, but it holds (approximately) if we restrict ourselves to a very small patch of land. • Because all the postulates, including Euclid's fifth, can hold in such small regions, this kind of space is called a manifold.

- 26. Bottleneck code vs. Overcomplete code (/danieljohnlewis/piotr-mirowski-review-autoencoders-deep-learning-ciuuk14) • "Bottleneck" code, i.e., a low-dimensional, typically dense, distributed representation • "Overcomplete" code, i.e., a high-dimensional, always sparse, distributed representation • (Both figures: Input → Code → Target = input.) These are two ways of designing the code. A conventional autoencoder is low-dimensional, so it can be regarded as using a bottleneck code. A sparse autoencoder (sparse coding), however, uses an overcomplete code.

- 28. Loss function when pretraining is applied (equations) (http://stats.stackexchange.com/questions/119959/what-does-pre-training-mean-in-deep-autoencoder)

- 29. Why does unsupervised pretraining work so well? (Why does unsupervised pre-training help deep learning? Dumitru Erhan et al., JMLR 2010) • Regularization hypothesis • Pretraining pushes the model toward representing P(X). • A model that represents P(X) well can also represent P(y|X) well. • Optimization hypothesis • Unsupervised pretraining starts from an initialization closer to a good solution. • It can reach lower local minima that random initialization cannot reach. • Training layer by layer is much easier.

![8. Autoencoder for implementing PCA (Lecture 15.1: From PCA to autoencoders, Geoffrey Hinton)](https://image.slidesharecdn.com/autoencoderintrobyselee-170531045306/85/A-Beginner-s-guide-to-understanding-Autoencoder-8-320.jpg)

![11. The first successful deep autoencoder (Hinton & Salakhutdinov, Science, 2006)](https://image.slidesharecdn.com/autoencoderintrobyselee-170531045306/85/A-Beginner-s-guide-to-understanding-Autoencoder-11-320.jpg)
