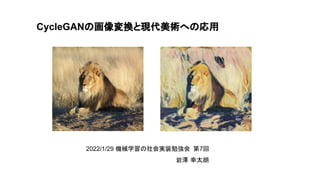

4. Image Translation with CycleGAN and Its Application to Contemporary Art

I built an image translation model by training CycleGAN on the works of Henri Matisse. https://www.youtube.com/watch?v=Bjbbcng6qOQ

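- 2. GAN (Generative Adversarial Network). Paper: Generative Adversarial Nets, Ian J. Goodfellow et al., 2014, https://arxiv.org/pdf/1406.2661.pdf. Notation: G: Generator; z: noise vector, e.g. (0.1, 0.4, -0.2 …); D: Discriminator; x: real data (training data), here from the MNIST dataset; G(z): fake data produced by the Generator. Diagram: z is fed to G, which outputs G(z) (fake); D receives either x or G(z) and outputs True: 1 or False: 0.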

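- 3. GAN training, expressed through BCE (binary cross-entropy; the defining equation on the slide is not preserved in this transcript). 1. Training the Discriminator (the Generator is held fixed): D(x) is the probability that x is judged to have label 1 (real), and the log D(x) term reaches its maximum of 0 when D(x) = 1; 1 - D(G(z)) is the probability that G(z) is judged to have label 0 (fake), and the log(1 - D(G(z))) term reaches its maximum of 0 when D(G(z)) = 0. 2. Training the Generator (the Discriminator is held fixed): the log(1 - D(G(z))) term reaches its minimum of -∞ when D(G(z)) = 1. Notation: G: Generator; z: noise vector, e.g. (0.1, 0.4, -0.2 …); D: Discriminator; x: real data (training data) from the MNIST dataset; G(z): fake data produced by the Generator; D outputs True: 1 or False: 0.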

- 4. CycleGAN. Paper: Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks, Jun-Yan Zhu, Taesung Park et al., 2017, https://arxiv.org/pdf/1703.10593.pdf. Repository: https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix. Loss computation: cycle_gan_model.py#L168-L177. Examples shown: Pix2Pix (line drawing to photo) and CycleGAN (photo to painting).

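- 5. CycleGAN losses: Adversarial Loss and Cycle Consistency Loss. G: horse to zebra; F: zebra to horse; Dy: zebra discriminator; Dx: horse discriminator. Notes: the Identity Mapping Loss is omitted here; the figure and equations are quoted from the paper.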

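- 6. Henri Matisse (アンリ・マティス), 1869-1954. French painter and sculptor active in the 19th and 20th centuries, and a leading figure of Fauvism. Works shown: Portrait of Madame Matisse with a Green Stripe (1905); Harmony in Red (1908); Woman with a Scarf (1936); The Sorrows of the King (1952), at the Centre Pompidou. Note: his Fauvist period lasted only three years, from 1905 to 1908.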

- 7. Data used: 1,008 Matisse works obtained from https://www.wikiart.org/en/henri-matisse. Training environment: Google Colab Pro GPU environment (1,072 yen/month).

- 9. Loss

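- 10. Munch Exhibition: The Cry of a Resonating Soul (ムンク展ー共鳴する魂の叫び), Tokyo Metropolitan Art Museum, 2018/10/27 to 2019/1/20. Production: P.I.C.S; technical development: aircode (Project page). https://vimeo.com/307183476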

- 11. Identity loss. GitHub issue #322, "intuition behind the loss functions loss_idt_A and loss_idt_B": https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/322. From the paper: https://arxiv.org/pdf/1703.10593.pdf

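- 12. References: https://arxiv.org/pdf/1406.2661.pdf (original GAN paper); https://arxiv.org/pdf/1703.10593.pdf (original CycleGAN paper); https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix (repository by the CycleGAN authors); "GANの損失関数" (GAN loss functions); "今さら聞けないGANの目的関数" (The GAN objective function you were afraid to ask about); "敵対的生成ネットワーク(GAN)" (Generative Adversarial Networks (GAN), SlideShare); Neural Style Transfer (画風変換, style transfer).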
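
The loss terms described on slides 3 and 5 can be sketched in plain Python. This is a minimal illustration, not the presenter's code and not the implementation in the linked repository (which uses PyTorch). The function names, the `EPS` clamp, the mean-based L1 distance, and the toy "generators" are all assumptions made for the example.

```python
import math

EPS = 1e-12  # assumed clamp to avoid log(0); not taken from the slides


def bce(prediction, label):
    """Binary cross-entropy for one probability and a 0/1 label."""
    p = min(max(prediction, EPS), 1.0 - EPS)
    return -(label * math.log(p) + (1 - label) * math.log(1.0 - p))


def discriminator_loss(d_real, d_fake):
    """D wants D(x) -> 1 and D(G(z)) -> 0.

    Equals -(log D(x) + log(1 - D(G(z)))), so minimizing it
    maximizes the two log terms described on slide 3.
    """
    return bce(d_real, 1) + bce(d_fake, 0)


def generator_value(d_fake):
    """The log(1 - D(G(z))) term, which the generator tries to minimize."""
    p = min(max(d_fake, EPS), 1.0 - EPS)
    return math.log(1.0 - p)


def l1(a, b):
    """Mean absolute difference between two equally sized flat images."""
    return sum(abs(u - v) for u, v in zip(a, b)) / len(a)


def cycle_consistency_loss(G, F, x, y):
    """L1(F(G(x)), x) + L1(G(F(y)), y), with G: X -> Y and F: Y -> X."""
    return l1(F(G(x)), x) + l1(G(F(y)), y)


# Toy stand-ins for the two generators (hypothetical): G brightens, F darkens.
G = lambda img: [v + 0.25 for v in img]
F = lambda img: [v - 0.25 for v in img]
horse = [0.0, 0.5, 0.25]   # a tiny "image" from domain X
zebra = [0.75, 0.5, 1.0]   # a tiny "image" from domain Y

print("D loss:", discriminator_loss(d_real=0.9, d_fake=0.1))
print("G term:", generator_value(d_fake=0.1), generator_value(d_fake=0.9))
print("cycle loss:", cycle_consistency_loss(G, F, horse, zebra))
```

Because the toy `G` and `F` are exact inverses, translating an image to the other domain and back returns the original, so the cycle consistency term vanishes; replacing `F` with a mapping that is not the inverse of `G` makes it positive. The generator term becomes more negative as `d_fake` approaches 1, matching the "minimum -∞ when D(G(z)) = 1" note on slide 3.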