[DL Reading Group] YOLO9000: Better, Faster, Stronger

- 1. DEEP LEARNING JP [DL Papers]
  - “YOLO9000: Better, Faster, Stronger” (CVPR’17 Best Paper) And the History of Object Detection
  - Makoto Kawano, Keio University
  - http://deeplearning.jp/

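- 2. Bibliographic information
  - CVPR 2017 Best Paper Honorable Mention
  - Joseph Redmon, Ali Farhadi (University of Washington)
  - Why I chose this paper:
    - I already knew the previous version, YOLO (same authors plus others)
    - I heard the upgraded version had won a best paper award
  - I will use this paper as the center of a talk on the history of object detection
    - From R-CNN (2014) to Mask R-CNN (2017)
    - R-CNN, SPPNet, Fast R-CNN, Faster R-CNN, YOLO, SSD, YOLO9000 (and only a brief look at Mask R-CNN)
  - This is a field I had barely touched, so I deeply regret volunteering
  - The talk is full of personal opinion and bias, so please point out any mistakes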


- 4. Agenda (history)
  - The NOT end-to-end learning era (2013 to 2015)
    - R-CNN (CVPR’14, 2013/11)
    - SPPNet (ECCV’14, 2014/6)
    - Fast R-CNN (ICCV’15, 2015/4)
  - The end-to-end learning era (2015 to present)
    - Faster R-CNN (NIPS’15, 2015/6)
    - YOLO (2015/6)
    - SSD (2015/12)
    - YOLO9000 (CVPR’17, 2016/12)
    - Mask R-CNN (2017/3)

- 5. Agenda (history)
  - The NOT end-to-end learning era (2013 to 2015)
    - R-CNN (CVPR’14, 2013/11): Girshick et al. (UC Berkeley)
    - SPPNet (ECCV’14, 2014/6): He et al. (Microsoft)
    - Fast R-CNN (ICCV’15, 2015/4): Girshick (Microsoft)
  - The end-to-end learning era (2015 to present)
    - Faster R-CNN (NIPS’15, 2015/6): He, Girshick et al. (Microsoft)
    - YOLO (2015/6): Redmon, Girshick et al. (University of Washington + Facebook)
    - SSD (2015/12): the Google camp
    - YOLO9000 (CVPR’17, 2016/12): Redmon et al. (University of Washington)
    - Mask R-CNN (2017/3): He, Girshick et al. (Facebook)
  - The whole world is being swept along by three people (or really, one): Kaiming He, Ross Girshick, Joseph Redmon (knowledge handed down?)

- 6. Agenda (lineage?)
  - [Lineage diagram. NOT end-to-end learning era (from 2013): R-CNN, SPPnet, Fast R-CNN. End-to-end learning era (from June 2015): Faster R-CNN, YOLO, SSD, YOLO9000. Then Mask R-CNN: are we entering the instance segmentation era?]

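- 7. What is object detection, anyway?
  - One of the computer vision (CV) tasks
  - Given an image, predict the location and the category (class) of each object in it
  - Basic pipeline:
    1. Select candidate object regions from the image (region proposal); these are also called boxes or bounding boxes
    2. Run image recognition on each box, i.e. solve a multi-class classification problem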

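- 8. The two eras of object detection
  - The NOT end-to-end learning era
    - Step 1 (region proposal) and step 2 (object recognition, i.e. classification) are done separately
    - An era when simply bringing in CNNs, already strong at object recognition, was considered impressive
  - The end-to-end learning era
    - Steps 1 and 2 are handled by a single neural network
    - An era aiming for both higher accuracy and higher speed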

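- 9. Region Proposal Methods
  - e.g. Selective Search [] and EdgeBoxes []; both are computationally very expensive
  - Selective Search (SS) groups similar regions, starting from the pixel level
    - Regions with similar features are merged step by step and extracted as a single object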
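A heavily simplified sketch of the greedy hierarchical grouping described above. Assumptions: the initial regions and their adjacency are given as input (the real method obtains them from an over-segmentation), and similarity is colour-only (the real method combines several measures). The names `Region`, `merge`, `similarity` and `hierarchical_grouping` are hypothetical. Every region ever formed contributes its bounding box as a proposal.

```python
from dataclasses import dataclass


@dataclass
class Region:
    box: tuple    # bounding box (x0, y0, x1, y1)
    color: tuple  # mean colour (r, g, b) of the region's pixels
    size: int     # number of pixels in the region


def merge(a, b):
    """Merge two regions: size-weighted mean colour and the union bounding box."""
    n = a.size + b.size
    color = tuple((ca * a.size + cb * b.size) / n for ca, cb in zip(a.color, b.color))
    box = (min(a.box[0], b.box[0]), min(a.box[1], b.box[1]),
           max(a.box[2], b.box[2]), max(a.box[3], b.box[3]))
    return Region(box, color, n)


def similarity(a, b):
    # Assumption: colour-only similarity (higher means more similar).
    return -sum((ca - cb) ** 2 for ca, cb in zip(a.color, b.color))


def hierarchical_grouping(regions, neighbors):
    """regions: {id: Region}; neighbors: set of frozenset({i, j}) for adjacent regions.
    Returns the bounding box of every region ever formed, as box proposals."""
    regions = dict(regions)
    neighbors = set(neighbors)
    proposals = [r.box for r in regions.values()]
    next_id = max(regions) + 1
    while neighbors:
        # Greedily pick the most similar adjacent pair (sorted first so ties are deterministic).
        pair = max(sorted(neighbors, key=sorted),
                   key=lambda p: similarity(*(regions[k] for k in p)))
        i, j = sorted(pair)
        regions[next_id] = merge(regions.pop(i), regions.pop(j))
        proposals.append(regions[next_id].box)
        # Re-point every adjacency that touched i or j to the merged region.
        updated = set()
        for p in neighbors:
            if p == pair:
                continue
            if i in p or j in p:
                (other,) = p - {i, j}
                updated.add(frozenset({next_id, other}))
            else:
                updated.add(p)
        neighbors = updated
        next_id += 1
    return proposals


if __name__ == "__main__":
    regions = {0: Region((0, 0, 1, 1), (0, 0, 0), 1),
               1: Region((1, 0, 2, 1), (10, 10, 10), 1),
               2: Region((2, 0, 3, 1), (200, 200, 200), 1)}
    print(hierarchical_grouping(regions, {frozenset({0, 1}), frozenset({1, 2})}))
```

The two dark regions merge first, then the result merges with the bright one, so the proposals range from single regions up to the whole strip.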

- 10. R-CNN (Regions with CNN features)
  - Find candidate object regions
  - Resize each region and extract features with a CNN
  - Classify with SVMs
  - [Figure labels: Selective Search, Object Classification]
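A minimal sketch of the "resize each region" step: crop a proposal and warp it to the CNN's fixed input size, ignoring the aspect ratio. Nearest-neighbour sampling and the (H, W, 3) image layout are simplifying assumptions, and `warp_region` is a hypothetical name; 227 × 227 is used as the default because it matches the AlexNet-style network used in R-CNN.

```python
import numpy as np


def warp_region(image, box, out_size=227):
    """Crop box (x0, y0, x1, y1), end-exclusive, from an (H, W, 3) image and warp it
    to (out_size, out_size, 3) with nearest-neighbour sampling (aspect ratio ignored)."""
    x0, y0, x1, y1 = box
    crop = image[y0:y1, x0:x1]
    h, w = crop.shape[:2]
    # For each output pixel, pick the nearest source row/column in the crop.
    rows = np.arange(out_size) * h // out_size
    cols = np.arange(out_size) * w // out_size
    return crop[rows[:, None], cols[None, :]]


if __name__ == "__main__":
    img = np.zeros((480, 640, 3), dtype=np.uint8)
    print(warp_region(img, (100, 50, 400, 250)).shape)
```

Each warped crop would then go through the CNN and the per-class SVMs; those stages are not sketched here.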

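- 11. Drawbacks of R-CNN
  - Each stage has to be trained separately
    - Regression of the region proposals (bounding boxes)
    - Fine-tuning of the CNN
    - Training of the multi-class SVMs
  - Slow at test (inference) time
    - Selective Search alone takes about 2 seconds per image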

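- 12. SPPnet
  - CNNs of this era required a fixed input image size
    - R-CNN also resized every region for this reason
  - Running the CNN on all region proposals (about 2,000) is slow
  - Proposes Spatial Pyramid Pooling
    - Split the feature map into grids of several sizes H×W and max-pool within each cell
  - Pros:
    - Successfully speeds things up
  - Cons:
    - It is unclear how to backpropagate through the SPP layer
    - The network cannot be trained through all of its layers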
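A minimal NumPy sketch of spatial pyramid pooling, assuming a (C, H, W) feature map and pyramid levels of 1 × 1, 2 × 2 and 4 × 4 grids (chosen for illustration; SPPnet used its own set of levels). Whatever H × W is, the output length is C × (1 + 4 + 16), which is what lets inputs of any size reach fixed-size fully connected layers.

```python
import numpy as np


def ceil_div(a, b):
    return -(-a // b)


def spatial_pyramid_pool(feature_map, levels=(1, 2, 4)):
    """Max-pool a (C, H, W) feature map over an n x n grid for each n in `levels`.

    Returns a vector of length C * sum(n * n), independent of H and W.
    """
    c, h, w = feature_map.shape
    pooled = []
    for n in levels:
        for i in range(n):
            # floor/ceil cell edges, so the cells cover the whole map even if H, W are not divisible by n
            y0, y1 = (i * h) // n, ceil_div((i + 1) * h, n)
            for j in range(n):
                x0, x1 = (j * w) // n, ceil_div((j + 1) * w, n)
                pooled.append(feature_map[:, y0:y1, x0:x1].max(axis=(1, 2)))
    return np.concatenate(pooled)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    print(spatial_pyramid_pool(rng.random((256, 13, 9))).shape)  # (5376,) = 256 * 21
```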

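- 13. Fast R-CNN
  - Made it possible to train the network directly for object detection
  - Softmax and a bounding-box regression layer instead of SVMs
  - Introduces the Region of Interest (RoI) Pooling layer
    - Projects the regions found by Selective Search (or similar) onto the feature map
    - Divides each region into an H×W grid and max-pools within each cell
    - Equivalent to a Spatial Pyramid Pooling layer with only one pyramid level
  - Personally, I think section 5.4, "Do SVMs outperform softmax?", is a major contribution
  - Pros:
    - Higher accuracy and higher speed in both training and testing
  - Cons:
    - Region proposal still relies on a separate algorithm such as Selective Search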
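A minimal NumPy sketch of RoI pooling for a single region, as described above. It assumes a (C, H, W) feature map, a feature stride of 16 (`spatial_scale=1/16`, as for a VGG16 conv5 map) and a 7 × 7 output grid; these defaults and the name `roi_pool` are illustrative. It is spatial pyramid pooling with a single level, applied to the projected region.

```python
import numpy as np


def ceil_div(a, b):
    return -(-a // b)


def roi_pool(feature_map, roi, spatial_scale=1 / 16, out_h=7, out_w=7):
    """feature_map: (C, H, W); roi: (x0, y0, x1, y1) in input-image pixels.
    Returns a (C, out_h, out_w) array regardless of the RoI's size."""
    c, h, w = feature_map.shape
    # Project the RoI onto the feature map (rounded) and clip it to the map.
    x0, y0, x1, y1 = (int(round(v * spatial_scale)) for v in roi)
    x0, y0 = min(max(x0, 0), w - 1), min(max(y0, 0), h - 1)
    x1, y1 = max(min(x1, w - 1), x0), max(min(y1, h - 1), y0)
    roi_h, roi_w = y1 - y0 + 1, x1 - x0 + 1
    out = np.empty((c, out_h, out_w), dtype=feature_map.dtype)
    for i in range(out_h):
        ys, ye = y0 + (i * roi_h) // out_h, y0 + ceil_div((i + 1) * roi_h, out_h)
        for j in range(out_w):
            xs, xe = x0 + (j * roi_w) // out_w, x0 + ceil_div((j + 1) * roi_w, out_w)
            # Max over one grid cell, for every channel at once.
            out[:, i, j] = feature_map[:, ys:ye, xs:xe].max(axis=(1, 2))
    return out


if __name__ == "__main__":
    fm = np.random.default_rng(0).random((512, 38, 50))
    print(roi_pool(fm, (64, 32, 400, 300)).shape)  # (512, 7, 7)
```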

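- 14. The dawn of the end-to-end learning era
  - No matter how much faster or more accurate the CNN part becomes, there is no future as long as we rely on Selective Search
  - Why not use a CNN for region proposal as well?
  - Enter Faster R-CNN and YOLO
    - Faster R-CNN: 2015/6/4
    - YOLO: 2015/6/8
    - Neither cites the other
    - ...and yet they share a co-author (Ross Girshick)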

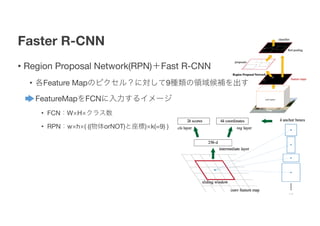

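- 15. Faster R-CNN
  - Region Proposal Network (RPN) + Fast R-CNN
  - Outputs 9 kinds of candidate regions (anchors) for each position (pixel) of the feature map
  - Conceptually, the feature map is fed into an FCN-like network
    - FCN: W × H × (number of classes)
    - RPN: w × h × ((2 object-or-not scores + 4 coordinates) × k), with k = 9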

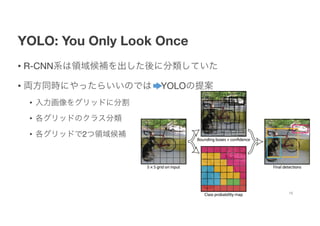
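A sketch of generating the k = 9 anchors per feature-map position, assuming the 3 scales (box areas 128², 256², 512²) and 3 aspect ratios (1:2, 1:1, 2:1) reported for Faster R-CNN and a feature stride of 16. Placing each anchor center in the middle of its stride cell is our own convention, and `make_anchors` is a hypothetical name.

```python
import numpy as np


def make_anchors(feat_h, feat_w, stride=16, scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Return (feat_h * feat_w * k, 4) anchors as (x0, y0, x1, y1) in image pixels,
    with k = len(scales) * len(ratios), ordered by position then by anchor."""
    base = []
    for s in scales:
        for r in ratios:            # r = height / width; the area stays s * s
            w = s / np.sqrt(r)
            h = s * np.sqrt(r)
            base.append((-w / 2, -h / 2, w / 2, h / 2))
    base = np.array(base)                                        # (k, 4), centred at the origin
    cy, cx = np.meshgrid((np.arange(feat_h) + 0.5) * stride,
                         (np.arange(feat_w) + 0.5) * stride, indexing="ij")
    centers = np.stack([cx, cy, cx, cy], axis=-1).reshape(-1, 1, 4)  # (H*W, 1, 4)
    return (centers + base[None]).reshape(-1, 4)


if __name__ == "__main__":
    anchors = make_anchors(38, 50)
    print(anchors.shape)  # (17100, 4) = 38 * 50 * 9 anchors
```

Per position, the RPN's classification branch then outputs 2k = 18 values and its regression branch 4k = 36 values, one set per anchor.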

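- 16. YOLO: You Only Look Once
  - The R-CNN family classifies regions after proposing them
  - Why not do both at the same time? Hence YOLO
    - Divide the input image into a grid
    - Classify each grid cell
    - Each grid cell proposes 2 candidate regions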
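A sketch of YOLO's output layout and target assignment, assuming the paper's PASCAL VOC settings S = 7, B = 2, C = 20 (a 7 × 7 × 30 output) and a 448 × 448 input. The grid cell containing an object's center is responsible for it; x, y are offsets within that cell and w, h are relative to the whole image. Both function names are hypothetical.

```python
def yolo_output_shape(S=7, B=2, C=20):
    # Each cell predicts B boxes (x, y, w, h, confidence) plus C class probabilities.
    return (S, S, B * 5 + C)


def responsible_cell(box, img_w, img_h, S=7):
    """box: (x0, y0, x1, y1) in pixels. Returns ((row, col), (x, y, w, h)) targets."""
    cx = (box[0] + box[2]) / 2 / img_w      # normalised centre, in [0, 1]
    cy = (box[1] + box[3]) / 2 / img_h
    col = min(int(cx * S), S - 1)
    row = min(int(cy * S), S - 1)
    x = cx * S - col                        # offset of the centre inside its cell
    y = cy * S - row
    w = (box[2] - box[0]) / img_w           # size relative to the whole image
    h = (box[3] - box[1]) / img_h
    return (row, col), (x, y, w, h)


if __name__ == "__main__":
    print(yolo_output_shape())
    print(responsible_cell((100, 150, 300, 400), 448, 448))
```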

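- 17. YOLO: You Only Look Once
  - The architecture is extremely simple
    - A CNN modeled on GoogLeNet
    - It outputs the class scores and box coordinates for each grid cell
  - Loss function (coordinate error, then confidence error, then classification error):

$$
\begin{aligned}
&\lambda_{\text{coord}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{obj}} \left[ (x_i - \hat{x}_i)^2 + (y_i - \hat{y}_i)^2 \right] \\
&+ \lambda_{\text{coord}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{obj}} \left[ \left(\sqrt{w_i} - \sqrt{\hat{w}_i}\right)^2 + \left(\sqrt{h_i} - \sqrt{\hat{h}_i}\right)^2 \right] \\
&+ \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{obj}} \left(C_i - \hat{C}_i\right)^2
+ \lambda_{\text{noobj}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{noobj}} \left(C_i - \hat{C}_i\right)^2 \\
&+ \sum_{i=0}^{S^2} \mathbb{1}_{i}^{\text{obj}} \sum_{c \in \text{classes}} \left(p_i(c) - \hat{p}_i(c)\right)^2
\end{aligned}
$$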

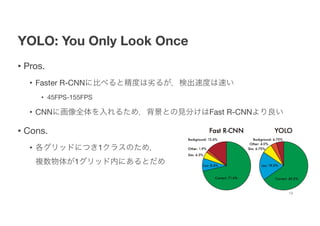
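The YOLO loss written out in NumPy, as a sketch. It assumes the responsibility masks and targets are already computed (in the paper, the confidence target of a responsible predictor is the IoU between its predicted box and the ground truth), takes the `noobj` mask to be 1 minus the `obj` mask, and uses the paper's λ_coord = 5 and λ_noobj = 0.5. The dict-of-arrays layout and the name `yolo_v1_loss` are our own choices.

```python
import numpy as np


def yolo_v1_loss(pred, target, obj_ij, obj_i, lambda_coord=5.0, lambda_noobj=0.5):
    """Sum-squared YOLO (v1) loss.

    pred, target: dicts with
        "xywh": (S*S, B, 4)  box x, y, w, h (w, h must be >= 0 for the square roots)
        "conf": (S*S, B)     confidence
        "cls":  (S*S, C)     class probabilities
    obj_ij: (S*S, B) 1 where predictor j of cell i is responsible for an object, else 0
    obj_i:  (S*S,)   1 where an object's centre falls in cell i, else 0
    """
    noobj_ij = 1.0 - obj_ij  # assumption: every non-responsible predictor counts as "noobj"
    px, py, pw, ph = np.moveaxis(pred["xywh"], -1, 0)
    tx, ty, tw, th = np.moveaxis(target["xywh"], -1, 0)

    # Coordinate error: centre offsets and square-rooted width/height.
    coord = lambda_coord * np.sum(obj_ij * ((px - tx) ** 2 + (py - ty) ** 2))
    size = lambda_coord * np.sum(
        obj_ij * ((np.sqrt(pw) - np.sqrt(tw)) ** 2 + (np.sqrt(ph) - np.sqrt(th)) ** 2))

    # Confidence error: full weight for responsible predictors, lambda_noobj for the rest.
    conf_sq = (pred["conf"] - target["conf"]) ** 2
    conf = np.sum(obj_ij * conf_sq) + lambda_noobj * np.sum(noobj_ij * conf_sq)

    # Classification error: only in cells that contain an object.
    cls = np.sum(obj_i[:, None] * (pred["cls"] - target["cls"]) ** 2)
    return coord + size + conf + cls
```

Down-weighting the `noobj` confidence term keeps the many empty cells from overwhelming the gradient coming from the few cells that contain objects.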

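- 18. YOLO: You Only Look Once
  - Pros:
    - Less accurate than Faster R-CNN, but faster at detection
      - 45 FPS, and 155 FPS for the smaller Fast YOLO
    - Because the CNN sees the whole image, it distinguishes objects from the background better than Fast R-CNN
  - Cons:
    - Each grid cell predicts only one class, so it fails when several objects fall inside one cell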

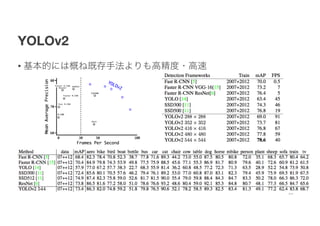

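- 19. YOLOv2
  - A straightforward improvement of YOLO
  - ①②③: improvements to the network architecture
  - ④: the output is produced by convolutional layers (an FCN) instead of a linear layer (following Faster R-CNN)
  - ⑤⑥: feed the data at multiple resolutions
  - ⑦⑧: use prior information from the data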

- 20. YOLOv2
  - Architecture tweaks
    - ① Add Batch Normalization to every convolutional layer
      - Speeds up convergence and has a regularizing effect
    - ② Switch to a new network, Darknet-19
      - Uses 3×3 filters, like VGG16
      - Uses Global Average Pooling from Network In Network
    - ③ Add a passthrough layer (I don't really understand this one)
      - "add a passthrough layer from the final 3 × 3 × 512 layer to the second to last convolutional layer"

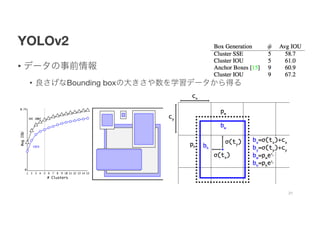
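The YOLOv2 paper describes the passthrough layer as bringing in higher-resolution (26 × 26) features by stacking adjacent features into channels instead of spatial positions, turning a 26 × 26 × 512 map into a 13 × 13 × 2048 map that can be concatenated with the coarse 13 × 13 features. A minimal space-to-depth sketch, assuming an (H, W, C) layout and block size 2; the channel ordering is our own choice and may differ from Darknet's `reorg` layer, and both function names are hypothetical.

```python
import numpy as np


def space_to_depth(x, block=2):
    """(H, W, C) -> (H/block, W/block, C*block*block): each block x block patch of
    neighbouring positions is stacked along the channel axis."""
    h, w, c = x.shape
    assert h % block == 0 and w % block == 0
    x = x.reshape(h // block, block, w // block, block, c)
    x = x.transpose(0, 2, 1, 3, 4)  # (H/b, W/b, b, b, C)
    return x.reshape(h // block, w // block, block * block * c)


def passthrough_concat(fine, coarse, block=2):
    # Hypothetical helper: reorganise the fine map, then concatenate along channels.
    return np.concatenate([space_to_depth(fine, block), coarse], axis=-1)


if __name__ == "__main__":
    fine = np.zeros((26, 26, 512))
    print(space_to_depth(fine).shape)  # (13, 13, 2048)
```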

- 21. YOLOv2
  - Prior information from the data
    - Obtain good bounding-box sizes, and a good number of boxes, from the training data

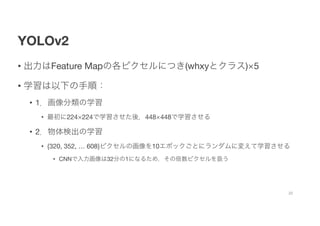
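In the paper, these priors come from running k-means on the training boxes with the distance d(box, centroid) = 1 − IoU(box, centroid) instead of Euclidean distance, settling on k = 5. A minimal sketch, assuming boxes are given as (w, h) pairs compared as if they shared a center; the centroid update is the ordinary mean, which is our simplification, and the function names are hypothetical.

```python
import numpy as np


def iou_wh(boxes, centroids):
    """IoU between (w, h) pairs, treating every box as if it shared the same centre.
    boxes: (N, 2), centroids: (K, 2) -> (N, K)."""
    inter = (np.minimum(boxes[:, None, 0], centroids[None, :, 0]) *
             np.minimum(boxes[:, None, 1], centroids[None, :, 1]))
    area_b = boxes[:, 0] * boxes[:, 1]
    area_c = centroids[:, 0] * centroids[:, 1]
    return inter / (area_b[:, None] + area_c[None, :] - inter)


def kmeans_anchors(boxes, k, iters=100, seed=0):
    """k-means on (w, h) with distance d = 1 - IoU(box, centroid)."""
    rng = np.random.default_rng(seed)
    centroids = boxes[rng.choice(len(boxes), size=k, replace=False)]
    for _ in range(iters):
        assign = np.argmin(1.0 - iou_wh(boxes, centroids), axis=1)
        # Mean of the assigned boxes; keep the old centroid if a cluster is empty.
        new = np.array([boxes[assign == i].mean(axis=0) if np.any(assign == i) else centroids[i]
                        for i in range(k)])
        if np.allclose(new, centroids):
            break
        centroids = new
    return centroids
```

Using 1 − IoU makes the clustering independent of box size, whereas Euclidean distance would let large boxes dominate the error.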

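- 22. YOLOv2
  - Output: for each position of the feature map, (x, y, w, h, objectness, and class scores) × 5 anchor boxes
  - Training procedure:
    1. Train for image classification
       - First train at 224×224, then continue training at 448×448
    2. Train for object detection
       - Every 10 batches, randomly switch the input size among {320, 352, …, 608} pixels
       - The CNN downsamples the input by a factor of 32, so only multiples of 32 are used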

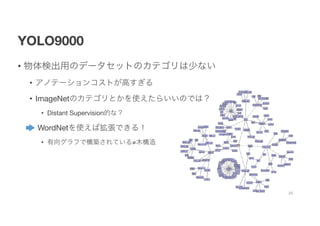
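A small sketch of the multi-scale schedule and the resulting output sizes, assuming square inputs, a downsampling factor of 32, and 5 anchors × (4 coordinates + 1 objectness + 20 classes) = 125 output channels as for PASCAL VOC. All names are hypothetical.

```python
import random

# Input sizes for multi-scale training: multiples of 32 from 320 to 608.
MULTI_SCALE_SIZES = list(range(320, 608 + 1, 32))


def output_grid(size, stride=32):
    """Side length of the output grid for a square input of `size` pixels."""
    return size // stride


def yolov2_output_channels(num_anchors=5, num_classes=20):
    # Per anchor: 4 box coordinates + 1 objectness score + one score per class.
    return num_anchors * (4 + 1 + num_classes)


def multi_scale_schedule(num_batches, every=10, seed=0):
    """Input size to use for each batch; a new size is drawn every `every` batches."""
    rng = random.Random(seed)
    sizes = []
    size = None
    for b in range(num_batches):
        if b % every == 0:
            size = rng.choice(MULTI_SCALE_SIZES)
        sizes.append(size)
    return sizes


if __name__ == "__main__":
    for size in sorted(set(multi_scale_schedule(50))):
        print(size, output_grid(size), yolov2_output_channels())
```

Because the network is fully convolutional, the same weights handle every size; only the output grid changes, e.g. a 416 × 416 input gives a 13 × 13 grid.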

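- 25. YOLO9000
  - Object detection datasets cover only a few categories
    - Annotation is far too expensive
  - Couldn't we use ImageNet's categories instead?
    - Something like distant supervision?
  - WordNet makes it possible to extend the label set!
    - But WordNet is built as a directed graph, not a tree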

- 26. YOLO9000
  - Build a WordTree from ImageNet's visual nouns
  - Labels can then be expressed as conditional probabilities

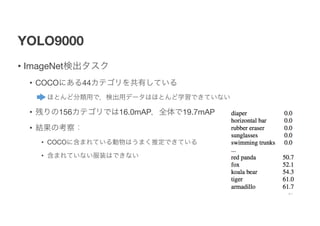
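A toy sketch of how a WordTree turns per-node scores into probabilities. Following the paper, a softmax is taken separately over each set of siblings (children of the same node), giving Pr(node | parent), and the absolute probability of a node is the product of these conditionals along the path to the root, with Pr(physical object) = 1. The tree, the logits and the function names are hypothetical.

```python
import math

# Hypothetical toy WordTree: child -> parent (None for the root).
PARENT = {"physical object": None, "animal": "physical object", "dog": "animal",
          "cat": "animal", "terrier": "dog", "hound": "dog"}


def softmax_over_siblings(logits, parent):
    """logits: {node: score} for every non-root node. The softmax is taken separately
    over each group of children sharing a parent, giving {node: Pr(node | parent)}."""
    groups = {}
    for node, p in parent.items():
        if p is not None:
            groups.setdefault(p, []).append(node)
    cond = {}
    for children in groups.values():
        m = max(logits[c] for c in children)       # subtract the max for numerical stability
        exps = {c: math.exp(logits[c] - m) for c in children}
        z = sum(exps.values())
        for c in children:
            cond[c] = exps[c] / z
    return cond


def absolute_prob(node, cond, parent):
    """Pr(node) = product of Pr(child | parent) along the path to the root (Pr(root) = 1)."""
    p = 1.0
    while parent[node] is not None:
        p *= cond[node]
        node = parent[node]
    return p


if __name__ == "__main__":
    logits = {"animal": 2.0, "dog": 1.5, "cat": 0.2, "terrier": 0.7, "hound": 0.1}
    cond = softmax_over_siblings(logits, PARENT)
    print(absolute_prob("terrier", cond, PARENT))
```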

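- 27. YOLO9000
  - ImageNet detection task
    - Shares 44 categories with COCO
    - For the remaining categories the model has seen almost only classification data, with hardly any detection data
    - 16.0 mAP on the remaining 156 categories, 19.7 mAP overall
  - Observations on the results:
    - Animals, which are covered by COCO, are detected well
    - Clothing, which COCO does not cover, is not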

- 28. Mask R-CNN
  - Not just object detection: it does instance segmentation
  - I will cover it if I have the energy left
