[DL Reading Group] An Image is Worth One Word: Personalizing Text-to-Image Generation using Textual Inversion

- 1. DEEP LEARNING JP [DL Papers] http://deeplearning.jp/ “An Image is Worth One Word: Personalizing Text-to-Image Generation using Textual Inversion” University of Tsukuba, M1, Yuki Sato

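- 2. Bibliographic information
  - Title: An Image is Worth One Word: Personalizing Text-to-Image Generation using Textual Inversion
  - Authors: Rinon Gal (1, 2), Yuval Alaluf (1), Yuval Atzmon (2), Or Patashnik (1), Amit H. Bermano (1), Gal Chechik (2), Daniel Cohen-Or (1); (1) Tel-Aviv University, (2) NVIDIA
  - Venue: arXiv (2022/08/02)
  - Project page: https://textual-inversion.github.io/
  - Reasons for selection:
    - Text-to-image generation has been very active recently, and this work achieves not only diverse outputs but also generation that reflects the user's intent, so demand for it is likely to be high.
    - The method is simple and likely to have a wide range of applications.
  - Unless a source is stated, figures and tables are taken from the paper or the project page.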

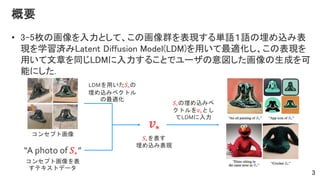

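- 3. Overview
  - Given 3-5 images as input, a single-word embedding that represents this set of images is optimized using a pre-trained Latent Diffusion Model (LDM). Feeding sentences that use this embedding into the same LDM makes it possible to generate the images the user intends.
  - Figure labels: concept images; text describing the concept images (“A photo of S*”); optimization of the embedding vector of S* using the LDM; the embedding $v_*$ that represents S*; the embedding vector of S* is fed to the LDM as $v_*$.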

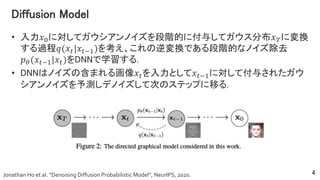

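- 4. Diffusion Model
  - Consider a forward process $q(x_t \mid x_{t-1})$ that adds Gaussian noise to an input $x_0$ step by step until it becomes a sample $x_T$ from a Gaussian distribution. A DNN learns the inverse of this process, the stepwise denoising $p_\theta(x_{t-1} \mid x_t)$.
  - The DNN takes the noisy image $x_t$ as input, predicts the Gaussian noise that was added to $x_{t-1}$, removes it, and moves on to the next step.
  - Source: Jonathan Ho et al. “Denoising Diffusion Probabilistic Models”, NeurIPS, 2020.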
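
The forward noising and noise-prediction objective described on this slide can be sketched as follows. This is a minimal illustration, assuming the closed-form $q(x_t \mid x_0)$ and the linear beta schedule from Ho et al.; the function names (`make_alpha_bar`, `q_sample`, `noise_prediction_loss`) and the 8x8x3 stand-in image are hypothetical and do not come from the slides.

```python
import numpy as np

def make_alpha_bar(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta_t) for a linear beta schedule (assumed)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def q_sample(x0, t, alpha_bar, rng):
    """Sample x_t from q(x_t | x_0) in closed form; also return the noise used."""
    eps = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return x_t, eps

def noise_prediction_loss(eps_pred, eps):
    """Mean squared error between predicted and true noise."""
    return float(np.mean((eps_pred - eps) ** 2))

rng = np.random.default_rng(0)
alpha_bar = make_alpha_bar()
x0 = rng.standard_normal((8, 8, 3))  # stand-in for an image (hypothetical)
x_t, eps = q_sample(x0, t=500, alpha_bar=alpha_bar, rng=rng)

# A perfect noise predictor would reach zero loss; an all-zero guess would not.
print(noise_prediction_loss(eps, eps))
print(noise_prediction_loss(np.zeros_like(eps), eps) > 0)
```

In a real model the prediction would come from the DNN evaluated on $x_t$ and $t$; here the true noise is reused only to show what the loss compares.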

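- 5. Latent Diffusion Model
  - A model that applies a diffusion model to the latent variables of an autoencoder.
  - An encoder $\mathcal{E}$ extracts an intermediate representation from the input image, the diffusion model is applied to that representation, and the reconstructed representation is passed to a decoder $\mathcal{D}$ to output an image.
  - $\mathcal{E}$ and $\mathcal{D}$ are pre-trained and kept fixed while training the U-Net $\epsilon_\theta$ and the conditioning encoder $\tau_\theta$.
  - $x \in \mathbb{R}^{H \times W \times 3}$, $z \in \mathbb{R}^{h \times w \times c}$
  - Source: Robin Rombach et al. “High-Resolution Image Synthesis with Latent Diffusion Models”, CVPR, 2022.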

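- 6. Latent Diffusion Model
  - In the LDM, noise is added to the intermediate representation and the U-Net $\epsilon_\theta$ denoises it. During denoising, conditioning such as class labels is converted into an intermediate representation by the encoder $\tau_\theta$ and used in the cross-attention layers of $\epsilon_\theta$.
  - $\epsilon_\theta$ and $\tau_\theta$ are optimized jointly with the following loss: $$L_{LDM} = \mathbb{E}_{\mathcal{E}(x),\, y,\, \epsilon \sim \mathcal{N}(0, 1),\, t}\left[ \lVert \epsilon - \epsilon_\theta(z_t, t, \tau_\theta(y)) \rVert_2^2 \right]$$
  - Conditioning features: $\tau_\theta(y) \in \mathbb{R}^{M \times d_\tau}$
  - Intermediate U-Net features: $\varphi_i(z_t) \in \mathbb{R}^{N \times d_\epsilon^i}$
  - Cross-attention: $$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{T}}{\sqrt{d}}\right) V$$ with $Q = W_Q^{(i)} \varphi_i(z_t)$, $K = W_K^{(i)} \tau_\theta(y)$, $V = W_V^{(i)} \tau_\theta(y)$, where $W_Q^{(i)} \in \mathbb{R}^{d \times d_\epsilon^i}$ and $W_K^{(i)}, W_V^{(i)} \in \mathbb{R}^{d \times d_\tau}$ are learnable projection matrices.
  - Source: Robin Rombach et al. “High-Resolution Image Synthesis with Latent Diffusion Models”, CVPR, 2022.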
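
The cross-attention used for conditioning can be sketched in NumPy as below. This is an illustrative single-head version under assumed shapes: `phi` stands for the U-Net features (N rows), `tau` for the conditioning features from the text encoder (M rows), and the projection matrices are random placeholders rather than trained weights. All names and sizes are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(phi, tau, W_q, W_k, W_v):
    """phi: (N, d_eps) U-Net features; tau: (M, d_tau) conditioning features."""
    Q = phi @ W_q.T  # queries come from the U-Net features, shape (N, d)
    K = tau @ W_k.T  # keys come from the conditioning, shape (M, d)
    V = tau @ W_v.T  # values come from the conditioning, shape (M, d)
    d = Q.shape[-1]
    weights = softmax(Q @ K.T / np.sqrt(d))  # (N, M), each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
N, M, d_eps, d_tau, d = 16, 5, 32, 24, 8  # hypothetical sizes
phi = rng.standard_normal((N, d_eps))
tau = rng.standard_normal((M, d_tau))
W_q = rng.standard_normal((d, d_eps))
W_k = rng.standard_normal((d, d_tau))
W_v = rng.standard_normal((d, d_tau))

out, weights = cross_attention(phi, tau, W_q, W_k, W_v)
print(out.shape, weights.shape)
```

Each spatial position of the U-Net features attends over the M conditioning tokens, which is how a single token embedding such as the one learned for S* can influence the whole image.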

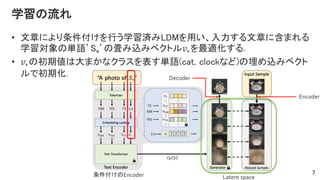

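- 7. Training procedure
  - Using a pre-trained, text-conditioned LDM, the embedding vector $v_*$ of the target word 'S*' contained in the input sentence is optimized.
  - $v_*$ is initialized with the embedding vector of a word that describes the coarse class (cat, clock, etc.).
  - Figure labels: conditioning encoder, latent space, encoder, decoder.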
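
The key idea of this slide, updating only the embedding while every model weight stays frozen, can be illustrated with a toy example. This is not the LDM: the frozen "denoiser" is replaced by a fixed random linear map `W`, the concept latents are synthetic, and `v_init` merely stands in for the coarse-class word embedding. All names and hyperparameters are assumptions made for the sketch.

```python
import numpy as np

rng = np.random.default_rng(0)
dim_z, dim_v = 16, 4

# Frozen toy "model": predicts the clean latent from the embedding as W @ v.
# W is never updated, mirroring the frozen LDM weights.
W = rng.standard_normal((dim_z, dim_v))

# Hypothetical concept: latents of 5 concept images cluster around W @ v_true.
v_true = rng.standard_normal(dim_v)
concept_latents = W @ v_true + 0.01 * rng.standard_normal((5, dim_z))

def loss_and_grad(v, z0, alpha_bar, eps):
    """Noise-prediction loss for one sample and its gradient w.r.t. v only."""
    a, s = np.sqrt(alpha_bar), np.sqrt(1.0 - alpha_bar)
    z_t = a * z0 + s * eps              # forward noising of the latent
    eps_pred = (z_t - a * (W @ v)) / s  # toy denoiser conditioned on v
    residual = eps_pred - eps
    loss = float(np.mean(residual ** 2))
    grad = (2.0 / residual.size) * (-(a / s)) * (W.T @ residual)
    return loss, grad

# Stand-in for initializing from a coarse-class word embedding.
v_init = v_true + 0.5 * rng.standard_normal(dim_v)
v = v_init.copy()
lr = 0.01
for step in range(2000):
    z0 = concept_latents[rng.integers(len(concept_latents))]
    alpha_bar = rng.uniform(0.1, 0.9)  # random noise level per step
    eps = rng.standard_normal(dim_z)
    _, grad = loss_and_grad(v, z0, alpha_bar, eps)
    v -= lr * grad                     # only the embedding is updated

print(np.linalg.norm(v_init - v_true), np.linalg.norm(v - v_true))
```

The second printed distance should be smaller than the first, showing that gradient descent on the embedding alone moves it toward the concept.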

- 8. Experimental setup
  - An LDM (1.4B params) pre-trained on LAION-400M is used. Its text encoder is BERT.
  - Training ran for 5,000 optimization steps on 2x V100.
  - The sentences fed in during training are randomly sampled from the CLIP ImageNet templates [1].
  - [1] https://github.com/openai/CLIP/blob/main/notebooks/Prompt_Engineering_for_ImageNet.ipynb
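
Sampling a training sentence from prompt templates can be sketched as below. The five templates are illustrative examples in the style of the CLIP ImageNet prompt list; the exact subset used in the experiments is not reproduced here, and `sample_prompt` is a hypothetical helper.

```python
import random

# A few illustrative templates; the full list used for training is not shown.
TEMPLATES = [
    "a photo of a {}",
    "a rendering of a {}",
    "a cropped photo of the {}",
    "a close-up photo of a {}",
    "a bright photo of the {}",
]

def sample_prompt(placeholder="S*", rng=random):
    """Pick one template uniformly at random and fill in the placeholder token."""
    return rng.choice(TEMPLATES).format(placeholder)

rng = random.Random(0)
for _ in range(3):
    print(sample_prompt(rng=rng))
```

Varying the sentence around the placeholder encourages the learned embedding to capture the concept itself rather than one fixed phrasing.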

- 12. Experimental results: style transfer
  - Trained with the input text “A painting in the style of S*”.

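- 16. Experimental results: comparison of embedding-learning methods
  - Extended latent space: the number of words whose embeddings are learned is extended to 2 or 3.
  - Progressive extensions: an embedding is added every 2,000 steps.
  - Regularization: regularization toward the embedding of a word that describes the coarse class.
  - Per-image tokens: defines “S*”, which represents the whole training set, and $\{S_i\}_{i=1}^{n}$, which represent the features of individual images, and optimizes with text of the form “A photo of S* with S_i”.
  - Human captions: “S*” is replaced with a human-written caption.
  - Reference: uses images from the training set, and images generated from text that does not use “S*”.
  - Textual-Inversion: the model is evaluated with learning rates of 2e-2 and 1e-4.
  - Additional setups: bipartite inversion and pivotal inversion are added.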

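- 17. Experimental results: evaluation metrics for the embeddings
  - Image Similarity: reconstruction fidelity is computed as the average pairwise cosine similarity of CLIP features between 64 images generated from the text “A photo of S*” with the learned embedding and the dataset used to learn the embedding.
  - Text Similarity: texts of varying difficulty, such as background changes and style changes (e.g. “A photo of S* on the moon”), are used. For each text, 64 images are generated with 50 DDIM steps, the CLIP features of the generated images are averaged, and the cosine similarity to the CLIP feature of the text without “S*” (e.g. “A photo of on the moon”) is computed.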
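
The two metrics can be sketched as follows, assuming CLIP features have already been extracted. Random vectors stand in for real CLIP features, the 512-dimensional size is an assumption, and normalizing each image feature before averaging is one possible reading of the description; the function names are hypothetical.

```python
import numpy as np

def l2_normalize(x):
    """Scale vectors along the last axis to unit length."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def image_similarity(gen_feats, train_feats):
    """Mean pairwise cosine similarity between generated and training images."""
    g, t = l2_normalize(gen_feats), l2_normalize(train_feats)
    return float((g @ t.T).mean())

def text_similarity(gen_feats, text_feat):
    """Cosine similarity between the mean generated-image feature and a prompt feature."""
    mean_feat = l2_normalize(gen_feats).mean(axis=0)
    return float(l2_normalize(mean_feat) @ l2_normalize(text_feat))

rng = np.random.default_rng(0)
gen_feats = rng.standard_normal((64, 512))   # placeholder: 64 generated images
train_feats = rng.standard_normal((5, 512))  # placeholder: 5 concept images
text_feat = rng.standard_normal(512)         # placeholder: prompt without "S*"

print(image_similarity(gen_feats, train_feats))
print(text_similarity(gen_feats, text_feat))
```

With real features, higher image similarity indicates better reconstruction of the concept, and higher text similarity indicates that the generated images follow the prompt.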

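- 18. Experimental results: evaluation of the embeddings
  - For many of the methods, reconstruction fidelity is on par with randomly sampling images from the training set.
  - The single-word setting achieves the highest text similarity.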

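- 19. Experimental results: human evaluation
  - Two questionnaires were collected, 600 responses each, 1,200 in total.
    1. Participants ranked how similar a fifth, model-generated image is to four images from the training data.
    2. Participants ranked the similarity between a text describing the image context and the generated image.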

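- 21. Limitations and social impact
  - Limitations
    - Reconstruction fidelity is still low, and learning a single embedding takes about 2 hours.
  - Social impact
    - T2I models have been flagged as open to misuse, and personalization might seem to make fakes harder to tell apart from real images, but this model is not that powerful.
    - Many T2I models produce biased outputs; the experimental results suggest that this model may be able to mitigate this.
    - Users can train on an artist's images without permission and generate similar images, but the authors hope this will be offset in the future by benefits to artists, such as acquiring unique styles through T2I models and quickly creating initial drafts.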

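- 22. Impressions
  - Judging from the generated results, image features that are hard to put into words are reflected appropriately in the output, in line with the meaning of the input sentence. Being able to generate the intended image without understanding the nuances of the words the model has learned is highly significant.
  - The proposed method is simple: it searches for an embedding using an existing LDM. It should therefore be applicable to other pre-trained T2I models, not only LDMs.
  - The method learns an embedding that describes unseen images with a single word, so it could also be used to analyze the model-specific language reported for DALLE-2 [1], and applied to research on model interpretability and safety.
  - [1] Giannis Daras, Alexandros G. Dimakis. “Discovering the Hidden Vocabulary of DALLE-2”. arXiv preprint arXiv:2206.00169, 2022.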
